Hey there, fellow tech enthusiasts and curious minds! Let’s dive into the magical world of supervised learning in neural networks. It’s a realm where computers learn from examples, much as we humans do, except that they learn much faster and need a whole lot of data. Think of teaching your dog to fetch: you show it what to do and reward it when it gets things right. Supervised learning works in a similar spirit, showing a neural network lots of input-output pairs until it can make predictions on its own! So buckle up as we set out to discover how these intelligent systems are built and the wonders they can achieve.

Understanding the Basics

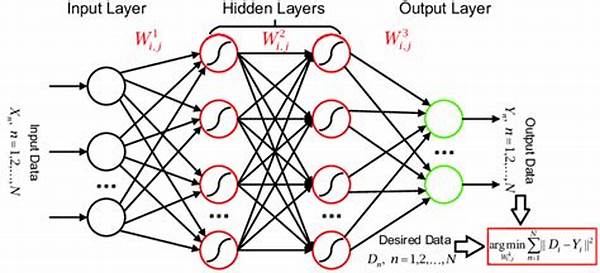

Supervised learning in neural networks works like a teacher-student relationship: the model learns from labeled data. Picture this: you have a massive dataset of inputs, each paired with its correct output (the label). During training, the network predicts an output for each input, measures how far that prediction is from the label using a loss function, and adjusts its internal parameters (its weights and biases) to shrink the error. One full pass over the training data is called an epoch, and training usually runs for many epochs. The main aim? Equip the model to predict outputs accurately for new, unseen inputs.
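To make that loop concrete, here is a minimal sketch in plain Python. It assumes the simplest possible "network": a single linear neuron with one weight `w` and one bias `b`. The toy data (points on the line y = 2x + 1), the mean squared error loss, the learning rate, and the epoch count are all illustrative choices, not a recipe from any particular library.

```python
def train(pairs, epochs=500, lr=0.05):
    """Fit y = w*x + b to labeled (x, y) pairs with full-batch gradient descent."""
    w, b = 0.0, 0.0                      # internal parameters, start at zero
    n = len(pairs)
    history = []                         # loss recorded once per epoch
    for epoch in range(epochs):          # one epoch = one full pass over the data
        grad_w = grad_b = loss = 0.0
        for x, y in pairs:
            error = (w * x + b) - y      # prediction minus label
            loss += error ** 2 / n       # mean squared error
            grad_w += 2 * error * x / n  # d(loss)/dw
            grad_b += 2 * error / n      # d(loss)/db
        w -= lr * grad_w                 # step against the gradient
        b -= lr * grad_b
        history.append(loss)
    return w, b, history


# Hypothetical labeled training data generated from y = 2x + 1.
pairs = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0, 4.0]]
w, b, history = train(pairs)
print(f"w={w:.3f}, b={b:.3f}, first loss={history[0]:.3f}, last loss={history[-1]:.2e}")

# Predict for an input the model never saw during training.
print(w * 10 + b)  # close to 2*10 + 1 = 21
```

Each pass of the outer loop is one epoch; as the loss shrinks, `w` and `b` settle near 2 and 1, which is what lets the model generalize to the unseen input 10.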

The beauty of supervised learning in neural networks is its versatility. You can use it for a wide variety of tasks: think image recognition, speech synthesis, and even attempts at forecasting stock market trends! These models adapt to many types of data thanks to their layers of neurons, each of which transforms its input through weighted connections. Training is all about refining those weights: backpropagation computes how much each weight contributed to the error (the gradient of the loss), and gradient descent then nudges every weight a small step in the direction that reduces that error. Wow, right?
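Backpropagation is the chain rule applied layer by layer. The sketch below, in plain Python, trains a tiny network (one input, four tanh hidden neurons, one linear output) to fit y = x^2 on a handful of points. The architecture, data, learning rate, and the choice of per-example (stochastic) gradient descent are assumptions made for illustration; in practice a framework would usually compute these gradients automatically.

```python
import math
import random


def train(epochs=2000, lr=0.05, hidden=4, seed=0):
    """Train a 1 -> hidden (tanh) -> 1 network; return (loss before, loss after)."""
    rng = random.Random(seed)
    w1 = [rng.uniform(-1, 1) for _ in range(hidden)]  # input -> hidden weights
    b1 = [0.0] * hidden                                # hidden biases
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]  # hidden -> output weights
    b2 = 0.0                                           # output bias
    # Hypothetical labeled pairs sampled from y = x^2 on [-1, 1].
    data = [(x / 4, (x / 4) ** 2) for x in range(-4, 5)]

    def forward(x):
        """Return hidden activations and the network's prediction."""
        h = [math.tanh(w1[j] * x + b1[j]) for j in range(hidden)]
        return h, sum(w2[j] * h[j] for j in range(hidden)) + b2

    def mse():
        return sum((forward(x)[1] - y) ** 2 for x, y in data) / len(data)

    initial = mse()
    for _ in range(epochs):
        for x, y in data:                    # one gradient step per example
            h, y_hat = forward(x)
            d_out = 2 * (y_hat - y)          # dLoss/dy_hat for squared error
            for j in range(hidden):
                # Chain rule: back through w2[j], then through tanh
                # (derivative 1 - tanh^2), using w2[j] before it changes.
                d_h = d_out * w2[j] * (1 - h[j] ** 2)
                w2[j] -= lr * d_out * h[j]   # gradient-descent updates
                w1[j] -= lr * d_h * x
                b1[j] -= lr * d_h
            b2 -= lr * d_out
    return initial, mse()


initial_loss, final_loss = train()
print(f"loss before training: {initial_loss:.4f}, after: {final_loss:.4f}")
```

The two phases are visible in the inner loop: `d_out` and `d_h` are the backpropagated gradients, and the `-=` lines are the gradient-descent updates.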

Yet supervised learning in neural networks isn’t without its challenges. Overfitting is a common pitfall: the model gets too cozy with the training data, memorizing its quirks and noise instead of the underlying pattern, so it performs well on examples it has already seen but poorly on new ones. That’s where techniques like regularization and dropout come into play, helping networks stay robust when facing new information. Despite the complexity, the impact of supervised learning on modern AI applications is nothing short of remarkable.
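Dropout and one common form of regularization, an L2 penalty on the weights, each fit in a few lines. The sketch below assumes the "inverted dropout" variant: during training each activation is zeroed with probability `p` and the survivors are scaled by 1/(1 - p), so nothing needs to change at inference time. The function names and default values are hypothetical.

```python
import random


def dropout(activations, p=0.5, training=True, rng=random):
    """Inverted dropout: randomly zero activations while training only."""
    if not training or p == 0.0:
        return list(activations)         # inference: pass values through
    keep = 1.0 - p
    # Each activation survives with probability `keep`; survivors are scaled
    # up so the expected value of each output matches the input.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def l2_regularized_loss(data_loss, weights, lam=1e-3):
    """Add an L2 penalty lam * sum(w^2) that discourages large weights."""
    return data_loss + lam * sum(w * w for w in weights)


h = [0.2, 1.5, -0.7, 3.0]
print(dropout(h, p=0.5, rng=random.Random(42)))  # some zeroed, others doubled
print(dropout(h, p=0.5, training=False))         # unchanged at inference
print(l2_regularized_loss(0.25, [0.5, -1.0, 2.0], lam=0.01))
```

Because the random mask changes on every training step, no single neuron can be relied on, which pushes the network toward more redundant, general features.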

Practical Applications

1. Image Recognition: Supervised learning in neural networks enables computers to identify objects in images, distinguishing between a cat and a dog with impressive accuracy.

2. Natural Language Processing: By training on labeled text data, such as sentences paired with their sentiment or with their translations, networks learn to classify, translate, and generate human language, powering chatbots and translation services.

3. Medical Diagnosis: Networks trained on labeled medical images can help doctors spot signs of diseases such as cancer, making these models a valuable diagnostic aid.

4. Autonomous Vehicles: Through supervised learning, a vehicle’s perception system learns to recognize traffic signs, pedestrians, and other vehicles, information the vehicle needs to navigate safely.

5. Financial Forecasting: Neural networks trained on historical data are used to forecast prices and market trends, giving investors one more input for their decisions, although markets are notoriously hard to predict.

Training Challenges

In the realm of supervised learning in neural networks, it’s not just about feeding in data and collecting results; training can be a daunting task. First off, it demands substantial computing power, typically GPUs, especially for complex models and large datasets. Secondly, choosing the right architecture and hyperparameters is crucial. The learning rate, a single number that sets how big each weight update is, can significantly affect the model’s performance. Too high, and the updates overshoot the optimal solution and training may even diverge; too low, and the training process becomes painfully slow.
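A toy example shows why the learning rate matters so much. Assuming the one-parameter loss f(w) = w^2, whose gradient is 2w, each gradient-descent step multiplies `w` by (1 - 2*lr). A small rate therefore crawls toward the minimum at 0, a moderate one gets close quickly, and any rate above 1 makes `w` flip sign and grow without bound. The specific rates below are illustrative.

```python
def descend(lr, steps=20, w=1.0):
    """Run plain gradient descent on f(w) = w^2 starting from w."""
    for _ in range(steps):
        w -= lr * 2 * w  # w <- w - lr * f'(w)
    return w


for lr in (0.01, 0.1, 1.1):
    print(f"lr={lr}: w after 20 steps = {descend(lr):.4f}")
```

With `lr=0.01`, `w` is still far from 0 after 20 steps; with `lr=0.1` it is close; with `lr=1.1` its magnitude has grown well past the starting value.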

Another challenge tied to supervised learning in neural networks is data quality. Clean, well-labeled data is essential for effective training, but acquiring it isn’t always straightforward. Real-world data may contain inconsistencies, missing values, or noise, all of which require pre-processing. Then there’s the infamous “class imbalance” issue, where some classes have far fewer samples than others, which can skew the model toward the majority classes. Techniques like data augmentation and synthetic data creation help mitigate these problems, but they add an extra layer of complexity to the training pipeline.
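One simple way to counter class imbalance is random oversampling: duplicate randomly chosen examples from the smaller classes until every class is as large as the biggest one. It is a plainer cousin of synthetic-data methods such as SMOTE, which create new minority examples by interpolating between existing ones instead of copying them. The sketch below assumes a small hypothetical fraud-detection dataset; oversampling is normally applied to the training split only, so duplicated examples don’t leak into evaluation.

```python
import random
from collections import Counter


def oversample(samples, labels, seed=0):
    """Duplicate random minority-class examples until all classes are equal."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())        # size of the largest class
    out_x, out_y = list(samples), list(labels)
    for cls, n in counts.items():
        pool = [x for x, y in zip(samples, labels) if y == cls]
        for _ in range(target - n):      # zero iterations for the majority
            out_x.append(rng.choice(pool))
            out_y.append(cls)
    return out_x, out_y


# Hypothetical imbalanced dataset: 8 "normal" examples vs 2 "fraud" examples.
X = [[float(i)] for i in range(10)]
y = ["normal"] * 8 + ["fraud"] * 2
X_bal, y_bal = oversample(X, y)
print(Counter(y), "->", Counter(y_bal))
```

Oversampling is cheap and keeps every original example, but repeated copies can encourage overfitting to those few minority samples, which is why synthetic or augmented examples are often preferred.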

Evolution of Techniques

The landscape of supervised learning in neural networks has evolved dramatically over the years. Early work was held back by limited hardware and small datasets, and today’s techniques are a far cry from the early perceptron models. The advent of deep learning and hardware acceleration, such as CUDA-enabled GPUs, opened up new possibilities. Modern networks can have tens or hundreds of layers (some experimental ones exceed a thousand), with each layer building on the features extracted by the one before it, so deeper layers capture increasingly complex features of the data.
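For contrast with today’s deep networks, here is a sketch of the classic perceptron learning rule in plain Python: a single neuron with a step activation that adjusts its weights only when it misclassifies an example. The logical AND function serves as a toy, linearly separable dataset; a single perceptron cannot learn problems that are not linearly separable, such as XOR, which is one reason multi-layer networks took over.

```python
def step(z):
    """Step activation: fire (1) only for a strictly positive input."""
    return 1 if z > 0 else 0


def train_perceptron(data, lr=1.0, epochs=20):
    """Perceptron learning rule: update weights only on mistakes."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in data:
            pred = step(w[0] * x1 + w[1] * x2 + b)
            err = target - pred          # -1, 0, or +1
            if err:
                w[0] += lr * err * x1
                w[1] += lr * err * x2
                b += lr * err
                errors += 1
        if errors == 0:                  # every example classified correctly
            break
    return w, b


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
w, b = train_perceptron(AND)
print(w, b, [step(w[0] * x1 + w[1] * x2 + b) for (x1, x2), _ in AND])
```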

Moreover, novel architectures have advanced the field considerably. Convolutional Neural Networks (CNNs), loosely inspired by the organization of the visual cortex, revolutionized image processing tasks. Meanwhile, Recurrent Neural Networks (RNNs) and their variants, such as LSTM and GRU, became the go-to choice for sequential data, be it text, music, or time series. But it’s not just about deeper or more complex models: optimization techniques like the Adam optimizer and learning rate schedules have substantially improved training stability and convergence speed.
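The Adam update itself is compact. The sketch below follows the standard Adam rule (exponential moving averages of the gradient and of the squared gradient, each bias-corrected) and pairs it with a simple step-decay schedule that halves the learning rate every 50 steps. The one-parameter quadratic loss, the schedule, and the step counts are illustrative assumptions; the `beta1`, `beta2`, and `eps` defaults are the commonly used values.

```python
import math


def adam_minimize(grad, w0, lr=0.1, steps=200, beta1=0.9, beta2=0.999,
                  eps=1e-8, decay_every=50, decay_factor=0.5):
    """Minimize a 1-D function with Adam plus a step-decay learning rate."""
    w = w0
    m = v = 0.0
    for t in range(1, steps + 1):
        # Step-decay schedule: multiply lr by decay_factor every decay_every steps.
        lr_t = lr * decay_factor ** ((t - 1) // decay_every)
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction for zero init
        v_hat = v / (1 - beta2 ** t)
        w -= lr_t * m_hat / (math.sqrt(v_hat) + eps)
    return w


# Toy loss f(w) = (w - 3)^2 with gradient 2(w - 3); the minimum is at w = 3.
w = adam_minimize(lambda w: 2 * (w - 3), w0=0.0)
print(f"w after Adam: {w:.4f}")
```

Dividing by `sqrt(v_hat)` gives each parameter its own effective step size, and the decaying schedule shrinks the steps late in training so the optimizer settles instead of bouncing around the minimum.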

The Role of Supervised Learning in Future AI

Supervised learning in neural networks is set to keep playing a pivotal role in the future of AI. As datasets grow and computational resources become more powerful and accessible, these models will become more effective and find more applications. Ongoing research focuses on improving efficiency, reducing dependence on large labeled datasets, and enhancing model interpretability. Combining supervised learning with semi-supervised and unsupervised approaches also shows promise for reducing the amount of labeled data needed while maintaining high accuracy.

In addition, ethical considerations and explainability are likely to become more prominent topics. As neural networks play increasingly crucial roles in sectors like healthcare, finance, and autonomous systems, understanding the decision-making processes of these models is critical. Efforts towards explainable AI (XAI) strive to unravel the “black box” nature of neural networks, making them more transparent and trustworthy. In this light, supervised learning in neural networks will not only grow in technical capability but also in societal and ethical responsibility.
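One simple, model-agnostic explainability technique is permutation importance: shuffle one input feature at a time and measure how much the model’s error grows. Features the model relies on cause a large jump; features it ignores cause none. The sketch below assumes a hypothetical, already-trained model (here a hand-written function that secretly uses only feature 0) and mean squared error as the metric.

```python
import random


def permutation_importance(model, X, y, n_repeats=5, seed=0):
    """Average increase in mean squared error when each feature is shuffled."""
    rng = random.Random(seed)

    def mse(rows):
        return sum((model(r) - t) ** 2 for r, t in zip(rows, y)) / len(y)

    baseline = mse(X)
    importances = []
    for j in range(len(X[0])):
        total = 0.0
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)          # break the link between feature j and y
            shuffled = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            total += mse(shuffled) - baseline
        importances.append(total / n_repeats)
    return importances


def model(row):
    """Hypothetical trained model: depends on feature 0 only."""
    return 2.0 * row[0]


data_rng = random.Random(1)
X = [[data_rng.uniform(-1, 1), data_rng.uniform(-1, 1)] for _ in range(50)]
y = [model(row) for row in X]
print(permutation_importance(model, X, y))  # feature 1 scores exactly 0.0
```

Permutation importance reveals what the model relies on, not whether that reliance is justified, so it complements rather than replaces domain review.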

Summary

To sum it all up, supervised learning in neural networks is a cornerstone of modern AI technology. It’s like giving neural networks a manual filled with the right answers to the kinds of questions they will face in the future. This manual, which comes in the form of labeled data, empowers networks to make accurate predictions, recognize objects, interpret human language, and much more with impressive proficiency.

The process of training these networks isn’t devoid of hurdles. From ensuring data quality to selecting optimal architectures and hyperparameters, every step demands meticulous attention. Despite these challenges, the progress in the field is evident, with evolving techniques constantly pushing the boundaries of what’s possible. Whether it’s spotting a cat video on social media or aiding in a critical medical diagnosis, the impact of supervised learning in neural networks is profound and far-reaching. As we look towards the horizon, there’s little doubt that these intelligent systems will continue to evolve, transcending their current limitations and ushering in a future brimming with potential.