In a world where artificial intelligence powers essential elements of our daily lives, from healthcare to personalized recommendations, ensuring fairness and reducing bias have taken center stage. As AI systems are deployed in vital sectors, there is growing concern that biases inherent in data and algorithms influence decisions, sometimes with severe consequences. AI bias is like an elephant in the room: everyone is aware of it, but few know how to tackle it effectively. Addressing AI bias isn’t just a tech issue; it’s about creating a fair and just society that embraces technology responsibly. Imagine an AI system in a hiring process that unfairly discriminates against a particular gender or ethnicity because of biased training data. This is not just an ethical issue; it can also have legal and reputational repercussions for the company. This article delves into strategies for reducing AI bias, offering actionable insights meant to provoke thought and inspire action.


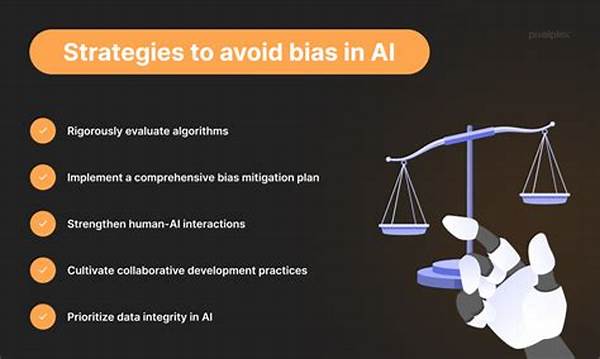

AI bias often stems from biases in the data used to train models, although modeling choices can amplify it. Reducing this bias requires multifaceted strategies ranging from better data curation to algorithmic innovations. One promising strategy is diversifying data sets to include a broader spectrum of demographics. When data covers various backgrounds, cultures, and contexts, AI systems are less likely to produce biased outcomes. Another strategy is implementing fairness-aware algorithms that measure possible bias in the data and adjust the decision-making process accordingly. Although these measures are technically challenging, they are crucial for minimizing bias.
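To make the idea of adjusting for bias in training data concrete, here is a minimal sketch of one well-known pre-processing technique, often called reweighing. It is only one of many possible approaches, not a recommendation from this article. Each training example receives a weight so that, after weighting, group membership and outcome label look statistically independent. The function name, group labels, and toy data are assumptions made for illustration.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Return one weight per example: P(group) * P(label) / P(group, label).

    Combinations that are under-represented relative to independence
    get weights above 1; over-represented ones get weights below 1.
    """
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))

    weights = []
    for g, y in zip(groups, labels):
        # Count we would expect for (g, y) if group and label were independent.
        expected = group_counts[g] * label_counts[y] / n
        weights.append(expected / joint_counts[(g, y)])
    return weights

# Hypothetical toy data: group "a" receives the positive label more often.
example_groups = ["a", "a", "a", "b", "b", "b"]
example_labels = [1, 1, 0, 1, 0, 0]
example_weights = reweighing_weights(example_groups, example_labels)
```

Many training libraries accept per-example weights, so weights like these could be passed to a model during fitting. Whether that is enough depends on the data and the task; reweighing addresses only the imbalance it can see in the recorded labels.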

Moreover, fostering a culture of transparency and accountability in AI development paves the way for ethical AI implementation. Companies should not only focus on developing robust algorithms but also engage in open dialogue with stakeholders to understand the implications of these technologies. Regular audits and impact assessments by third parties can provide an additional layer of scrutiny to help ensure that AI models remain fair. By integrating these strategies for reducing AI bias, we not only improve the technology but also stay committed to an inclusive future.

Exploring Advanced Strategies to Combat AI Bias

Understanding the intricacies of AI bias and the steps needed to mitigate it calls for cutting-edge strategies and a commitment to ethical AI development.

—

The conversation around artificial intelligence often revolves around its potential to revolutionize industries, but the shadow of AI bias lurks behind many success stories. At the core of these biases are the data sets, often reflecting existing societal prejudices. For instance, if an AI system is trained predominantly on data collected from one particular group, its outcomes are likely to skew in favor of that group. This isn’t just hypothetical; stories have surfaced where AI systems used in hiring, policing, and judicial evaluations made biased decisions that mimicked historical inequalities.

One might ask, how do we navigate this complex landscape to ensure AI is a force for good? The answer lies in a multi-pronged approach. One key strategy for reducing AI bias is employing diverse teams of developers and ethicists. By bringing varied perspectives into the AI development process, it becomes easier to spot potential biases before they are embedded into the algorithms. A diverse team can evaluate data sets and modeling decisions through different lenses, reducing the risk of bias.

Another suggestion often touted by experts is the need for ongoing education and training about bias in machine learning. This extends right from the developers to the end-users of AI systems. By educating stakeholders about potential biases and how they can affect decision-making, we arm people with the awareness they need to question and challenge biased outcomes effectively.

The Role of Regulatory Frameworks

A burgeoning area of interest is the formulation of regulatory frameworks that guide the development and deployment of AI systems. Governments and regulatory bodies are beginning to see the need to create guidelines that hold organizations accountable for the AI systems they bring into the market. These regulations could stipulate audits and provide a legal mechanism to address grievances related to biased AI systems.

Educating Stakeholders on AI Bias

Embedding knowledge about AI bias in the public discourse is paramount. Stakeholders should be aware of the implications of AI bias, equipping them with the foresight and confidence to engage with AI systems critically.

In line with these strategies, an increasing number of think tanks and research institutions are focusing on AI ethics. Their work is essential, not only in addressing current biases but also in forecasting potential future bias patterns. This preventive strategy places emphasis on the need to continuously monitor AI systems as they evolve, ensuring they remain aligned with societal values.

Achieving bias-free AI is a journey, not a destination. It demands sustained effort and innovation. However, by implementing robust strategies for reducing AI bias today, we can build a foundation for fairer AI systems tomorrow.

—

Key Takeaways on Strategies for Reducing AI Bias

—

As AI becomes increasingly integral to decision-making in various industries, the implications of bias in these systems cannot be overstated. The success of AI rests on its ability to provide objective insights and predictions. Yet, if biases creep in, the ripple effects can be far-reaching, affecting everything from individual opportunities to societal structures. When we address AI bias, we are ensuring not just the technological efficacy of these systems but also their moral and ethical integrity.


Data curation is one of the most direct ways to fight AI bias. Training AI on balanced, well-rounded data sets reduces the chance that historical prejudices flow into future decisions, although it cannot rule that out entirely. Additionally, organizations can use AI to police AI: machine learning algorithms can analyze other algorithms for signs of bias before they are deployed.
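A simple starting point for checking whether a data set is balanced is to compare how often each group appears in it against a reference population. The sketch below assumes each record carries a group label and that reference shares are available from some trusted source; the function name, labels, and numbers are hypothetical.

```python
from collections import Counter

def representation_gaps(groups, reference_shares):
    """Return, per group, (share in the data set) minus (reference share).

    Positive values mean the group is over-represented in the data,
    negative values mean it is under-represented.
    """
    n = len(groups)
    counts = Counter(groups)
    return {
        group: counts.get(group, 0) / n - share
        for group, share in reference_shares.items()
    }

# Hypothetical data set: 8 records from group "a", 2 from group "b".
dataset_groups = ["a"] * 8 + ["b"] * 2
# Assumed reference population in which both groups are equally common.
gaps = representation_gaps(dataset_groups, {"a": 0.5, "b": 0.5})
```

Here group "b" shows up far less often than the reference suggests, which would be a cue to collect more data or to weight examples differently. A check like this only covers representation; it says nothing about whether the labels themselves are biased.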

Investigating the Impact of Biased AI

Headlines regularly report alarming instances of AI bias, such as predictive policing systems that disproportionately affect minority communities. These are not just news stories; they affect real people’s lives and have societal implications. Because such biases are often unconscious and systemic, they are also hard to detect.

Implementing Bias Detection Mechanisms

Techniques such as bias detection and mitigation should be ingrained in the AI development lifecycle. Teams should prioritize such mechanisms to ensure that models are vetted for bias extensively before hitting the real world.
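As an illustration of what a basic bias detection check might look like, the sketch below compares how often a model gives a positive decision to each group. It computes two commonly used summary numbers: the difference between the highest and lowest selection rates (often called the demographic parity difference) and their ratio (often called the disparate impact ratio). The function names and toy predictions are assumptions for illustration, and other fairness metrics may suit a given application better.

```python
def selection_rates(groups, predictions):
    """Fraction of positive (1) predictions per group."""
    totals, positives = {}, {}
    for group, pred in zip(groups, predictions):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if pred == 1 else 0)
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_difference(rates):
    """Gap between the highest and lowest selection rate (0 means parity)."""
    return max(rates.values()) - min(rates.values())

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical model outputs for two groups of four applicants each.
audit_groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
audit_predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(audit_groups, audit_predictions)
parity_gap = demographic_parity_difference(rates)
impact_ratio = disparate_impact_ratio(rates)
```

A ratio below 0.8 is sometimes treated as a warning sign, following the so-called four-fifths rule of thumb, but any threshold should be chosen with the specific context and applicable law in mind. Running a check like this before every release is one way to make vetting part of the development lifecycle.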

As AI continues to transform how decisions are made, individuals, companies, and governments must take an active role in ensuring ethical and unbiased AI. Relying solely on technical fixes won’t suffice. It requires rethinking methodologies, fostering inclusive development cultures, and setting a new standard for accountability. Future steps must acknowledge the broad impact AI has and will continue to have on our lives. Through these conscious efforts and by using effective strategies for reducing AI bias, stakeholders can ensure AI systems are not only innovative but also equitable.

—

In a world increasingly reliant on AI for everything from healthcare decisions to criminal justice, reducing AI bias is no longer optional but essential. AI bias doesn’t only affect the technology industry; it has social and economic repercussions. By integrating these strategies for reducing AI bias, businesses and individuals can harness AI’s full potential in a fair and just manner. The road to minimizing AI bias involves proactive steps, thoughtful deliberation, and the unyielding pursuit of equality and fairness. This ongoing journey demands that every stakeholder, whether a software developer, a business executive, or an end-user, play an active part. By doing so, we not only enhance the reliability of AI systems but also ensure technology serves humanity in its truest sense.

—

AI bias is an issue that stands at the crossroads of technology and ethics, drawing attention from scholars, tech developers, and policy-makers. The strategies for reducing AI bias are diverse and require a robust framework that includes making educated design choices, broadening participation in AI development, and ensuring transparent decision pathways. These approaches open up the possibility of an equitable technological landscape that fosters trust and inclusivity.

A promising strategy involves the design and deployment of fairness-aware algorithms. These algorithms measure disparities in outcomes across groups and adjust for them, for example by adding fairness constraints during training or by reweighting examples, so that results align more closely with human values. Moreover, fostering inclusive environments where diverse teams work on AI systems brings invaluable insights and mitigates the risk that one-dimensional perspectives lead to biased solutions.
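One family of fairness-aware methods adjusts a model’s decisions after training rather than changing the model itself. The sketch below shows a deliberately simple version: it picks a separate score threshold for each group so that roughly the same fraction of each group receives a positive decision. The function names, scores, and target rate are hypothetical, and whether group-specific thresholds are appropriate or lawful depends heavily on the application.

```python
def group_thresholds(scores, groups, target_rate):
    """Pick a per-group score threshold that selects about target_rate of each group.

    Assumes 0 < target_rate <= 1. Ties in scores can push the actual
    selection rate slightly above the target.
    """
    scores_by_group = {}
    for score, group in zip(scores, groups):
        scores_by_group.setdefault(group, []).append(score)

    thresholds = {}
    for group, values in scores_by_group.items():
        values.sort(reverse=True)
        # Number of examples to select in this group (at least one).
        k = max(1, round(target_rate * len(values)))
        thresholds[group] = values[k - 1]
    return thresholds

def apply_thresholds(scores, groups, thresholds):
    """Return 1 where the score meets the threshold of its own group, else 0."""
    return [1 if s >= thresholds[g] else 0 for s, g in zip(scores, groups)]

# Hypothetical model scores: group "b" tends to receive lower scores.
model_scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.5, 0.2, 0.1]
score_groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

thresholds = group_thresholds(model_scores, score_groups, target_rate=0.5)
decisions = apply_thresholds(model_scores, score_groups, thresholds)
```

With a single global threshold of 0.7, only group "a" would receive positive decisions in this toy data; the per-group thresholds select two members of each group instead. This trades some score-based ranking for equal selection rates, which is a design choice rather than a free improvement.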

The Importance of Transparency and Accountability

Arguably the most critical component in this arsenal is transparency and accountability. Companies should foster environments where stakeholders can easily access and understand AI decision-making processes. This transparency not only builds trust but also invites collaborative efforts in bias mitigation.

Encouraging Interdisciplinary Dialogues

A call to action extends to interdisciplinary dialogue, bringing together minds from different sectors to discuss, understand, and act on AI bias issues proactively. This collaboration ensures the creation of balanced AI systems.

A leap forward can be made by strengthening the regulatory frameworks surrounding AI deployment. Such regulations can mandate regular audits of AI systems to check that they adhere to predefined ethical standards, thereby embedding a culture of accountability. Additionally, integrating public input into AI development not only enhances transparency but also helps confirm that these technologies serve the people they affect. This multifaceted approach embodies the essence of strategies for reducing AI bias: working toward a balanced, fair, and inclusive technological future.