In the ever-evolving world of natural language processing (NLP), correctly assigning part-of-speech (POS) tags to the words in a sentence is essential. This process, known as POS tagging, is foundational for more advanced tasks such as syntactic parsing, machine translation, and sentiment analysis. Yet despite technical advances, tagging errors remain a common hurdle. They stem from several sources: lexical ambiguity ("book" is a noun in "read the book" but a verb in "book a flight"), subtle linguistic nuances, and missing context. As a result, knowing how to reduce POS tagging errors has become a vital skill for anyone serious about NLP.

Imagine this scenario: a company investing heavily in NLP for a sentiment analysis project gets skewed results because its POS tags are inaccurate. The frustration and confusion can be overwhelming. This is why understanding and applying effective POS tagging error reduction techniques matters. These techniques save time and resources, and they improve the accuracy and reliability of your NLP projects. Think of a finely tuned engine in a sports car: the more smoothly each part works, the better the whole machine performs.

As we explore POS tagging error reduction techniques, it’s worth remembering that every error is an opportunity to learn and improve. The available strategies, from fine-tuning algorithms to adopting hybrid approaches and leveraging annotated corpora, are both diverse and promising. Consider the story of an up-and-coming tech startup that turned its fortunes around by investing in error reduction. By addressing the root causes of its POS tagging errors, it delivered a product whose newfound reliability and precision sent its popularity soaring.

Exploring Advanced Strategies

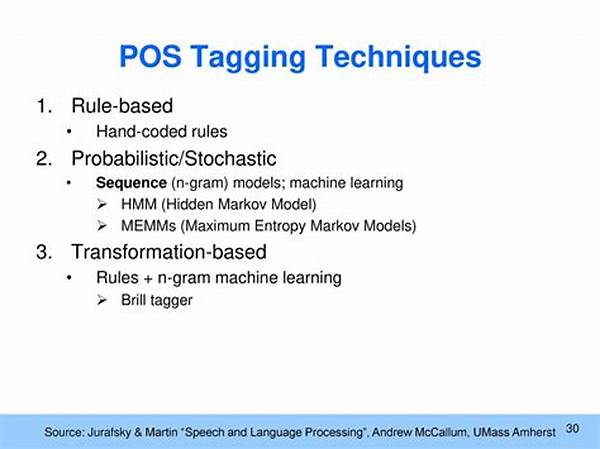

Incorporating more sophisticated statistical models is one of the key strategies for reducing POS tagging errors. Machine learning and deep learning models trained on large annotated datasets have proven highly effective here, because they learn from context which tag a word most likely takes. Hybrid models that combine hand-written rules with statistical methods can improve accuracy further: the statistical component captures the patterns seen in training data, while rules handle cases the data covers poorly, such as rare or previously unseen words. Finally, building comprehensive annotated training corpora exposes tagging models to diverse linguistic scenarios, which makes them more robust.
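As a minimal sketch of the hybrid idea, the code below pairs a statistical component (each word's most frequent tag, learned from an annotated corpus) with a rule-based fallback (suffix heuristics for words the corpus never saw). The toy corpus, the Universal POS-style tag names, the suffix rules, and the function names `train_lexicon`, `rule_tag`, and `hybrid_tag` are all hypothetical and chosen for illustration only.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus of (word, tag) pairs using Universal POS-style labels.
TRAIN = [
    [("The", "DET"), ("dog", "NOUN"), ("runs", "VERB")],
    [("A", "DET"), ("cat", "NOUN"), ("runs", "VERB"), ("fast", "ADV")],
    [("The", "DET"), ("runs", "NOUN"), ("ended", "VERB")],
]

# Rule-based component: ordered suffix heuristics (illustrative, not exhaustive).
SUFFIX_RULES = [
    ("ing", "VERB"),
    ("ed", "VERB"),
    ("ly", "ADV"),
    ("ous", "ADJ"),
    ("s", "NOUN"),
]


def train_lexicon(corpus):
    """Statistical component: map each word to its most frequent tag in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        for word, tag in sentence:
            counts[word.lower()][tag] += 1
    return {word: tag_counts.most_common(1)[0][0] for word, tag_counts in counts.items()}


def rule_tag(word):
    """Guess a tag for an unseen word from its surface form."""
    if word.isdigit():
        return "NUM"
    lower = word.lower()
    for suffix, tag in SUFFIX_RULES:
        if lower.endswith(suffix):
            return tag
    return "NOUN"  # a common default, since unknown words are often nouns


def hybrid_tag(tokens, lexicon):
    """Use the learned lexicon when the word is known, else fall back to the rules."""
    tagged = []
    for token in tokens:
        tag = lexicon.get(token.lower())
        tagged.append((token, tag if tag is not None else rule_tag(token)))
    return tagged


lexicon = train_lexicon(TRAIN)
print(hybrid_tag(["The", "dog", "quickly", "jumped"], lexicon))
# [('The', 'DET'), ('dog', 'NOUN'), ('quickly', 'ADV'), ('jumped', 'VERB')]
```

Because "runs" appears twice as a VERB and once as a NOUN in the toy corpus, this lexicon tags it VERB everywhere. That blind spot is exactly what context-aware statistical models are meant to fix.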

Equipped with these strategies, tech companies can significantly boost the reliability of their language processing tools. Imagine a world where language barriers shrink thanks to more accurate multilingual translation apps and sentiment analysis widgets, allowing businesses to thrive in a global market. Staying current with POS tagging error reduction techniques brings that world closer.

Keys to Implementing Error Reduction Techniques Effectively

Understanding POS tagging error reduction techniques is one thing; implementing them effectively is another. Start by identifying the errors that matter most for your project, for example by measuring which tags your system confuses most often on a held-out, hand-annotated test set. This tells you which methods to apply first. Regularly benchmarking and reviewing your tagging system confirms that the chosen techniques remain effective as your data changes. A culture of continuous improvement encourages creative thinking and adaptation, both crucial for staying competitive in natural language processing.
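One way to benchmark a tagger and find its most pertinent errors is to compare its output against hand-annotated gold tags, reporting token accuracy and a tally of (gold tag, predicted tag) confusions. The sketch below assumes both inputs are lists of sentences of `(word, tag)` pairs; the `evaluate` function and the sample data are hypothetical.

```python
from collections import Counter


def evaluate(gold, predicted):
    """Return token accuracy and a Counter of (gold_tag, predicted_tag) errors."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must contain the same number of sentences")
    correct = total = 0
    confusions = Counter()
    for gold_sent, pred_sent in zip(gold, predicted):
        if len(gold_sent) != len(pred_sent):
            raise ValueError("sentence lengths differ")
        for (gold_word, gold_tag), (pred_word, pred_tag) in zip(gold_sent, pred_sent):
            if gold_word != pred_word:
                raise ValueError(f"token mismatch: {gold_word!r} vs {pred_word!r}")
            total += 1
            if gold_tag == pred_tag:
                correct += 1
            else:
                confusions[(gold_tag, pred_tag)] += 1
    accuracy = correct / total if total else 0.0
    return accuracy, confusions


# Hypothetical held-out data: hand-annotated gold tags vs. a tagger's output.
gold = [
    [("I", "PRON"), ("book", "VERB"), ("flights", "NOUN")],
    [("The", "DET"), ("book", "NOUN"), ("sold", "VERB")],
]
predicted = [
    [("I", "PRON"), ("book", "NOUN"), ("flights", "NOUN")],
    [("The", "DET"), ("book", "NOUN"), ("sold", "VERB")],
]

accuracy, confusions = evaluate(gold, predicted)
print(f"accuracy = {accuracy:.3f}")  # accuracy = 0.833
print(confusions.most_common(3))     # [(('VERB', 'NOUN'), 1)]
```

The most frequent confusion pairs suggest where to focus. Here a VERB read as a NOUN points toward a context-sensitive model, and rerunning the same evaluation after each change gives a consistent benchmark.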

By diving deep into these techniques, you can not only elevate your NLP game but also contribute to broader advances in language technology. After all, every error-reducing refinement brings us closer to a future where interactions between people and computers are seamless and intuitive.

Expanding Your Knowledge of POS Tagging

As you integrate POS tagging error reduction techniques into your workflow, there are many additional strategies, insights, and practical guidance to consider as you deepen your understanding and capability.

Specific Techniques for POS Tagging Error Reduction
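One specific technique for the ambiguity and missing-context errors described at the start is to score whole tag sequences instead of tagging each word in isolation. Below is a minimal sketch, assuming a toy annotated corpus and hypothetical function names: a bigram hidden Markov model (HMM) estimated by counting, with add-one smoothing, and decoded with the Viterbi algorithm. For brevity it omits end-of-sentence transitions.

```python
import math
from collections import Counter, defaultdict

# Hypothetical toy corpus; "book" appears both as a VERB and as a NOUN.
TRAIN = [
    [("I", "PRON"), ("book", "VERB"), ("flights", "NOUN")],
    [("they", "PRON"), ("read", "VERB"), ("the", "DET"), ("book", "NOUN")],
    [("the", "DET"), ("book", "NOUN"), ("sold", "VERB")],
]

START = "<S>"  # pseudo-tag marking the start of a sentence


def train_hmm(corpus):
    """Count tag-to-tag transitions and tag-to-word emissions."""
    transitions = defaultdict(Counter)  # previous tag -> Counter of next tags
    emissions = defaultdict(Counter)    # tag -> Counter of lowercased words
    for sentence in corpus:
        prev = START
        for word, tag in sentence:
            transitions[prev][tag] += 1
            emissions[tag][word.lower()] += 1
            prev = tag
    return transitions, emissions


def log_prob(counter, key, outcomes):
    """Add-one (Laplace) smoothed log probability, so unseen events are not impossible."""
    return math.log((counter[key] + 1) / (sum(counter.values()) + outcomes))


def viterbi(tokens, transitions, emissions):
    """Return the highest-scoring tag sequence under the bigram HMM."""
    if not tokens:
        return []
    tags = list(emissions)
    n_tags = len(tags)
    # Known vocabulary plus one slot reserved for unknown words.
    n_words = len({w for counts in emissions.values() for w in counts}) + 1
    # best[i][tag] = (log score of the best path ending in tag at i, previous tag)
    best = [{
        tag: (log_prob(transitions[START], tag, n_tags)
              + log_prob(emissions[tag], tokens[0].lower(), n_words), None)
        for tag in tags
    }]
    for i in range(1, len(tokens)):
        word = tokens[i].lower()
        column = {}
        for tag in tags:
            prev_tag, score = max(
                ((p, best[i - 1][p][0] + log_prob(transitions[p], tag, n_tags)) for p in tags),
                key=lambda pair: pair[1],
            )
            column[tag] = (score + log_prob(emissions[tag], word, n_words), prev_tag)
        best.append(column)
    # Backtrack from the best final tag.
    tag = max(tags, key=lambda t: best[-1][t][0])
    path = [tag]
    for i in range(len(tokens) - 1, 0, -1):
        tag = best[i][tag][1]
        path.append(tag)
    return list(zip(tokens, reversed(path)))


transitions, emissions = train_hmm(TRAIN)
print(viterbi(["they", "book", "flights"], transitions, emissions))
# [('they', 'PRON'), ('book', 'VERB'), ('flights', 'NOUN')]
print(viterbi(["I", "read", "the", "book"], transitions, emissions))
# [('I', 'PRON'), ('read', 'VERB'), ('the', 'DET'), ('book', 'NOUN')]
```

On this toy corpus the same word "book" is tagged VERB after a pronoun and NOUN after a determiner, because the transition counts favor those sequences. A real tagger would need a far larger corpus and better handling of unknown words, but the decoding idea is the same.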

Harnessing Techniques for Optimal Results

The goal of POS tagging error reduction is a more precise and intelligent NLP system, one that improves user experience, drives innovation, and facilitates global communication. In a fast-paced digital landscape, understanding and applying these techniques could be the differentiator your business needs to lead in cutting-edge technology. So why not start now? Dig deeper into these techniques, experiment with them, and transform your NLP capabilities to meet tomorrow’s challenges today.