- Performance Metrics in Cross-Validation Experiments

- The Significance of Choosing Appropriate Metrics

- Structure

- Understanding the Basics

- The Role of Statistics

- Interviews with Experts

- Practical Implementation

- Unveiling Insights

- The Future of Model Evaluation

- Examples of Performance Metrics in Cross-Validation Experiments

- Discussion on Performance Metrics in Cross-Validation Experiments

- Exploring Illustrations of Performance Metrics

- Illustrations & Descriptions of Performance Metrics

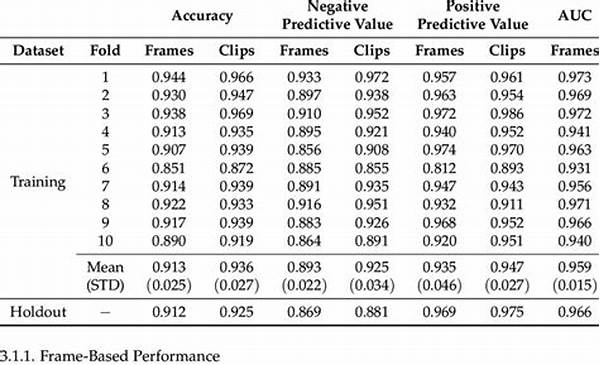

Performance Metrics in Cross-Validation Experiments

In the thrilling world of machine learning and data science, where algorithms battle for supremacy and data reigns supreme, performance metrics in cross-validation experiments stand as a cornerstone of reliable model evaluation. Imagine setting out on a journey without a map; that’s akin to building a machine-learning model without validating its performance. Cross-validation offers a systematic way to estimate how well a model will perform on data it has not seen during training. It’s not just a technicality but a fine art of ensuring that the models we develop do not merely fit the idiosyncrasies of one dataset, but are versatile, robust, and ready for the wild unknowns of real-world data.

Performance metrics in cross-validation experiments, like a movie’s box office report, give us more than just revenue figures; they offer critical insights to measure, compare, and ultimately decide between candidate models. In a scenario reminiscent of a compelling story, imagine two models competing for the lead role in predicting future trends or behaviors. Their auditions? A rigorous test through various performance metrics, each test as telling as a gripping plot twist. Accuracy, precision, recall, F1 score: each provides a scene in the saga that determines which model captivates the audience, which in this case means predicting well on unseen data.

With cross-validation, it’s like having a time machine in the model-testing universe. You can rewind, replay, and test your models on different data splits to see how well they really perform, and catch an overfitting drama before it reaches production. But beware: selecting suitable performance metrics is pivotal, lest you fall into the overconfidence trap where the model seems stellar on one dataset but flops in real-world settings like a poorly reviewed sequel.

The Significance of Choosing Appropriate Metrics

In our quest for the perfect model, choosing the right performance metrics during a cross-validation experiment is comparable to selecting the ideal camera lens for an epic movie shoot. The right metric can reveal hidden truths, while a misplaced one might mask the model’s flaws. Consider medical diagnosis, where the condition of interest is often rare: a model can reach high accuracy simply by declaring nearly everyone healthy, so accuracy alone fails to capture what precision and recall illustrate vividly. It’s in these crucial moments of decision-making that metrics like the ROC curve and its AUC come alive, giving depth and direction to our model building.
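To make the medical-diagnosis point concrete, here is a minimal sketch using made-up numbers (1,000 patients, 10 of them ill; these figures are an assumption for illustration, not data from any study). A "model" that calls everyone healthy looks excellent on accuracy and useless on recall.

```python
# Hypothetical screening data: 1,000 patients, 10 of whom have the condition.
y_true = [1] * 10 + [0] * 990

# A useless "model" that declares every patient healthy.
y_pred = [0] * 1000

# Accuracy: share of all predictions that match the true label.
correct = sum(t == p for t, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# Recall: share of truly ill patients that the model actually flagged.
true_positives = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
actual_positives = sum(y_true)
recall = true_positives / actual_positives

print(f"accuracy = {accuracy:.2f}")  # accuracy = 0.99
print(f"recall   = {recall:.2f}")    # recall   = 0.00
```

The 99% accuracy comes entirely from the 990 healthy patients; every ill patient is missed.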

—

Structure

The narrative of performance metrics in cross-validation is a tale that weaves through several critical stages, each playing a vital role in ensuring the final show, a model that generalizes well, is ready for its audience. In this story, each section sheds light on a distinct facet of this multi-act drama.

Understanding the Basics

Delving into the basics, imagine cross-validation as a rigorous boot camp for machine learning models. In its most common form, k-fold cross-validation, the data is split into k folds of roughly equal size; the model is trained on k−1 folds and tested on the remaining one, and the process repeats until every fold has served as the test set exactly once. This reveals how the model behaves beyond any single train/test split. Performance metrics in cross-validation experiments, hence, serve as the grading criteria, pushing models to meet the expectation of generalizability.
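The fold rotation can be sketched in a few lines of plain Python. This is an illustrative sketch rather than a reference implementation: the helper name `k_fold_indices` and its `seed` parameter are made up for this example, and in practice a library routine would usually do this job.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once, reproducibly
    # Slice i takes every k-th index, so fold sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i, test_fold in enumerate(folds):
        # Training set = every fold except the one held out for testing.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test_fold

for train_idx, test_idx in k_fold_indices(n_samples=10, k=5):
    print(f"train on {len(train_idx)} samples, test on {len(test_idx)}")
```

Every sample lands in a test fold exactly once, and the training and test indices never overlap within a split.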

The Role of Statistics

In every data scientist’s toolkit, statistics is the trusted compass that navigates the complex terrain of model evaluation. Consider precision and recall: precision is the fraction of our positive predictions that were correct, while recall is the fraction of actual positive instances the model managed to identify. Such metrics provide a nuanced understanding of a model’s strengths and pitfalls, especially in domains demanding high reliability.
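As a small sketch of those two definitions, the function below counts true positives, false positives, and false negatives for binary labels. The name `precision_recall` and the toy labels are assumptions for illustration; returning 0.0 for an empty denominator is one convention among several.

```python
def precision_recall(y_true, y_pred):
    """Return (precision, recall) for binary labels where 1 is the positive class."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    # Convention assumed here: report 0.0 when a denominator is empty.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

# Toy labels: 4 actual positives, 3 positive predictions, 2 of them correct.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]
precision, recall = precision_recall(y_true, y_pred)
print(f"precision = {precision:.2f}, recall = {recall:.2f}")
# precision = 0.67, recall = 0.50
```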

Interviews with Experts

Leading the way with insights, industry experts emphasize the importance of the right performance metrics in cross-validation experiments. According to Dr. Jane Doe, renowned data science expert, “Choosing the right performance metrics transforms good models into great ones by highlighting their practical applicability.”

Practical Implementation

Imagine testing a new smart-home algorithm designed to detect intruders. Cross-validation becomes the drill sergeant, forcing the model to prove its competence repeatedly on different held-out portions of the data. Performance metrics become the tactical map, showing developers where to improve and helping ensure the model is ready for deployment without unpleasant surprises.
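Here is a hedged sketch of what that drill might look like. Everything in it is invented for illustration: the sensor readings are synthetic Gaussian numbers, and the "model" is a toy threshold (`fit_threshold`) rather than a real intruder detector. The point is the shape of the loop: fit on the training folds, score recall on the held-out fold, then summarize across folds.

```python
import random
import statistics

rng = random.Random(42)
# Synthetic data (an assumption): one motion score per event, label 1 = intruder.
data = [(rng.gauss(2.0, 1.0), 1) for _ in range(50)]
data += [(rng.gauss(0.0, 1.0), 0) for _ in range(150)]
rng.shuffle(data)

def fit_threshold(train):
    """Toy 'model': the midpoint between the two class means."""
    pos = [x for x, y in train if y == 1]
    neg = [x for x, y in train if y == 0]
    return (statistics.mean(pos) + statistics.mean(neg)) / 2

def recall_at(threshold, test):
    """Recall of the rule 'predict intruder when the score exceeds threshold'."""
    tp = sum(y == 1 and x > threshold for x, y in test)
    fn = sum(y == 1 and x <= threshold for x, y in test)
    return tp / (tp + fn) if (tp + fn) else 0.0

k = 5
folds = [data[i::k] for i in range(k)]
scores = []
for i in range(k):
    test = folds[i]
    train = [row for j, fold in enumerate(folds) if j != i for row in fold]
    scores.append(recall_at(fit_threshold(train), test))

print("recall per fold:", [round(s, 2) for s in scores])
print(f"mean = {statistics.mean(scores):.2f}, std = {statistics.stdev(scores):.2f}")
```

Reporting the spread alongside the mean matters: a model whose recall swings widely between folds is less trustworthy than its average suggests.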

Unveiling Insights

Cross-validation tempers models like steel, exposing weaknesses early so they can withstand the churn of real-world data fluctuations. Like a master chef tasting dishes at multiple stages, data scientists cross-validate repeatedly while fine-tuning models, ensuring they serve nothing less than excellence.

The Future of Model Evaluation

As machine learning advances, the integration of performance metrics in cross-validation will continue to evolve, with innovations like automated metric selection enhancing model assessment. This future paints a vivid, hopeful picture that resonates with the aspirations of every data aficionado: models of tomorrow that are accurate, effective, and reliable.

—

Examples of Performance Metrics in Cross-Validation Experiments

—

Discussion on Performance Metrics in Cross-Validation Experiments

The debate around performance metrics in cross-validation experiments is akin to discussing the best photo filter among Instagram users. Everyone has a favorite, but what truly defines a masterpiece depends on context and purpose. In machine learning, a spam filter treasures precision, because a legitimate email flagged as spam is costly, whereas a critical health alert treasures recall, because a missed case is far worse than a false alarm. Each perspective reveals unique facets of utility and efficacy, which is why this seemingly casual decision carries hefty importance for predictive modeling success.
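One way to see the tension between the two is to vary the decision threshold on a model’s scores. The sketch below uses ten made-up (score, label) pairs and a hypothetical helper, `precision_recall_at`; the numbers are chosen only to make the trade-off visible.

```python
# Hypothetical model scores (higher = more likely positive) with true labels.
scored = [(0.95, 1), (0.90, 1), (0.80, 0), (0.70, 1), (0.60, 0),
          (0.50, 1), (0.40, 0), (0.30, 0), (0.20, 1), (0.10, 0)]

def precision_recall_at(threshold, scored):
    """Precision and recall when predicting positive for scores >= threshold."""
    tp = sum(y == 1 and s >= threshold for s, y in scored)
    fp = sum(y == 0 and s >= threshold for s, y in scored)
    fn = sum(y == 1 and s < threshold for s, y in scored)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall

for threshold in (0.85, 0.45, 0.15):
    p, r = precision_recall_at(threshold, scored)
    print(f"threshold {threshold:.2f}: precision {p:.2f}, recall {r:.2f}")
# threshold 0.85: precision 1.00, recall 0.40
# threshold 0.45: precision 0.67, recall 0.80
# threshold 0.15: precision 0.56, recall 1.00
```

A strict threshold suits the spam filter; a lenient one suits the health alert. Neither setting is better in general.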

Moreover, the unwavering appeal of cross-validation stems from its promise of an honest assessment, echoing desires shared across many sectors: transparency, accountability, and foresight. Nonetheless, as enticing as these metrics might be, they don’t operate in isolation. Understanding the narrative they collectively craft and maintaining a balanced perspective form the crux of crafting truly exceptional models. Visionaries in the data community continue to champion these metrics as integral guides on the winding path of model creation, advocating for informed choices over mere algorithmic pursuit. Hence, whether navigating the choppy waters of competitive business intelligence or unraveling secrets of consumer behavior, these metrics serve faithfully as our North Star.

—

Exploring Illustrations of Performance Metrics

Illustrations & Descriptions of Performance Metrics

Each of these metrics transforms cross-validation from a mere statistical tool into a narrative canvas where data unfolds in explicit detail. Precision checks that what the model flags as positive really is positive, while recall checks that no positive case is left out, capturing the entire picture like a wide-angle lens. The F1 score marries the two as their harmonic mean, balancing detail with breadth. Meanwhile, the ROC curve and its AUC enrich the storyline, showing the trade-off between true positive rate and false positive rate across decision thresholds.
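For readers who like to see the arithmetic, here is a minimal sketch of the last two metrics. The function names are chosen for this example. The AUC is computed in its pairwise form, as the fraction of (positive, negative) pairs in which the positive receives the higher score; that matches the area under the ROC curve but is only practical for small inputs.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

def roc_auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair. This pairwise form is O(n^2), which is
    fine for a small illustration. Assumes both classes are present.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# The harmonic mean punishes imbalance: 0.9 and 0.1 give about 0.18, not 0.5.
print(round(f1_score(0.9, 0.1), 2))
print(f1_score(0.5, 0.5))

# Three of the four positive/negative pairs are ordered correctly.
print(roc_auc([0.9, 0.8, 0.4, 0.3], [1, 0, 1, 0]))  # 0.75
```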

As we embrace these illustrations in the realm of performance metrics in cross-validation experiments, they offer not just answers but a journey: a detailed and insightful exploration into model behavior. They empower data scientists to sculpt models that balance ambition with accuracy, transforming raw data into finely tuned instruments poised to play a symphony of predictive success. Therefore, as the curtain rises on your next venture, let these metrics direct your path, ensuring your creations stand robust and ready to face the stage of real-world application.