Neural networks offer vast potential across diverse sectors, from healthcare and automotive to finance and entertainment. The heart of a neural network's power lies, quite literally, in its weights. Optimizing these weights can dramatically improve a model's performance, ensuring that it not only captures the intricacies of the data but also generalizes well to novel inputs. This is where the magic begins: a neural network turns from a simple collection of nodes and connections into a sophisticated predictive model. How weights are initialized, adjusted, and refined during training can make the difference between a model that stumbles and one that succeeds. Optimizing neural network weights, therefore, is not just a technical step; it is what moves a model toward excellence.

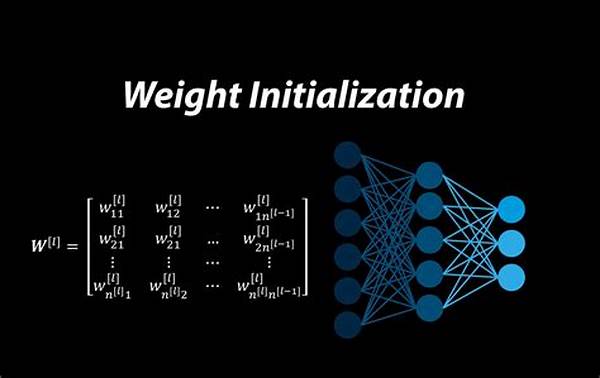

Managing and fine-tuning these weights stands at the nexus of art and science. It's akin to a sculptor chiseling away at a block of marble, revealing the masterpiece within. This optimization journey is often fraught with challenges but equally filled with opportunities for innovation and creativity. Each neural network model has its own characteristics and demands a tailored approach to weight adjustment. From setting initial weights with techniques like Xavier or He initialization to updating them with optimization algorithms such as Stochastic Gradient Descent or Adam, the path to optimizing neural network weights is both complex and rewarding.
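To make the two initialization schemes named above more concrete, here is a minimal sketch in plain Python. It assumes the commonly cited formulas (Xavier uniform with limit sqrt(6 / (fan_in + fan_out)), He normal with standard deviation sqrt(2 / fan_in)); the function names and layer sizes are illustrative choices, not taken from any particular library.

```python
import math
import random

def xavier_uniform(fan_in, fan_out, rng):
    """Xavier (Glorot) uniform: draw from U(-limit, limit)."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_out)]
            for _ in range(fan_in)]

def he_normal(fan_in, fan_out, rng):
    """He normal: draw from N(0, std^2) with std = sqrt(2 / fan_in)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_out)]
            for _ in range(fan_in)]

rng = random.Random(0)                   # fixed seed for repeatability
w_xavier = xavier_uniform(256, 128, rng)  # hypothetical 256 -> 128 layer
w_he = he_normal(256, 128, rng)
```

Xavier initialization is usually paired with tanh or sigmoid activations and He initialization with ReLU, though the best choice still depends on the architecture.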

Historically, the optimization of neural network weights has seen substantial advancements. Early approaches were rudimentary and often produced models that overfitted and generalized poorly. With the advent of new techniques and algorithms, it is now possible to achieve large improvements in model accuracy and efficiency. In a fast-evolving landscape, the way forward involves continuous experimentation, leveraging cutting-edge research, and adapting to new insights. For businesses and researchers aiming to excel with neural networks, mastering weight optimization is not just an option but a necessity.

Today, the market is flooded with tools and platforms offering innovative solutions for optimizing neural network weights. From TensorFlow’s versatile offerings to PyTorch’s dynamic computation graph, developers have a plethora of choices. These tools streamline the process by offering pre-built functions and libraries, ensuring that even those new to neural network technology can achieve impressive results. However, it’s crucial to not only rely on these tools but also understand the theoretical foundations of weight optimization. Knowing why and how these weights are tweaked can empower developers to push boundaries and unlock new potentials in their models.

The Importance of Weights in Neural Networks

Weights play a pivotal role in shaping the capabilities of a neural network, acting as adjustable parameters that determine the strength and direction of the connections between nodes. When optimizing neural network weights, the precision of these adjustments directly correlates with the model’s predictive performance. Consider weights as the levers that can either amplify or dampen signals, thus governing how information flows within the network. With the current advancements, optimizing these weights is both an art and a science, demanding a keen understanding of underlying algorithms and innovative problem-solving approaches.
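The "lever" picture can be shown with a single neuron that computes a weighted sum of its inputs. This is a minimal sketch; the input and weight values are made up purely for illustration.

```python
def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of inputs: each weight scales (and may flip) its input."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias

inputs = [1.0, 2.0]

# A weight above 1 amplifies its input, a weight between 0 and 1 dampens it,
# and a negative weight reverses the direction of its contribution.
amplified = neuron_output(inputs, [2.0, 0.0])   # only the first input, doubled
dampened = neuron_output(inputs, [0.5, 0.0])    # only the first input, halved
mixed = neuron_output(inputs, [0.5, -1.5])      # second input pulls the sum negative
```

Training adjusts these weights (and the bias) so that the sums feeding each layer produce useful predictions.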

—

Detailed Description of Optimizing Neural Network Weights

Mastering the Art of Weight Optimization

If the core of a song lies in its melody and rhythm, then in neural networks, the essence is captured by the weights. They dictate how input signals are transformed and processed through the layers of a network. Effective weight optimization can lead to striking improvements, making it the unsung hero behind successful AI models. Imagine, for a moment, tuning a musical instrument; each string must be aligned perfectly to produce the desired symphony. Similarly, in the terrain of neural networks, weights must be optimized to conjure a spectacular result.

Organizations today are investing heavily in optimizing neural network weights, driven by the need for accuracy and efficiency. Applications ranging from personalized recommendations on streaming platforms to anomaly detection in financial transactions rely on well-tuned neural network models. But how do we achieve that level of optimization? The pathway includes careful data sampling, iterative testing, and adaptive optimizers such as RMSProp or Adagrad, alongside modern computational tools that help detect inconsistencies and identify areas for improvement.
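As a rough illustration of how RMSProp and Adagrad differ, the sketch below applies both to a toy one-parameter loss, f(w) = (w - 3)^2. The loss, learning rates, and step counts are assumptions chosen for the example; real training applies the same per-parameter updates to every weight in the network.

```python
import math

def grad(w):
    # Gradient of the toy loss f(w) = (w - 3)^2
    return 2.0 * (w - 3.0)

def adagrad(w, lr=0.5, steps=500, eps=1e-8):
    cache = 0.0
    for _ in range(steps):
        g = grad(w)
        cache += g * g                          # accumulate all squared gradients
        w -= lr * g / (math.sqrt(cache) + eps)  # step shrinks as cache grows
    return w

def rmsprop(w, lr=0.05, decay=0.9, steps=500, eps=1e-8):
    cache = 0.0
    for _ in range(steps):
        g = grad(w)
        cache = decay * cache + (1.0 - decay) * g * g  # moving average instead of a sum
        w -= lr * g / (math.sqrt(cache) + eps)
    return w

w_adagrad = adagrad(0.0)
w_rmsprop = rmsprop(0.0)
```

Both runs end near the minimum at w = 3. Adagrad's accumulated sum only grows, so its effective step size keeps shrinking, while RMSProp's moving average lets the step size recover when gradients become small.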

The Role of Algorithms in Weight Optimization

While strategy forms the backbone of any optimization effort, the algorithm is the engine that drives it. Several techniques are used to fine-tune models, including adaptive learning rates and methods such as Batch Normalization, which can help mitigate the ‘exploding or vanishing gradient’ problem. The effectiveness of these techniques in optimizing neural network weights is having a lasting impact, shaping industries and improving how trends are forecast.
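The core of Batch Normalization can be sketched in a few lines: each feature is normalized across the batch to roughly zero mean and unit variance, then rescaled by learnable parameters. The version below covers only the training-time forward pass and assumes a batch given as a list of rows; `gamma`, `beta`, and the sample values are illustrative.

```python
import math

def batch_norm(batch, gamma, beta, eps=1e-5):
    """Normalize each feature (column) across the batch, then scale and shift."""
    n = len(batch)
    num_features = len(batch[0])
    means = [sum(row[j] for row in batch) / n for j in range(num_features)]
    variances = [sum((row[j] - means[j]) ** 2 for row in batch) / n
                 for j in range(num_features)]
    return [
        [gamma[j] * (row[j] - means[j]) / math.sqrt(variances[j] + eps) + beta[j]
         for j in range(num_features)]
        for row in batch
    ]

# Two features on very different scales
batch = [[1.0, 200.0], [2.0, 400.0], [3.0, 600.0]]
normalized = batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
```

After normalization both features sit on a comparable scale, which tends to keep activations and gradients in a range where training stays stable. A full implementation would also track running statistics for use at inference time.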

However, the journey doesn’t end with choosing the right algorithm. It’s about blending them to align with the specific nature of tasks, understanding the data intricacies, and leveraging computational resources optimally. Organizations that have mastered this art describe the optimization process as transformative, helping them achieve levels of predictability and reliability once thought impossible. Narratives emerging from these ventures reveal testimonies not just of improved outcomes but of a drastic reduction in processing time and resource utilization.

Challenges in Weight Optimization

Even though the discourse around optimizing neural network weights is overwhelmingly positive, it is not free from challenges. Common hurdles include overfitting, where the model performs well on training data but poorly on unseen instances, and insufficient computational power to handle large, complex datasets. Seasoned practitioners and businesses address these challenges with techniques such as ensemble training and with hardware like GPUs that is well suited to deep learning workloads. As each obstacle is addressed, the model turns from a theoretical construct into a tangible tool of innovation.
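One simple way to spot overfitting is to watch training and validation loss side by side. The sketch below uses early stopping, a technique not mentioned above but commonly used alongside the ones that are; the loss values are invented for illustration.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch, stopping once validation loss
    has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch

# Hypothetical losses: training keeps improving while validation turns upward
train_losses = [0.90, 0.60, 0.40, 0.25, 0.15, 0.08]
val_losses = [0.95, 0.70, 0.55, 0.58, 0.63, 0.71]

best_epoch = early_stopping_epoch(val_losses)
final_gap = val_losses[-1] - train_losses[-1]  # a widening gap signals overfitting
```

Here the weights from epoch 2 would be kept, since validation loss only rises afterwards even though training loss continues to fall.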

The intrigue hovering around optimizing neural network weights often attracts researchers and companies alike, drawn by the promise of untapped potential and breakthroughs. Every optimized model serves as a cornerstone for greater pursuits, enabling everything from natural language processing to automated driving technologies. Companies frequently echo the sentiment that while optimization may seem daunting, the journey rewards with unprecedented insights and capabilities, serving as a testament to human ingenuity and technological advancement.

Key Actions for Optimizing Neural Network Weights

The Significance of Neural Network Weight Optimization

In the ever-evolving domain of artificial intelligence, optimizing neural network weights stands as a crucial endeavor. Much like sculpting a masterpiece from a slab of marble, refining these weights involves precision, patience, and understanding. It’s not just about tweaking parameters blindly but deciphering the optimal configuration that unlocks a model’s full potential. This process begins at the initialization phase and carries through to convergence, demanding sophisticated techniques and industry know-how.

Across industries, weight optimization translates to enhanced efficiency and accuracy. Whether it’s improving customer experiences through personalized recommendations or forecasting financial markets, the implications are expansive. By employing effective strategies and embracing innovation, businesses can rise to the challenge, transforming data into insightful predictions and strategic initiatives. Moreover, sharing these success stories adds to the collective knowledge, serving as a pillar for those venturing into the intriguing world of neural networks.

Strategies for Optimizing Neural Network Weights

Remembering that neural networks are loosely inspired by the brain’s synaptic connections can offer useful insight into their potential. Just as our learning is never static, neither should our approach to optimizing neural network weights be. Regularization techniques help prevent overfitting and improve a model’s ability to generalize. Adaptive learning rates and optimizers tuned to the specific task can also improve model performance significantly.
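Two of these strategies, regularization and a changing learning rate, can be combined in a short sketch. It assumes an L2 penalty of (lam / 2) * w^2 added to the toy loss (w - 3)^2 and a simple inverse-time learning-rate decay; the function name and all parameter values are illustrative.

```python
def train_with_l2(w=0.0, lam=1.0, lr0=0.1, decay=0.01, steps=1000):
    """Gradient descent on (w - 3)^2 + (lam / 2) * w^2 with a decaying learning rate."""
    for t in range(steps):
        lr = lr0 / (1.0 + decay * t)     # inverse-time learning-rate decay
        data_grad = 2.0 * (w - 3.0)      # gradient of the data loss
        w -= lr * (data_grad + lam * w)  # L2 penalty adds lam * w to the gradient
    return w

w_plain = train_with_l2(lam=0.0)  # no regularization
w_reg = train_with_l2(lam=1.0)    # L2 penalty pulls the weight toward zero
```

Without the penalty the weight settles at 3, the minimum of the data loss. With `lam=1.0` it settles at 6 / (2 + lam) = 2, showing how regularization trades a little training fit for smaller weights.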

Yet it is the steady pursuit of new methods and the continuous adaptation of existing ones that build a robust foundation for effective weight optimization. The synergy between theoretical knowledge and practical application is the keystone to unlocking a neural network’s potential. As industries move forward with their AI efforts, these optimizations open up less-explored paths and new avenues for innovation.

Further Exploration on Optimizing Neural Network Weights

—

In the quest to optimize neural network weights, the strategy is multifaceted, involving a blend of science and art. By embracing a diverse set of tactics, from initialization to regularization, and leveraging computational advancements, stakeholders can unlock unprecedented opportunities for growth and innovation. As neural network applications continue to expand, the strides in weight optimization will inevitably propel industries toward a bolder, brighter future.