Optimizing the Feature Selection Process

In data science, one truth prevails: not all data is created equal. Facing a massive dataset with countless features and trying to pinpoint the few that actually matter can feel like sipping water from a firehose. This is where optimizing the feature selection process comes into play, much like a skilled orchestra conductor who ensures every instrument contributes to a harmonious performance.

In a competitive business landscape, the key to standing out is often found in the finer details: the specific variables that truly drive outcomes. Whether you’re building a predictive model for real estate prices or fine-tuning a recommendation engine like Netflix’s, selecting the right features is essential. It’s like brewing the perfect cup of coffee; too many ingredients spoil the flavor, while the right blend creates magic. As major tech companies make headlines for their innovative use of data, an optimized feature selection process looks less like a passing trend and more like a substantial shift in strategic thinking that can drive business success.

Accurate and efficient feature selection can reduce overfitting, improve model interpretability, and shorten training times. You save resources, often get better performance, and understand your model better. That is the promise of feature selection methods: models that learn like curious children, picking up the essentials while discarding the noise. Savvy data scientists relish the search for that needle in the haystack, knowing that finding it can lead to the next big breakthrough and reshape industries far and wide.

But let’s be real; data transformation can sound dry to those unfamiliar with it. And yet there’s a compelling narrative here: each bit of data has a story to tell, an underlying truth waiting to be unveiled. Data-driven decision-making is akin to detective work, and at the heart of the investigation is the skillful art of feature selection.

Benefits of Optimizing Feature Selection

Let’s dive deeper into why optimizing the feature selection process matters. Imagine you’re assembling a dream team for a championship game. You wouldn’t just pick any player off the bench. Feature selection works the same way: you put the best players on the field, the ones that will help you win. By reducing your dataset to its essential features, you gain precision, open the door to better predictions, and cut unnecessary computational cost. Picture a comedian who trims each joke to its punchiest form; the result is an effective, streamlined performance. Feature selection is your comedic timing, the delivery that keeps your data narrative compelling and your audience engaged.

—

Introduction to Optimizing the Feature Selection Process

Optimizing the feature selection process isn’t just a buzzword in data analytics; it’s increasingly the secret sauce for companies looking to harness deep learning and predictive analytics. Consider this: businesses today thrive not merely on information, but on the insights extracted from it. Building robust, cost-effective models quickly enough to adapt to market changes is the name of the game. Feature selection is akin to reading a treasure map and identifying the X that marks where the gold lies.

A classic example is healthcare analytics, where hundreds of biological attributes are considered when predicting disease states or treatment responses. Optimizing feature selection in such cases can be life-changing: focusing on the most critical variables gives a better understanding of the underlying mechanisms and can improve patient outcomes. Imagine a superhero with an unerring sense of priority, swiftly zeroing in on what truly matters and making every decision count. That’s the essence of optimized feature selection.

Businesses that strategically implement an optimized feature selection process are sometimes reported to see up to a 20% improvement in their model’s predictive accuracy, although the actual gain depends heavily on the dataset and the model. These aren’t just fancy numbers to put on a PowerPoint; they represent real growth potential and competitive advantage. As more industries realize this, feature selection becomes the torchbearer leading them into a data-empowered future.

Another intriguing aspect is serendipity. Sometimes companies stumble upon insights they weren’t even looking for, simply because they had the right features in focus. The proverbial “Aha!” moment becomes more frequent with an effective feature selection process, turning data into fertile ground for innovation.

Techniques in Feature Selection

To optimize feature selection, you need the right techniques. They range from the simple, like manual selection by domain experts, to the complex, like algorithmic approaches that use machine learning models themselves to rank feature importance. Wrapper methods evaluate candidate feature subsets by training a model on each one, which gives a more granular selection at a higher computational cost. Embedded approaches select features as part of training the model itself. Much like a culinary artist experimenting to find the perfect spice blend, you’ll need a mix of creativity, skill, and a bit of adventurous spirit.
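As one illustration of using a model to rank feature importance, here is a minimal sketch of permutation importance with NumPy. The function name `permutation_importance`, the plain least-squares model, and the synthetic data are all assumptions made for this example; for simplicity it scores on the training data, whereas in practice you would usually score on held-out data.

```python
import numpy as np

def permutation_importance(X, y, n_repeats=10, seed=0):
    """Score each feature by how much shuffling it increases a linear model's MSE."""
    rng = np.random.default_rng(seed)
    Xb = np.column_stack([X, np.ones(len(X))])      # append an intercept column
    coef, *_ = np.linalg.lstsq(Xb, y, rcond=None)   # ordinary least-squares fit
    base_mse = np.mean((Xb @ coef - y) ** 2)
    scores = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        for _ in range(n_repeats):
            Xp = Xb.copy()
            Xp[:, j] = rng.permutation(Xp[:, j])    # break the link between feature j and y
            scores[j] += np.mean((Xp @ coef - y) ** 2) - base_mse
    return scores / n_repeats

# Synthetic data (an assumption for illustration): only features 0 and 2 drive y.
rng = np.random.default_rng(42)
X = rng.normal(size=(200, 4))
y = 3.0 * X[:, 0] - 2.0 * X[:, 2] + rng.normal(scale=0.1, size=200)

importance = permutation_importance(X, y)
ranking = np.argsort(importance)[::-1]              # most important feature first
print("importance:", np.round(importance, 3))
print("ranking:", ranking)
```

Features whose shuffling barely changes the error are candidates for removal; the two informative features should sit at the top of the ranking here.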

Real-world Applications and Success Stories

Look no further than the industry giants. Tech behemoths like Google and Amazon use feature selection to refine their algorithms, from improving search relevancy to crafting recommendation engines that seem to read your mind. By optimizing their feature selection process, they’re not just maintaining their perch atop the tech hierarchy but also paving the way for new ventures and applications. The magic lies in treating data as a dynamic, evolving entity. What works today might not work tomorrow, so continually revisiting your feature selection keeps you ready for whatever the future holds.

—

Related Topics to Optimizing the Feature Selection Process

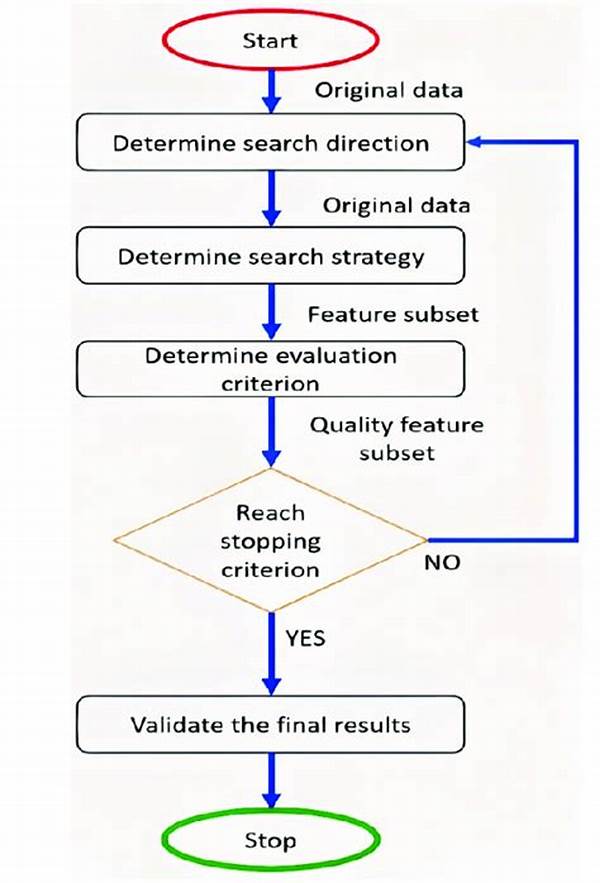

Understanding the Structure of an Optimized Feature Selection Process

Optimizing the feature selection process is much like crafting an intricate masterpiece, where precision and expertise converge to bring out the finest details from the plethora of available data. It’s not just about removing redundant features but about understanding the dataset deeply. There’s a touch of artistry to it; imagine Michelangelo chiseling away at a marble block, knowing exactly what to carve away to reveal the David underneath.

One cannot overlook the rational side of this process. Statistical tools and algorithms play a pivotal role: Decision Trees and Random Forests produce feature importance scores, and L1-based feature selection relies on a penalty that pushes the coefficients of uninformative features to exactly zero. These are just a few of the methods used to determine feature importance. Structured selection makes models easier to interpret, enabling data scientists to make more informed decisions. Like strategists on a battlefield, those optimizing feature selection make calculated moves, aware that the right focus can bring victory in the form of insights and innovation.
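To make the L1-based idea concrete, here is a rough sketch of lasso regression solved with proximal gradient descent (ISTA) in NumPy. The function name `lasso_ista`, the penalty strength `alpha=0.1`, and the synthetic data are assumptions chosen for illustration, not a recommended configuration.

```python
import numpy as np

def lasso_ista(X, y, alpha=0.1, n_iter=500):
    """Minimize (1/2n)*||y - Xw||^2 + alpha*||w||_1 by proximal gradient descent."""
    n, d = X.shape
    w = np.zeros(d)
    step = n / (np.linalg.norm(X, 2) ** 2)          # 1 / Lipschitz constant of the gradient
    for _ in range(n_iter):
        grad = X.T @ (X @ w - y) / n                # gradient of the squared-error term
        z = w - step * grad
        w = np.sign(z) * np.maximum(np.abs(z) - step * alpha, 0.0)  # soft-thresholding
    return w

# Synthetic data (an assumption for illustration): only features 0 and 3 matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
y = 2.0 * X[:, 0] - 1.5 * X[:, 3] + rng.normal(scale=0.1, size=200)

# Standardize the features and center the target so no intercept is needed.
X = (X - X.mean(axis=0)) / X.std(axis=0)
y = y - y.mean()

w = lasso_ista(X, y)
selected = np.flatnonzero(np.abs(w) > 1e-8)         # features with non-zero coefficients
print("coefficients:", np.round(w, 3))
print("selected features:", selected)
```

The soft-thresholding step is what sets small coefficients to exactly zero, so the surviving non-zero coefficients double as the selected feature set. A larger `alpha` keeps fewer features.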

What makes an optimized feature selection process a game-changer in analytics is not only its ability to improve model efficacy but also the resources it saves. Companies can get overly ambitious with the amount of data they collect, which can lead to bloated, inefficient models. With the right process in place, resources can be redirected toward more strategic initiatives, akin to an athlete shedding unnecessary weight to boost performance in a critical race.

Advanced Techniques in Feature Selection

Feature selection is often approached as both an art and a science. As the process matures, it moves from basic methods to more advanced techniques such as feature embedding, forward and backward selection, and genetic algorithms. Forward selection starts with no features and adds the most helpful one at each step, while backward selection starts with all of them and removes the least helpful. These methods aren’t merely theoretical; they are applied to real problems, whether predicting customer churn or refining AI-driven chatbots. Once companies master these techniques, they’re not just following market trends; they’re setting them, with optimized feature selection as their trusty compass.
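Forward selection, for instance, can be sketched in a few lines of NumPy. This is a simplified greedy version that assumes a least-squares model and a single hold-out split; the helper names `holdout_mse` and `forward_selection`, the `min_gain=0.05` stopping threshold, and the synthetic data are all assumptions for this example.

```python
import numpy as np

def holdout_mse(X_train, y_train, X_val, y_val):
    """Fit least squares on the training split and return MSE on the validation split."""
    A = np.column_stack([X_train, np.ones(len(X_train))])
    coef, *_ = np.linalg.lstsq(A, y_train, rcond=None)
    B = np.column_stack([X_val, np.ones(len(X_val))])
    return float(np.mean((B @ coef - y_val) ** 2))

def forward_selection(X_train, y_train, X_val, y_val, min_gain=0.05):
    """Greedily add the feature that lowers validation MSE most; stop when the gain is small."""
    selected, remaining = [], list(range(X_train.shape[1]))
    best = float(np.mean((y_val - y_train.mean()) ** 2))    # baseline: predict the mean
    while remaining:
        scores = {
            j: holdout_mse(X_train[:, selected + [j]], y_train,
                           X_val[:, selected + [j]], y_val)
            for j in remaining
        }
        j_best = min(scores, key=scores.get)
        if best - scores[j_best] < min_gain:                # improvement too small: stop
            break
        selected.append(j_best)
        remaining.remove(j_best)
        best = scores[j_best]
    return selected

# Synthetic data (an assumption for illustration): only features 1 and 4 drive y.
rng = np.random.default_rng(7)
X = rng.normal(size=(300, 6))
y = 4.0 * X[:, 1] + 2.0 * X[:, 4] + rng.normal(scale=0.5, size=300)

selected = forward_selection(X[:200], y[:200], X[200:], y[200:])
print("selected features:", selected)
```

Backward selection follows the same pattern in reverse. Note that choosing features against one validation split can overfit to that split, so a final check on untouched data is a sensible precaution.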

Tips for Optimizing the Feature Selection Process

Understanding the problem domain can guide you in identifying key features. It’s your first filter before complex algorithms come into play.

Tools like heatmaps and scatter plots provide insight into feature correlations and importance, often revealing patterns invisible in raw data.

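Techniques like PCA can help reduce the size of the feature set while preserving most of the information needed for accurate predictions. Keep in mind that PCA builds new features as combinations of the originals rather than picking a subset of them, so some interpretability is traded away.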

Regularly revisit your selected features as new data arrives, ensuring your model remains relevant and sharp in its predictions.

Try multiple feature selection methods to find the most effective for your particular dataset and objective.

Use cross-validation to ensure that your selected features consistently improve model performance across different data samples.
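The cross-validation tip can be sketched as follows: score a candidate feature subset and the full feature set with the same k-fold splits and compare. This is a minimal NumPy illustration; the helper `kfold_mse`, the least-squares model, and the synthetic data with many noise features are assumptions made for the example.

```python
import numpy as np

def kfold_mse(X, y, k=5, seed=0):
    """Return the validation MSE of a least-squares model on each of k folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(X)), k)
    scores = []
    for i in range(k):
        test = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        A = np.column_stack([X[train], np.ones(len(train))])
        B = np.column_stack([X[test], np.ones(len(test))])
        coef, *_ = np.linalg.lstsq(A, y[train], rcond=None)
        scores.append(np.mean((B @ coef - y[test]) ** 2))
    return np.array(scores)

# Synthetic data (an assumption for illustration): 30 features, only the first two matter.
rng = np.random.default_rng(1)
X = rng.normal(size=(60, 30))
y = 2.0 * X[:, 0] - 1.0 * X[:, 1] + rng.normal(scale=1.0, size=60)

subset = [0, 1]                                    # candidate selection to validate
all_scores = kfold_mse(X, y)                       # every feature, noise included
subset_scores = kfold_mse(X[:, subset], y)         # same folds, selected features only

print("all features  : mean MSE = %.3f" % all_scores.mean())
print("selected only : mean MSE = %.3f" % subset_scores.mean())
```

Using the same seed for both calls keeps the folds identical, so the comparison reflects the features rather than the split. The same loop can be reused to compare the outputs of several selection methods on one dataset.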

The Importance of Feature Selection in Machine Learning

Feature selection isn’t just a technical step in data processing; it’s a critical component of an agile and robust machine learning pipeline, the backbone of any high-performing model. Poorly chosen features can misguide the training process, leading the model to undervalue or overvalue certain predictors. Just as a chef wouldn’t ignore the quality of ingredients, data scientists shouldn’t skip the meticulous work of feature selection. By zeroing in on the most pertinent characteristics, you carve a path to more accurate and reliable models, combining intuition with data-backed evidence.