Neural Network Weight Initialization

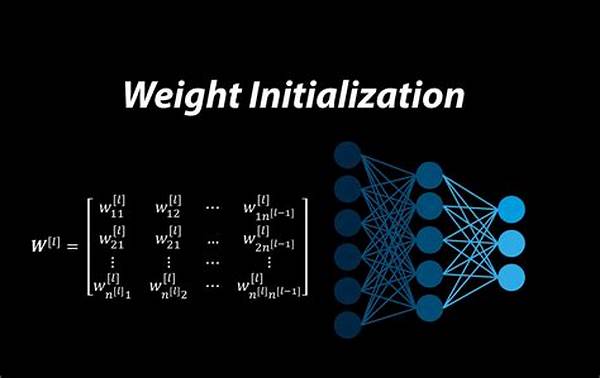

Neural networks stand among the most remarkable technologies in artificial intelligence. For anyone delving deeper into them, one of the first steps that can significantly affect a model’s performance is weight initialization. The step may sound trivial at first glance, but its implications are profound: it shapes how a network learns and adapts from the very first update.

Imagine embarking on a journey where the direction of every step can change where you end up. In a neural network, weight initialization sets the starting point for the learning journey: it assigns initial values to the weights (the connections between neurons) before any training begins. You might wonder, “Why is this crucial?” Here’s a hint: the right initialization can lead to faster convergence and better-performing models. Conversely, poor initialization can make training converge very slowly or not at all, because the initial scale of the weights decides whether signals and gradients shrink toward zero or blow up as they pass through the layers. Either way, your dream of building a super-smart AI turns into a daunting task.
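
One classic example of poor initialization is giving every weight the same value. The minimal NumPy sketch below shows why, assuming a tiny two-layer network with a tanh hidden layer, a linear output, and a mean-squared-error loss; the layer sizes, the constant 0.5, and the helper names `forward` and `hidden_grad` are hypothetical choices for illustration. It compares the gradient of the first-layer weights under constant initialization and under small random initialization.

```python
import numpy as np

def forward(x, w1, w2):
    """Two-layer network: tanh hidden layer, linear output."""
    h = np.tanh(x @ w1)   # hidden activations, shape (batch, hidden)
    return h, h @ w2      # output, shape (batch, 1)

def hidden_grad(x, y, w1, w2):
    """Gradient of the mean squared error with respect to w1."""
    h, out = forward(x, w1, w2)
    d_out = 2 * (out - y) / len(x)        # dL/d_out
    d_h = (d_out @ w2.T) * (1 - h ** 2)   # backpropagate through tanh
    return x.T @ d_h                      # dL/dw1, shape (inputs, hidden)

rng = np.random.default_rng(0)
x = rng.normal(size=(8, 3))
y = rng.normal(size=(8, 1))

# Constant initialization: every hidden unit starts out identical.
w1_const = np.full((3, 4), 0.5)
w2_const = np.full((4, 1), 0.5)
g_const = hidden_grad(x, y, w1_const, w2_const)

# Small random initialization breaks the symmetry between hidden units.
w1_rand = rng.normal(scale=0.5, size=(3, 4))
w2_rand = rng.normal(scale=0.5, size=(4, 1))
g_rand = hidden_grad(x, y, w1_rand, w2_rand)

# Each column of the gradient belongs to one hidden unit.
print(np.allclose(g_const, g_const[:, :1]))  # True: all units get the same update
print(np.allclose(g_rand, g_rand[:, :1]))    # False: units can learn different features
```

Because the hidden units receive identical gradients, every update keeps them identical, so the layer behaves like a single neuron no matter how wide it is. This is why practical initializers draw weights at random.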

Sometimes a bit of humor helps with intricate concepts. Think of weight initialization like baking cookies. You have your basic ingredients: flour, sugar, butter, and so forth. Start with the right proportions and the result is delicious cookies. Get the proportions wrong and your cookies might end up too sweet, too bland, or unevenly baked. Neural network weight initialization isn’t much different. It’s all about starting on the right foot so that the learning process is as smooth as grabbing that perfect cookie from the jar.

Even though it rarely receives the attention it deserves, weight initialization is a fundamental step that rewards patience, precision, and planning. As you delve deeper into the fascinating world of neural networks, remember that every model’s success story began with this seemingly humble step.

The Significance of Proper Initialization

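A well-initialized neural network can significantly outperform a poorly initialized one, and this realization spurred researchers to look for principled ways to choose initial weights. Two of the most popular techniques are Xavier (Glorot) initialization and He (Kaiming) initialization, both designed to counter vanishing and exploding gradients by scaling the random weights to the size of each layer. Writing fan_in and fan_out for the number of input and output units of a layer, Xavier initialization draws weights with variance 2 / (fan_in + fan_out) and is commonly paired with tanh and sigmoid activations, while He initialization uses variance 2 / fan_in, where the extra factor of 2 compensates for ReLU setting roughly half of its inputs to zero. To borrow a driving analogy, Xavier keeps the gear changes smooth, while He gives ReLU networks the extra power to climb steep hills without stalling. Understanding these techniques allows practitioners to harness the full potential of neural networks, so that each learning journey resembles a well-navigated road trip.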
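
Both schemes can be sketched in a few lines of NumPy. This is a minimal illustration, assuming dense layers whose weight matrices have shape (fan_in, fan_out), where fan_in and fan_out count a layer’s input and output units; the function names are hypothetical. Xavier uniform samples from U(-a, a) with a = sqrt(6 / (fan_in + fan_out)), which gives variance 2 / (fan_in + fan_out), and He normal samples from N(0, 2 / fan_in).

```python
import numpy as np

def xavier_uniform(fan_in, fan_out, rng):
    """Glorot/Xavier uniform: U(-a, a) with a = sqrt(6 / (fan_in + fan_out)).
    A uniform variable on (-a, a) has variance a**2 / 3 = 2 / (fan_in + fan_out)."""
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))

def he_normal(fan_in, fan_out, rng):
    """He (Kaiming) normal: N(0, 2 / fan_in), intended for ReLU layers.
    Plain (untruncated) normal in this sketch."""
    std = np.sqrt(2.0 / fan_in)
    return rng.normal(0.0, std, size=(fan_in, fan_out))

rng = np.random.default_rng(42)
w_x = xavier_uniform(256, 128, rng)
w_h = he_normal(256, 128, rng)

# Empirical variance next to the theoretical target for each scheme.
print("Xavier:", w_x.var(), 2 / (256 + 128))
print("He:    ", w_h.var(), 2 / 256)
```

In practice you would normally reach for a framework’s built-in initializers, such as `torch.nn.init.xavier_uniform_` and `torch.nn.init.kaiming_normal_` in PyTorch; details such as truncation and the choice of fan mode vary between libraries.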

—

Structuring Neural Networks: The Key to Effective Initialization

The neural network realm is a fascinating jungle, where understanding your terrain can make all the difference. At its core, weight initialization is about setting the right foundation, the bedrock upon which learning builds. Visualize constructing a skyscraper: a strong foundation ensures longevity and stability, and it has to be designed for the building that will stand on it. Likewise, the appropriate initial weight scale depends on the network’s structure, in particular how many inputs and outputs each layer has and which activation function it uses.

Behind each of today’s AI success stories is a carefully structured network with well-initialized weights. Just as a builder starts with a blueprint, lays a solid foundation, and chooses quality materials before construction begins, weight initialization isn’t a slapdash task. It calls for careful planning and execution, because the starting point can make or break the final model.

Delving Deeper into Neural Network Geometry

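The landscape of neural network weight initialization is rich with choices. Internally, every node in the network computes a weighted sum of its inputs and passes the result through an activation function. Picture Alice in Wonderland at a crossroad with multiple paths, each promising a different adventure. In a neural network, the initial weights decide which path the signals take: if the weights are too small, activations shrink layer after layer until almost nothing reaches the output; if they are too large, activations grow until they explode or saturate the activation function. Gradients flowing backward during training face the same risk.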
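
The effect of the initial weights on the forward signal is easy to observe numerically. The sketch below is a hypothetical experiment rather than a benchmark: it pushes a random batch through 30 bias-free ReLU layers of width 512 and records the standard deviation of the activations after each layer, for three weight scales (a fixed 0.01, a fixed 1.0, and the He scale sqrt(2 / fan_in)). The depth, width, batch size, and the helper name `activation_stds` are arbitrary assumptions.

```python
import numpy as np

def activation_stds(weight_std_fn, depth=30, width=512, seed=0):
    """Push a random batch through `depth` bias-free ReLU layers and
    return the standard deviation of the activations after each layer.
    `weight_std_fn(fan_in)` gives the std of the Gaussian weights."""
    rng = np.random.default_rng(seed)
    x = rng.normal(size=(256, width))           # batch of 256 random inputs
    stds = []
    for _ in range(depth):
        w = rng.normal(0.0, weight_std_fn(width), size=(width, width))
        x = np.maximum(0.0, x @ w)              # linear layer followed by ReLU
        stds.append(x.std())
    return stds

too_small = activation_stds(lambda n: 0.01)
too_large = activation_stds(lambda n: 1.0)
he = activation_stds(lambda n: np.sqrt(2.0 / n))

for name, s in [("std=0.01", too_small), ("std=1.0", too_large), ("He", he)]:
    print(f"{name:>8}: layer 1 {s[0]:.3g}, layer 30 {s[-1]:.3g}")
```

With a fixed scale of 0.01 the activations shrink by a roughly constant factor at every layer until they are vanishingly small; with 1.0 they grow by a roughly constant factor until they are astronomically large; with the He scale they stay at about the same magnitude throughout. Gradients flowing backward are affected in a similar way.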

Embrace the strategies illuminated by modern research and tools; the practical side matters most when turning academic concepts into real-world impact. Weight initialization sets the tone for learning, a prelude that influences how quickly and accurately a model adapts to patterns in the data. As you apply these insights, exploratory experiments turn into predictable, successful outcomes.

The Art of Balancing Depth and Breadth

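Venturing into the depths of neural network design demands balancing depth with breadth. Herein lies the artistry of neural network architecture: choosing the number of layers and the units per layer, then picking initial values that fit them. Both dimensions affect initialization. Greater depth compounds any mis-scaling, because a small change in signal scale at one layer is multiplied across every layer that follows, while breadth sets each layer’s fan-in and therefore the weight scale it needs. Aligning the two yields a structure that can handle diverse applications without crumbling under complexity or redundancy.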
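
Because breadth sets each layer’s fan-in, an initializer has to compute its scale layer by layer. The following minimal sketch assumes a plain multilayer perceptron with ReLU hidden layers, a linear output layer, zero biases, and He-normal weights; the layer widths, which narrow from 784 inputs to 10 outputs, are hypothetical, and `init_mlp` and `forward` are illustrative helpers, not a library API.

```python
import numpy as np

def init_mlp(layer_sizes, rng):
    """He-normal weights and zero biases for each consecutive (fan_in, fan_out) pair."""
    params = []
    for fan_in, fan_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        w = rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))
        b = np.zeros(fan_out)
        params.append((w, b))
    return params

def forward(x, params):
    """ReLU on the hidden layers, linear output layer."""
    for w, b in params[:-1]:
        x = np.maximum(0.0, x @ w + b)
    w, b = params[-1]
    return x @ w + b

rng = np.random.default_rng(1)
sizes = [784, 1024, 256, 64, 10]    # hypothetical layer widths
params = init_mlp(sizes, rng)
out = forward(rng.normal(size=(32, 784)), params)

print([w.shape for w, _ in params])
print(out.shape, out.std())
```

Since each weight matrix is scaled by its own fan-in, the output stays on the same order of magnitude as the input even though the layer widths vary widely.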

So heed the insight of seasoned experts and budding innovators alike: impressive AI systems often begin with seemingly trivial steps, where every mathematical detail deserves attention and precision. Getting those details right is what gives you a competitive edge in today’s AI-driven market.

Crafting Success in Neural Network Campaigns

Heed these lessons and you’ll navigate neural networks with confidence and finesse. Think of it as a campaign in which each strategic decision echoes through the network’s connections. Just as marketing teams craft campaigns that grab attention and inspire action, skillful engineers design neural networks that stand out for performance, reliability, and ease of understanding.

Ultimately, your journey into neural network weight initialization is a quest to get the most out of your learning models. Well-chosen initializations help produce models that aren’t just functional but genuine contributions to technology and innovation.

—

Discussion Topics on Neural Network Weight Initialization

Understanding the Challenges

Neural network weight initialization is more than a technical necessity; it’s a gateway to innovation and efficiency. The initial weight values determine how a network starts its learning journey and how readily it can pick up the patterns in its data. Imagine a symphony orchestra tuning its instruments: just as the right pitch creates a harmonious sound, appropriate weight initialization leads to smooth learning.

The sophistication behind neural network weight initialization reflects a blend of art and science. It’s not just numbers; it’s about creating a starting line from which a network can thrive. A deliberately chosen starting point has real value: it paves the way for fast convergence and helps the network reach its full potential.