In the rapidly evolving world of artificial intelligence (AI) and machine learning, the buzzwords “model interpretability and transparency” are quickly gaining ground. This is not just a trend; it’s a necessity. In a landscape filled with complex algorithms and black-box models, the ability to understand and trust what these models are doing is crucial. Imagine, if you will, a scenario where an AI system makes decisions that impact your life—like approving your loan or diagnosing your medical condition—but you have no idea how it arrived at those conclusions. It sounds like a plot for a dystopian movie, doesn’t it? This is precisely why the quest for model interpretability and transparency is not just a technical challenge, but an ethical mandate.

In simple terms, model interpretability refers to our ability to understand how a model makes its predictions. It’s like turning on the light in a dark room; suddenly, everything becomes clearer. Transparency, on the other hand, is about how openly that information is shared with the world. The two go hand in hand, like the yin and yang of ethical AI. They play a vital role in ensuring that AI systems are not just intelligent, but also accountable and fair. It’s like having a trustworthy friend who doesn’t just say, “Trust me,” but also shows you the receipts. Together, these principles help us build AI systems that we can rely on, systems that will soon be a part of our everyday lives, affecting everything from healthcare to finance to social media.
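To make "understanding how a model makes its predictions" concrete, here is a minimal sketch in Python. It assumes a tiny linear loan-scoring model whose feature names, weights, and bias are all invented for illustration and come from no real system. Because the score is a plain weighted sum, each feature's contribution can be read off directly, which is one simple form interpretability can take.

```python
# Hypothetical weights for an illustrative loan-scoring model (not from any real system).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "years_employed": 0.2}
BIAS = -1.0


def explain(applicant):
    """Return the score and each feature's additive contribution to it."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    return score, contributions


# A made-up applicant: income in thousands, debt-to-income ratio, years in current job.
applicant = {"income_k": 50, "debt_ratio": 0.5, "years_employed": 3}
score, contributions = explain(applicant)

print(f"score = {score:+.2f}")
# List the features from most to least influential, by absolute contribution.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"  {name:>15}: {value:+.2f}")
```

The printed breakdown is the "receipts": it shows which inputs pushed the score up and which pulled it down. Real systems are rarely this simple, but the goal of an explanation stays the same.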

In the following sections, we’ll dive into the many facets of model interpretability and transparency. Whether you’re a tech enthusiast, a business leader, or just a curious soul, you’ll find that understanding these concepts is like having a superpower in the digital age. So, buckle up as we embark on this enlightening journey.

The Power and Responsibility of AI

In a world where AI decisions can impact lives significantly, the importance of model interpretability and transparency cannot be overstated. This dynamic duo is like the superhero team of the tech realm, offering both power and accountability. While technology offers the power to improve efficiencies and drive novel insights, it also carries the responsibility to do so ethically. Nobody wants a world where AI runs amok, making inexplicable decisions with life-altering consequences.

The concept of model interpretability and transparency is also a business imperative. Companies that embrace these principles stand to gain consumer trust—a currency more valuable than gold in today’s skeptical market. We’re living in an age where customers demand more than a product or service—they seek a relationship built on trust and openness. Businesses that unlock the mystery of their AI models can communicate their processes more effectively, creating a unique selling point that differentiates them in a crowded marketplace. Embracing these principles is not just the right thing to do; it’s a smart business strategy.

—

The discussion on model interpretability and transparency can be both intriguing and educational. Imagine you’re an investigator diving deep into the enigmatic world of machine learning models. Your task is to unveil the hidden secrets behind their predictive power and bring this knowledge to light. It’s akin to detective work: the more you explore, the more intricate the webs you discover.

Why It Matters

In applications like healthcare, finance, and self-driving cars, the stakes are incredibly high. One misjudgment by an AI model can lead to catastrophic outcomes. To avert such disasters, model interpretability and transparency serve as the guiding lights that enable us to see through complex models. This capability makes it easier for stakeholders to trust AI decisions, much like how transparent accounting lays the groundwork for financial credibility. Knowing the ‘how’ and ‘why’ behind a model’s prediction can be the make-or-break factor that determines a product’s success in highly regulated sectors.

Moreover, this is not just a technological journey; it’s a human-centric story. People want to trust technology, but they also want assurances. They seek comfort in understanding that AI is working for them, not against them. By integrating model interpretability and transparency into the AI development process, we anchor AI technologies within ethical boundaries, thus creating a more inclusive and equitable technological future.

Trends and Future Directions

So, how do we ensure model interpretability and transparency remain at the forefront of AI development? Researchers are working on new algorithms and methods to make complex models interpretable. Lawmakers are watching these advances closely as they draft frameworks for AI accountability. It’s a collaborative effort in which tech companies, academia, and governments need to walk hand in hand, exemplifying how teamwork truly makes the dream work.

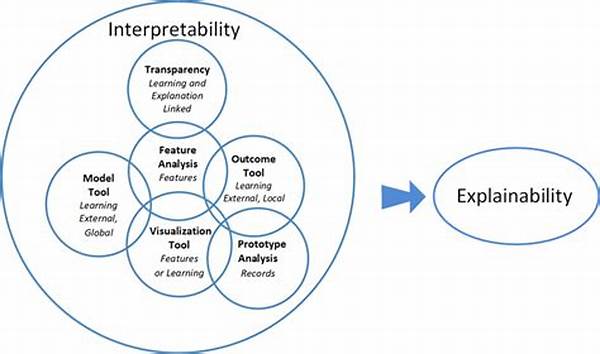

Organizations are reaping benefits by adopting explainable AI, a modern trend that focuses on making complex models interpretable while retaining their predictive power. The future it promises is one of AI models that are not only transparent and interpretable but also robust and effective across various scenarios. It’s a world where open-source communities and private enterprises work together to design the AI of tomorrow: ethical, smart, and responsible.

—

Realizing the Goals of Model Interpretability and Transparency

Understanding the importance of model interpretability and transparency is just the starting point. Let’s dive into the primary purposes that drive this vital aspect.

Enhancing Trust Through AI Transparency

In a world increasingly reliant on machine learning models, fostering trust is a critical goal. Model interpretability and transparency play an indispensable role in engendering this trust. When stakeholders, ranging from customers to regulators, are assured of a system’s fairness and accountability, the groundwork is laid for a sustainable relationship built on mutual confidence.

Such transparency also facilitates an open dialogue, allowing end-users to voice their concerns and provide input, thus leading to AI systems that are not only effective but also user-centric. Imagine what it would mean for a doctor to confidently rely on a diagnostic tool, knowing that they can explain its recommendations to patients. It’s the kind of clarity that dissolves fear and uncertainty, replacing them with informed decision-making and stronger relationships.

—

The road to achieving effective model interpretability and transparency is not without its challenges. From a technical standpoint, complex models like deep neural networks are notoriously difficult to interpret. However, this complexity doesn’t have to be an insurmountable barrier. Instead, it serves as a call to action for researchers and practitioners to innovate and overcome these hurdles.

Strategies for Improvement

The pressing question is: how do we navigate these challenges? First, simplifying models while maintaining accuracy is one promising path. Hybrid models that combine interpretable components with complex algorithms are gaining traction. Further, the development of visualization tools aids in demystifying intricate models, transforming abstract numbers into comprehensible insights.
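As one illustration of transforming abstract numbers into comprehensible insights, the sketch below uses permutation importance, a common model-agnostic technique that this article does not itself name. It shuffles one input column at a time and measures how much the model's error grows. The `black_box` function, the synthetic data, and the text bar chart are all assumptions made for the example, standing in for a real model and a real visualization tool.

```python
import random


def black_box(row):
    """Hypothetical opaque model: leans heavily on x0, slightly on x1, ignores x2."""
    x0, x1, x2 = row
    return 3.0 * x0 + 0.5 * x1


def mean_squared_error(model, rows, targets):
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_features, rng):
    """Increase in error when one feature's column is shuffled across rows."""
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with only feature j replaced by its shuffled value.
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mean_squared_error(model, shuffled, targets) - baseline)
    return importances


rng = random.Random(0)  # fixed seed so the run is repeatable
rows = [tuple(rng.uniform(-1, 1) for _ in range(3)) for _ in range(200)]
targets = [black_box(r) for r in rows]  # synthetic labels, so baseline error is zero

importances = permutation_importance(black_box, rows, targets, 3, rng)

# Crude text "visualization": one bar per feature, scaled to the largest importance.
largest = max(importances)
for j, value in enumerate(importances):
    bar = "#" * round(30 * value / largest)
    print(f"x{j}: {bar} {value:.3f}")
```

The longest bar marks the input the model relies on most, and an input the model ignores gets no bar at all. In practice a plotting library and a trained model would take the place of the text bars and the toy function.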

Collaboration is Key

Moreover, the solutions to these challenges hinge on collaboration. Bridging the gap between technical developers and domain experts allows for interdisciplinary approaches to model design. Workshops, conferences, and open-source platforms provide fertile ground for these collaborations, fostering innovation that might otherwise remain hidden.

Finally, education plays a crucial role. By training the next generation of data scientists to prioritize interpretability and transparency from the outset, we pave the way for a future in which AI systems are robust, fair, and comprehensible. It’s a blend of excitement and necessity as we march toward an AI-centric future—collaborative, transparent, and deeply human-centered.

The Empirical Evidence

Research points to real benefits from model interpretability and transparency. Studies suggest that interpretable models are especially valuable in high-stakes decision-making, and in some cases yield better outcomes than their opaque counterparts. In healthcare, for example, interpretable models have been reported to improve diagnostic accuracy while reducing incorrect predictions.

Future Implications

As AI continues to permeate various facets of life, the implications of model interpretability and transparency extend beyond individual use cases. There’s potential for a profound shift in how we perceive and relate to AI systems—as trusted partners rather than enigmatic machines. The quest for interpretability and transparency is not just a technical challenge; it’s a societal one.

Pioneering businesses, institutions, and governments acting as AI torchbearers will set the standards for a future bound by ethics and efficiency. The roadmap is clear: prioritize transparency and interpretability, and the benefits will undoubtedly follow. Embarking on this journey promises rewards that extend beyond technology—creating a more trustworthy, fair, and enlightened society.

—

Key Aspects of Model Interpretability and Transparency

The Need for Transparent AI Communication

In conclusion, model interpretability and transparency are pivotal to the ethical and effective deployment of AI technologies. As we stand on the cusp of a future intertwined with advanced AI systems, we must carry forward the lessons of clarity and openness. The commitment to transparency fosters a culture of trust between technology and society, a bond that strengthens as AI continues to evolve.

In the long run, the need for transparent communication in AI will only grow, becoming a standard rather than an exception. Armed with clear insights into AI’s inner workings, stakeholders—from individual users to large enterprises—can make better-informed decisions. This not only ensures the responsible use of technology but also paves the way for continuous breakthrough innovations, ultimately serving humanity’s best interests.

—

By embracing these discussions, analyses, and narratives around model interpretability and transparency, we engage in a dialogue that reshapes our relationship with technology. It is a dialogue marked by curiosity, responsibility, and a shared vision for a future where AI systems enhance rather than hinder the human experience.