In a data-driven world, a predictive model is only as valuable as the decisions it supports. As businesses and innovations lean increasingly on data-driven decisions, the science behind evaluating those models becomes paramount. Enter the hero of our narrative: model evaluation using cross-validation techniques. This methodology checks that a model not only performs well on the data it was trained on but also generalizes to unseen data. Imagine building a bridge that stands tall during a calm-weather test, only to crumble in the first real storm. Cross-validation helps catch that kind of failure before a model is deployed.


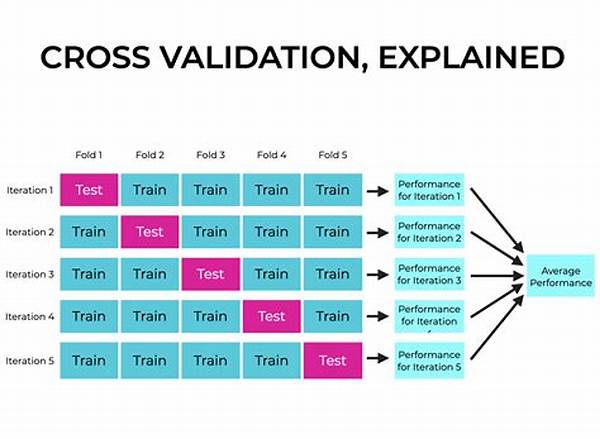

At its core, model evaluation using cross-validation techniques revolves around dividing the dataset into subsets, training the model on some and validating on the others. The classic technique is k-fold cross-validation, where the dataset is split into ‘k’ subsets, or folds, of roughly equal size. The model is then trained ‘k’ times, each time using a different fold as the validation set and the remaining folds as the training set, and the ‘k’ validation scores are averaged into a single performance estimate. Think of it as a rigorous treadmill test for your model: the test does not make the model fitter, but it shows whether it is a one-hit wonder or a consistent performer across the board.
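The k-fold procedure described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the helper names `k_fold_indices` and `run_k_fold`, the toy data, and the mean-predicting "model" are all assumptions made for the example.

```python
import random
from statistics import mean

def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs, one pair per fold."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once so folds are random
    # Spread samples evenly: the first (n_samples % k) folds get one extra.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size

def run_k_fold(xs, ys, fit, score, k=5, seed=0):
    """Train k times, validating on a different fold each time."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k, seed):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        scores.append(score(model, [xs[i] for i in val_idx], [ys[i] for i in val_idx]))
    return scores

# Hypothetical toy model: always predict the mean of the training targets.
def fit_mean(xs, ys):
    return mean(ys)

def mean_abs_error(model, xs, ys):
    return mean(abs(y - model) for y in ys)

xs = list(range(10))
ys = [2.0 * x + 1.0 for x in xs]
fold_scores = run_k_fold(xs, ys, fit_mean, mean_abs_error, k=5)
print("per-fold error:", fold_scores)
print("averaged error:", mean(fold_scores))
```

The averaged error is the number usually reported; the spread of the per-fold errors is worth a look too, since a large spread suggests the estimate is sensitive to how the data was split.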

The appeal of model evaluation using cross-validation techniques lies in its thoroughness. A single train-test split judges a model on one arbitrary slice of the data, so the result can swing with the luck of the split, much like rating an athlete on a single race. In k-fold cross-validation, every observation is used for validation exactly once, so the averaged score depends far less on any one split. This reduces the risk of unexpected pitfalls and gives more confidence when deploying models to drive business decisions or technological advancements.

In an emotionally charged domain of decision-making, these techniques play the role of the rational, wise elder, grounding model choices in statistically sound evidence. Teams in HR, healthcare, finance, and retail commonly rely on cross-validation when a wrong prediction carries a real cost, whether that cost is measured in lost revenue or in patient outcomes.

Benefits of Cross-Validation Techniques

When it comes to model evaluation using cross-validation techniques, the advantages stretch beyond a single accuracy figure. Cross-validation does not prevent overfitting by itself, but it exposes it: a model that scores well on its training folds and poorly on the held-out folds has memorized rather than learned. Like steady supervision from a seasoned coach, it leaves no part of the data untested. This testing doesn’t just highlight strengths; it reveals weaknesses, so they can be fixed before a model goes into production.
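One way to see how cross-validation reveals overfitting is to compare training error with validation error on each fold. The sketch below uses a deliberately overfit, hypothetical "memorizer" model and made-up toy data; the function names and the simple interleaved fold assignment are assumptions chosen to keep the example short.

```python
from statistics import mean

def fit_memorizer(xs, ys):
    """A deliberately overfit model: memorize every training pair."""
    return {"table": dict(zip(xs, ys)), "fallback": mean(ys)}

def predict(model, x):
    # Seen inputs return the memorized answer; unseen inputs get the fallback.
    return model["table"].get(x, model["fallback"])

def mean_abs_error(model, xs, ys):
    return mean(abs(y - predict(model, x)) for x, y in zip(xs, ys))

xs = list(range(12))
ys = [float(x % 4) for x in xs]  # toy target, purely illustrative

k = 3
train_errors, val_errors = [], []
for fold in range(k):
    # Simple interleaved folds: sample i belongs to fold i % k.
    val_idx = [i for i in range(len(xs)) if i % k == fold]
    train_idx = [i for i in range(len(xs)) if i % k != fold]
    train_x, train_y = [xs[i] for i in train_idx], [ys[i] for i in train_idx]
    val_x, val_y = [xs[i] for i in val_idx], [ys[i] for i in val_idx]
    model = fit_memorizer(train_x, train_y)
    train_errors.append(mean_abs_error(model, train_x, train_y))
    val_errors.append(mean_abs_error(model, val_x, val_y))

print("training error per fold:  ", train_errors)
print("validation error per fold:", val_errors)
```

The memorizer is perfect on the data it has seen and noticeably worse on every held-out fold. That gap between the two error lists is the signal to look for with real models.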

—

Unveiling the Magic of Cross-Validation in Model Evaluation

Data is not merely the “new oil”; it’s the lifeblood flowing through the veins of contemporary decision-making. In the vibrant and dynamic landscape of data science, one concept has come to prominence that both intrigues and reassures: model evaluation using cross-validation techniques. It’s the unsung hero of the data world, often working behind the scenes to ensure that the models we trust to make critical decisions are both accurate and reliable.

The journey of data begins far from the spotlight: gathered, cleaned, and prepped for action. It’s not unlike assembling an orchestra, each piece crucial to the overall harmony. But what use is a virtuoso pianist if their performance is inconsistent? Enter cross-validation, the seasoned conductor who checks that every model plays its part consistently across different subsets of the data. Cross-validation does not change the data or the model; it tells you which models are ready to perform under the bright lights of real-world application.

When discussing model evaluation using cross-validation techniques, one must note its ability to wring maximum value from a dataset: every observation serves as training data in some rounds and as validation data in another, which matters most when data is scarce. Picture a rigorous boot camp where models are stress-tested across multiple splits, checking that they predict well not only on training data but also on data they have not seen. The result is not a guarantee of flawless performance, but a more trustworthy estimate of what to expect when the curtain rises.

Cross-Validation: The Secret Ingredient

The practicality of cross-validation lies in its adaptability. In k-fold cross-validation, the number of folds trades computation against thoroughness, and leave-one-out cross-validation takes this to the extreme: each round holds out a single observation and trains on all the rest, so a dataset of n observations requires n training runs. Every round is a test of the model’s consistency and robustness. These techniques, akin to spices in a master chef’s pantry, give cross-validation a balance of flexibility and thoroughness that makes it a staple of the data scientist’s repertoire.
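Leave-one-out is the special case of k-fold where the number of folds equals the number of observations. A minimal sketch, assuming a hypothetical helper `leave_one_out_indices` and a toy "model" that predicts the mean of the remaining values:

```python
from statistics import mean

def leave_one_out_indices(n_samples):
    """Yield (train_idx, val_idx) with exactly one held-out sample per round."""
    for held_out in range(n_samples):
        train_idx = [i for i in range(n_samples) if i != held_out]
        yield train_idx, [held_out]

# Toy data (illustrative only): predict each held-out value
# with the mean of all the other values.
ys = [3.0, 5.0, 4.0, 6.0]
errors = []
for train_idx, val_idx in leave_one_out_indices(len(ys)):
    prediction = mean(ys[i] for i in train_idx)
    errors.append(abs(ys[val_idx[0]] - prediction))

loo_error = mean(errors)
print(f"{len(errors)} rounds, mean absolute error = {loo_error:.3f}")
```

Because the number of training runs grows with the dataset, leave-one-out is usually reserved for small datasets; for larger ones, k-fold with a modest k is the more common compromise.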

Delving Deeper with Cross-Validation

As the tides of technology and demand pull us into uncharted waters, the need for dependable data models surges. Model evaluation using cross-validation techniques offers a sturdy lifeline, providing evidence of how reliable a model is likely to be. Professionals across industries treat it as a standard safeguard, one that checks whether a model that promises the stars can actually deliver.

—


Discussion Topics on Cross-Validation Techniques

Embracing Cross-Validation for Accurate Model Evaluation

Cross-validation is a staple in the toolkit of every effective data scientist, quietly checking that reported model performance is authentic. It helps ensure that the predictions made by models aren’t mere hallucinations of a fevered data dream but measured, dependable forecasts rooted in robust validation processes.

Insights into Cross-Validation Techniques

This evaluative shield is more than mere academic flair; it’s an operational necessity. As industries become increasingly data-driven, models must keep performing when new data arrives. Cross-validation does not make a model adaptive, but it shows how performance varies from one subset of the data to another, which is a useful preview of how the model will behave across different data environments.

The Practical Magic of Cross-Validation

Imagine a tailor who checks that a suit fits not only in the store but also wherever you may venture. This is how cross-validation functions for models. Whether dealing with stock market predictions or patient outcomes in healthcare, the assurance that a model has been tried and tested across multiple splits of the data is an invaluable asset, one that instills confidence and drives forward momentum.

Furthermore, adopting cross-validation can shift how a team works. Because every model must earn its score on held-out data, complacency is harder to sustain, and teams are pushed towards continuous improvement. Model evaluation using cross-validation techniques encourages a data-driven mindset, helping companies navigate the intricacies of their respective industries.

Tips for Effective Cross-Validation Implementation

Ensuring successful cross-validation requires deliberate strategy and insightful execution.

Implementing cross-validation techniques effectively begins with a solid understanding of your dataset. It’s crucial to choose the method that fits your data and use case: stratified folds preserve class proportions when classes are imbalanced, and time-ordered splits avoid training on the future when observations are sequential. Cross-validation techniques, when deployed wisely, minimize risk, highlighting areas for improvement and yielding insights that drive innovation and efficiency across operations.
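As one example of matching the method to the data, stratified folds keep the class mix in every fold close to the class mix of the full dataset, which matters when one class is rare. The sketch below is a simplified illustration, not a reference implementation: `stratified_k_fold_indices` is a hypothetical helper and the 16-to-4 label split is made up.

```python
import random
from collections import Counter, defaultdict

def stratified_k_fold_indices(labels, k, seed=0):
    """Yield (train_idx, val_idx) so each fold roughly mirrors the class mix."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    for members in by_class.values():
        rng.shuffle(members)
        # Deal each class's samples round-robin across the folds.
        for position, idx in enumerate(members):
            folds[position % k].append(idx)

    for i in range(k):
        val_idx = sorted(folds[i])
        train_idx = sorted(idx for j, fold in enumerate(folds) if j != i for idx in fold)
        yield train_idx, val_idx

# Imbalanced toy labels (illustrative): 16 negatives, 4 positives.
labels = ["neg"] * 16 + ["pos"] * 4
for train_idx, val_idx in stratified_k_fold_indices(labels, k=4):
    print(Counter(labels[i] for i in val_idx))
```

With plain random folds on data this imbalanced, some folds could end up with no positive examples at all, which would make the validation score for that fold meaningless. Dealing each class out separately avoids that. This simplified version always starts dealing at the first fold, so fold sizes can drift apart when class counts are not multiples of k.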

Progress isn’t just about building faster algorithms or harvesting endless datasets; it’s about refining these tools to offer consistent, reliable insights. Model evaluation using cross-validation techniques gives your data models the rigorous testing they need before they face unpredictable environments, paving the way for sustained success.

—

Used well, cross-validation techniques give decision-makers a better-grounded estimate of how a model will perform before it is deployed, keeping them a step ahead in their strategic endeavors.