Long Short-Term Memory (LSTM) networks are a type of recurrent neural network (RNN) that is particularly effective at modeling sequences and time series data. If you’re at the intersection of curiosity and ambition, eager to uncover the secrets of LSTM architectures, you are in for a treat. Think of this article as your backstage pass to understanding one of the most significant breakthroughs in deep learning. Whether you’re a fledgling in the world of data science or a seasoned expert looking to brush up on the technical intricacies, understanding the LSTM cell structure is your ticket to comprehending how machines remember information across long sequences.

Why are LSTMs celebrated in the tech community? They can selectively keep or discard information, which gives them a kind of “memory.” This is akin to striking the perfect balance between reminiscing about your memorable high school performance and forgetting your last awkward social mishap. In an age where data is the new oil, being able to process and recall valuable information efficiently can give industries a real edge. From speech recognition systems that transcribe speech in near real time to smart assistants guiding you through your daily grind, LSTMs have operated quietly in the background of many such technologies. Imagine the possibilities that unfold when you have these giants working on your side!

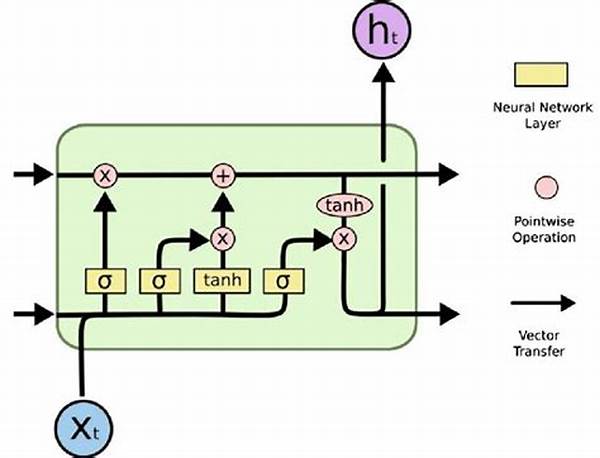

Interestingly, LSTMs emerged at a time when traditional RNNs faltered. Those networks suffered from short-term memory issues caused by vanishing and exploding gradients, which made long-term dependencies hard to learn. The LSTM’s cell structure largely mitigates the vanishing-gradient side of this problem. It’s like the aha moment when a detective finally finds the missing piece of a complex puzzle. The magic lies in a gating mechanism that carefully regulates the flow of information.
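To get a feel for why gradients vanish or explode, consider a toy sketch in which the gradient is scaled by the same factor at every time step. This is a deliberate simplification: a real RNN multiplies by matrices that change from step to step. The function name and the factors 0.9 and 1.1 are illustrative assumptions, not values from any particular model.

```python
def gradient_scale(recurrent_factor: float, steps: int) -> float:
    """Scale of a gradient after being multiplied by the same factor at each step."""
    scale = 1.0
    for _ in range(steps):
        scale *= recurrent_factor
    return scale


# A factor slightly below 1 shrinks the gradient toward zero over 100 steps,
# while a factor slightly above 1 blows it up.
vanishing = gradient_scale(0.9, 100)
exploding = gradient_scale(1.1, 100)

print(f"factor 0.9 over 100 steps: {vanishing:.3e}")
print(f"factor 1.1 over 100 steps: {exploding:.3e}")
```

Even a small deviation from 1 compounds quickly, which is why a plain RNN has trouble linking an error at the end of a long sequence to an input near its start.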

Move over to the more technical lanes, and you’ll encounter terms like ‘forget gates,’ ‘input gates,’ and ‘output gates.’ Although they may sound like fancy tech jargon at first, they are the heroic triad doing the heavy lifting inside an LSTM cell. These gates work in tandem to manage the cell state. Think of it as a symphony orchestra performing a masterpiece: each musician knows precisely when to play and when to pause. Buckle up as we plunge into the nitty-gritty of each component in our forthcoming articles.
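For readers who want to see the three gates in symbols right away, the commonly used formulation of an LSTM cell can be written as below. The notation is the conventional one and is an assumption here, not something defined in this article: $x_t$ is the input at step $t$, $h_t$ the hidden state, $c_t$ the cell state, $\sigma$ the logistic sigmoid, $\odot$ element-wise multiplication, and $W$, $b$ learned weights and biases.

```latex
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) && \text{(forget gate)} \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) && \text{(input gate)} \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) && \text{(output gate)} \\
\tilde{c}_t &= \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) && \text{(candidate values)} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{(cell state update)} \\
h_t &= o_t \odot \tanh(c_t) && \text{(hidden state)}
\end{aligned}
```

Because each gate is a sigmoid, its values lie between 0 and 1, so it acts as a soft switch on whatever it multiplies.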

LSTM: The Underlying Genius

The beauty of LSTMs lies in their intricately designed architecture, which enables them to remember long-term dependencies. Let’s break down these components and demystify their functions within the LSTM framework. The ever-watchful forget gate decides which information in the cell state is no longer relevant and should be dropped, the input gate determines which new data is admitted to the cell state, and the output gate controls how much of the cell state is exposed at each step. Each part contributes to a single goal: empowering machines to carry relevant information across many time steps.
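The roles of the gates can be sketched as one forward step of a single-unit LSTM cell in plain Python. This is a minimal illustration under stated assumptions, not a production implementation: the input and states are scalars, and the function names, the `params` layout, and the hand-picked weights are all hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One forward step of a single-unit LSTM cell with scalar input and state.

    params maps each name ("f", "i", "o", "g") to a hypothetical
    (input_weight, recurrent_weight, bias) triple.
    """
    def pre_activation(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("f"))    # forget gate: how much old state to keep
    i = sigmoid(pre_activation("i"))    # input gate: how much new data to admit
    o = sigmoid(pre_activation("o"))    # output gate: how much state to expose
    g = math.tanh(pre_activation("g"))  # candidate value to write
    c = f * c_prev + i * g              # updated cell state
    h = o * math.tanh(c)                # updated hidden state
    return h, c


# Hand-picked weights (assumptions): large biases push a gate close to 0 or 1.
keep_params = {"f": (0, 0, 10), "i": (0, 0, -10), "o": (0, 0, 10), "g": (1, 0, 0)}
overwrite_params = {"f": (0, 0, -10), "i": (0, 0, 10), "o": (0, 0, 10), "g": (1, 0, 0)}

_, c_keep = lstm_step(x=1.0, h_prev=0.0, c_prev=0.8, params=keep_params)
_, c_new = lstm_step(x=1.0, h_prev=0.0, c_prev=0.8, params=overwrite_params)
print(f"forget gate open, input gate closed: c = {c_keep:.4f}")
print(f"forget gate closed, input gate open: c = {c_new:.4f}")
```

With the forget gate open and the input gate closed, the cell state stays close to its previous value of 0.8. With the settings reversed, it is replaced by the candidate value, which is close to tanh(1).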

As the LSTM balances these tasks, it forms short-term memories, decides which memories to keep or erase, and uses what it has retained to predict future values. Imagine a juggling act where each ball represents a piece of data and the LSTM is the adept juggler deciding which balls to keep in the air and which to set down. This precision has made LSTMs widely used in various domains, from language processing to time-series prediction.

In the ever-evolving landscape of artificial intelligence, where rapid change is the only constant, LSTM stands out for its robustness and adaptability. Consider it your digital storyteller whose lines are crafted from data rather than words. Unlike traditional RNNs that struggle with longer sequences, LSTMs thrive due to their sophisticated gating mechanisms. These gates manage the intricate dance of data within the network, relentlessly working toward mastering context and sequence comprehension.

At the heart of these operations is an algorithm loosely inspired by the biological neural networks in our brains. By adopting LSTM-based methods, industries have made significant leaps in digital transformation. With the know-how you gain from an in-depth look at the LSTM cell structure, imagine the analytic prowess and predictive accuracy you could harness. The opportunities to apply this knowledge are broad, from revolutionizing customer service with chatbots to enhancing financial forecasting models.

Understanding the ingenuity behind LSTM networks opens doors to a realm of innovation and creativity where data is not just processed but transformed into tactical insights. The concepts can intrigue a curious mind as they reveal the complexities of how machines interpret sequences. Dive into this knowledge reservoir and equip yourself with formidable skills that could lead you to your next great achievement in tech innovation!

LSTM Cell Structure Explanation: How the Gates Shape Memory

Delve into the captivating world of Long Short-Term Memory networks, where the complexities of temporal sequence learning unfold. Traditional recurrent neural networks, once pioneers in handling sequential data, often stumbled over long-range dependencies. Enter LSTMs, the knights in shining armor arising from the depths of machine learning laboratories. The introduction of gates within the LSTM architecture changed the landscape. Each gate regulates the flow of information, allowing the network to selectively commit chunks of information to long-term memory or expunge them altogether. This strategic curation of data gives LSTMs a remarkable ability to recall pertinent information over extended sequences.
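The idea of committing a value to memory and later expunging it can be sketched with the cell-state update alone. In this simplified example the gate values are set by hand rather than learned, and the candidate value is the raw input without a tanh. The function name and all numbers are illustrative assumptions.

```python
def run_memory(inputs, write_gates, forget_gates):
    """Apply c = forget * c + write * x over a sequence and return the final state."""
    c = 0.0
    for x, write, forget in zip(inputs, write_gates, forget_gates):
        c = forget * c + write * x
    return c


steps = 200
inputs = [0.7] + [0.3] * (steps - 1)       # the first value is the one worth remembering
write_gates = [1.0] + [0.0] * (steps - 1)  # admit only the first input
keep_forever = [1.0] * steps               # never erase the stored value
erase_at_end = [1.0] * (steps - 1) + [0.0] # expunge it on the final step

stored = run_memory(inputs, write_gates, keep_forever)
erased = run_memory(inputs, write_gates, erase_at_end)
print(f"after {steps} steps, kept: {stored}, erased: {erased}")
```

The stored value survives 199 further steps untouched because the forget gate stays at 1 and the write gate stays at 0. In a trained LSTM the gates take soft values between 0 and 1 that depend on the data, so retention is approximate rather than exact.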

Imagine for a moment a complex dance choreography, where each dancer (data point) is perfectly synchronized, seamlessly continuing the narrative regardless of how long the dance number becomes. This analogy captures how LSTMs operate: they carry relevant memory forward through the sequence instead of losing it along the way. From detecting patterns in financial trends to comprehending the subtleties of human language, LSTMs have been behind many technological breakthroughs.

The utility of LSTMs extends beyond merely processing data; they helped usher in an era where machines can track context and discern sentiment. As industries deepen their foray into data-driven decision-making, proficiency with LSTMs becomes increasingly valuable. Your journey into the LSTM cell structure is not just an academic endeavor but a strategic investment in a future where data-driven insights lead the way to innovation.

The Prowess of LSTM Technology

Understanding the LSTM cell structure enriches your comprehension of what makes these networks crucial. You’re not just diving into technicalities but exploring a piece of technological progress that changed how sequential data is interpreted. Whether you are developing next-generation AI solutions or enhancing current predictive models, grasping the nuances of LSTM mechanisms is a game-changer. It’s an adventure rife with potential, promising developments that could shape the technological tapestry of tomorrow.