Improving AI Accountability Measures

—

Artificial Intelligence (AI) is no longer just the domain of science fiction; it’s reshaping industries, enhancing efficiencies, and enriching human capabilities like never before. However, as AI systems become increasingly autonomous, the need for improving AI accountability measures becomes paramount. These measures are necessary to ensure that AI technologies are designed and deployed responsibly, ethical boundaries are respected, and any unintended consequences are promptly addressed.

From streamlining tasks to providing insights and driving innovation, AI has far-reaching potential. However, its misuse or malfunction can lead to serious problems: privacy infringements, biased outputs, and even malicious activity. This is why improving AI accountability measures isn’t just a suggestion; it’s a necessity. Consider it the safety net in a high-wire act, catching us should something go awry.

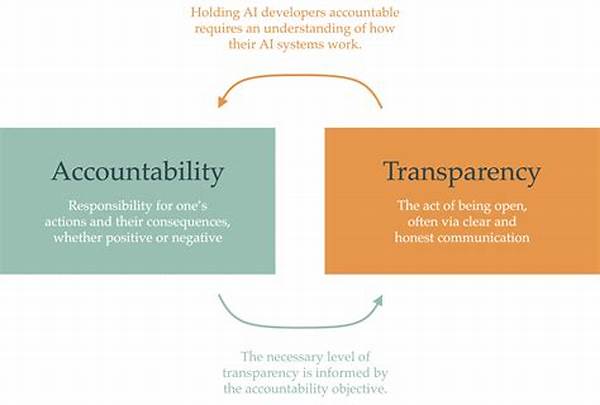

But how do we ensure that AI remains aligned with human ethics and values? The answer lies in a robust framework of accountability, transparency, and governance. These components are the cornerstones upon which we can build AI systems that are not only powerful but also trustworthy. Just as superheroes need their sidekicks, AI systems need oversight grounded in strong accountability measures to perform at their best while avoiding pitfalls.

The Path Toward Improved Accountability

In this ever-evolving digital landscape, where AI technology is intertwined with our daily lives, sometimes in ways we don’t even realize, the emphasis on improving AI accountability measures has never been more critical. Think of it as a friendly nudge that AI developers and users must take seriously.

In-depth research, rigorous legislative frameworks, and ongoing public debates all play pivotal roles in this transformation. By pooling together intellect, empathy, and responsibility, stakeholders from various sectors can collaboratively draft guidelines that serve as a moral compass for AI usage. This collaborative effort helps not only in recognizing potential threats but also in crafting tailor-made solutions, making these technologies safer and more reliable.

The journey towards improvement isn’t a solitary endeavor. It is a collaborative crusade involving engineers, ethicists, lawmakers, and society at large. Improving AI accountability measures is far from a one-size-fits-all proposition; it’s a custom-tailored suit stitched to fit the unique contours of each AI application, ensuring it behaves in line with human values and expectations.

—

Structuring AI Accountability: A Comprehensive Approach

Creating a structured approach to improving AI accountability measures is pivotal to maintaining trust in AI technologies. Putting these measures into practice involves engaging stakeholders, identifying ethical concerns, and executing strategic actions.

AI systems must integrate ethical frameworks that address issues like data privacy, bias, and transparency. This ethical backbone acts as both shield and guide, protecting users while steering AI development in an equitable direction.

An effective framework for improving AI accountability measures incorporates real-world case studies and lessons from previous AI failures. Learning from past mistakes is key to building robust systems. Developers who remain vigilant about potential risks and take preventive measures can help keep history from repeating itself.

Bringing stakeholders together is critical. Regulatory bodies, businesses, and the tech community must join forces to enforce standards, paving the way for an AI ecosystem that is both innovative and accountable.

Ethical Frameworks: The Roadmap to Accountability

Ethical frameworks aren’t just theoretical; they are actionable guidelines that ensure AI development remains principled and people-centric. By embedding these frameworks, developers can anticipate challenges, mitigate risks, and effectively navigate the complex landscape of AI.

A major aspect of improving AI accountability measures involves regular audits and assessments. These evaluations provide up-to-date insights into AI performance, highlighting areas for improvement and checking compliance with ethical standards.
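One way to make such an audit concrete is to compare how often a system produces a favorable outcome for different groups. The sketch below is illustrative only: the function names `selection_rates` and `audit_parity`, the 0.1 gap threshold, and the sample records are all assumptions made for this example, not part of any standard or of a specific framework discussed here.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes per group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    True for a favorable decision. This record shape is hypothetical.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def audit_parity(records, max_gap=0.1):
    """Fail the audit when group selection rates differ by more than max_gap.

    The default threshold is an arbitrary illustrative value.
    """
    rates = selection_rates(records)
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "passed": gap <= max_gap}


# Illustrative data only: group A is approved 3 of 4 times, group B 1 of 4.
sample = [("A", True), ("A", True), ("A", True), ("A", False),
          ("B", True), ("B", False), ("B", False), ("B", False)]
report = audit_parity(sample)
print(report["passed"])
```

A real audit would likely use more than one metric and far more data; a single gap in selection rates is a starting signal for closer review, not a verdict on its own.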

—

Ethical Implications of AI Accountability

In addressing the need for improving AI accountability measures, ethical considerations are paramount. The incorporation of ethical principles serves as a guidepost for development processes and usage protocols. It balances technological innovation with human rights and societal norms.

The crux of improving AI accountability measures lies in policy formulation, data handling, and human oversight. These elements must work together in a cohesive strategy that helps ensure AI acts beneficially and adheres to ethical standards.
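Human oversight, one of the elements named above, is often put into practice by sending uncertain decisions to a person instead of acting on them automatically. The following is a minimal sketch under stated assumptions: the `Decision` class, the `route` function, and the 0.9 confidence threshold are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    label: str
    confidence: float  # assumed to be the model's score in [0, 1]


def route(decision, threshold=0.9):
    """Return 'auto' for confident decisions and 'human_review' otherwise.

    The threshold is an illustrative assumption; in practice it would be
    set by policy and revisited as the system is audited.
    """
    if decision.confidence >= threshold:
        return "auto"
    return "human_review"


# Hypothetical cases: one confident decision, one uncertain decision.
queue = [Decision("c1", "approve", 0.97), Decision("c2", "deny", 0.62)]
routes = {d.case_id: route(d) for d in queue}
print(routes)
```

The design choice here is that the threshold lives outside the model, so policy makers can tighten or relax human review without retraining anything.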

Developers and regulators need to continually adapt and refine AI accountability frameworks to keep pace with the rapid evolution of AI technologies. Transparency in AI operations can offer a layer of reassurance that these technologies are being used responsibly.

Strategies for Implementation

By maintaining vigilance and ethical diligence, AI can evolve into a positive force that significantly improves human life while respecting individual rights and societal values.

—

This exploration of improving AI accountability measures aims to offer the balance and methods needed to shape a future that is as accountable as it is innovative. Readers are welcome to engage with this content and share their own stories and insights on the journey toward a more accountable AI environment.