Impact of Overfitting in Models

In the fast-evolving world of machine learning and data science, creating the perfect model can feel like chasing a mythical creature through a dense, bewildering forest. The excitement of finding patterns in complex datasets is akin to discovering hidden treasures. But beware! For lurking in the shadows is an old foe – overfitting. Just like a mystery villain in a crime thriller, overfitting waits to sabotage your model’s performance, leaving you scratching your head, wondering where it all went wrong. Understanding the impact of overfitting in models is crucial for anyone who wants their data-driven projects to succeed.

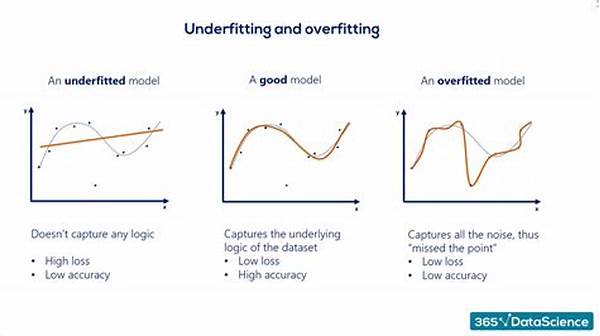

Overfitting occurs when a model learns the training data too well, capturing noise along with the underlying pattern, like an overenthusiastic student who memorizes every word without understanding the content. The result is a model that performs brilliantly on training data but fails spectacularly on new, unseen data: a classic case of “all style, no substance.”
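To make the memorizing student concrete, here is a minimal sketch in plain Python. Everything in it is an assumption chosen for illustration: the synthetic data (a line with Gaussian noise), the helper names, and the choice of a 1-nearest-neighbour lookup as the "memorizer". It compares that memorizer with a simple least-squares line on training data and on fresh data.

```python
import random

def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b (closed form, one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda x: a * x + b

def fit_memorizer(xs, ys):
    """1-nearest-neighbour: answer with the label of the closest training point."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def mse(model, xs, ys):
    """Mean squared error of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def make_data(n, rng):
    # Hypothetical ground truth: y = 2x + 1 plus Gaussian noise (std 1).
    xs = [rng.uniform(0, 10) for _ in range(n)]
    ys = [2 * x + 1 + rng.gauss(0, 1) for x in xs]
    return xs, ys

rng = random.Random(0)
train_x, train_y = make_data(200, rng)
test_x, test_y = make_data(200, rng)

memorizer = fit_memorizer(train_x, train_y)
line = fit_line(train_x, train_y)

memo_train = mse(memorizer, train_x, train_y)
memo_test = mse(memorizer, test_x, test_y)
line_train = mse(line, train_x, train_y)
line_test = mse(line, test_x, test_y)

print(f"memorizer  train MSE={memo_train:.3f}  test MSE={memo_test:.3f}")
print(f"line fit   train MSE={line_train:.3f}  test MSE={line_test:.3f}")
```

The memorizer reproduces every training label, so its training error is zero, yet it carries the training noise into its answers on new points. The line cannot memorize anything, so its training and test errors should sit much closer together. That gap between training and test error is the practical symptom to watch for.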

Imagine spending weeks or months refining a model, only to discover it collapses when faced with reality. The disappointment is like baking a grand, multi-layer cake only to have it deflate in the oven. But fear not! Understanding the impact of overfitting in models is your first weapon in the arsenal against this common pitfall. By the end of this article, not only will you see overfitting as a challenge you can overcome, but you’ll also explore strategies to create robust models that generalize well, even in the face of variable real-world data.

Understanding the Pitfalls of Overfitting

Overfitting might initially seem like a compliment – your model impressively captures the complexities of the data. But look closer, and you’ll see the trouble. Much like a first date who tries to impress by recounting every movie they have ever watched, your model might be charming but ultimately exhausting and ineffective.

Now, let’s dive deeper. An overfit model is like a detective who fixates on tiny, irrelevant details while missing the broader pattern of the crime scene. Such a model gleefully tackles the training dataset, solving its mysteries effortlessly, but stumbles in confusion when it’s time to handle new clues.

To mitigate the impact of overfitting in models, practitioners must strive for a balance between complexity and simplicity. Think of it as the sweet spot during a comedy act where jokes are well-timed without being over the top. Armed with knowledge, you can take action to enhance your models, ensuring they don’t just boast theoretical prowess but demonstrate tangible, practical wisdom.

—

Objectives and Insights on Overfitting

Grasping the consequences of overfitting isn’t just an academic exercise; it’s imperative for any machine learning project aiming for success. Imagine embarking on a journey to Mars. You wouldn’t want a shuttle whose calculations were precisely right on Earth to fall apart halfway to the red planet. Similarly, machine learning models must stay robust once they encounter new data.

As data scientists, understanding the nature of overfitting is akin to mechanics diagnosing engine problems. Without this fundamental understanding, your chance of achieving desired outcomes is slim. The impact of overfitting in models can reduce efficiency, waste resources, and, importantly, tarnish the credibility of your insights.

Combat Overfitting: From Theory to Practice

To balance complexity and simplicity, regularization techniques become your best friend. Methods like Lasso and Ridge add a penalty on large coefficients to the training objective: Lasso penalizes their absolute values (an L1 penalty) and can shrink some coefficients all the way to zero, while Ridge penalizes their squares (an L2 penalty) and shrinks them smoothly. They encourage the model to keep it real, stripping it back to its essentials and preventing it from being bogged down by trivial quirks in the data.
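As a rough illustration of how these penalties pull a coefficient toward zero, the sketch below solves the one-feature, no-intercept case in closed form. The function names, the toy data, and the particular scaling of the penalty term are assumptions made for this example; libraries often scale the penalty differently.

```python
import math

def ols_weight(xs, ys):
    """Unpenalised least-squares slope for y = w*x (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / sxx

def ridge_weight(xs, ys, lam):
    """Minimise 0.5*sum((y - w*x)^2) + 0.5*lam*w^2 (L2 penalty)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def lasso_weight(xs, ys, lam):
    """Minimise 0.5*sum((y - w*x)^2) + lam*|w| (L1 penalty) by soft-thresholding."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    shrunk = max(abs(sxy) - lam, 0.0)  # pull toward zero, never past it
    return math.copysign(shrunk, sxy) / sxx

# Toy data, roughly y = 2x (illustrative values only).
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

print("OLS            :", ols_weight(xs, ys))
print("Ridge, lam=10  :", ridge_weight(xs, ys, 10.0))
print("Lasso, lam=10  :", lasso_weight(xs, ys, 10.0))
print("Lasso, lam=100 :", lasso_weight(xs, ys, 100.0))
```

On this toy data the unpenalised slope is about 1.99, Ridge with `lam=10` shrinks it to about 1.49, Lasso with `lam=10` to about 1.66, and Lasso with `lam=100` sets it to exactly zero. That last case is why Lasso is often described as doing feature selection, while Ridge only ever shrinks.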

Cross-validation forms another shield against overfitting. The data is split into several folds; the model is trained on all but one fold and evaluated on the fold held out, rotating until every fold has served as the test set. By rigorously testing models this way before deployment, similar to software stress testing, you can estimate how they will behave on data they have not seen. In marketing terms, it’s like focus-group testing before launching a product: indispensable for tweaking and refining your masterpiece.
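The rotation through folds can be sketched in a few lines of plain Python. This is one possible k-fold arrangement under simplifying assumptions: the folds are contiguous slices, the model is a one-feature least-squares line, and the helper names and toy data are made up for the example. In practice the data is usually shuffled before splitting.

```python
def k_fold_indices(n, k):
    """Yield (train_idx, val_idx) pairs that partition range(n) into k folds."""
    # The first n % k folds get one extra item so every index is used once.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n))
        yield train_idx, val_idx
        start += size

def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b (closed form, one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda x: a * x + b

def cross_val_mse(xs, ys, k):
    """Train on k-1 folds, score on the held-out fold, once per fold."""
    scores = []
    for train_idx, val_idx in k_fold_indices(len(xs), k):
        model = fit_line([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        errors = [(model(xs[i]) - ys[i]) ** 2 for i in val_idx]
        scores.append(sum(errors) / len(errors))
    return scores

# Toy data: y = 3x + 1 with a small alternating perturbation (illustrative only).
xs = [float(i) for i in range(10)]
ys = [3 * x + 1 + (0.5 if i % 2 == 0 else -0.5) for i, x in enumerate(xs)]

scores = cross_val_mse(xs, ys, k=5)
print("per-fold validation MSE:", [round(s, 3) for s in scores])
print("mean validation MSE    :", round(sum(scores) / len(scores), 3))
```

Each score comes from data the model did not see during fitting, so the average is a more honest estimate of real-world error than the training error alone. A training error far below this average is a hint that the model is overfitting.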

Realizing Robust Models

For inspiration, consider testimonials from seasoned data scientists. One accidental researcher tells a humorous tale of learning from overfitting disasters – it taught him humility and reinforced a critical mindset. His simple yet effective philosophy: don’t take data at face value. Dig deeper, question, and continuously refine the approach. The impact of overfitting in models became his learning curve.

By understanding the impact of overfitting in models, you too can evolve from mere tinkering to crafting models that stand the test of time. These strategies not only mitigate the risk but transform potential pitfalls into stepping stones to success.

Pursue Perfection with Precision

Remember, the journey to the perfect model isn’t a sprint; it’s a marathon. With each step, embrace the learning curve. With every misstep, grow wiser. As you continue to refine your approach, think of overfitting not merely as an obstacle but a teachable moment — an invaluable chapter in your data science storybook.

Strategies to Tackle Overfitting in Models

Introduction

In the realm of data science, where the enchanting allure of perfect models tempts many, a notorious villain named overfitting lurks quietly in the shadows. Embarking on a data science journey without acknowledging overfitting’s potential repercussions is like setting sail into treacherous waters without a compass. But fear not, brave explorer! This article serves as a trusty guide to unraveling the mystery of the impact of overfitting in models.

Overfitting is fundamentally about misjudging complexity. A model that clings too tightly to its training data is like a teller of tall tales who can no longer separate truth from fiction. Left unchecked, overfitting can wreak havoc, leading well-intentioned projects to far-fetched, shaky conclusions. Picture a model as an entertainer. Its job is to engage with the audience (real-world data) without fumbling over inconsequential details. Overfitting steals that charm, replacing it with awkward silences and misinterpreted jokes.

The impact of overfitting in models is not just technical jargon for academics; it has real-world consequences. Imagine financial models failing to predict market crashes or medical models missing critical diagnostic signals. Each example is a stern reminder that overfitting is a foe to be dealt with urgently. Yet, within every challenge lies the opportunity to learn and evolve.

This narrative isn’t a cautionary tale but a saga of overcoming odds and sharpening our data science armor. Within the confines of this guide, you’ll discover the tools and strategies necessary to battle overfitting, transforming potential setbacks into stepping stones toward comprehensive, resilient solutions. Ready to tackle this formidable adversary? Let’s delve deeper!

Managing Overfitting: Techniques and Tools

Models, much like artists, must avoid excessive adornments to maintain elegance and simplicity. Regularization and cross-validation, both covered earlier, are the main tools for disarming the impact of overfitting in models.

Leveraging Advanced Techniques

Understanding and mitigating overfitting is crucial for data scientists aiming for precision and accuracy. While overfitting might seem like an arcane passage in the data science narrative, acknowledging and countering it transforms the challenges into stories of innovation, perseverance, and ultimate success.

—

Illustrations to Visualize Overfitting

Overfitting is more than just a nuisance in the intricate world of machine learning; it’s a cautionary tale echoing through the digital corridors. Addressing it constructively improves accuracy and fortifies robustness across models. As we strive to perfect our modeling endeavors, overfitting’s presence reminds us to balance creativity and precision, art and science, in data handling.

Bravely confronting the impact of overfitting in models ensures that your projects glow with credibility and transparency. By employing tools and methodologies strategically, and by digging into the core causes rather than settling for a surface-level fix, you’ll foster models that don’t just perform spectacularly in controlled environments but thrive in the wild, unpredictable ebb and flow of data reality.

In overcoming overfitting, remember: it’s less about the distant, perfect goal and more about the journey through data’s wonder, which embeds lifelong knowledge and expertise. Only through this lens of understanding do we rise, improving, evolving, and conquering one dataset, model, and line of code at a time. The narrative of data science continues, enriched by these insights, ready to face the challenges of tomorrow with courage and wit.