H1: Historical Development of Neural Networks

Welcome, dear reader, to the intriguing world of neural networks, where machines learn, adapt, and sometimes even surprise us with their uncanny abilities. The tale stretches back to the 1940s, when the first whispers of artificial intelligence echoed through the halls of scholarly pursuit. Picture it: a room filled with academics whose ideas were as electrifying as the code that would one day bring them to life. That is where the historical development of neural networks begins. It is a journey marked by curiosity, setbacks, and discovery, driven by one ambitious goal: to build machines that learn, loosely inspired by the way brains do. So buckle up as we trace an evolution that tells the story of human ambition and technological advancement.

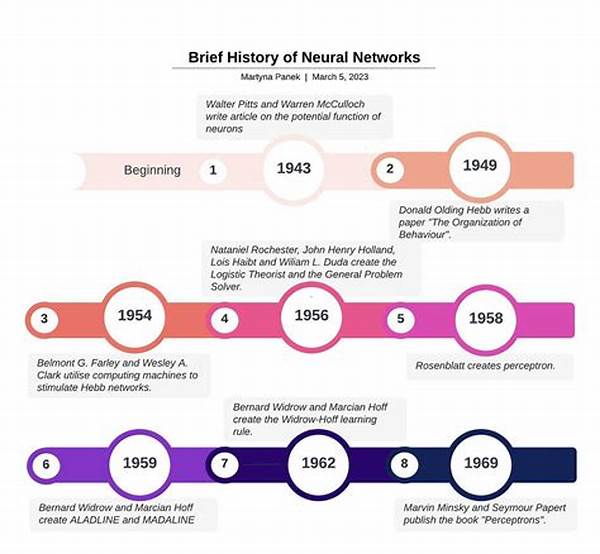

In 1943, Warren McCulloch and Walter Pitts laid the foundation for neural networks with their seminal paper “A Logical Calculus of the Ideas Immanent in Nervous Activity.” The paper modeled the neuron as a simple binary threshold unit and showed that networks of such units could compute logical functions. This was the first step toward what would later become a revolution in computational science. What they presented was not just an academic discourse but a roadmap to an uncharted future. These formal neural models launched the line of research that the rest of this story follows.
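To make the threshold idea concrete, here is a minimal sketch of a McCulloch–Pitts-style unit in Python. It is a simplification under stated assumptions: inputs are binary, weights are fixed by hand (nothing is learned), and the unit fires when the weighted sum reaches a threshold. The names `mp_neuron`, `AND`, and `OR` are hypothetical helpers chosen for illustration. The 1943 paper's own treatment of inhibitory inputs is not modeled here.

```python
def mp_neuron(inputs, weights, threshold):
    """Simplified threshold unit: fire (1) if the weighted sum of binary inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def AND(a, b):
    # Hypothetical helper: both inputs must be active to reach a threshold of 2.
    return mp_neuron([a, b], [1, 1], threshold=2)


def OR(a, b):
    # Hypothetical helper: a single active input is enough to reach a threshold of 1.
    return mp_neuron([a, b], [1, 1], threshold=1)


# Print the truth tables produced by the two hand-wired units.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", AND(a, b), "OR:", OR(a, b))
```

Changing only the threshold turns the same wiring from AND into OR. This illustrates the paper's point that logical behavior can emerge from simple threshold units.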

Fast forward to the late 1950s, when Frank Rosenblatt, a visionary psychologist, introduced the Perceptron. This learning machine was designed to recognize visual patterns by adjusting its connection weights from examples. It was an exciting time, reminiscent of a technicolor dream. Scholars and engineers were captivated by the seemingly boundless possibilities the Perceptron promised. Unfortunately, the dream didn’t last. In 1969, Marvin Minsky and Seymour Papert’s book “Perceptrons” showed that a single-layer perceptron can only separate classes that are linearly separable (divisible by a single straight line or, in higher dimensions, a hyperplane). As a result, it cannot learn even a simple function like XOR. The critique cast a long shadow over the neural network landscape. Nonetheless, the journey had only begun in earnest, sowing seeds that would germinate in decades to come.
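The perceptron learning rule nudges each weight by the prediction error times the corresponding input. The sketch below is a minimal Python version, assuming two binary inputs, a learning rate of 1, and a cap of 25 passes over the data. The names `train_perceptron`, `predict`, `AND_DATA`, and `XOR_DATA` are hypothetical. On AND, which is linearly separable, the rule converges. On XOR, no single line separates the two classes, so it never completes a pass without mistakes.

```python
# Truth tables as (inputs, target) pairs.
AND_DATA = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum plus bias is positive."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(samples, epochs=25, lr=1.0):
    """Apply the perceptron rule; return (weights, bias, converged)."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                # Shift the decision boundary toward the misclassified point.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:
            # A full pass with no mistakes: every sample is classified correctly.
            return weights, bias, True
    return weights, bias, False


for name, data in (("AND", AND_DATA), ("XOR", XOR_DATA)):
    _, _, converged = train_perceptron(data)
    print(name, "converged" if converged else "did not converge")
```

Running it prints `AND converged` followed by `XOR did not converge`. Raising `epochs` does not help XOR, because no setting of two weights and a bias classifies all four points correctly.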

The historical development of neural networks took two steps forward in the 1980s. Backpropagation made it practical to train networks with multiple layers, rekindling hope and interest. This period marked a renaissance: as computational power grew, researchers could build more sophisticated models and algorithms. Scientists and engineers buzzed like caffeinated bees in a hive, each contributing to the growing complexity and efficiency of neural networks. Enthusiasts marveled at the prospect of machines that could not only process data but seemingly ‘think,’ an exhilarating notion that went beyond mere functionality.

Today, we stand in an era where neural networks help power the perception systems of self-driving cars, assist clinicians with medical diagnoses, and even compose music. The historical development of neural networks has led us here, to a world where the boundaries between science fiction and reality blur like watercolors on a canvas. This vivid journey from theoretical exploration to real-world application is nothing short of phenomenal. It showcases not just the triumph of human intellect but our endless capacity for innovation.

H2: Key Milestones in Neural Network Evolution

The historical development of neural networks isn’t just a timeline—it’s a narrative that pulsates at the core of technological evolution. Imagine the advancement as a series of waves—each crest a groundbreaking milestone, each trough a momentary pause of introspection that propels the next leap forward. Let’s dive into these fascinating undulations that have shaped the field.

In the dawn of the AI era, McCulloch and Pitts’ model served as more than an academic muse; it birthed a computational paradigm. The allure of machines mimicking cerebral functionality captivated futurists and technologists alike. Their ideas suggested possibilities that went beyond simple calculation, and enchanted whispers of digital cognition caressed the imaginations of many.

By the 1970s, a period often dubbed the first “AI winter” had set in. Funding shrank, and the limitations of early systems like the Perceptron became apparent. Yet, in a twist akin to the climax of a gripping saga, three scholars resurrected hope in 1986. David Rumelhart, Geoffrey Hinton, and Ronald Williams popularized the backpropagation algorithm for training multi-layer networks. The idea had been described earlier, but their demonstration that hidden layers could learn useful internal representations was like fitting the final piece in a jigsaw puzzle. It prompted a renaissance of sorts in neural research.
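Backpropagation applies the chain rule to push the output error backwards, layer by layer, yielding a gradient for every weight. Here is a minimal pure-Python sketch of the idea. It assumes one hidden layer of sigmoid units, a mean-squared-error loss, and full-batch gradient descent on XOR, the problem a single-layer perceptron cannot solve. The names `init_params`, `forward`, `loss`, and `train`, and the hyperparameters (4 hidden units, learning rate 0.5, 5000 epochs, seed 0), are illustrative assumptions, not details taken from the 1986 paper.

```python
import math
import random

XOR_DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_hidden=4, seed=0):
    """Small random weights; biases start at zero."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(n_hidden)]  # hidden x input
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]  # hidden -> output
    return w1, b1, w2, 0.0


def forward(params, x):
    """Return hidden activations and the network output for input x."""
    w1, b1, w2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
    return h, y


def loss(params, data):
    """Mean squared error over the dataset."""
    return sum((forward(params, x)[1] - t) ** 2 for x, t in data) / len(data)


def train(data, n_hidden=4, lr=0.5, epochs=5000, seed=0):
    w1, b1, w2, b2 = init_params(n_hidden, seed)
    n = len(data)
    for _ in range(epochs):
        # Accumulate gradients over the full batch before updating.
        gw1 = [[0.0, 0.0] for _ in range(n_hidden)]
        gb1 = [0.0] * n_hidden
        gw2 = [0.0] * n_hidden
        gb2 = 0.0
        for x, t in data:
            h, y = forward((w1, b1, w2, b2), x)
            # Output delta: d/dy of (y - t)^2 times sigmoid'(z) = y * (1 - y).
            d_out = 2.0 * (y - t) * y * (1.0 - y)
            gb2 += d_out
            for j in range(n_hidden):
                gw2[j] += d_out * h[j]
                # Chain rule: send the error back through w2[j] to hidden unit j.
                d_hid = d_out * w2[j] * h[j] * (1.0 - h[j])
                gb1[j] += d_hid
                for i in range(2):
                    gw1[j][i] += d_hid * x[i]
        # Gradient-descent step on the mean loss.
        for j in range(n_hidden):
            w2[j] -= lr * gw2[j] / n
            b1[j] -= lr * gb1[j] / n
            for i in range(2):
                w1[j][i] -= lr * gw1[j][i] / n
        b2 -= lr * gb2 / n
    return w1, b1, w2, b2


params = train(XOR_DATA)
print("final loss:", round(loss(params, XOR_DATA), 4))
for x, target in XOR_DATA:
    print(x, target, round(forward(params, x)[1], 3))
```

The hidden layer is what makes the difference: each hidden unit can carve out its own linear boundary, and the output unit combines them. Whether a given run fully separates XOR depends on the random initialization and hyperparameters, since training can stall in a poor local minimum. Treat the printed values as illustrative.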

This revitalization laid the groundwork for deep learning, which took off in the late 2000s and early 2010s as neural networks grew deeper, richer, and more intricate. These networks remain only loosely inspired by biology, but their layered structure let them build up complex representations that earlier models lacked. With enhanced computational power (notably GPUs) and far larger datasets, neural networks could be trained much faster. They could also tackle tasks previously deemed science fiction, such as large-scale image and speech recognition.

Fast forward to the past decade, in which open-source frameworks such as TensorFlow and PyTorch, both released in the mid-2010s, have democratized machine learning. These tools allow even casual developers to build and train neural networks, fostering an environment ripe for rapid innovation. The historical development of neural networks is now less about isolated events and more about an ever-unfolding continuum of discovery. That continuum calls to the adventurous spirit of inventors and dreamers everywhere.

H2: Turning Ideas Into Reality

The journey from the abstract to the tangible forms the crux of the historical development of neural networks. What was once the domain of theoreticians has become a tool wielded by practitioners across diverse industries. Whether it’s forecasting market trends, supporting disease diagnosis, or composing music, the impact is as concrete as it is impressive.

H3: From Concept to Code: The Transition

With each leap forward, neural networks bridge the gap between possibility and practicality. This transition happens through incremental advancements and revolutionary breakthroughs alike. Every step is steeped in experimentation: choosing architectures, tuning learning rates, and testing whether a model generalizes beyond the data it was trained on.

H2: Discussion: Traversing the Neural Fabric

In contemplating the historical development of neural networks, we find ourselves tracing the contours of a fascinating map. It is colored with aspirations of human ingenuity and adorned with milestones that shimmer like stars on a clear night. This voyage through the neural cosmos is one of curiosity, fraught with valleys of skepticism yet teeming with peaks of achievement.

Perhaps one of the most captivating aspects is the human element—the narratives of those who dared to tread these uncharted territories. Early pioneers like McCulloch and Pitts were akin to sculptors chiseling out the first tentative edges of what would become grand edifices. Their work was less about the immediate results and more about paving pathways for future dreamers to explore and expand.

Today’s scholars and developers continue to build upon these venerable foundations. The exponential growth of computational power pairs with a democratization of knowledge, allowing broader participation in exploring this digital frontier. It’s no longer something rarefied or niche—a growing number of voices contribute to the conversation, enriching the tapestry with their unique insights and innovations.

The allure of neural networks isn’t merely their capacity to solve problems but also their artistry: the elegance with which they transform abstract concepts into coded reality. They invite us to marvel at both their math and their mystery, and to stand in awe of systems that can turn raw data into learned behavior.

As we stand on the brink of tomorrow, we remember that the historical development of neural networks is less about the past and more about the stories we are yet to tell. It is a call to action for all who dare to dream, reminding us that in the realm of neural networks, the only limits are those we place upon ourselves.

H2: Illustrative Milestones in Neural Networks

In the grand theater of artificial intelligence, the historical development of neural networks takes center stage as both a scientific marvel and an artistic masterpiece. It’s a story with no definitive ending, only infinite new beginnings. Whether you’re an academic, a tech enthusiast, or merely an inquisitive soul, this narrative beckons you to explore its ever-evolving chapters. Dive into the fascinating journey of neural networks and discover how each milestone wasn’t just a step forward but a leap towards an exciting future, brimming with potential and promise.