Welcome to the fascinating world of neural networks! If you’ve ever marveled at how your phone recognizes your face or how Netflix decides what to recommend next, you’ve already encountered neural networks, even if you didn’t realize it. Today, we’ll cover the fundamentals of neural network training, and I promise to keep it as light and breezy as a sunny afternoon.

Understanding Neural Networks

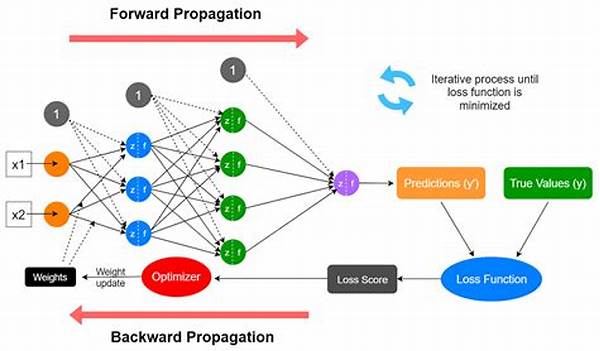

Let’s break it down: neural networks are loosely inspired by the network of neurons in the human brain. Think of them as many small computational units (artificial neurons) working together to solve complex problems, much as our brains process thousands of inputs every day. Training teaches these models to recognize patterns by adjusting their weights, the numbers that control how strongly each unit influences the next. It’s a bit like tuning a guitar. At the start, the network is out of tune and makes wrong predictions. During training, it measures its mistakes and adjusts its weights to reduce them, gradually improving at its task. It’s like teaching your pet tricks: trial, error, and lots of patience!

In the early days, neural networks were trained with simpler algorithms on much slower computers, and training often got stuck, like a car in mud. Better algorithms and faster hardware have since made training far more effective. Researchers continue to experiment with new architectures and techniques, making today’s neural networks powerful and efficient problem solvers. So don’t let the jargon intimidate you! Learning the fundamentals of neural network training is an exciting first step into machine learning.

Key Components in Training

1. Data, Data, Data: You can’t train a model without it. Imagine trying to learn piano without sheet music. A network can only learn patterns from the examples it sees, so training hinges on a high-quality dataset.

2. Learning Rate: Think of it as the size of each step the model takes while learning. If it is too large, updates overshoot the best weights. If it is too small, training barely moves. Choosing it well is a crucial part of training.

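3. Activation Functions: These work a bit like dimmer switches for neurons, deciding how strongly each one passes information forward. Common examples include:
   - the sigmoid, which squashes values into the range 0 to 1;
   - ReLU, which passes positive values through and zeroes out negatives.
   Just as importantly, activation functions are nonlinear. Without them, a stack of layers would collapse into a single linear function and could only learn straight-line patterns.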

4. Cost Function: Also called a loss function, it measures how far your predictions are from the true values. The function itself stays fixed during training. What changes are the weights, which are adjusted to drive the cost down. This is what makes continual improvement possible.

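5. Weight Adjustment: This is the core of learning. After each prediction, the network computes how the cost changes with respect to each weight (the gradient). It then nudges every weight in the direction that reduces the cost, with the step size set by the learning rate.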
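To see how these five components fit together, here is a minimal Python sketch. It trains a single artificial neuron with plain gradient descent on a tiny, made-up dataset (the logical OR function). Several pieces are illustrative choices rather than the only options:
- the dataset;
- the sigmoid activation;
- the cross-entropy cost;
- the learning rate of 0.5.

Real networks have many neurons and layers, but the training loop works the same way in spirit.

```python
import math

# 1. Data: a tiny illustrative dataset (logical OR), inputs -> label
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]

# 2. Learning rate: step size for each weight update (illustrative value)
LEARNING_RATE = 0.5


def sigmoid(z):
    """3. Activation function: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def cost(weights, bias, data):
    """4. Cost function: average binary cross-entropy over the dataset."""
    eps = 1e-12  # avoid log(0)
    total = 0.0
    for x, y in data:
        p = predict(weights, bias, x)
        total += -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))
    return total / len(data)


def train(data, epochs=1000, lr=LEARNING_RATE):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        # 5. Weight adjustment: gradient of the average cost w.r.t. each weight
        grad_w, grad_b = [0.0, 0.0], 0.0
        for x, y in data:
            error = predict(weights, bias, x) - y  # dCost/dz for sigmoid + cross-entropy
            for i, xi in enumerate(x):
                grad_w[i] += error * xi / len(data)
            grad_b += error / len(data)
        # Step each weight against its gradient, scaled by the learning rate
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


if __name__ == "__main__":
    print("cost before training:", round(cost([0.0, 0.0], 0.0, DATA), 3))  # ln 2, about 0.693
    w, b = train(DATA)
    print("cost after training: ", round(cost(w, b, DATA), 3))
    for x, y in DATA:
        print(x, "->", round(predict(w, b, x), 2), "(target", y, ")")
```

Before training, every prediction is 0.5 and the cost is ln 2. After training, the cost is much lower, and each prediction lands on the correct side of 0.5.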

Challenges in Neural Networks Training

No discussion of neural network training is complete without addressing the roadblocks. One of the biggest is overfitting, where a model learns the training data too well. It’s like a student who memorizes every question in the textbook but buckles when the exam asks something new. Overfitting is frustrating because the model performs spectacularly on its training data but stumbles on data it has never seen.
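Overfitting is easy to reproduce even without a neural network. The sketch below stands in for an over-powered model with a polynomial that passes exactly through ten noisy training points. The underlying sine curve, the noise level, and the point placement are arbitrary choices for illustration. The model’s error on its own training points is zero, but on held-out points between them it is not.

```python
import math
import random

rng = random.Random(0)


def true_curve(x):
    """The underlying pattern we hope the model will learn (an arbitrary choice)."""
    return math.sin(2 * math.pi * x)


# Ten noisy training points and nine held-out points lying between them
train_x = [i / 9 for i in range(10)]
train_y = [true_curve(x) + rng.gauss(0, 0.2) for x in train_x]
test_x = [(i + 0.5) / 9 for i in range(9)]
test_y = [true_curve(x) + rng.gauss(0, 0.2) for x in test_x]


def memorizing_model(x):
    """Degree-9 polynomial through every training point (Lagrange form): pure memorization."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(train_x, train_y)):
        term = yi
        for j, xj in enumerate(train_x):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total


def mean_squared_error(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


train_error = mean_squared_error(memorizing_model, train_x, train_y)
test_error = mean_squared_error(memorizing_model, test_x, test_y)
print(f"training error: {train_error:.4f}")  # zero: every training point is reproduced
print(f"held-out error: {test_error:.4f}")   # larger: memorization does not generalize
```

A neural network with far more capacity than its data warrants can fall into the same trap. That is why performance is always judged on data the model did not train on.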

Then there’s data quality. If “bad” data (mislabeled examples, duplicates, missing values) goes into your model, the results can be as unpleasant as a smoothie made with salt instead of sugar. Clean, relevant data matters as much as the right ingredients in a recipe. Regularly reviewing and cleaning your dataset can prevent many mishaps. In this dynamic field, problem-solving and creative thinking are your best allies.
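Some of this cleaning is easy to automate. Here is a minimal cleaning pass in Python. It assumes a hypothetical dataset of (features, label) records where None marks a missing value and the only valid labels are "cat" and "dog". The pass drops incomplete records, records with unrecognized labels, and exact duplicates. Real pipelines usually need domain-specific rules as well, and whether to drop or fill in missing values depends on the data.

```python
import math
from typing import Optional

# A record is (features, label); None marks a missing value. Labels are hypothetical.
Record = tuple[tuple[Optional[float], ...], Optional[str]]
ALLOWED_LABELS = {"cat", "dog"}


def clean(records: list[Record]) -> list[Record]:
    """Drop records with missing/NaN features, unknown labels, or exact duplicates."""
    seen = set()
    cleaned = []
    for features, label in records:
        if label not in ALLOWED_LABELS:
            continue  # missing or misspelled label
        if any(f is None or math.isnan(f) for f in features):
            continue  # missing feature value
        if (features, label) in seen:
            continue  # exact duplicate of a record we already kept
        seen.add((features, label))
        cleaned.append((features, label))
    return cleaned


raw = [
    ((1.0, 2.0), "cat"),
    ((1.0, 2.0), "cat"),           # duplicate
    ((None, 3.0), "dog"),          # missing feature
    ((4.0, 5.0), "dgo"),           # typo in the label
    ((6.0, float("nan")), "dog"),  # NaN feature
    ((7.0, 8.0), "dog"),
]
print(clean(raw))  # [((1.0, 2.0), 'cat'), ((7.0, 8.0), 'dog')]
```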

Common Missteps in Training

1. Ignoring Model Complexity: A model that is more complex than the problem requires is prone to overfitting, so a simpler model often generalizes better. Start simple and add capacity only when you need it.

2. Overly High Learning Rate: This makes training erratic: the cost jumps around or even diverges instead of settling down. Aim for a balanced learning rate.

3. Insufficient Data: Training on too little data, or on data that doesn’t represent the real world, can produce biased or unreliable results. Both the quantity and the diversity of the dataset matter.

4. Neglecting the Validation Set: Without a held-out validation set, you have no checkpoint for how the model performs on data it hasn’t trained on, and overfitting can go unnoticed.

5. Neglecting Data Preprocessing: Raw data often contains noise, inconsistencies, and missing values that harm results, so clean and prepare it before training.

6. Neglecting Hyperparameter Tuning: Hyperparameters such as the learning rate, batch size, and number of layers are set before training rather than learned. They need careful adjustment to get the best performance out of a model.

7. Forgetting Regularization: Techniques such as L2 weight penalties (weight decay) and dropout discourage the model from memorizing its training data and help prevent overfitting.

8. Skipping Normalization: Putting input features on a similar scale (for example, zero mean and unit variance) helps training converge faster and more reliably.

9. Mismanaging Batch Sizes: Batch size is the number of examples used for each weight update. It affects training speed, memory use, and how noisy each update is, so choose it deliberately.

10. Overemphasis on Hardware: Faster GPUs help, but the skill to train models well matters more.
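Several of these missteps (no validation set, skipped normalization, careless batching) come down to a few lines of preprocessing. The plain-Python sketch below does three things:
- holds out 20% of the rows for validation;
- standardizes each feature using statistics computed from the training rows only, so nothing from the validation set leaks into training;
- splits the training data into shuffled mini-batches.

The split fraction, batch size, data, and function names are illustrative assumptions. Libraries such as scikit-learn and PyTorch provide well-tested versions of each step.

```python
import random
import statistics


def train_val_split(rows, val_fraction=0.2, seed=0):
    """Shuffle, then hold out a fraction of the rows as a validation set."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    n_val = int(len(rows) * val_fraction)
    return rows[n_val:], rows[:n_val]


def fit_standardizer(rows):
    """Per-feature mean and standard deviation, computed from training rows only."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) or 1.0 for col in columns]  # guard against zero spread
    return means, stds


def standardize(rows, means, stds):
    """Rescale each feature to zero mean and unit variance (w.r.t. the fitted stats)."""
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


def minibatches(rows, batch_size, seed=0):
    """Yield shuffled batches of `batch_size` rows; the last batch may be smaller."""
    order = list(range(len(rows)))
    random.Random(seed).shuffle(order)
    for start in range(0, len(order), batch_size):
        yield [rows[i] for i in order[start:start + batch_size]]


# Two features on very different scales (hypothetical data)
rows = [[float(i), 100.0 * i] for i in range(10)]
train, val = train_val_split(rows)
means, stds = fit_standardizer(train)
train_std = standardize(train, means, stds)
val_std = standardize(val, means, stds)  # reuse the TRAINING statistics
for batch in minibatches(train_std, batch_size=3):
    print(len(batch), "rows")  # 3, 3, 2: one weight update per batch
```

Fitting the standardizer on the training rows and reusing it for validation mirrors deployment. There, new data must be scaled with the same statistics the model was trained on.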

Common Algorithms in Training

Let’s talk algorithms. At the heart of neural network training is the classic backpropagation algorithm. It starts from the error in the final output and works backward through the network. Along the way, it uses the chain rule to compute how much each weight contributed to that error (the weight’s gradient). Stochastic gradient descent (SGD) then uses those gradients to minimize the error. It’s a simple yet effective technique: it nudges each weight a small step against its gradient, using one example or a small batch of examples at a time.
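To make the backward pass concrete, here is a minimal backpropagation sketch for a tiny, hypothetical network. It has two inputs, two tanh hidden units, one linear output, and a squared-error cost. All function names are our own. Real frameworks compute these gradients automatically. `numerical_grad` checks backprop against a finite-difference estimate, a standard way to catch bugs. `sgd_step` performs one plain SGD update on a single example.

```python
import copy
import math
import random


def init_params(seed=0):
    """Small random weights for a 2-input, 2-hidden-unit, 1-output network."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)],  # [hidden][input]
        "b1": [0.0, 0.0],
        "w2": [rng.uniform(-1, 1) for _ in range(2)],
        "b2": [0.0],
    }


def forward(params, x):
    """Forward pass: returns the prediction and the hidden activations backprop needs."""
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(params["W1"], params["b1"])]
    y_hat = sum(w * hj for w, hj in zip(params["w2"], h)) + params["b2"][0]
    return y_hat, h


def loss(params, x, y):
    """Squared-error cost for a single example."""
    y_hat, _ = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backward(params, x, y):
    """Backpropagation: chain rule from the output error back to every weight."""
    y_hat, h = forward(params, x)
    d_out = y_hat - y  # dLoss/dy_hat
    grads = {"w2": [d_out * hj for hj in h], "b2": [d_out]}
    # Send the error back through each output weight and tanh'(z) = 1 - tanh(z)**2
    d_hidden = [d_out * w * (1 - hj ** 2) for w, hj in zip(params["w2"], h)]
    grads["W1"] = [[dz * xi for xi in x] for dz in d_hidden]
    grads["b1"] = d_hidden
    return grads


def sgd_step(params, grads, lr=0.01):
    """One SGD update: move every weight a small step against its gradient."""
    for row, grad_row in zip(params["W1"], grads["W1"]):
        for i in range(len(row)):
            row[i] -= lr * grad_row[i]
    for key in ("b1", "w2", "b2"):
        for i in range(len(params[key])):
            params[key][i] -= lr * grads[key][i]


def numerical_grad(params, x, y, key, index, eps=1e-6):
    """Central finite-difference estimate for one weight, to double-check backprop."""
    def shifted_loss(delta):
        p = copy.deepcopy(params)
        if key == "W1":
            p[key][index[0]][index[1]] += delta
        else:
            p[key][index] += delta
        return loss(p, x, y)
    return (shifted_loss(eps) - shifted_loss(-eps)) / (2 * eps)


if __name__ == "__main__":
    params = init_params()
    x, y = [0.5, -1.0], 1.0
    grads = backward(params, x, y)
    print("backprop:", grads["W1"][0][1],
          "finite difference:", numerical_grad(params, x, y, "W1", (0, 1)))
    before = loss(params, x, y)
    sgd_step(params, grads)
    print("loss:", before, "->", loss(params, x, y))  # a small step lowers the loss
```

The two gradient estimates should agree to many decimal places. Backprop is preferred because it gets every gradient from one backward pass, instead of two extra forward passes per weight.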

Beyond plain SGD, practitioners often use optimizers that adapt the step size during training. The Adam optimizer, for example, was designed to combine the advantages of two other popular methods, AdaGrad and RMSProp. It keeps running averages of each weight’s gradient and squared gradient, giving every weight its own adaptive step size, and it is well suited to large datasets and models. Getting to know these algorithms gives you insider access to the core ways neural networks learn. Understanding how they differ also prepares you to experiment with and improve your models.
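Here is a minimal sketch of the Adam update rule for a plain list of scalar parameters. The defaults (lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8) are the values suggested in the original Adam paper. The `Adam` class itself is a hypothetical teaching version, not any library’s API. In practice you would use a framework’s built-in optimizer, such as `torch.optim.Adam` in PyTorch.

```python
import math


class Adam:
    """Minimal Adam optimizer for a list of scalar parameters (illustrative sketch).

    Keeps running averages of each gradient (m) and squared gradient (v) and uses
    them to give every parameter its own adaptive step size.
    """

    def __init__(self, n_params, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = [0.0] * n_params
        self.v = [0.0] * n_params
        self.t = 0

    def step(self, params, grads):
        """Return updated parameters after one Adam step."""
        self.t += 1
        new_params = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            # Bias correction: m and v start at zero, so early averages are too small
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            new_params.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return new_params


# Usage: minimize f(x) = (x - 3)**2, whose gradient is 2 * (x - 3)
opt = Adam(n_params=1, lr=0.05)
x = [0.0]
for _ in range(3000):
    x = opt.step(x, [2 * (x[0] - 3)])
print(round(x[0], 3))  # ends up close to 3
```

Because of bias correction, the very first step has a size of almost exactly `lr`, whatever the gradient’s scale. This illustrates how Adam normalizes steps by the gradient’s recent magnitude.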

Applying Neural Networks in Real Life

Now let’s take it from the books to the streets: how does neural network training show up in everyday life? Image recognition, for instance, is a game-changer in healthcare. Trained networks help detect anomalies in medical images, catching details the human eye might miss. Careful training is what makes such applications possible.

In e-commerce and retail, recommendation systems powered by neural networks improve the user experience by suggesting products tailored to each customer’s tastes. Banks use neural networks to help detect fraudulent transactions and protect their clients. So the next time you use an app, pause and appreciate how a trained neural network might be quietly working behind the scenes.

Conclusion

There you have it! Our brisk walk through the fundamentals of neural network training has shown how nuanced yet exciting this world is. From debugging quirky model behavior to the thrill of accurate predictions, it’s a dynamic tango of algorithms, data, and problem-solving skills. Remember, learning to train neural networks is not just about crunching numbers; it’s about harnessing technology that can genuinely change lives.

In our rapidly evolving tech ecosystem, a solid grasp of these fundamentals lays the groundwork for future advances. Whether you’re just starting out or deepening your understanding, mastering neural network training opens the door to innovative solutions. Happy training, fellow AI explorers!