H1: Fine-Tuning for NLP Tasks

Natural Language Processing (NLP) can feel like a complex puzzle, and one of its key pieces is fine-tuning. Fine-tuning is akin to sharpening a well-crafted tool for a specific job, or tuning a guitar before a big concert: without that calibration, even the finest instrument sounds off. But what exactly does fine-tuning entail in the context of NLP, and why has it become so important to the advancement of AI language models?

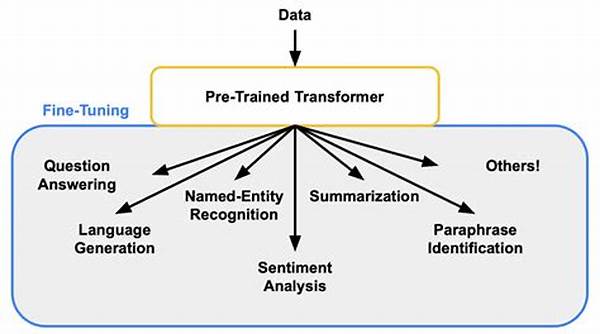

In simple terms, fine-tuning involves taking a pre-trained language model and continuing its training on task-specific data so that it performs a particular NLP task, such as sentiment analysis, machine translation, or generating chatbot responses. The allure of fine-tuning lies in its ability to reuse the broad language knowledge already encoded in these models while adapting them to domain-specific vocabulary and usage. It’s like hiring a personal trainer for your AI: you’re not teaching it from scratch but honing its abilities for a specific purpose.
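To make the idea concrete, here is a minimal sketch in plain Python, not a real language model. It assumes a toy sentiment classifier over three word counts, with made-up "pre-trained" weights (`pretrained_weights`) in which the slang word "sick" counts as negative. All names, numbers, and the tiny `task_data` set are hypothetical; fine-tuning here simply means continuing gradient descent from the pre-trained weights on task-specific examples.

```python
import math

def predict(weights, bias, features):
    """Probability of positive sentiment under a logistic model."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def mean_log_loss(weights, bias, dataset):
    """Average cross-entropy loss over (features, label) pairs."""
    total = 0.0
    for features, label in dataset:
        p = predict(weights, bias, features)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(dataset)

def fine_tune(weights, bias, dataset, learning_rate=0.5, epochs=200):
    """Continue batch gradient descent starting from the given weights."""
    weights = list(weights)  # copy so the pre-trained weights stay untouched
    n = len(dataset)
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for features, label in dataset:
            error = predict(weights, bias, features) - label
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        weights = [w - learning_rate * g / n for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / n
    return weights, bias

# Features are counts of the words ["good", "bad", "sick"].
# Hypothetical general-purpose weights: "sick" is treated as negative.
pretrained_weights = [1.5, -1.5, -1.0]
pretrained_bias = 0.0

# Hypothetical task data from a domain where "sick" is praise (label 1 = positive).
task_data = [
    ([1, 0, 0], 1),
    ([0, 1, 0], 0),
    ([0, 0, 1], 1),
    ([1, 0, 1], 1),
]

tuned_weights, tuned_bias = fine_tune(pretrained_weights, pretrained_bias, task_data)
loss_before = mean_log_loss(pretrained_weights, pretrained_bias, task_data)
loss_after = mean_log_loss(tuned_weights, tuned_bias, task_data)

print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
print(f"weight for 'sick': {pretrained_weights[2]:+.2f} -> {tuned_weights[2]:+.2f}")
```

The weights for "good" and "bad" carry over as the starting point, and the task data pushes the weight for "sick" upward. Real fine-tuning follows the same pattern at a much larger scale, typically through a deep learning framework rather than hand-written gradient code.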

The rise of transformer models, like BERT and GPT, has brought fine-tuning into the spotlight. Because they are pre-trained on large amounts of text and capture how a word’s meaning depends on its context, these models have reshaped NLP applications. Yet without targeted fine-tuning, even the most sophisticated models might fail to deliver precise outcomes in specialized domains, whether it’s legal language, healthcare jargon, or financial terminology. This targeted adjustment is what makes fine-tuning so valuable: it extends a model’s performance from generic language processing to a specific application.

Moreover, in a world driven by data, businesses see fine-tuning as a competitive edge. It’s a way to differentiate themselves in the market by creating more personalized and efficient NLP solutions. Vendors offering fine-tuning services for NLP tasks are not just selling a product; they are providing a pathway to superior performance, customer satisfaction, and innovative AI solutions. That’s the power of fine-tuning: it’s an art, a science, and a business all rolled into one.

H2: The Nuances of Fine-Tuning for NLP Tasks

Fine-tuning for NLP tasks is not a one-size-fits-all operation. It requires careful consideration of the specific dataset, the end goals, and the computational resources at hand. Depending on the requirements, the process can range from adjusting a small set of parameters (for example, only a task-specific output layer) to re-training large segments of a model. As organizations continue to realize the value of nuanced language processing, fine-tuning serves as the bridge between generic capability and specific intelligence.
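The "small set of parameters" end of that range can be sketched as follows. This is a toy illustration in plain Python under stated assumptions: the word vectors in `EMBEDDINGS` stand in for a frozen pre-trained encoder, the legal-versus-cooking task and every name and number are hypothetical, and only a small logistic "head" is trained on top.

```python
import copy
import math

# Hypothetical "pre-trained" word vectors. They are treated as frozen:
# training below never modifies them.
EMBEDDINGS = {
    "contract": [0.9, 0.1],
    "liability": [0.8, 0.3],
    "recipe": [0.1, 0.9],
    "oven": [0.2, 0.8],
}

def encode(tokens):
    """Frozen encoder: average the vectors of the known tokens."""
    vectors = [EMBEDDINGS[t] for t in tokens if t in EMBEDDINGS]
    if not vectors:
        return [0.0, 0.0]
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(2)]

def score(tokens, head_weights, head_bias):
    """Probability that the text is legal-domain, according to the head."""
    x = encode(tokens)
    z = sum(w * xi for w, xi in zip(head_weights, x)) + head_bias
    return 1.0 / (1.0 + math.exp(-z))

def train_head(examples, learning_rate=0.5, epochs=100):
    """Train only the head (2 weights + 1 bias) with per-example updates."""
    head_weights, head_bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for tokens, label in examples:
            x = encode(tokens)
            error = score(tokens, head_weights, head_bias) - label
            head_weights = [w - learning_rate * error * xi
                            for w, xi in zip(head_weights, x)]
            head_bias -= learning_rate * error
    return head_weights, head_bias

# Hypothetical task data: label 1 = legal text, label 0 = cooking text.
examples = [
    (["contract", "liability"], 1),
    (["recipe", "oven"], 0),
    (["contract"], 1),
    (["oven"], 0),
]

embeddings_before = copy.deepcopy(EMBEDDINGS)
head_weights, head_bias = train_head(examples)

frozen_count = sum(len(v) for v in EMBEDDINGS.values())
trainable_count = len(head_weights) + 1  # weights plus bias

print(f"frozen parameters: {frozen_count}, trainable parameters: {trainable_count}")
print(f"embeddings unchanged: {EMBEDDINGS == embeddings_before}")
```

Moving toward the other end of the range would mean also updating the encoder's parameters (here, the vectors in `EMBEDDINGS`), which usually calls for more data and more compute.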

H2: Understanding Fine-Tuning’s Impact on NLP Tasks

Fine-tuning acts as the catalyst that propels NLP models from generic understanding to niche expertise, enabling them to handle tasks tailored to specific industry demands.

Fine-tuning a model on domain-specific language helps ensure that it responds to the actual context of a request rather than falling back on canned, generic phrasing.

H2: Structure for Fine-Tuning for NLP Tasks

Fine-tuning has revolutionized how businesses and developers approach Natural Language Processing (NLP) tasks by enabling tailored solutions that meet specific requirements. The power of fine-tuning lies in its adaptability and precision, allowing AI models to understand and perform specialized tasks efficiently. By delving into the mechanics of fine-tuning, one can appreciate its role in bridging the gap between generic AI capabilities and task-specific expertise.

Models like BERT and GPT are routinely adapted to new tasks through fine-tuning. In essence, this process involves taking a pre-trained language model and refining it using a smaller, task-focused dataset. This allows the model to keep its broad contextual knowledge while gaining specificity in areas like legal terminology or medical jargon. Fine-tuning for NLP tasks is akin to giving an AI a minor but impactful upgrade; it’s the difference between a generalist and an expert.

One study published in the Journal of AI Research reported that fine-tuning can improve task performance by up to 25%. The adjustment means the model doesn’t just understand language generically but is tuned to deliver high accuracy on specific tasks. Such gains have prompted businesses to incorporate fine-tuning into their NLP development strategies in pursuit of a competitive advantage.

H2: Why Fine-Tuning Is Essential in the Modern NLP Era

Fine-tuning for NLP tasks is not just a technical endeavor; it’s a strategic move for businesses seeking to use AI technology more effectively. Applications across industries, from customer service chatbots to advanced translation services, show how fine-tuning produces adaptable models suited to their specific setting.

H3: The Future of Fine-Tuning in NLP

With more data and evolving language landscapes, the need for precise fine-tuning will continue to grow. The focus will be on creating models that are not only accurate but also adaptable to changes, learning from new data and tasks without the need for constant human intervention. This ongoing evolution promises to unleash even more refined AI solutions, redefining NLP’s role in technological innovation.

H2: Examples of Fine-Tuning for NLP Tasks

Fine-tuning for NLP tasks not only adjusts models to incorporate specific language features, but it also optimizes their performance in a diverse array of applications, spanning multiple industries.

H2: Illustrations of Fine-Tuning for NLP Tasks

Fine-tuning is well illustrated by practical applications such as customer service chatbots, translation services, and models adapted to legal, healthcare, or financial text, where task-specific training leads to better outcomes and smoother user interactions with AI technologies.

Fine-tuning precisely shapes how AI interprets and processes language, offering specialized, effective solutions across multiple domains.
