In a world where machine learning algorithms whisper the future into our ears, the notion of fairness in these predictions takes center stage. As we become increasingly reliant on these technological soothsayers, the importance of fairness criteria in machine learning becomes as paramount as the data itself. At the heart of this lies a fascination with crafting models that do not just predict but do so without bias, a challenge that has turned into one of the defining quests of our technological era. But let’s get into the weeds, shall we?

The Need for Fairness

Imagine telling a joke, but the punchline gets everyone laughing except the person it’s about – that blooper can make you rethink your comedic genius. In machine learning, bias can provoke similar facepalms when your model’s predictions result in discriminatory action. Fairness is not just ethical; it’s practical. A biased loan approval system can damage an organization’s reputation and invite legal trouble, and an inequitable hiring algorithm can entrench societal inequalities. Fairness criteria in machine learning thus help ensure that data-driven decisions serve everyone, maintaining trust while promoting equality.

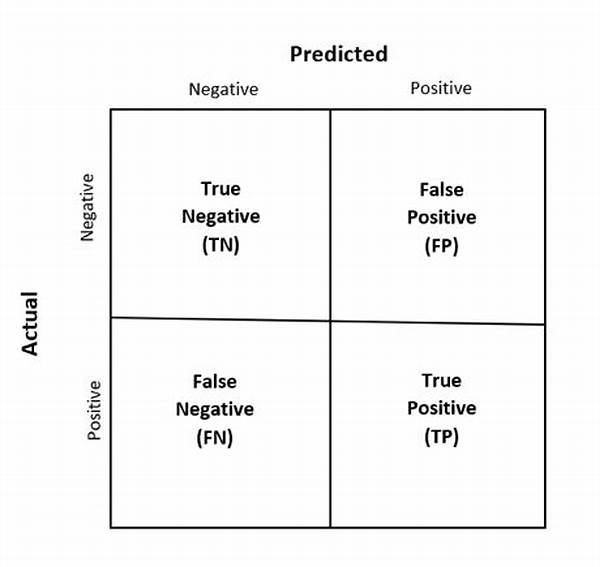

This is not just some abstract necessity, though. Fairness criteria in machine learning translate into measurable attributes. Concepts like demographic parity, where every group receives favorable outcomes at the same rate, or equal opportunity, where individuals who truly merit a positive outcome are equally likely to receive one whatever their group, have become as critical as accuracy and precision. Therefore, the essence of fairness is interwoven into the very fabric of model design, evaluation, and deployment.
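To make those two criteria concrete, here is a minimal sketch in plain Python. The function names, the toy labels, and the two groups "a" and "b" are all illustrative assumptions, not part of any particular library. It reads demographic parity as equal positive-prediction rates per group and equal opportunity as equal true positive rates per group, then reports the gap between the best- and worst-served group.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Share of positive predictions per group (compared for demographic parity)."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def true_positive_rates(y_true, y_pred, groups):
    """Share of actual positives predicted positive, per group (equal opportunity)."""
    totals, hits = defaultdict(int), defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:  # only individuals who truly merit the positive outcome
            totals[group] += 1
            hits[group] += pred
    return {g: hits[g] / totals[g] for g in totals}


def gap(rates):
    """Difference between the highest and lowest group rate; 0 means parity."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = favorable outcome, two groups of four people each.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

parity_gap = gap(selection_rates(y_pred, groups))
opportunity_gap = gap(true_positive_rates(y_true, y_pred, groups))
print(f"demographic parity gap: {parity_gap:.2f}")
print(f"equal opportunity gap:  {opportunity_gap:.2f}")
```

A gap of zero would mean the criterion holds exactly on this sample; how large a gap is tolerable is a judgment call that depends on the application.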

The Ethical Implications

Crafting fair machine learning models isn’t merely a technical task – it’s an ethical duty. Careful attention to fairness enhances brand reputation and societal impact. Businesses that build fairness criteria into their practices are perceived as leaders, attracting a diverse audience and fostering inclusivity. The quest for fairness in machine learning is akin to tailoring a tuxedo, where meticulous cuts and fine stitching produce an elegant fit. It elevates organizations from being rogue coders to champions of digital equity. This isn’t just about machines spitting numbers; it’s about how these numbers influence, liberate, or marginalize lives.

Fairness in Practice: Challenges and Solutions

Introducing fairness into machine learning often means wrestling with multiple conflicting interests. The famed “accuracy-fairness trade-off” frequently surfaces, suggesting that improvements in one may necessitate compromises in the other. However, investing in upskilling data teams with knowledge of fairness and ethics can help bridge this gap. Embedding fairness checks in model validation stages and performing regular audits stand as testimony to an organization’s commitment to this goal.
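One way to embed such a check in a validation stage is sketched below, under stated assumptions: the function names, the thresholds `min_accuracy` and `max_gap`, and the toy data are hypothetical, and the selection-rate gap is only one of several possible fairness measures. The idea is simply that a model must clear both an accuracy bar and a fairness bar before it moves forward.

```python
def selection_rate_gap(y_pred, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = {}
    for group in set(groups):
        preds = [p for p, g in zip(y_pred, groups) if g == group]
        rates[group] = sum(preds) / len(preds)
    return max(rates.values()) - min(rates.values())


def validation_failures(y_true, y_pred, groups, min_accuracy=0.8, max_gap=0.2):
    """Return a list of failed checks; an empty list means the model passes."""
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    rate_gap = selection_rate_gap(y_pred, groups)
    failures = []
    if accuracy < min_accuracy:
        failures.append(f"accuracy {accuracy:.2f} is below {min_accuracy:.2f}")
    if rate_gap > max_gap:
        failures.append(f"selection-rate gap {rate_gap:.2f} exceeds {max_gap:.2f}")
    return failures


# Hypothetical validation set: accurate overall, but group "b" is selected less often.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 0, 0]
groups = ["a"] * 4 + ["b"] * 4

failures = validation_failures(y_true, y_pred, groups)
print(failures or "all checks passed")
```

Reporting accuracy and the fairness gap side by side keeps the trade-off visible instead of letting one metric silently win.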

Being proactive about fairness means investing in pragmatic, actionable solutions. Data collection processes must be overhauled to reflect diversity, addressing representation biases upfront. Leveraging tools and frameworks specifically designed to audit and enhance fairness in models further advances this mission. By prioritizing fairness criteria in machine learning, businesses can redefine industry standards while fostering societal trust and embracing diversity.

The Future of Fair Algorithms

Implementing fairness criteria in machine learning is a continuous, dynamic process, one that demands ongoing innovation. With advancements in artificial intelligence and machine learning, the looming challenge is not only addressing current biases but anticipating future ones. This takes us to a brave new frontier where algorithms are educated not just computationally, but ethically – an artificial conscience aligned with human values. The conversation surrounding fairness in AI is not merely academic; it’s alive, evolving, and enshrined in the code of the next intelligent revolution.

Understanding fairness criteria in machine learning paves the way for a landscape where technology is not only smarter but fairer. Against a backdrop of colorful data, the monochrome of bias fades, revealing a future where fairness isn’t a footnote but the headline, a world where everyone has a seat at the digital table, and machine learning legitimizes the promise of an equitable tomorrow.

The Objectives of Fairness Criteria in Machine Learning

1. Promote Equality: Fairness criteria are designed to ensure that models treat all demographic groups equally, promoting societal equity.

2. Prevent Discrimination: By incorporating these criteria, machine learning models can reduce and prevent discrimination based on race, gender, or other protected attributes.

3. Enhance Trust: Fair algorithms foster trust among users and stakeholders, critical for the adoption and deployment of machine learning systems.

4. Improve Accuracy: While balancing fairness with accuracy can be challenging, fair models can often lead to improved decision-making and outcomes.

5. Support Ethical AI Development: Implementing fairness criteria guides ethical practices in AI development, aligning technology with moral standards.

6. Facilitate Regulatory Compliance: Adhering to fairness criteria helps organizations comply with laws and regulations on data and algorithmic bias.

7. Strengthen Brand Reputation: Companies championing fair practices are seen as leaders, enhancing their reputation in the eyes of consumers and partners.

8. Encourage Innovation: Fairness criteria drive innovation by challenging developers to find new ways to create equitable and effective models.

Exploring Fairness Criteria in Machine Learning: Challenges and Solutions

Navigating the landscape of implementing fairness criteria in machine learning can be an exhilarating challenge filled with both pitfalls and peaks. Organizations looking to embed fairness into their AI fabric are often met with questions that need careful multidisciplinary negotiations. This quest is not driven merely by technical feasibility but rather by an urge to ensure that AI systems act as enablers of progress and peace rather than perpetuators of prejudice.

Addressing these challenges, researchers have focused on developing fairness-aware algorithms that balance varied metrics of equity, such as equal opportunity and demographic parity, with model efficacy. Solutions often involve a combination of robust datasets, transparent methodologies, and continuous ethical training for model developers. Laboratory experiments and real-world deployments are yielding both successes and lessons, enriching the story of fair AI in action. Through workshops, hackathons, and community collaborations, the journey to achieve fairness in AI becomes a collective quest, maximizing innovation and minimizing bias.
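As one illustration of what a fairness-aware technique can look like, the sketch below uses reweighing, a well-known preprocessing approach. It is an example chosen here, not a method the article itself prescribes. Each training example gets the weight P(group) × P(label) / P(group, label), so that group membership and label become statistically independent in the weighted data. The function names and toy data are assumptions for illustration.

```python
from collections import Counter


def reweighing_weights(labels, groups):
    """Per-example weights that decorrelate group membership from the label."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # weight = P(group) * P(label) / P(group, label), written with raw counts
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(labels, groups, weights, group):
    """Weighted share of positive labels within one group."""
    positive = sum(w for y, g, w in zip(labels, groups, weights) if g == group and y == 1)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    return positive / total


# Hypothetical training labels: group "a" has far more positives than group "b".
labels = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

weights = reweighing_weights(labels, groups)
for group in ("a", "b"):
    rate = weighted_positive_rate(labels, groups, weights, group)
    print(f"group {group}: weighted positive rate {rate:.2f}")
```

The resulting weights could then be supplied as per-sample weights to a training routine that accepts them, which is a design choice that leaves the model itself unchanged and adjusts only how much each example counts.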

Educating the AI Workforce: A Path Towards Fairness

Central to mastering fairness criteria in machine learning is an education system that lays the foundation for equitable AI. Schools and institutions worldwide are introducing curricula that blend technical understanding with ethical foresight, preparing future AI practitioners for the societal challenges their creations will face. Emphasizing critical thinking and ethical reasoning within the AI community ensures that fairness is not an afterthought but a core component of technological advancement.

Moreover, workshops tailored to industry leaders and practitioners serve as a continuous learning platform, enabling them to stay updated with best practices and innovations in fairness. When education levels up its game, AI’s promise aligns more closely with uplifting humanity and reducing inequality, fostering a fairer digital future for all.

In this journey filled with excitement and evolution, fairness criteria in machine learning stand as the compass guiding the way through uncharted territories of ethical AI development. From boardrooms to classrooms, their principles are shaping dialogues, decisions, and destinies, all in the pursuit of an AI-driven world that values every individual’s worth.


By pursuing these objectives and applying fairness criteria consistently, organizations can help ensure that their machine learning models are not only effective but also ethically sound and socially responsible.