Hey there, fellow data enthusiasts! If you’ve been around the data science block a few times, you’ve probably heard of cross-validation. It’s one of those nifty techniques that tells you how well your model is likely to perform on data it has never seen, which is exactly what you need to know before you can improve it. Today, we’re diving into how cross-validation helps you build models with real predictive power, and trust me, it’s going to be as intriguing as your favorite binge-worthy series.

Why Cross-Validation is a Game-Changer

So, let’s kick things off with why cross-validation is all the rage. Imagine you’re building a predictive model. You’ve got your data, your algorithms, and that sparkle in your eye that comes with the prospect of insights. But wait, how do you check that your model isn’t just memorizing the data, but actually learning from it? Enter cross-validation. This technique partitions your data into subsets, training on some and testing on the others, then rotates which subset is held out. This way, you’re not just hoping your model works; you’re measuring it. Cross-validation does not prevent overfitting by itself, but it makes overfitting visible and gives a more reliable estimate of the model’s performance than a single score on the training data.

By training and validating on different splits of your data several times, you get a sense of how your model will perform on unseen data, which is what predictive power really means. In a world where data is king, cross-validation helps you confirm that your model doesn’t just work on paper but holds up in real-world scenarios. So, the next time you’re knee-deep in data, remember to cross-validate; it’s like giving your model a mini boot camp to prepare for the big leagues.
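To make the "memorizing versus learning" point concrete, here is a minimal sketch in plain Python. The toy dataset, the noisy labels, and the helper names (`predict_1nn`, `accuracy`) are all made up for illustration. A 1-nearest-neighbour model memorizes its training set, so it looks perfect when scored on that same data, while 5-fold cross-validation scores every point with a model that never saw it.

```python
def predict_1nn(train, x):
    """Return the label of the training point closest to x."""
    nearest = min(train, key=lambda point: abs(point[0] - x))
    return nearest[1]


def accuracy(train, test):
    """Fraction of test points whose label the model gets right."""
    hits = sum(predict_1nn(train, x) == y for x, y in test)
    return hits / len(test)


# Toy data (hypothetical): label is 1 when x >= 20, with four labels flipped as noise.
noisy = {3, 11, 26, 34}
data = [(x, int(x >= 20) ^ int(x in noisy)) for x in range(40)]

# Scored on its own training data, the memorizer finds each point at distance 0.
train_accuracy = accuracy(data, data)

# 5-fold cross-validation: each point is predicted by a model trained without it.
k = 5
fold_scores = []
for fold in range(k):
    test = [p for i, p in enumerate(data) if i % k == fold]
    train = [p for i, p in enumerate(data) if i % k != fold]
    fold_scores.append(accuracy(train, test))
cv_accuracy = sum(fold_scores) / k

print(f"training accuracy: {train_accuracy:.2f}")
print(f"cross-validated accuracy: {cv_accuracy:.2f}")
```

The gap between the two numbers is the part of the training score that came from memorization rather than from anything the model could reuse on new data.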

Benefits of Cross-Validation

1. Accuracy Enhancement: Cross-validation does not raise accuracy by itself, but it exposes overfitting, so you can pick the model and settings that hold up on unseen data.

2. Data Utilization: Every observation is used for both training and testing (in different rounds), which matters most when data is scarce.

3. Model Reliability: Averaging over several splits gives a more reliable measure of model performance than a single train/test split.

4. Bias Reduction: Because the score does not hinge on one arbitrary split, the performance estimate is less skewed by a lucky or unlucky partition.

5. Performance Consistency: The spread of scores across folds shows how consistent the model is; a large spread is a warning sign that the average alone would hide.
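The reliability and consistency points are easiest to see by looking at the individual fold scores rather than only their average. The following is a rough sketch using Python’s `statistics` module; the toy data and the nearest-centroid helper `centroid_predict` are assumptions made up for this example. The three noisy labels are placed so that they all land in the same fold, which makes that fold score worse than the others.

```python
from statistics import mean, stdev


def centroid_predict(train, x):
    """Predict the class whose mean x value (centroid) is closest to x."""
    centroids = {}
    for label in {y for _, y in train}:
        centroids[label] = mean(px for px, py in train if py == label)
    return min(centroids, key=lambda label: abs(centroids[label] - x))


# Toy data (hypothetical): label is 1 when x >= 15, with three labels flipped as noise.
noisy = {1, 6, 16}
data = [(x, int(x >= 15) ^ int(x in noisy)) for x in range(30)]

k = 5
scores = []
for fold in range(k):
    test = [p for i, p in enumerate(data) if i % k == fold]
    train = [p for i, p in enumerate(data) if i % k != fold]
    hits = sum(centroid_predict(train, x) == y for x, y in test)
    scores.append(hits / len(test))

print("per-fold accuracy:", [round(s, 2) for s in scores])
print(f"mean: {mean(scores):.2f}, standard deviation: {stdev(scores):.2f}")
```

A single train/test split would have reported just one of these fold scores, good or bad. Reporting the mean together with the standard deviation shows both the typical performance and how much it moves from split to split.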

Types of Cross-Validation Techniques

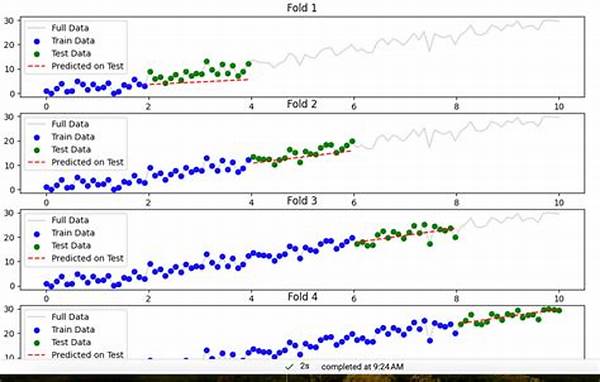

Alright, let’s get into the techniques that make cross-validation such a must-have tool. There are a few star players in this field: k-fold cross-validation, stratified k-fold, and leave-one-out cross-validation.

K-fold is like the popular kid on the block. You split your dataset into ‘k’ parts (folds), train on k-1 of them, and test on the one left out. Rinse and repeat k times until each fold has had its turn as the test set, then average the k scores. It’s simple yet effective, because every data point is used for testing exactly once and for training k-1 times.
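The k-fold procedure described above can be sketched in a few lines of plain Python. The function name `k_fold_splits` and its `seed` parameter are made up for this illustration; it only produces the index splits, and you would plug in your own model for the training and scoring steps.

```python
import random


def k_fold_splits(n_samples, k, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and the number of samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle so folds do not follow row order
    # Fold i takes every k-th shuffled index, so fold sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, test


for train, test in k_fold_splits(n_samples=10, k=5):
    print("train:", sorted(train), "test:", sorted(test))
```

In practice you would rarely write this yourself; scikit-learn, for example, ships a `KFold` class in `sklearn.model_selection` that does the same job.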

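Stratified k-fold is its wiser cousin, ensuring each fold keeps roughly the same class proportions as the full dataset. That makes it a good fit for classification problems, especially imbalanced ones, where a plain random fold could end up with very few examples of a rare class. Leave-one-out? That’s the meticulous sibling: it trains on every point except one, tests on that lonely point, and repeats this for each point in the dataset. It squeezes the most out of small datasets but gets expensive on large ones, since it fits one model per data point. Choose your style; each has its place in the kingdom of data science!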
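Here is one simple way the two variants could be implemented, again as a plain-Python sketch rather than a reference implementation. The names `stratified_folds` and `leave_one_out` are invented for this example, and the stratified version skips shuffling to stay short: it deals each class’s samples out to the folds like cards, so every fold receives its share of each class.

```python
from collections import defaultdict


def stratified_folds(labels, k):
    """Assign sample indices to k folds so each fold keeps roughly the overall class mix."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(k)]
    position = 0  # keep dealing where the previous class stopped, to balance fold sizes
    for members in by_class.values():
        for index in members:
            folds[position % k].append(index)
            position += 1
    return folds


def leave_one_out(n_samples):
    """Yield (train_indices, test_indices) with exactly one held-out sample per round."""
    for held_out in range(n_samples):
        train = [i for i in range(n_samples) if i != held_out]
        yield train, [held_out]


# Hypothetical imbalanced labels: 8 negatives, 4 positives.
labels = [0] * 8 + [1] * 4
for fold in stratified_folds(labels, k=4):
    print(fold, "positives:", sum(labels[i] for i in fold))
```

Leave-one-out is just k-fold with k equal to the number of samples, which is why it needs as many model fits as there are data points. scikit-learn offers both variants as `StratifiedKFold` and `LeaveOneOut` in `sklearn.model_selection`.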

Implementing Cross-Validation: Tips and Tricks

Getting value out of cross-validation isn’t just about running the technique. It’s about understanding your data and the problem at hand. Here are some things to keep in mind:

The Science Behind Enhanced Models

Let’s talk a bit more about the science underpinning cross-validation. Imagine crafting a story; each data point is a character. Cross-validation ensures that all these characters get their time in the narrative spotlight as test cases, making your evaluation of the story (or model) more robust and convincing.

In essence, cross-validation tells you not just how well your model scored once, but whether that score holds up. By validating on several splits, you’re checking that your model doesn’t perform well by fluke but has learned patterns that are really in your data. It’s like giving your model a passport to travel through different scenarios confidently, knowing it has the experience to handle whatever comes its way.

Wrapping Up: Cross-Validation for the Win

In conclusion, if you’re on the journey of honing your data science skills, cross-validation is your trusty companion. It’s like having a second pair of eyes checking that your model isn’t just good on the data it has already seen but holds up on data it hasn’t. Cross-validation empowers you by providing a more accurate, reliable measure of your model’s predictive abilities.

As the field of data science continues to evolve, staying on top of techniques like cross-validation is vital. Sure, it might seem like an extra step, but consider it an investment in your model’s future success. At the end of the day, cross-validation is all about knowing how much you can trust your model before it meets new data. Happy modeling!