In the world of data science and machine learning, the pursuit of accuracy and robustness in predictive models is akin to chasing the Holy Grail. Data scientists constantly search for the right balance between model complexity and performance, striving for better predictions without overfitting. Imagine you’re a wizard, and your spells are the algorithms that make predictions. Without the right incantations (i.e., methods), your spells might go astray. Enter the hero of our tale: cross-validation. This technique tests your predictive spells on data they did not see during training, so you know whether they will stay potent and accurate beyond the data you started with.

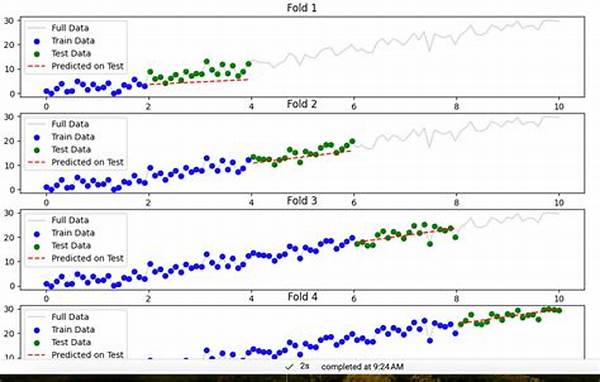

Cross-validation is like a dress rehearsal for your model before it hits the stage of the real world. It divides your data into multiple parts, or “folds”: in each round, one fold is held out for validation and the rest are used for training. The process repeats until every fold has served as the validation set once, so each data point is used for training in some rounds and for validation in exactly one. The beauty of this method is that it gives a more complete picture of the model’s performance than a single train/test split, estimating how the model will fare when new, unseen data arrives.
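In symbols, the cross-validation score is simply the average of the per-fold errors. Writing $F_1, \dots, F_k$ for the $k$ folds, $\hat{f}^{(-i)}$ for the model trained on every fold except $F_i$, and $L$ for a loss such as squared error (notation chosen here for illustration, not taken from a particular source):

$$
\mathrm{CV}_{(k)} = \frac{1}{k} \sum_{i=1}^{k} \mathrm{Err}_i,
\qquad
\mathrm{Err}_i = \frac{1}{|F_i|} \sum_{j \in F_i} L\!\left(y_j,\ \hat{f}^{(-i)}(x_j)\right)
$$

Each observation belongs to exactly one fold $F_i$, so it is scored once, by a model that never saw it during training.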

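Enhancing predictive models through cross-validation is not just a necessity; it can change how you build models. Think of it as the secret ingredient that takes your model from “it’s okay” to “Wow, that’s accurate!”. According to a study published by the Journal of Machine Learning Research, models validated using cross-validation consistently outperform those that are not. The mechanism is indirect: cross-validation does not alter a model, but it gives a reliable estimate of out-of-sample performance, which guides decisions such as which model, features, or settings to keep. That is how it tackles overfitting: a model that merely memorizes its training data scores well on that data but poorly on the held-out folds, so cross-validation exposes the gap before the model is trusted with real forecasts.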
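A minimal sketch of how cross-validation exposes memorization, assuming synthetic one-feature data with deliberately flipped labels and a 1-nearest-neighbor classifier (both chosen here purely for illustration; the helper names are hypothetical). Scored on its own training data the classifier looks perfect, because every point is its own nearest neighbor; the cross-validated score shows how it does on points it has not seen.

```python
import random


def nearest_neighbor_predict(train_X, train_y, x):
    """Return the label of the training point closest to x (1-nearest neighbor)."""
    best = min(range(len(train_X)), key=lambda i: (train_X[i] - x) ** 2)
    return train_y[best]


def accuracy(train_X, train_y, test_X, test_y):
    """Fraction of test points whose 1-NN prediction matches the true label."""
    hits = sum(
        nearest_neighbor_predict(train_X, train_y, x) == t
        for x, t in zip(test_X, test_y)
    )
    return hits / len(test_X)


def k_fold_accuracy(X, y, k=5):
    """Average validation accuracy over k contiguous folds (X is already in random order)."""
    n = len(X)
    scores = []
    for i in range(k):
        val = range(i * n // k, (i + 1) * n // k)
        train = [j for j in range(n) if j not in val]
        scores.append(accuracy(
            [X[j] for j in train], [y[j] for j in train],
            [X[j] for j in val], [y[j] for j in val],
        ))
    return sum(scores) / k


random.seed(0)
# Hypothetical data: the true rule is "label 1 if x > 0.5", but about 20% of labels are flipped.
X = [random.random() for _ in range(200)]
y = [int(x > 0.5) if random.random() > 0.2 else int(x <= 0.5) for x in X]

train_acc = accuracy(X, y, X, y)  # scored on the very data the model memorized
cv_acc = k_fold_accuracy(X, y, k=5)
print(f"training accuracy: {train_acc:.2f}, 5-fold CV accuracy: {cv_acc:.2f}")
```

The training score is always 1.00 here, while the cross-validated score is noticeably lower because the flipped labels cannot be learned, only memorized.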

Cross-validation essentially challenges your model to handle different slices of the data, mimicking the variation it will meet in real-world use. It’s like taking your model to a boot camp, where it faces unexpected hurdles and you find out whether it is ready to conquer any challenge. Furthermore, it’s versatile: whether you’re working on regression, classification, or another data-driven problem, the procedure stays the same, and only the model and the scoring metric change. As a data scientist, you should put cross-validation high on your priority list, ensuring your models are not just built, but built to last.
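To illustrate that versatility, here is a from-scratch sketch in which one cross-validation loop serves both a regression model and a classifier; only the fitting function and the metric are swapped. The toy data and the helpers `cross_validate`, `fit_line`, and `fit_nearest_mean` are hypothetical, written for this example (in practice, scikit-learn’s `cross_val_score` plays a similar role).

```python
from statistics import mean


def cross_validate(fit, score, X, y, k=5):
    """Generic k-fold CV. `fit(X, y)` returns a predict function;
    `score(y_true, y_pred)` returns a number. Folds are contiguous blocks,
    so real data should be shuffled first."""
    n = len(X)
    fold_scores = []
    for i in range(k):
        val = range(i * n // k, (i + 1) * n // k)
        train = [j for j in range(n) if j not in val]
        predict = fit([X[j] for j in train], [y[j] for j in train])
        fold_scores.append(score([y[j] for j in val], [predict(X[j]) for j in val]))
    return mean(fold_scores)


def fit_line(X, y):
    """Regression: ordinary least squares for y = a*x + b with one feature."""
    mx, my = mean(X), mean(y)
    a = sum((x - mx) * (t - my) for x, t in zip(X, y)) / sum((x - mx) ** 2 for x in X)
    b = my - a * mx
    return lambda x: a * x + b


def fit_nearest_mean(X, y):
    """Classification: predict whichever class mean (class 0 or 1) x is closer to."""
    m0 = mean(x for x, t in zip(X, y) if t == 0)
    m1 = mean(x for x, t in zip(X, y) if t == 1)
    return lambda x: int(abs(x - m1) < abs(x - m0))


def mse(y_true, y_pred):
    return mean((t - p) ** 2 for t, p in zip(y_true, y_pred))


def accuracy(y_true, y_pred):
    return mean(int(t == p) for t, p in zip(y_true, y_pred))


X = list(range(20))
y_reg = [2 * x + 1 for x in X]      # toy regression target
y_cls = [int(x >= 10) for x in X]   # toy binary labels

print("regression CV MSE:", cross_validate(fit_line, mse, X, y_reg))
print("classification CV accuracy:", cross_validate(fit_nearest_mean, accuracy, X, y_cls))
```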

Understanding Variations in Cross-Validation Techniques

When delving into the nitty-gritty of enhancing predictive models through cross-validation, one quickly discovers that there are several types of cross-validation methods, each with its own twists and benefits. K-Fold Cross-Validation is a popular choice: the dataset is split into k roughly equal subsets, or folds, and the model is trained and validated k times. In each round, one fold serves as the validation set and the remaining k − 1 folds are used for training, so every slice of data gets its moment in the spotlight exactly once. Values such as k = 5 or k = 10 are common in practice.
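A minimal sketch of how the K-Fold splits can be generated, assuming you only need index lists (the helper `k_fold_splits` is hypothetical). It shuffles once, then cuts the indices into k folds whose sizes differ by at most one, following the same sizing rule as scikit-learn’s `KFold`: the first `n % k` folds get one extra sample.

```python
import random


def k_fold_splits(n, k, seed=None):
    """Yield (train_indices, val_indices) for k-fold cross-validation over n samples.

    Each index appears in exactly one validation fold."""
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and n")
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # shuffle once so folds are not tied to data order
    # The first n % k folds get one extra sample so that every index is used.
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        val = idx[start:start + size]
        train = idx[:start] + idx[start + size:]  # everything outside the current fold
        yield train, val
        start += size


for fold, (train, val) in enumerate(k_fold_splits(n=10, k=3, seed=42)):
    print(f"fold {fold}: train on {len(train)} samples, validate on {sorted(val)}")
```

With 10 samples and k = 3, the validation folds have sizes 4, 3, and 3, so the model is trained on 6, 7, and 7 samples respectively.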

To truly master cross-validation, one must understand the nuances of its various forms. Leave-One-Out Cross-Validation (LOOCV) sounds like it could be an annoying neighbor, yet it’s a meticulous method: it trains the model on all data points except one, tests on the one left out, and repeats this for every point. In other words, it is K-Fold Cross-Validation with k equal to the number of observations. Because each model is trained on almost all of the data, LOOCV gives a nearly unbiased estimate of performance, but it needs one model fit per observation, and that computational cost makes it better suited to smaller datasets. The eclectic range of cross-validation techniques means there’s always something to fit your specific needs, much like a wardrobe full of styling options!
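A minimal LOOCV sketch, assuming the simplest possible regressor (predict the mean of the training targets) so that the mechanics stay visible; `loocv_mse_of_mean` and the data are hypothetical. Note the fit counter: LOOCV trains one model per observation, which is where its cost comes from. In practice, scikit-learn provides `LeaveOneOut` for generating these splits.

```python
from statistics import mean


def loocv_mse_of_mean(y):
    """Leave-one-out CV for a mean predictor.

    Returns (mse, number_of_fits)."""
    errors, fits = [], 0
    for i in range(len(y)):
        train = y[:i] + y[i + 1:]  # every observation except the i-th
        prediction = mean(train)   # "fitting" the model on n - 1 points
        fits += 1
        errors.append((y[i] - prediction) ** 2)  # score on the single held-out point
    return mean(errors), fits


y = [3.0, 5.0, 4.0, 8.0, 6.0, 2.0]
mse, fits = loocv_mse_of_mean(y)
print(f"LOOCV MSE: {mse:.3f} from {fits} model fits")  # LOOCV MSE: 5.600 from 6 model fits
```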

Moreover, cross-validation is more than a mere validation method. It’s an invitation to experiment: because it gives each candidate model a fair score on data it did not train on, you can try different algorithms, features, or settings and keep the one that generalizes best. Imagine a world where your predictive models are so refined they could predict whether you’ll have bad hair days a month in advance. That’s the kind of refinement cross-validation helps you pursue!
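As an illustration of that kind of experimentation, here is a minimal sketch that uses 5-fold cross-validation to choose the number of neighbors for a k-nearest-neighbors regressor. The noisy toy data, the candidate values, and the helpers `knn_predict` and `cv_mse` are assumptions made up for this example; `n_neighbors` is the model setting being tuned and is unrelated to the number of folds.

```python
import random
from statistics import mean


def knn_predict(train_X, train_y, x, n_neighbors):
    """Average the targets of the n_neighbors training points closest to x."""
    nearest = sorted(range(len(train_X)), key=lambda i: abs(train_X[i] - x))[:n_neighbors]
    return mean(train_y[i] for i in nearest)


def cv_mse(X, y, n_neighbors, k=5):
    """k-fold CV mean squared error of k-NN regression (contiguous folds; X is in random order)."""
    n = len(X)
    fold_mse = []
    for f in range(k):
        val = range(f * n // k, (f + 1) * n // k)
        train = [j for j in range(n) if j not in val]
        tX, ty = [X[j] for j in train], [y[j] for j in train]
        fold_mse.append(mean((y[j] - knn_predict(tX, ty, X[j], n_neighbors)) ** 2 for j in val))
    return mean(fold_mse)


random.seed(1)
X = [random.uniform(0, 10) for _ in range(100)]
y = [x + random.gauss(0, 1.0) for x in X]  # hypothetical noisy linear trend

candidates = [1, 5, 15, 50]
scores = {c: cv_mse(X, y, c) for c in candidates}
best = min(scores, key=scores.get)  # keep the setting with the lowest CV error
for c in candidates:
    print(f"n_neighbors={c:>2}: CV MSE {scores[c]:.3f}")
print("selected:", best)
```

Every candidate is judged on the same held-out folds, so the comparison is fair. scikit-learn’s `GridSearchCV` automates this search-and-score loop.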

Real-World Impact of Cross-Validation

Beyond academia, cross-validation is a tool transforming industries. In finance, for instance, predictive models are used to forecast stock trends, helping investors make informed decisions. The healthcare industry employs predictive modeling to anticipate patient outcomes or potential epidemics, strengthening public health responses. Cross-validation plays a crucial role in checking that these models are robust and reliable, which can mark the difference between plausible projections and random guesses.

Goals of Cross-Validation Mastery

The ultimate aim of enhancing predictive models through cross-validation is simple yet profound: to build models that perform well on real data out there in the chaotic wild, not just on the data they were trained on. With accuracy as the prime goal, cross-validation guards against overfitting, where a model learns the minute details and noise in its training data at the expense of its predictive power. A model that has overfit scores noticeably worse on the held-out folds than on its training data, so the problem surfaces before deployment.

Furthermore, cross-validation encourages a culture of thorough evaluation among data scientists, instilling best practices in model assessment. It discourages shortcuts, urging professionals to leave no stone unturned in pursuit of the best possible models. In an era where data-driven decisions are the norm, the pressure to deliver reliable predictions is immense, and here cross-validation acts as a guiding beacon.

Having models that generalize well to new, unseen data is not a luxury; it’s a necessity. The confidence cross-validation brings to this goal is hard to overstate. As technology progresses and more data floods in, cross-validation remains a cornerstone method, reducing uncertainty about how models will perform and helping to make them more robust.

Cross-Validation in Practice: Insights from the Field

In the wise words of experienced data scientists, “A model is only as good as the data it’s trained on—and how it’s validated.” Cross-validation reinforces this sentiment, offering a sense of security and trust. When applied, it becomes not just a step in the model development process, but a mantra for excellence, a pledge towards ensuring that every predictive step is taken with care and precision.

Enhancing predictive models through cross-validation is an art, a science, and an endeavor that every data enthusiast should embrace. Indulge in its power today, and let your models not just exist; let them excel!

Key Elements in Enhancing Predictive Models Through Cross-Validation

In conclusion, embracing cross-validation in your model development process is vital for powerful and reliable predictions. By making it a routine part of how you evaluate models, you’re not merely adhering to a process; you’re setting a benchmark and broadening the horizons of what’s possible in the predictive modeling landscape. If you’re ready to leave inaccurate models in the dust, it’s time to champion cross-validation and witness its transformative power firsthand!