- Enhancing Model Performance with Cross-Validation

- Deep Dive into Enhancing Model Performance with Cross-Validation

- Actions Related to Enhancing Model Performance with Cross-Validation

- An In-Depth Look at Enhancing Model Performance with Cross-Validation

- Key Points on Enhancing Model Performance with Cross-Validation

- Cross-Validation: The Unsung Hero of Model Performance

Enhancing Model Performance with Cross-Validation

In today’s competitive landscape of data science and machine learning, strong model performance isn’t just desirable; it’s essential. A central goal for any data scientist or machine learning practitioner is to ensure that models not only perform well on training data but also make accurate predictions on unseen data. This is where enhancing model performance with cross-validation comes into play. Cross-validation is a time-tested, reliable technique for assessing how well your models generalize. Without it, overfitting or underfitting can go unnoticed, leading to unreliable predictions and disappointed stakeholders.

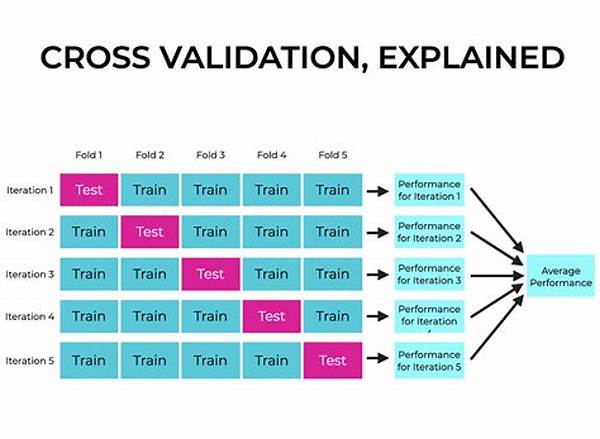

Cross-validation splits your dataset into multiple subsets or “folds,” rotating through different combinations of training and testing data to provide a robust evaluation metric. Imagine this process as a kind of model “stress test,” forcing it to prove its mettle across various scenarios. As a result, you gain a more comprehensive understanding of your model’s strengths and weaknesses. Not only does this technique enhance the credibility of your modeling efforts, but it also lends a sense of professionalism to your data science endeavors and can significantly boost stakeholder confidence.

The versatility of cross-validation can’t be overstated. Whether you’re working on logistic regression or a sprawling neural network, cross-validation has you covered. Not only does it provide a more realistic evaluation metric, but it also offers insights into potential data biases, revealing blind spots that could otherwise go unnoticed. By implementing this, you are effectively enhancing model performance with cross-validation, leading to smarter, more data-driven decisions.

—

The Mechanics of Cross-Validation

To understand how cross-validation works its magic, we must delve into its mechanics. Typically, the dataset is divided into “k” number of folds. The model is trained on “k-1” of these folds and tested on the remaining one, rotating the folds to ensure every data point gets to be in a test set. This process can be repeated several times as needed to get an average performance score.
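The rotation described above can be sketched in plain Python. This is a minimal illustration, not a library implementation: the helper name `k_fold_indices` and the sample count of 10 are assumptions made for the example, and the folds are contiguous rather than shuffled.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k contiguous folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        # Everything outside the current test fold is used for training.
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, test_idx
        start += size


folds = list(k_fold_indices(n_samples=10, k=5))
for i, (train_idx, test_idx) in enumerate(folds):
    print(f"fold {i}: train={train_idx} test={test_idx}")

# Collect the test indices across folds: each sample appears exactly once.
all_test = sorted(idx for _, test_idx in folds for idx in test_idx)
```

In practice you would train a model on `train_idx`, score it on `test_idx`, and average the k scores.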

By using this method, you get a robust assessment of the model, making overfitting or underfitting far easier to spot before deployment. Cross-validation does not remove these problems by itself, but it tells you when they are present. Trust us; once you experience the power of enhancing model performance with cross-validation, you won’t look back.

—

Deep Dive into Enhancing Model Performance with Cross-Validation

Cross-validation is nothing short of a revelation in the data science field—often described as a “model whisperer.” But why is it so effective, and how can we harness its full powers to optimize our models?

Cross-Validation Explored

The technique of cross-validation isn’t just about slicing and dicing data. It’s about bringing a systematic approach to model validation. First, cross-validation offers an excellent way to detect and mitigate overfitting. When models are trained exclusively on one dataset, they risk becoming experts at only that data, similar to acing one test but struggling with different versions. Cross-validation addresses this by always scoring the model on data it did not see during training, so the results show how well it actually generalizes.

Another advantage is its role in hyperparameter tuning. With cross-validation, you can not only evaluate the model’s performance but also experiment with different hyperparameters, leading to a finely tuned model that balances bias and variance. In other words, if the model were a musician, cross-validation would be its sheet music, guiding it to play not only loudly and clearly but also in tune.
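As one possible illustration of tuning with cross-validation, the sketch below uses Scikit-learn's `GridSearchCV` to try several values of the regularization strength `C` for a logistic regression. The synthetic dataset, the candidate grid, and the choice of accuracy as the metric are all assumptions made for the example.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV

# Synthetic binary classification data (an assumption for illustration).
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Candidate hyperparameter values; each one is scored with 5-fold CV.
param_grid = {"C": [0.01, 0.1, 1.0, 10.0]}
search = GridSearchCV(
    LogisticRegression(max_iter=1000),
    param_grid,
    cv=5,
    scoring="accuracy",
)
search.fit(X, y)

print("best C:", search.best_params_["C"])
print("mean CV accuracy for best C:", round(search.best_score_, 3))
```

Because the best score was chosen from several candidates, it tends to be slightly optimistic; a separate held-out test set is a common way to confirm the final number.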

Why Everyone’s Talking About It

Even industry veterans agree—enhancing model performance with cross-validation is a game-changer. In competitive environments, split-second decision-making often relies on the efficacy of machine learning models. Thus, a model’s generalization capabilities aren’t just numerically attractive; they’re ethically crucial for businesses that pledge accountability.

Finally, cross-validation can even provide a slight edge when making business cases or presentations. Clients and stakeholders are more likely to trust outputs backed by comprehensive validation strategies. It’s the difference between saying “trust me” and “trust my tried and true methods.”

A Practical Guide to Cross-Validation

For those eager to dive in, start simple. Implementing k-fold cross-validation might seem daunting at first, but it’s like riding a bike—you only get better with practice. Use libraries like Scikit-learn in Python to automate many cross-validation tasks. Over time, you’ll find that enhancing model performance with cross-validation becomes second nature, a default staple in your data science toolkit.
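A minimal starting point with Scikit-learn might look like the following. The iris dataset and logistic regression model are stand-ins chosen for the example, not a recommendation for any particular problem.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

X, y = load_iris(return_X_y=True)
model = LogisticRegression(max_iter=1000)

# cv=5 runs 5-fold cross-validation; for classifiers, Scikit-learn
# stratifies the folds by class label when given an integer.
scores = cross_val_score(model, X, y, cv=5)

print("per-fold accuracy:", scores.round(3))
print("mean:", round(scores.mean(), 3), "std:", round(scores.std(), 3))
```

Reporting the standard deviation alongside the mean gives a sense of how much the score moves from fold to fold.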

For practical applications, consider what kinds of questions you’re aiming to solve. Are you focused on sales predictions, customer churn analysis, or fraud detection? Different contexts may require subtle shifts in how cross-validation is applied, but the core principle remains steady: enhance model performance with cross-validation for reliable and trustworthy outputs.

Making Cross-Validation Work for You

Before setting up cross-validation, consider factors such as dataset size and available computational resources. These factors can influence the choice between methods like k-fold, stratified k-fold, or leave-one-out. Don’t fret, though: even for large datasets, modern hardware can usually handle k-fold setups with relative ease. Leave-one-out is the exception, since it fits one model per sample and becomes expensive as the dataset grows.
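To make the trade-offs between these methods concrete, the sketch below compares how each Scikit-learn splitter divides a small, deliberately imbalanced label vector. The labels (90 negatives followed by 10 positives) are hypothetical and exist only to show the difference in behavior.

```python
import numpy as np
from sklearn.model_selection import KFold, LeaveOneOut, StratifiedKFold

# Hypothetical imbalanced labels: 90 negatives followed by 10 positives.
y = np.array([0] * 90 + [1] * 10)
X = np.zeros((len(y), 1))  # feature values do not affect how rows are split

splitters = {
    "k-fold": KFold(n_splits=5),
    "stratified k-fold": StratifiedKFold(n_splits=5),
    "leave-one-out": LeaveOneOut(),
}

# Number of model fits each strategy would require.
n_splits = {name: s.get_n_splits(X, y) for name, s in splitters.items()}
print(n_splits)

# Count positives in each test fold for the two k-fold variants.
positives_per_fold = {
    name: [int(y[test].sum()) for _, test in splitters[name].split(X, y)]
    for name in ("k-fold", "stratified k-fold")
}
print(positives_per_fold)
```

Unshuffled k-fold puts every positive in the last fold here, while stratified k-fold keeps the class ratio the same in each fold. Leave-one-out needs one fit per sample, which is why it is usually reserved for small datasets.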

—

Actions Related to Enhancing Model Performance with Cross-Validation

Understanding is only part of the battle. Here are actionable steps you can take right out of the gate:

—

An In-Depth Look at Enhancing Model Performance with Cross-Validation

In the ever-evolving terrain of machine learning, the quest for better performance is unending. Whether it’s identifying emerging patterns in retail sales or predicting stock market shifts, enhancing model performance is a universal ambition. Enter cross-validation—one of the most reliable and robust methods to amplify model efficacy.

The Role of Cross-Validation

Let’s put it this way: if your model were a contestant on a quiz show, cross-validation would be its ultimate rehearsal strategy, prepping it for any curveball questions on live television. The core principle is to use the entirety of your dataset for both training and validation, while never letting the same rows serve as training and test data in the same round, since that overlap leads to misleading outcomes. Cross-validation divides the data into sections and rotates through them so that each section serves in the training set in some rounds and as the test set in one.

By doing so, you’re effectively exposing overfitting, a common affliction in machine learning models that can seriously hamper their ability to generalize. Think of it as the flu for your model’s performance. Furthermore, cross-validation can help in identifying redundant features that add noise, rather than strength, to your models. These insights are invaluable, adding another layer of reliability to your analyses.
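One way cross-validation can help flag features that add little is recursive feature elimination with cross-validated scoring, available in Scikit-learn as `RFECV`. The sketch below is an illustration under assumptions: the synthetic dataset (3 informative and 2 redundant features out of 10) and the logistic regression estimator are chosen for the example, and other feature-selection approaches would be equally valid.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFECV
from sklearn.linear_model import LogisticRegression

# Synthetic data: 3 informative features, 2 redundant ones, the rest noise.
X, y = make_classification(
    n_samples=300,
    n_features=10,
    n_informative=3,
    n_redundant=2,
    random_state=0,
)

# Drop one feature at a time, scoring each candidate subset with 5-fold CV.
selector = RFECV(LogisticRegression(max_iter=1000), step=1, cv=5, scoring="accuracy")
selector.fit(X, y)

print("number of features kept:", selector.n_features_)
print("kept-feature mask:", selector.support_)
```

The selected subset depends on the estimator and the scoring metric, so treat the mask as a hint for further investigation rather than a final verdict.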

Why Cross-Validation Makes a Difference

There’s a magical quality about enhancing model performance with cross-validation that even seasoned data scientists can’t help but appreciate. It’s the ability to instill confidence by generating performance metrics closer to real-world expectations. Imagine walking into a high-stakes poker game having practiced only against your younger cousins. Cross-validation ensures you’re as prepared for Vegas as you are for family night.

Numbers speak a universal truth, and they do so persuasively. With cross-validation, performance is aggregated across folds, yielding an estimate that is far less sensitive to the luck of a single train-test split. This ensures that your performance metrics aren’t just theoretical but practical enough to guide business decisions effectively.
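The variability of a single train-test split can be observed directly. The sketch below, which assumes a small synthetic dataset with some label noise, scores the same model on ten different random splits and then on 5-fold cross-validation. Exact numbers will depend on the data and library version, so none are claimed here.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score, train_test_split

# Small synthetic dataset with 10% label noise (an assumption for illustration).
X, y = make_classification(n_samples=200, n_features=10, flip_y=0.1, random_state=0)
model = LogisticRegression(max_iter=1000)

# Score the same model on ten different random 80/20 splits.
single_split_scores = []
for seed in range(10):
    X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.2, random_state=seed)
    single_split_scores.append(model.fit(X_tr, y_tr).score(X_te, y_te))

# Score it once with 5-fold cross-validation and average the folds.
cv_scores = cross_val_score(model, X, y, cv=5)

print("single-split range:", min(single_split_scores), "to", max(single_split_scores))
print("5-fold CV mean:", round(cv_scores.mean(), 3))
```

Averaging over folds reduces the influence of any one lucky or unlucky split, though it does not remove variability entirely.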

Implementing and Mastering Cross-Validation

There’s no one-size-fits-all when it comes to cross-validation, which is why data folk love it so much. Choose from diverse methodologies like k-fold or leave-one-out to suit your data’s unique characteristics and business objectives. Remember, the goal remains the same: to provide a reliable gauge of how our predictors perform on unseen data, thereby enhancing model performance with cross-validation.

For those diving into implementation, there are numerous resources and libraries like Python’s Scikit-learn that offer pre-built functions, cutting down the time required to set up cross-validation. So, whether you’re a lone wolf data wrangler or part of a bustling analytics team, mastering cross-validation techniques can distinguish you as a data whisperer in a world that’s constantly inundated with noise.

Examples of Excellence

Let’s break down an example: imagine you’re tasked with creating a predictive model for customer retention. You’ve got historical data, but it’s crucial that your predictions hold water and save the company money. Cross-validation allows you to assess how well your model’s predictions align with actual customer behavior. Thus, the marginal investment of time and computational power into cross-validation pays dividends.

After all, when enhancing model performance with cross-validation becomes a part of your DNA, it elevates not just your projects but your professional standing, ensuring you’re always a step ahead of the curve.

Key Points on Enhancing Model Performance with Cross-Validation

—

Cross-Validation: The Unsung Hero of Model Performance

At the intersection of daring innovation and methodical precision lies cross-validation, a technique often hailed but rarely understood. Like an artist with a paintbrush or a chef with their signature dish, enhancing model performance with cross-validation requires an exquisite blend of science and art. And let’s be honest—doesn’t everyone want to be known as a bit of an artist in their field?

The Art of Model Validation

Cross-validation isn’t just for the data nerds; it’s a creative approach to peeling back the layers of your data and optimally tuning your models. Picture yourself as a master mechanic—cross-validation is your diagnostic tool, fixing and fine-tuning every part until the model performs like a finely tuned race car. Beyond its technical benefits, cross-validation cultivates a sense of trust in your modeling process, providing evidence-based assurances to stakeholders and peers alike.

By rotating datasets in cycles, cross-validation delivers insights you couldn’t have predicted—literally. It’s like wearing x-ray glasses, allowing you to peer into the DNA of your data and craft strategies tailored for effectiveness. And once you’ve tasted the success of enhancing model performance with cross-validation, you won’t settle for anything less.

Elevating Data Science Practices

Enhancing model performance is more than just refining numbers on a screen—it’s about storytelling. With cross-validation, you can convincingly narrate the reliability of your models, embedding confidence in both the process and outcomes. It’s the ultimate synergy of creativity and analytics, elevating standard data practices from the mundane to the extraordinary.

In summary, whether you’re crafting algorithms to save cities from traffic woes or dissecting consumer behavior for marketing insights, cross-validation provides the foundational confidence needed to navigate the unknown. Always remember: a little cross-validation goes a long way in transforming good models into great ones.