Dropout Technique to Prevent Overfitting

Welcome to the world of machine learning, where algorithms learn from data, discern patterns, and make decisions. The excitement of seeing a model predict trends or identify objects is akin to teaching a parrot to talk: mesmerizing and rewarding. But there’s a hiccup in paradise, and it goes by the name of overfitting. Overfitting happens when a model learns the training data, noise and quirks included, so closely that it performs poorly on data it hasn’t seen before. Imagine memorizing every question and answer in a textbook but failing miserably when faced with different questions. The dropout technique to prevent overfitting is the knight in shining armor in this saga, providing a simple yet elegant solution to this problem.

Training a model is a lot like following a fitness regime, where balance is crucial. Focus too much on one aspect and you might end up with a muscle imbalance, a problem that would make any weightlifter cringe. This is precisely where the dropout technique to prevent overfitting finds its relevance. It acts like a fitness coach, telling some neurons to rest while others do the workout, which leads to a well-rounded performance. Doesn’t it sound like the perfect workout discipline for your algorithms?

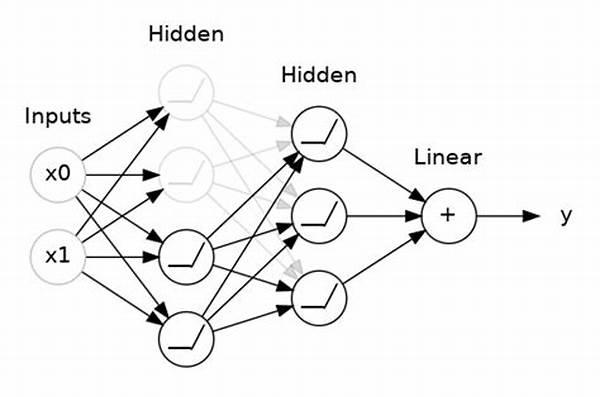

In a world where data is the new oil and models are sophisticated engines, dropout works like a fuel additive that keeps the engine running cleanly and prevents rust: the rust of overfitting. On every training step (not once per epoch), dropout randomly switches off a fraction of the neurons, each one dropped with some probability p, which injects noise into training and improves the model’s ability to generalize. Even celebrities have cheat days, right? Dropout gives neurons that luxury, yet none of them gets to become a couch potato: a neuron resting on this step is back at work on the next, and because it can never count on its neighbors showing up, it has to pull its own weight. Who knew neural networks enjoyed a good break just like us?
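To make “switching off neurons” concrete, here is a minimal sketch in plain Python. It assumes the “inverted dropout” formulation, which is what PyTorch’s `nn.Dropout` and Keras’s `Dropout` layer implement: each activation is zeroed with probability `p`, and the survivors are scaled up by `1 / (1 - p)` so the layer’s expected output stays the same. The function name `dropout` and its parameters are our own illustration, not any library’s API.

```python
import random


def dropout(activations, p=0.5, training=True, rng=None):
    """Inverted dropout on a list of activations (illustrative sketch).

    Each activation is zeroed with probability p; survivors are scaled by
    1 / (1 - p) so the expected value of every output equals its input.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        # At inference time (or with p = 0) dropout does nothing.
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p
    # rng.random() is uniform on [0, 1), so each unit survives with probability `keep`.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


layer_output = [0.2, 1.5, -0.7, 3.0, 0.9, -1.2]
print(dropout(layer_output, p=0.5, rng=random.Random(0)))
```

Passing a seeded `random.Random` makes the mask reproducible; in a real network the same idea is applied to a whole tensor of activations, and a fresh mask is drawn on every forward pass.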

So, why not embrace dropout? It’s the cool kid in the neural network neighborhood, and using it doesn’t require a PhD in rocket science. With dropout, your model stays robust and poised to tackle real-world data with the exuberance of a Labrador in a field of tennis balls. Ready to give your model a makeover with the dropout technique to prevent overfitting? Join the revolution!

Embracing the Dropout Technique to Prevent Overfitting

When weighing strategies to make models more robust, the dropout technique to prevent overfitting stands out as a popular choice. Since its introduction in the early 2010s, the idea has changed the way neural networks are trained. Think of it like a surprise party where you never know who will show up: because neurons are dropped at random, the network can’t rely on any particular one and is forced to learn a more robust, redundant representation. A significant upside? Practitioners and researchers alike praise its simplicity and effectiveness.

Research and Validation of Dropout’s Effectiveness

Dropout is backed by research, not just buzz in machine learning communities. The work that introduced it, by Geoffrey Hinton, Nitish Srivastava, and colleagues, argues that dropout reduces complex co-adaptations between neurons: since no unit can count on particular partners being present, each one is coaxed into learning features that are useful on their own. Its reception was akin to a blockbuster movie release, and dropout quickly became one of the standard regularizers in deep learning.

Exploring the Dropout Technique

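Let’s delve a bit deeper into what makes this method shine. Imagine neurons networking like buddies at a party: when some step outside for a breath of fresh air, the others are forced to mingle, and everyone grows through the interactions. Dropout recreates this social environment inside a neural network. In more technical terms, each training step works with a different, randomly “thinned” version of the network, so training with dropout resembles training a huge ensemble of sub-networks that share the same weights, and the full network used at test time behaves approximately like an average of that ensemble. It’s a bit like sending a different mixed group of guests to a team-building retreat every weekend.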
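The “party” picture has a concrete counterpart: every training step draws a fresh random keep-mask, and each mask selects one thinned sub-network. With n droppable units there are 2^n possible masks, so even a tiny layer offers far more sub-networks than a model could ever visit individually. The sketch below, using hypothetical helper names and a toy layer of 10 units, simply counts how many distinct sub-networks 1,000 training steps happen to visit.

```python
import random


def sample_mask(n_units, p, rng):
    """Return a keep-mask: 1 means the unit stays, 0 means it sits this step out."""
    return tuple(1 if rng.random() >= p else 0 for _ in range(n_units))


def count_distinct_subnetworks(n_units, p, steps, seed=0):
    """Draw one mask per simulated training step and count how many
    distinct thinned sub-networks were visited."""
    rng = random.Random(seed)
    seen = set()
    for _ in range(steps):
        seen.add(sample_mask(n_units, p, rng))
    return len(seen)


n_units = 10
print("possible sub-networks:", 2 ** n_units)
print("visited in 1000 steps:", count_distinct_subnetworks(n_units, 0.5, 1000))
```

Real layers have hundreds or thousands of units, so in practice almost every training step sees a sub-network it has never seen before, all of them sharing one set of weights.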

Understanding the Dropout Technique to Prevent Overfitting

The Goal of Implementing Dropout

The magic behind dropout lies in its ability to build resilience into neural networks. When the layers of a model are forced to work with partial information, it’s like training a cyclist to ride with one hand tied behind their back: they become adept at handling unexpected roadblocks. The aim isn’t memorization but adaptability, so that the model doesn’t just expect the usual but gracefully handles the unexpected curveball.
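The “partial information” only applies during training. At inference time dropout is switched off and every neuron takes part. Under the inverted-dropout formulation (an assumption here, though it is what common frameworks implement), rescaling the surviving activations by `1 / (1 - p)` during training keeps the average signal identical in both modes, so nothing has to be adjusted at test time. This sketch checks that numerically for a single activation; `inverted_dropout` and `average_training_output` are illustrative names, not a library API.

```python
import random


def inverted_dropout(x, p, training, rng):
    """Training: zero x with probability p, otherwise scale it by 1/(1-p).
    Inference: return x unchanged (all neurons active)."""
    if not training:
        return x
    return x / (1.0 - p) if rng.random() >= p else 0.0


def average_training_output(x, p, trials, seed=0):
    """Average the training-mode output over many random masks."""
    rng = random.Random(seed)
    return sum(inverted_dropout(x, p, True, rng) for _ in range(trials)) / trials


x, p = 2.0, 0.3
print("inference output:", inverted_dropout(x, p, training=False, rng=None))
print("mean training output:", round(average_training_output(x, p, 100_000), 2))
```

Individual training outputs jump between 0 and x / (1 - p), but their mean converges to x, which is exactly what the full network produces at inference time.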

Imagine you’re driving a sophisticated car packed with cutting-edge technology, and dropout is the software update that keeps it responsive on unfamiliar terrain. The dropout technique to prevent overfitting does just that for your models: it helps them think on their feet when they meet data they have never seen before. To sum it up, dropout isn’t just a method; it’s the seasoned yet trendy upgrade many neural networks need to stay not just relevant but ahead in the AI race. Embrace dropout, because keeping your model in tip-top shape is the game, and winning is the aim!

Benefits of Using Dropout in Model Training

In short, dropout is simple to add, discourages neurons from co-adapting, and helps a model generalize to data it has never seen. It adds no extra cost at inference time, when every neuron is active, though the drop rate still needs tuning and training usually takes longer to converge. Whether you’re a novice or an expert, it’s one of the first tools worth reaching for. Ready to optimize your algorithm’s learning journey? Dive into the world of dropout and watch your neural networks transform!