The ever-evolving world of neural networks offers endless possibilities, and sometimes endless headaches. One of the simplest and most effective tools for taming those headaches is the dropout technique in neural networks. This method has become a standard part of the toolkit for many data scientists and machine learning practitioners. Imagine building an AI model that forecasts financial markets or analyzes medical images with high precision. There is always the risk of overfitting: the model becomes overly complex and memorizes the “noise” in its training data along with the real signal, so it performs well on examples it has already seen but poorly on new ones. This is where the dropout technique in neural networks comes into play.

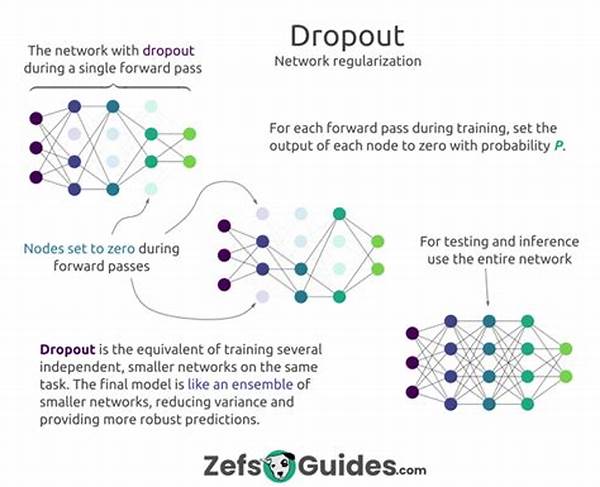

The dropout technique in neural networks acts as a powerful regularizer, preventing the model from leaning too heavily on any one neuron. By randomly “dropping out” neurons during training, the network becomes more robust and generalizes better when confronted with new, unseen data. It pushes the model to learn diverse, redundant features, much like a multi-talented team rather than a group that relies on a superstar to save the day.

Used well, dropout can noticeably narrow the gap between training and validation performance, letting a model keep its capacity while reducing its error on unseen data. Is it magic? No, it’s just intelligent engineering. Dive into the dropout technique in neural networks, and you may find your models generalizing better than you expected.

Why Dropout Technique Matters

The dropout technique in neural networks matters because it offers an ingenious solution to the long-standing problem of overfitting. By randomly choosing which neurons “drop out” on each training step, it ensures that the model doesn’t rely too heavily on any particular neuron or feature. Imagine a basketball team where every player is capable of scoring. Even if one star player has an off day, the team can still win. Likewise, dropout spreads the network’s reliance across many neurons, fostering a more adaptable, formidable model.

—

Introduction

In the vast, intricate landscape of neural networks, one technique stands out for its simplicity and effectiveness: dropout. Whether you’re a newcomer to AI or a seasoned machine learning expert, this technique offers valuable benefits, which is why it has become a staple of model development. Imagine constructing complex neural networks without fearing the proverbial boogeyman of machine learning: overfitting. Dropout acts as a safety net, keeping your hard work from drowning in unnecessary complexity and helping your model learn patterns that generalize beyond the training data.

The concept of dropout might remind you of practicing a game to sharpen your skills: try, fail, learn, and repeat until mastery is achieved. Unlike ordinary practice, though, dropout encourages neurons to work together without leaning on each other too hard, so that no neuron depends on a specific partner being present. Think of dropout as a drill for neurons that nudges them into forming the most reliable pathways possible.

Dropout is not just for rookies; seasoned professionals find its advantages compelling too. It is often competitive with other regularization methods, such as weight decay, and is frequently combined with them. Intrigued yet? Let’s delve deeper into why the dropout technique in neural networks works so well.

How Dropout Technique Works

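The dropout technique may sound complex, but the core idea is straightforward: during each training iteration, every node (neuron) in a dropout layer is randomly “turned off” with some probability p, called the dropout rate. A dropped neuron is temporarily ignored: it contributes nothing to the network’s output, and no gradient flows through it during backpropagation on that step. At test time every neuron is active; to keep activations on the same scale as during training, outputs are scaled by the keep probability 1 - p at test time or, in the common “inverted dropout” variant, by 1/(1 - p) during training. As in a game of musical chairs, neurons must stay effective even when certain “players” are missing, which improves how well the model performs on new data.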
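The mechanism described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a framework implementation: the function name `dropout_forward`, the list-based activations, and the example values are all hypothetical, and the sketch assumes the “inverted dropout” variant, which scales surviving activations by 1/(1 - p) during training so the layer can be used unchanged at test time.

```python
import random
from typing import List, Tuple


def dropout_forward(
    activations: List[float], p: float, rng: random.Random
) -> Tuple[List[float], List[int]]:
    """Apply inverted dropout to one layer's activations during training.

    Each unit is dropped independently with probability p. Surviving units
    are scaled by 1 / (1 - p) so the expected value of every output equals
    the original activation, which lets inference use the layer unchanged.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("dropout rate p must be in [0, 1)")
    keep_prob = 1.0 - p
    # mask[i] == 1 means unit i survives this step; 0 means it is "turned off".
    mask = [1 if rng.random() < keep_prob else 0 for _ in activations]
    outputs = [a / keep_prob if m else 0.0 for a, m in zip(activations, mask)]
    return outputs, mask


# A dropped unit outputs exactly 0.0, so its gradient on this step is also 0:
# nothing flows back through it during backpropagation.
rng = random.Random(0)
hidden = [0.8, -1.2, 0.5, 2.0, 0.1]
out, mask = dropout_forward(hidden, p=0.5, rng=rng)
print(mask)  # which units survived this step
print(out)   # survivors are doubled (1 / (1 - 0.5)); dropped units are 0.0
```

In practice you would rely on your framework’s built-in layer (for example, PyTorch’s `torch.nn.Dropout` or the Keras `Dropout` layer) rather than hand-rolling this, but the arithmetic is the same.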

Introducing this randomness, like adding a wild twist to a puzzle, forces the network to find solutions that do not hinge on specific neurons or on narrow patterns in the training data. The result is a well-rounded model whose solutions are more likely to hold up on new and unfamiliar inputs.

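The question is, should dropout be your go-to method for building robust models? For many architectures, especially those with large fully connected layers, it is a strong default, though the rate needs tuning and dropout is often combined with other regularizers.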

Practical Applications of Dropout

Dropout is used across many industries. In healthcare, models trained with dropout help identify anomalies in medical images, assisting in early diagnosis. In finance, dropout helps forecasting models avoid overfitting to historical noise, which matters in volatile markets. In autonomous vehicles, regularization techniques such as dropout help perception networks generalize to driving conditions they did not see during training.

As neural networks continue to evolve, dropout remains one of their foundational regularization tools. It is versatile enough to appear in models across nearly every industry that relies on AI. With dropout, machine learning models do not just survive contact with new data; they thrive.

—

Key Highlights of the Dropout Technique

Purpose of the Dropout Technique

The dropout technique in neural networks spreads the learning process across the model’s neurons. How so, you ask? Because any neuron may be switched off on a given training step, a few neurons cannot dominate and dictate the model’s direction; the others must learn to carry useful information as well. This approach not only curbs overfitting but also promotes a balance that improves the network’s predictive ability.

Such a method emphasizes sharing the “burden” of learning. Dropout encourages neurons to collaborate instead of relying on a single neuron or feature. This leads to more robust models that handle real-world data with grace and aplomb. It’s akin to a well-oiled team whose members know each other’s strengths and weaknesses and cover for one another seamlessly.

The dropout technique in neural networks is more than a procedural trick; it changes how we think about building, training, and deploying models. By injecting randomness and encouraging all neurons to contribute, dropout helps keep your model robust and reliable once it is deployed. It also shows how a simple idea can turn regularization theory into easily applicable practice.

—

Understanding Dropout Technique in Neural Networks

The Science Behind Dropout

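The dropout technique in neural networks stands on solid scientific ground. It uses random sampling during training to mitigate overfitting. Each training step samples a different “thinned” subnetwork, and because all these subnetworks share the same weights, dropout behaves like training a large ensemble of models at once; using the full network with scaled activations at test time approximates averaging that ensemble’s predictions. Imagine guarding a vast treasure trove where no single item may become so valuable that it draws unwanted attention. Similarly, dropout limits reliance on any single neuron, keeping the learning process balanced.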
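One way to see the ensemble interpretation concretely is with a single linear neuron. The sketch below, a hypothetical example with made-up weights and inputs (`thinned_output` and `full_output` are illustrative names), averages the neuron’s output over many randomly thinned versions of its inputs and compares it with the full, undropped output used at inference. With inverted-dropout scaling, each input’s expected contribution is unchanged, so the two numbers should agree closely.

```python
import random
from typing import List


def thinned_output(weights: List[float], inputs: List[float], p: float,
                   rng: random.Random) -> float:
    """Output of one linear neuron when its inputs pass through inverted dropout.

    Each call samples a fresh mask, i.e. a different "thinned" subnetwork
    that shares the same weights as every other subnetwork.
    """
    keep_prob = 1.0 - p
    total = 0.0
    for w, x in zip(weights, inputs):
        if rng.random() < keep_prob:    # this input unit survives
            total += w * x / keep_prob  # inverted-dropout scaling
    return total


def full_output(weights: List[float], inputs: List[float]) -> float:
    """Output of the full network at inference time: no units dropped."""
    return sum(w * x for w, x in zip(weights, inputs))


# Illustrative values only.
weights = [0.4, -0.7, 1.1, 0.25]
inputs = [1.0, 0.5, -0.3, 2.0]
rng = random.Random(42)

samples = 100_000
ensemble_mean = sum(thinned_output(weights, inputs, 0.5, rng)
                    for _ in range(samples)) / samples

print(f"full network:         {full_output(weights, inputs):.4f}")
print(f"mean of thinned nets: {ensemble_mean:.4f}")
```

For a purely linear unit the agreement is exact in expectation. Once nonlinearities sit between layers, the single full-network pass is only an approximation of the ensemble average, which is why dropout is usually described as approximate model averaging.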

Why Randomness Works

Randomness in dropout is not chaotic; it’s purposeful. Because a fresh random set of neurons is dropped on every step, the model is constantly reshuffled, forcing each neuron to learn features that are useful on their own or in many different combinations with other neurons. As a result, no single neuron becomes indispensable, which improves the overall model’s ability to generalize.
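The claim that no neuron stays indispensable can be checked by simply counting. This hypothetical sketch (the helper `sample_mask` and the layer size are illustrative assumptions) draws a fresh mask on every simulated training step and records how often each unit survives and how many distinct subnetworks are visited.

```python
import random
from typing import List


def sample_mask(n_units: int, p: float, rng: random.Random) -> List[int]:
    """Draw a fresh dropout mask: each unit is dropped (0) with probability p."""
    return [0 if rng.random() < p else 1 for _ in range(n_units)]


rng = random.Random(7)
n_units, p, steps = 8, 0.5, 10_000

keep_counts = [0] * n_units
distinct_masks = set()
for _ in range(steps):
    mask = sample_mask(n_units, p, rng)
    distinct_masks.add(tuple(mask))
    for i, kept in enumerate(mask):
        keep_counts[i] += kept

# Every unit is present on roughly (1 - p) of the steps: none is always there.
print([round(c / steps, 3) for c in keep_counts])
# Many different subnetworks are visited (at most 2 ** n_units = 256 here).
print(len(distinct_masks))
```

Each unit is present on roughly 1 - p of the steps, and the run visits many of the 256 possible subnetworks, so no unit can count on any particular partner being active.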

The dropout technique in neural networks is indeed a celebration of randomness—a reminder that sometimes the best solutions arise from letting go and allowing natural processes to take their course. Undoubtedly, the dropout effect is like orchestrating an ensemble where every instrument gets a solo, culminating in a harmonious symphony that is both beautiful and effective in tackling real-world problems.

—

Tips for Implementing Dropout Technique

Best Practices:
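Two practices matter most when implementing dropout: disable it at inference time, and choose the rate per layer. The sketch below uses a hypothetical `DropoutLayer` class with explicit `train()` and `eval()` modes, loosely mirroring how frameworks behave (PyTorch’s `nn.Dropout` drops units after `model.train()` and acts as the identity after `model.eval()`; the Keras `Dropout` layer only drops units when called in training mode, such as inside `fit()`). The rates shown, 0.2 for inputs and 0.5 for hidden layers, are commonly cited starting points rather than guarantees; tune them on a validation set.

```python
import random
from typing import List, Optional


class DropoutLayer:
    """Hypothetical minimal dropout layer with explicit train/eval modes."""

    def __init__(self, p: float, seed: Optional[int] = None) -> None:
        if not 0.0 <= p < 1.0:
            raise ValueError("p must be in [0, 1)")
        self.p = p
        self.training = True
        self._rng = random.Random(seed)

    def train(self) -> None:
        self.training = True

    def eval(self) -> None:
        self.training = False

    def __call__(self, x: List[float]) -> List[float]:
        # At inference (or with p == 0) the layer is the identity function;
        # inverted scaling during training keeps the two modes consistent.
        if not self.training or self.p == 0.0:
            return list(x)
        keep_prob = 1.0 - self.p
        return [v / keep_prob if self._rng.random() < keep_prob else 0.0
                for v in x]


# Lighter dropout on inputs, heavier on hidden layers (starting points only).
input_dropout = DropoutLayer(p=0.2, seed=0)
hidden_dropout = DropoutLayer(p=0.5, seed=1)

features = [0.3, 1.5, -0.8, 0.9]
print(input_dropout(features))         # training mode: some units zeroed, rest scaled
print(hidden_dropout([1.0, 1.0, 1.0]))  # each entry is either 0.0 or 2.0

input_dropout.eval()                   # switch off before validation or deployment
print(input_dropout(features))         # eval mode: features returned unchanged
```

Forgetting the switch to evaluation mode is a common bug: the model keeps dropping units at prediction time, and its outputs become noisy and nondeterministic.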

Descriptive Insight on Dropout

In the intricate dance of developing AI models, dropout is a graceful yet powerful step. Within this algorithmic ballet, it serves not only as a tool for better performance but also as an ally for developers and data scientists. Creating great models requires patience, trial, and strategy, and dropout is one of the simplest ways to make that effort pay off on unseen data.

The dropout technique is akin to reducing a chef’s over-reliance on salt: it encourages the discovery of other flavors, making the final dish more balanced and delightful. For businesses and experts working with data, dropout becomes not just a method but a philosophy. It teaches us to build systems that learn more broadly by distributing the work of learning more evenly across neurons, much like how sharing tasks among team members can drive a project to success faster.

As we continue to explore AI and machine learning, the dropout technique in neural networks remains a bright beacon, guiding practitioners toward models that generalize well. Simple to add and consistently effective, dropout has become a vital mechanism in modern machine learning.

—

Dropout Technique in Neural Networks at a Glance

Avoiding Overfitting

In machine learning, one of the most intimidating foes is overfitting, and the dropout technique in neural networks is one of the most effective ways to fight it. This ally helps keep our painstakingly built models from latching onto overly specific patterns that serve no purpose in real-world applications. By randomly deactivating neurons during training, dropout keeps models on their toes, forcing them to learn patterns that do not depend on any particular neuron being present.

Excelling with Dropout

When applying the dropout technique in neural networks, you are essentially striking a deal with the bias-variance trade-off: you accept a harder training problem, and possibly a small increase in bias, in exchange for lower variance. This trade leads to models that generalize better and are equipped to handle new data, much like an experienced detective solving fresh mysteries each day. Models trained with dropout often stand out for their robustness and flexibility.

Neuron Diversity

Consider dropout as an exercise in creating diversity within the network. Each neuron must learn to be useful in an ever-changing environment, contributing on its own without relying on other neurons to do the heavy lifting. This leads to models that are both robust and agile, akin to a well-rehearsed orchestra where every player takes center stage at one point or another.

Future of Machine Learning

As we march into an age where neural networks underpin groundbreaking advances, techniques like dropout help light the path forward. Their ability to make large, complex networks generalize reliably has made them standard tools for practitioners in the field. So let the dropout technique in neural networks be a guiding star on your journey through artificial intelligence.