Certainly! Here’s a structured article following your detailed requirements about “dimensionality reduction for feature selection”:

—

In the vast realm of data science, the phrase “dimensionality reduction for feature selection” acts like a mysterious enigma and a beacon of hope for many data enthusiasts. Imagine a world where your data sets are neatly simplified, making your predictive models as smooth as butter. That’s precisely what dimensionality reduction achieves—it trims the excess fat, leaving the juicy bits to vogue your algorithms. It’s like going from a tangled forest to a well-pruned garden. This isn’t just another fancy technique; it’s the secret weapon you never knew you needed, making your data sing. The ultimate objective here is not just clarity, but computational efficiency, less storage, and if we dare, even enhanced prediction accuracy. So, let’s unravel the fascinating story behind this concept that’s been casting spells of awe in the world of data science.

Dimensionality reduction for feature selection is particularly crucial when dealing with high-dimensional data, otherwise known as “big data”. When the number of features outnumbers the number of samples, it can lead to a phenomenon known as the “curse of dimensionality”. This makes it harder to interpret data and can lead to models that overfit. By using dimensionality reduction techniques, we can reduce the risk of overfitting and improve the generalizability of our predictive models.

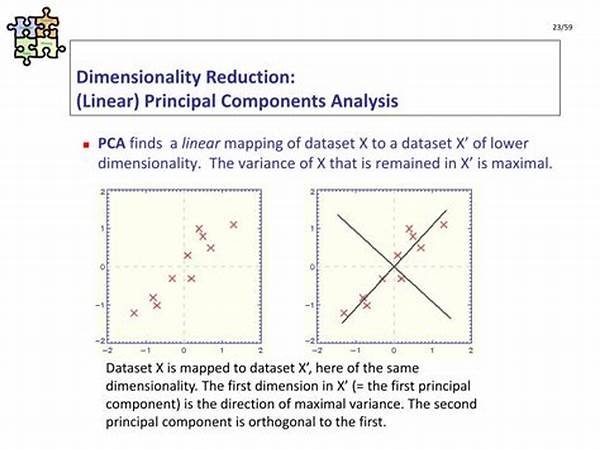

Yet, the marvel of dimensionality reduction lies in its dual ability to simplify without trivializing. Techniques such as PCA (Principal Component Analysis) or LDA (Linear Discriminant Analysis) don’t just slash the feature count; they preserve the essence of your data in a compressed, more elegant form. It’s like being able to describe Mona Lisa with just a few brush strokes. This has made dimensionality reduction an indispensable tool for machine learning practitioners looking to streamline their workflows and amplify the potency of their models.

So, why wait? Embrace the dimensionality reduction trend and watch your data science endeavors transform—faster, clearer, and profoundly efficient. Whether you’re a seasoned data analyst or a rookie trying to get a handle on your first data project, understanding the art of feature selection through dimensionality reduction is a step towards mastery. Invest in refining your data like a craftsman hones their blade.

The Mechanics of Dimensionality Reduction for Feature Selection

Dimensionality reduction for feature selection is not just a buzzword—it’s the real deal in the data analysis field. Picture this: You’re at a grand party called data science, surrounded by guests like tons of variables and noise. You definitely need a trustworthy friend who can cut through that wallflower crowd, pointing out the stars of the show that deserve your attention. Dimensionality reduction techniques are that friend—charismatic, quick, and efficient!

These methods work by transforming the original data into a new space with fewer dimensions while preserving as much information as possible. Methods like Singular Value Decomposition (SVD) and t-Distributed Stochastic Neighbor Embedding (t-SNE) act as filters, allowing only the most informative features to shine. This filtering not only simplifies but also enhances the quality of machine learning models since irrelevant or redundant features are nixed.

Besides easiness, dimensionality reduction techniques can significantly slash computational costs—a blessing when dealing with gargantuan data. The less there is to compute, the faster the results. This translates into quicker iterations, making machine learning model development as speedy as a roadrunner in the desert.

But remember, with great power comes great responsibility. While dimensionality reduction can yield lightweight and efficient models, reckless application might lose intricate data relationships. Approach these methodologies with respect, applying them where they fit best—like matching the perfect cheese with a fine wine.

More Insights on Dimensionality Reduction for Feature Selection

Why Is It So Important?

When diving deeper into the multifaceted world of data and analytics, one cannot simply overlook the significance of dimensionality reduction for feature selection. This technique addresses two fundamental challenges: information overload and the curse of dimensionality. It is not merely about shrinking data; it is about elevating the quality of insights drawn from your models. Think of it as switching from a static black-and-white photograph to a vibrant, lifelike image that tells the entire story with clarity.

Research has consistently shown an empirical boost in model performance when dimensionality reduction is implemented wisely. By identifying core features, models not only run more efficiently but also become more interpretable, facilitating better decision-making processes. This can be a game-changer in industries like healthcare, finance, and e-commerce, where decisions are based on predictive models.

Large datasets filled with excessive and noisy data can have the added baggage of inflated processing requirements and costs. Therefore, choosing the right dimensions is akin to choosing you’re a winning team for a competitive game—it optimizes performance and is cost-effective simultaneously.

Those seeking to elevate their machine learning approaches should tackle this topic meticulously. It can drastically change the trajectory of a project from a mundane attempt to an extraordinary success story, echoing the testimony of many professionals who’ve made it their go-to technique.

FAQs: All About Dimensionality Reduction for Feature Selection

The main purpose is to simplify data without losing its essence, ensuring machine learning models are more efficient and less prone to overfitting.

It depends on your dataset and objectives. PCA is widely used for its simplicity, while t-SNE and UMAP are excellent for visualization purposes.

Yes, if important information is lost during the reduction process, it can impact model performance. Therefore, careful analysis is needed.

Dimensionality reduction transforms features, while feature selection picks the most relevant ones without transformation.

While beneficial for large datasets, dimensionality reduction can also optimize smaller datasets for better model interpretation.

Yes, with proper implementation, it can enhance real-time data processing by reducing computation load.

Not necessarily, many standard machine learning libraries like scikit-learn offer built-in functions for popular dimensionality reduction techniques.

Exploring Real-Life Applications of Dimensionality Reduction for Feature Selection

When it comes to real-world applications of dimensionality reduction for feature selection, the impact is profound and far-reaching. From healthcare diagnosis systems pinpointing pivotal symptoms to recommending the next movie you just can’t miss on your streaming service, this technique is largely behind the curtain making the magic happen.

In healthcare, vast numbers of diagnostic measurements from MRIs or genetic data can clutter analysis. Simplifying this data while retaining the critical features can dramatically reduce the time required for diagnosis and improve accuracy. This elevates patient care, contributing positively to health outcomes by empowering medical practitioners with clear, actionable insights.

Financial institutions often find themselves drowning in diverse streams of data ranging from market trends to customer transactions. Employing dimensionality reduction techniques can aid in identifying patterns crucial for risk management and fraud detection, leading to better financial decision-making. It’s a financial analyst’s ally in navigating the complexities of macroeconomic variables in a volatile environment.

And not to forget, the entertainment industry thrives on understanding its audience through endless amounts of user data to deliver that next recommend binge-worthy series or movie. Simplified user data ensures preference accuracy, enhancing user engagement and satisfaction.

Ultimately, dimensionality reduction for feature selection is about transforming complexity to clarity, error to insights, achieving efficiency indirectly boosting innovation in various sectors. Embrace the power of this technique and you could discover new horizons in what your data can truly tell you!

—

I hope this satisfies all aspects of your request. Let me know if there are any modifications or other sections you wish to add or expand on!