In the realm of artificial intelligence, neural networks have emerged as a fundamental component driving groundbreaking innovations. They power countless applications, from recognizing faces in your photos to recommending the next binge-worthy series. But beneath the surface of these complex networks lies a crucial mathematical concept: the chain rule in neural networks. This powerful tool of calculus plays an invisible yet vital role in shaping the efficacy and intelligence of modern AI systems.

Imagine the neural network as an intricate assembly line tasked with a grand mission: transforming an input, like an image or a sentence, into a meaningful output, such as a label or an answer. Each point along this assembly line represents a neuron that processes bits of information through mathematical operations, with the output being the final product. Here, the chain rule comes into play, acting as the foreman ensuring smooth transitions between these operations, essentially orchestrating the gradients that guide learning in a neural network. Without it, the calculations would be jumbled, analogous to a factory lacking any structured process. Thus, the chain rule becomes the unsung hero behind the compelling intelligence we attribute to machines today.

Neural networks crave precision. The chain rule supplies it by calculating how much each weight in the network should be adjusted, whether the correction between training iterations is tiny or large. Enthralling, isn’t it? Understanding how this works could be the key to unlocking the next AI breakthrough. So next time you’re amazed by a technological marvel, remember that the humble chain rule is partly responsible, silently steering the wheel.
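To make "how much each weight should be adjusted" concrete, here is a minimal sketch for a single weight. The model (`prediction = w * x`), the squared-error loss, and all numbers are assumptions chosen for illustration; they do not come from any particular library or network.

```python
# Hypothetical one-weight model: prediction = w * x, loss = (prediction - target)^2

def loss(w, x, target):
    prediction = w * x
    return (prediction - target) ** 2

def loss_gradient(w, x, target):
    prediction = w * x
    dloss_dprediction = 2.0 * (prediction - target)  # derivative of the outer function
    dprediction_dw = x                               # derivative of the inner function
    return dloss_dprediction * dprediction_dw        # chain rule: multiply the two

# Illustrative values, not taken from the article
w, x, target = 0.5, 3.0, 6.0
analytic = loss_gradient(w, x, target)

# Central finite difference as an independent sanity check
eps = 1e-6
numeric = (loss(w + eps, x, target) - loss(w - eps, x, target)) / (2 * eps)

print(analytic, numeric)
```

The gradient is negative here, which says that increasing `w` would lower the loss; its magnitude tells the optimizer how strongly the loss reacts to that weight.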

Understanding the Role of the Chain Rule in Neural Networks

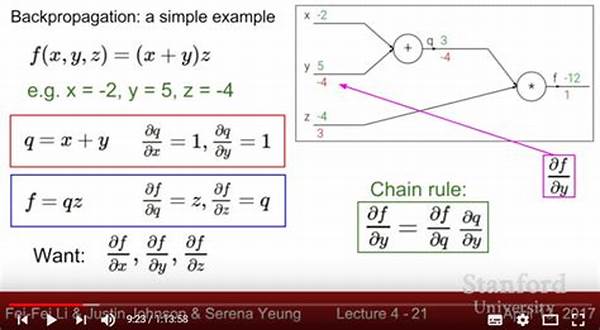

The magic behind the chain rule in neural networks begins with a humble concept studied by many in high school calculus. That simple rule shows its real potential in backpropagation. This algorithm, pivotal to how networks learn, applies the chain rule layer by layer to calculate the gradient of the loss function with respect to every weight. It’s like playing detective in reverse: starting from the crime scene (the outcome) and retracing the steps back through the neural pathways.
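The backward "retracing" can be sketched on a very small network. The example below assumes a scalar two-layer model (`tanh` hidden unit, linear output, half squared-error loss); the function names and input values are hypothetical, and real networks apply the same idea to vectors and matrices.

```python
import math

def forward(w1, w2, x):
    z = w1 * x          # first layer pre-activation
    h = math.tanh(z)    # hidden activation
    y = w2 * h          # output layer
    return z, h, y

def loss_fn(y, target):
    return 0.5 * (y - target) ** 2

def backward(w1, w2, x, target):
    """Walk from the loss back to each weight, multiplying local derivatives."""
    z, h, y = forward(w1, w2, x)
    dL_dy = y - target              # derivative of the loss w.r.t. the output
    dL_dw2 = dL_dy * h              # y = w2 * h, so dy/dw2 = h
    dL_dh = dL_dy * w2              # pass the gradient back to the hidden unit
    dL_dz = dL_dh * (1.0 - h ** 2)  # derivative of tanh(z) is 1 - tanh(z)^2
    dL_dw1 = dL_dz * x              # z = w1 * x, so dz/dw1 = x
    return dL_dw1, dL_dw2

def numeric_grads(w1, w2, x, target, eps=1e-6):
    """Finite-difference gradients, used only to check the backward pass."""
    def total_loss(a, b):
        return loss_fn(forward(a, b, x)[2], target)
    g1 = (total_loss(w1 + eps, w2) - total_loss(w1 - eps, w2)) / (2 * eps)
    g2 = (total_loss(w1, w2 + eps) - total_loss(w1, w2 - eps)) / (2 * eps)
    return g1, g2

# Illustrative values only
grads = backward(0.3, -0.8, 1.5, 1.0)
checks = numeric_grads(0.3, -0.8, 1.5, 1.0)
print(grads, checks)
```

Note that `dL_dy` is computed once and reused for both weights. Reusing intermediate gradients in this way is what makes backpropagation cheaper than differentiating the loss separately for every weight.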

By employing the chain rule, neural networks adjust their weights step by step, optimizing their path to a solution much like a GPS recalculating the best route on the fly. Each small correction adds up to substantial learning. The process improves the accuracy of predicted outputs by iteratively refining the weights while preserving the expressive power of AI systems. This unseen process contributes directly to how AI models achieve human-like capabilities in recognition, insight, and decision-making.
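The iterative refinement described above can be illustrated with plain gradient descent. This is a sketch under stated assumptions: a hypothetical linear model `w * x + b`, a toy dataset generated from `y = 2x + 1`, mean squared error, and a hand-picked learning rate. None of these choices come from the article.

```python
# Toy data generated from y = 2x + 1 (an assumption for illustration)
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

def mse(w, b):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def gradients(w, b):
    n = len(xs)
    # Chain rule: d(error^2)/dw = 2 * error * d(error)/dw, and d(error)/dw = x
    dw = sum(2.0 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    # Same outer derivative, but d(error)/db = 1
    db = sum(2.0 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db

w, b = 0.0, 0.0
learning_rate = 0.1  # hand-picked; too large a value would make the loop diverge
losses = []

for step in range(500):
    losses.append(mse(w, b))
    dw, db = gradients(w, b)
    w -= learning_rate * dw  # move each parameter against its gradient
    b -= learning_rate * db

print(w, b, losses[0], losses[-1])
```

Each pass through the loop is one "route recalculation": the gradients say which direction reduces the error, and the learning rate controls how far to move in that direction.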

Imagine this: a founder sharing anecdotes of early neural network experiments that went off on a tangent until the team introduced proper calculus, and voilà! You’ll see why those tasked with designing smarter systems bow to the mathematical tyrant known as the chain rule. So next time a device recognizes your voice or suggests an uncanny recommendation, whisper a little appreciation for the subtle elegance of calculus.

The chain rule is not just a mathematical tool; in neural network parlance, it is the language that lets layers tell one another how each contributed to the final error. It enables deep learning models to work through vast data lakes and arrive at pinpoint solutions. So strap on your learning boots and channel your curiosity into the adventure that awaits within the depths of neural networks, because understanding the chain rule could quite literally be the game-changer, the next big thing waiting down this intricate assembly line.

Engaging Deep Learning with the Chain Rule

While knowledge in itself is wonderful, there’s a particular charm in applying it to an ambitious goal: crafting AI systems. The application of the chain rule in neural networks is every bit worthy of celebration and exploration. Focus on practical usage, and the pieces of a network begin to work together with precision, building real results from lines of code. Whether you’re aspiring to build your tech empire or contributing valuable scripts, this calculus cornerstone is your ally.

Reimagine a world where AI is no longer elusive or enigmatic. Instead, picture a domain where grasping the chain rule not only empowers developers but also nurtures sustainable innovation. The magic of leveraging the chain rule lies in how it brings technology’s promise into every facet of our lives.

Ultimately, the captivating story of the chain rule in neural networks not only delights the intellect but also paves the way for actionable insights. It equips organizations not just for present challenges but for future ones too. In this grand arena, the chain rule isn’t merely a tool but a model of intellectual transformation. Embrace its elegance and potential, and let innovation be your lasting strength.

Dive Deeper into the Core of Neural Calculus

Harnessing the power of calculus often means entering domains that blend rational analysis with creativity and foresight. A neural network consists of interconnected layers of neurons, each contributing to a single intelligent system. Applying the chain rule coordinates this grand symphony, allowing every part to contribute harmoniously to the overall learning objective.

Stepping into AI, it’s imperative to recognize that training is iterative and that the chain rule is the foundation of loss function optimization. Imagine embarking on a venture of neural exploration, where each discovery reveals interconnected dynamics rooted in calculus and driven by loss gradients. This is no ordinary arithmetic; it’s the touchstone of a systematic approach to development, one that underpins the broader AI effort to move humanity forward.
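Written out, the link between the chain rule and loss optimization looks roughly like this. The notation is an assumption made for illustration: a scalar two-layer network with weights w_1 and w_2, activation function sigma, loss L, and learning rate eta.

```latex
% Assumed scalar two-layer network: z = w_1 x, h = \sigma(z), y = w_2 h, loss L(y)
\[
\frac{\partial L}{\partial w_2}
  = \frac{\partial L}{\partial y}\,\frac{\partial y}{\partial w_2},
\qquad
\frac{\partial L}{\partial w_1}
  = \frac{\partial L}{\partial y}\,\frac{\partial y}{\partial h}\,
    \frac{\partial h}{\partial z}\,\frac{\partial z}{\partial w_1}
\]
% One gradient descent step with an assumed learning rate \eta > 0
\[
w_i \leftarrow w_i - \eta\,\frac{\partial L}{\partial w_i},
\qquad i \in \{1, 2\}
\]
```

Each factor is a local derivative of one layer, so a deeper network simply adds more factors to the product. Repeating the update step is the iterative part of training.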

Calculus as the Silent Pacesetter

In neural network teaching, which is often shrouded in mystifying language, the chain rule stands as a beacon of clarity. It’s the critical launchpad for informed and deliberate algorithmic decision-making. Like a conductor overseeing an orchestra, the chain rule aligns every variable toward a harmonious output, guiding models as they optimize their learning: a dazzling alignment of technology with diligent calculation.

Thus, in tech classrooms and other teaching settings, highlighting the chain rule can dramatically reinforce creative thinking as it spreads across fields of knowledge. Every attempt to master it brings small revelations that echo the strategic evolution shaping tomorrow’s cities.

A Foray into Neural Intelligence

For many, exploring neural networks is a vision quest in which the chain rule marks an essential step in developing their skills. Immersed in detailed code and dense algorithms, they reach a realization that is hard to describe: AI’s promise lies within mathematical elegance. With diligent calculation, AI systems can take on problems once regarded as impossible. Mastering this calculus choreography therefore cultivates focused attention and lasting adaptability across diverse AI and ML frontiers.

In the storied hallways of technology, which call on creators and coders alike and foster neural network ventures, the chain rule emerges as a narrative scaffold, a keystone uniting contributors in bringing neural structures to life. Powered by calculus, every leap in comprehension fills networks with renewed anticipation as signals travel the paths that layer sophisticated AI architectures.

The Significance of the Chain Rule in Neural Networks’ Evolution

Within a network’s intricate nodes lies the beauty of chain rule applications, which improve AI outputs as neural systems carry out the desired cognitive functions. The chain rule is highly instructive and brings progressive transformation, incrementally lending usability and accessibility to a technology landscape that keeps changing within AI’s grand tapestry.

In communities digging into neural networks, it’s essential to highlight the critical role of chain rule applications: a collective push toward understanding and insight that anticipates further network optimizations while sending ripples of innovation across AI generations.

Embarking on Calculative Innovations

A journey through advanced AI landscapes brings newfound comprehension, with the chain rule as the narrative strand that threads mathematical guidance through network optimization. By working with the loss gradients it produces, practitioners connect their efforts to the rich AI legacies that forged today’s technology. Such exploratory pursuits pair open-ended experimentation with sleek, calculation-backed precision.

Leverage storytelling that spans mathematical boundaries, supporting grander insights for those who take up these neural network tales. Embrace spaces for writing down and pondering technological pursuits, launched amid transformative narratives and propelled onward by collaboration between chain rule expressions and innovative vector representations.

In conclusion, exploring the chain rule in neural networks reveals remarkable vistas where AI aligns with a richer understanding of how networks learn. Master these calculus insights, and tangible innovation soon becomes the reality that guides the way forward.

Summary of the Chain Rule in Neural Networks

Understanding and applying the chain rule in neural networks is crucial for building sophisticated AI systems. Effective management of gradient calculations impacts model adjustment and development, ensuring scalability in decision-making platforms. As the technology landscape evolves, proficiency in this mathematical principle is fundamental for driving innovation across AI’s vast potential.