- Understanding Bidirectional LSTM for POS Tagging

- Why Use Bidirectional LSTM for POS Tagging?

- Benefits of Bidirectional LSTM for POS Tagging

- Exploring the Mechanics of Bidirectional LSTM for POS Tagging

- The Importance of Bidirectional LSTM in POS Tagging

- Dive Deeper into Bidirectional LSTM for POS Tagging

- Summing Up Bidirectional LSTM for POS Tagging

Ever found yourself tangled up in the wonderful world of machine learning? If you’ve ever dabbled in Natural Language Processing (NLP), you’d know that Part-of-Speech (POS) tagging can be a bit of a puzzle. Here’s where the cool stuff comes in: the bidirectional Long Short-Term Memory network, or bidirectional LSTM (BiLSTM) for short. It reads a sentence in both directions, which makes labeling each word with its part of speech far more reliable than reading left to right alone. Let’s dive in and see how it works and why it’s so effective.

Understanding Bidirectional LSTM for POS Tagging

Alright, let’s get our geek on for a moment. Bidirectional LSTM for POS tagging is quite the superstar in the NLP space. Imagine reading a sentence twice, once from left to right and once from right to left. That’s essentially what a bidirectional LSTM does! It doesn’t predict or guess the upcoming words; it actually reads them. One LSTM summarizes everything that comes before a word, a second LSTM summarizes everything that comes after it, and the two summaries are combined before the tag is chosen. As a result, it becomes very effective at capturing context, which is key in determining the correct part of speech for each word. Whether it’s recognizing a verb, noun, or adjective, the tagger decides with the whole sentence in view. By capturing the sequence information from both directions, it treats each word in a sentence not as a standalone unit but as a connected piece of the whole language puzzle.

Why Use Bidirectional LSTM for POS Tagging?

1. Contextual Genius: Bidirectional LSTM for POS tagging can handle complex linguistic structures because it processes each word’s context both forwards and backwards.

2. Accuracy Champ: Because every tag is chosen with the entire sentence in view, accuracy is typically higher than with a left-to-right model of the same size.

3. Versatility in Contexts: The same architecture can be trained on different languages, genres, and tag sets, as long as you have tagged examples to learn from.

4. Seamless Integration: Its per-word outputs slot easily into larger NLP pipelines. A common pairing is a CRF layer on top of the bidirectional LSTM, and the tags can feed downstream steps such as parsing.

5. Data Matters: Like most neural models, it does best with a decent amount of tagged data. When data is limited, pretrained word embeddings usually help, and simpler statistical taggers can remain competitive.

Benefits of Bidirectional LSTM for POS Tagging

If you’re a tech enthusiast like me, you’ll love knowing that bidirectional LSTM for POS tagging can handle ambiguity with grace. Often in sentences, certain words can take on different roles depending on what follows or precedes them. Take “can”: in “I can open it” it’s a modal verb, while in “I can tomatoes” it’s a main verb, and the only clue is the word that comes after. The bidirectional nature allows the LSTM to look both ways, interpret properly, and then tag accurately. Plus, it’s not just about accuracy on familiar text; a trained model also generalizes to new sentences it has never seen. It’s like having a well-read, language-loving buddy who’s ready to make sense of all those tricky sentences that seem straightforward at first but are actually filled with grammatical surprises.
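To make the “look both ways” point concrete, here is a small sketch. It is not an LSTM: it assumes a toy tanh recurrence and made-up word vectors (the `embed`, `run`, and `bidirectional` helpers are hypothetical names for illustration). It only shows which direction can tell two sentences apart at the ambiguous word.

```python
import math
import random

def embed(word, dim=4):
    # Hypothetical stand-in for a learned embedding:
    # a fixed pseudo-random vector derived from the word itself.
    rng = random.Random(word)
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]

def run(words, dim=4):
    # Toy recurrence (not a real LSTM): each state summarizes
    # every word read so far, in reading order.
    state = [0.0] * dim
    states = []
    for word in words:
        x = embed(word, dim)
        state = [math.tanh(0.5 * s + xi) for s, xi in zip(state, x)]
        states.append(state)
    return states

def bidirectional(words):
    fwd = run(words)              # left-to-right summaries
    bwd = run(words[::-1])[::-1]  # right-to-left, realigned to word order
    return fwd, bwd

sent_a = ["i", "can", "open", "it"]  # "can" acts as a modal verb
sent_b = ["i", "can", "tomatoes"]    # "can" acts as a main verb

fwd_a, bwd_a = bidirectional(sent_a)
fwd_b, bwd_b = bidirectional(sent_b)

# At "can" (index 1) the left context is the same in both sentences,
# so the forward summaries cannot tell them apart.
same_forward = fwd_a[1] == fwd_b[1]
# The right context differs, so the backward summaries do.
same_backward = bwd_a[1] == bwd_b[1]
print(same_forward, same_backward)
```

A left-to-right tagger sees exactly the same information for “can” in both sentences, so it has to give both the same tag. Only the right-to-left pass carries the clue that separates them.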

Exploring the Mechanics of Bidirectional LSTM for POS Tagging

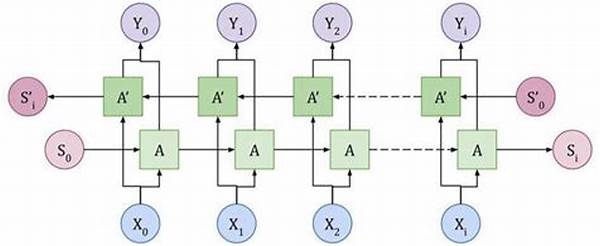

Getting into the nuts and bolts, this is where bidirectional LSTM for POS tagging gets fascinating. The magic lies in two recurrent layers that run in opposite directions over the same word embeddings, one from the start of the sentence to the end and the other from the end back to the start. At each word, the hidden state from the forward layer and the hidden state from the backward layer are joined (usually concatenated) into a single vector, and a small output layer turns that vector into a score for every possible tag. This lets the model take in both the preceding and the following context before deciding on the part of speech for each word. Think of it like reading a mystery novel; you wouldn’t understand the full story by just looking at a couple of pages, right? You’d need the context from the chapters before and after to solve the mystery. Similarly, for POS tagging, the bidirectional LSTM uses the whole sentence, which results in better-informed predictions. Cues such as tense markers, word order, and neighboring words are picked up and reflected in the tags, all thanks to this nifty two-direction architecture.
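The two-direction pass can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a production tagger: the weights are random and untrained, the tag set and sizes are made up, and `LSTMCell`, `tag`, and `rand_matrix` are hypothetical names. In practice you would use a deep-learning library and train the weights on tagged sentences.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(matrix, vec):
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]

def rand_matrix(rng, rows, cols):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

class LSTMCell:
    """Standard LSTM cell with input, forget, output gates and a candidate."""

    GATES = ("input", "forget", "output", "candidate")

    def __init__(self, rng, input_dim, hidden_dim):
        self.hidden_dim = hidden_dim
        self.w = {g: rand_matrix(rng, hidden_dim, input_dim + hidden_dim)
                  for g in self.GATES}
        self.b = {g: [0.0] * hidden_dim for g in self.GATES}

    def step(self, x, h, c):
        z = x + h  # list concatenation: current input plus previous hidden state
        pre = {g: [a + b for a, b in zip(matvec(self.w[g], z), self.b[g])]
               for g in self.GATES}
        i = [sigmoid(v) for v in pre["input"]]
        f = [sigmoid(v) for v in pre["forget"]]
        o = [sigmoid(v) for v in pre["output"]]
        cand = [math.tanh(v) for v in pre["candidate"]]
        c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, cand)]
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return h, c

    def run(self, xs):
        h = [0.0] * self.hidden_dim
        c = [0.0] * self.hidden_dim
        outputs = []
        for x in xs:
            h, c = self.step(x, h, c)
            outputs.append(h)
        return outputs

TAGS = ["DET", "NOUN", "VERB"]  # illustrative tag set, an assumption

def tag(words, vocab, emb, fwd, bwd, w_out):
    xs = [emb[vocab[w]] for w in words]
    h_fwd = fwd.run(xs)              # start of sentence -> end
    h_bwd = bwd.run(xs[::-1])[::-1]  # end -> start, realigned to word order
    tags = []
    for hf, hb in zip(h_fwd, h_bwd):
        scores = matvec(w_out, hf + hb)  # concatenate both directions, then score
        tags.append(TAGS[scores.index(max(scores))])
    return tags

rng = random.Random(0)
words = ["the", "dog", "barks"]
vocab = {w: i for i, w in enumerate(words)}
emb = rand_matrix(rng, len(vocab), 5)   # embedding size 5 (arbitrary)
fwd = LSTMCell(rng, 5, 4)               # hidden size 4 (arbitrary)
bwd = LSTMCell(rng, 5, 4)               # separate weights for the backward pass
w_out = rand_matrix(rng, len(TAGS), 8)  # 8 = forward 4 + backward 4
predicted = tag(words, vocab, emb, fwd, bwd, w_out)
print(list(zip(words, predicted)))
```

Because nothing here is trained, the printed tags are arbitrary. The point is the data flow: two independent LSTMs, one reversed pass realigned to word order, and one tag decision per word made from the concatenated states.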

The Importance of Bidirectional LSTM in POS Tagging

In the evolving tech ecosystem, why place bidirectional LSTM for POS tagging on a pedestal? For one, it supports automation in tasks like transcript processing and chatbots, where POS tags help a system make sense of a sentence once the whole utterance is available. One caveat: the backward pass needs the end of the sentence, so a bidirectional LSTM can’t tag word by word in a strictly real-time stream; it has to wait for the sentence, or at least a chunk of it, to finish. Additionally, bidirectional encoders have been used in language translation models, where context matters enormously. Bidirectional LSTM equips machines with the ability to discern linguistic nuances, improving their interaction with humans. That smoother communication between people and machines matters for the tech-driven future we’re heading into, and bidirectional LSTM has been an important part of that journey. It builds stronger, more functional NLP models that are just a tad bit closer to understanding language like we do.

Dive Deeper into Bidirectional LSTM for POS Tagging

The science behind bidirectional LSTM for POS tagging isn’t just for the tech wizards. Despite its deep-learning origins, this technology finds its application in day-to-day tech use cases. For instance, a tagger like this can sit behind a grammar checker or a search feature, where knowing whether a word is acting as a noun or a verb changes what the software should do. Predictive text on your smartphone is a different story, by the way: the next word hasn’t been typed yet, so there is no right-hand context to read, and that job falls to left-to-right models. On a greater scale, bidirectional LSTM encoders have been used in translation engines, helping phrases translated from one language to another maintain their intended meaning. This becomes especially crucial in professional environments where precision is paramount. With enough training data, bidirectional LSTM taggers often outperform left-to-right models because they make better-informed predictions, which reduces errors in automated text applications.

Summing Up Bidirectional LSTM for POS Tagging

At its core, bidirectional LSTM for POS tagging stands out as an influential tool in computational linguistics. By reading each sentence in both directions, it offers a level of precision and understanding that simpler, one-directional models might miss. What makes it truly fascinating is how widely the idea applies, from search features to everyday digital communication tools that need to know how words function in a sentence. As we continue to delve deeper into the realm of artificial intelligence and machine learning, the core lesson of bidirectional LSTM stays relevant: context on both sides of a word matters. It acts as a bridge between human language complexities and machine understanding, helping build tech that’s more intuitive and human-friendly. And in this age of information, isn’t that what we all aspire to achieve: a seamless interaction between technology and human communication?