In machine learning, few stumbling blocks are as stubborn as bias in datasets. Like a gremlin in the works, bias can derail even the most sophisticated models, making them skewed, unfair, or downright harmful. Imagine trying to build a skyscraper with uneven bricks: the higher you go, the more unstable the structure becomes. Training datasets behave the same way. Enter the hero of our tale: bias mitigation in training datasets. This is not just a fancy buzzword flung around tech circles; it is a prerequisite for accurate and equitable AI models. Like a seasoned detective, bias mitigation uncovers hidden partialities and escorts them to the exit, making data-driven decisions fairer.

Understanding bias mitigation in training datasets isn’t just for data scientists huddled over keyboards in darkened rooms. It matters to anyone who interacts with AI, from everyday app users to the corporate titans using AI to predict consumer behavior or flag fraudulent activity. As AI weaves itself deeper into daily life, paying attention to bias mitigation becomes not just significant but essential.

Training data is the lifeblood of machine learning, the fuel that propels algorithms into territory we once thought possible only in sci-fi films. Yet relying blindly on these systems without questioning the data they ingest is like steering a ship through rocky waters without a compass. Bias mitigation in training datasets supplies that indispensable compass, directing models toward more balanced, just, and accurate outcomes.

A world driven by ethical AI is not only within reach—it’s a pressing necessity. Societal pressures, public demand for transparency, and the competitive edge of trustworthy AI urge stakeholders not to sidestep this matter. Whether it’s eliminating gender biases from hiring algorithms or ensuring equitable loan approvals, bias mitigation is more than a safety net; it’s the foundation for sustainable progress in AI.

Why Bias Mitigation Matters

Understanding bias mitigation in training datasets means looking beneath the surface of everyday AI applications. Why does it matter? Picture an AI-powered recruiting tool that quietly dismisses qualified candidates because of bias embedded in its training data, such as past hiring decisions that favored certain groups. A sprinkle of bias here and there might go unnoticed, like a single drop of ink in a glass of water, yet drop by drop it accumulates until it clouds the decisions, opportunities, and ultimately lives the model touches.

Bias mitigation in training datasets is not merely an ethical consideration; it’s a business imperative. Companies that ignore it risk not only tarnished reputations but also significant financial repercussions. Now that consumers’ ethical expectations are sharper than ever, even a whiff of unfairness can lead to PR nightmares and falling share prices. Integrating bias mitigation helps sidestep these pitfalls, preserving trust and reinforcing a brand’s commitment to equality.

Moreover, the performance of machine learning models depends heavily on the quality of the data they are trained on. Mitigating bias often improves how well a model serves the users it previously underserved, even when it does not raise every aggregate metric. Imagine a music recommendation system that pushes only one genre because of skewed training data. Not only would it frustrate users, but it would also miss the goldmine of engagement that a more balanced system could tap into.

While the task might appear daunting, the path toward effective bias mitigation is paved with innovation. Automated auditing tools, fairness-aware training methods, and the vigilance of conscientious data scientists collectively steer the AI landscape toward fairness. The golden rule? Approach every dataset with an open mind and a diligent eye, for AI is at its best when it reflects the diversity and dignity of humanity.

—

Picture this: you’re building a new app powered by an advanced machine learning model poised to take the world by storm. But this utopian vision crumbles if the dataset feeding this magnificent beast carries hidden biases. Welcome to the world of bias mitigation in training datasets, an unsung yet critical endeavor that helps make AI models as fair as possible. Before diving into code and algorithms, take a step back: the journey to ethical AI begins with cleaning the dataset. Much like washing vegetables before tossing them into a salad, you want your datasets crisp, clean, and free from the impurities of bias.

The importance of bias mitigation in training datasets can be likened to air traffic control. It may seem complex and unnecessary on clear, sunny days, but dare to overlook it, and you’ll quickly find yourself amidst chaos on cloudy, storm-riddled nights. Datasets with inherent biases lead to skewed predictions that can perpetuate stereotypes or unfair practices, potentially alienating entire demographics. Fortunately, a commitment to proper mitigation techniques can defuse this ticking time bomb, leading to models that understand and respect the rich tapestry of human diversity.

Moving through this landscape, one might ask: how does bias sneak into training datasets in the first place? Like an uninvited guest, bias can slip into data through historical prejudices or incomplete representation of some groups. Imagine teaching a child about the world only through outdated encyclopedias: informative, but an incomplete picture. Bias mitigation in training datasets fills in that picture, so that models learn from a fuller range of cases and handle nuance rather than oversimplifying.

Moreover, bias mitigation isn’t a one-time gig. It is dynamic, requiring ongoing reflection and adjustment. Just as a sailor wouldn’t dare ignore the changing seas, data scientists must be adaptable, maintaining vigilance over their datasets and striving for improvement where bias may rear its head. This continuous effort champions transparency and social responsibility, fostering symbiotic relationships between AI systems and the diverse global populace they serve.

Bias mitigation in training datasets is the silent partner in every success story about fair and equitable AI: pivotal, yet rarely celebrated. So each time a machine learning model performs a task seamlessly for every user, remember that it’s not just the brilliance of the AI at play; it’s also the diligent, often arduous work of bias mitigation helping to level the playing field.

The Essence of Fairness in AI

Bias mitigation in training datasets is not just about algorithmic integrity; it’s a deep-seated commitment to fairness. AI’s promise of revolutionizing industries comes with an inherent obligation to be a force for good. Imagine a future where AI unknowingly discriminates in loan approvals or medical diagnoses. The fallout could be disastrous.

Trust in the Data

The cornerstone of any AI model’s success is the data it uses. When datasets have been carefully audited and corrected for bias, companies can innovate with greater confidence. This trust is crucial not only for business but also for societal progress and the public’s acceptance of AI technologies.

Efforts in bias mitigation in training datasets propel us toward a future where AI interprets our diverse world with accuracy and equity. Bias mitigation is not only a moral imperative but also a practical measure that yields better business outcomes, consumer trust, and meaningful innovation.

—

Goals of Bias Mitigation in Training Datasets

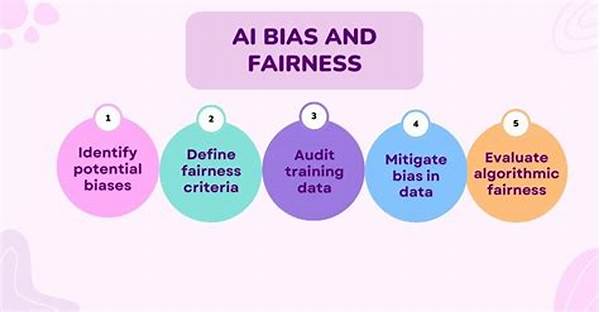

Structuring Effective Bias Mitigation

Creating a strategy for bias mitigation in training datasets means confronting the challenges methodically and head-on. Start at the root: the data collection process. Ensuring diversity at this stage is paramount, akin to having a wide palette of colors when painting a masterpiece. By broadening the range of sources from which data is drawn, you can keep a whole swath of biases from entering in the first place.
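
To make the “wide palette” idea concrete, here is a minimal Python sketch of a representation check. It assumes each record is a dict carrying a group attribute and that you already have reference shares for the population you intend to serve (for example, from census figures). The function name `representation_gaps`, the `region` key, and the sample numbers are hypothetical, chosen only for illustration.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares):
    """Compare each group's share of the dataset with an expected share.

    records: list of dicts, one per example (hypothetical layout).
    attribute: key holding the group label, e.g. "region".
    reference_shares: dict mapping group -> expected share (assumed known).
    Returns a dict mapping group -> observed share minus expected share.
    """
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        # Groups missing from the data count as a 0% share.
        observed = counts.get(group, 0) / total if total else 0.0
        gaps[group] = observed - expected
    return gaps


# Hypothetical sample: urban records heavily outnumber rural ones.
sample = [{"region": "urban"}] * 6 + [{"region": "rural"}] * 2
print(representation_gaps(sample, "region", {"urban": 0.5, "rural": 0.5}))
# {'urban': 0.25, 'rural': -0.25}
```

A negative gap means the group is under-represented relative to the reference. How large a gap should trigger more data collection is a judgment call that depends on the application.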

Next, establish a vigilant review process. Like a security checkpoint at an airport, it involves periodic checks and audits to make sure bias does not sneak in after the dataset is built. Automated checks and human oversight work well together here: people catch discrepancies that machines miss, and vice versa. Encourage a culture where questions are welcome and necessary adjustments are a normal part of the workflow.
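
One common automated check for such audits compares selection rates across groups. The sketch below assumes decisions are recorded as 0/1 alongside a group label, and it flags any group whose rate falls below 80% of the highest group’s rate, a rule of thumb often called the “four-fifths rule.” The function names and sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Return the fraction of positive decisions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_audit(groups, decisions, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` times the highest rate.

    Returns (rates, flagged), where flagged maps each flagged group to its
    ratio against the best-off group. The 0.8 default mirrors the
    four-fifths rule of thumb; treat a flag as a trigger for review.
    """
    rates = selection_rates(groups, decisions)
    best = max(rates.values())
    flagged = {
        group: rate / best
        for group, rate in rates.items()
        if best > 0 and rate / best < threshold  # skip division when no one is selected
    }
    return rates, flagged


# Hypothetical audit data: group labels and 0/1 decisions (e.g. "shortlisted").
groups = ["a"] * 4 + ["b"] * 4
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
rates, flagged = disparate_impact_audit(groups, decisions)
print(rates)            # {'a': 0.75, 'b': 0.25}
print(sorted(flagged))  # ['b']
```

A flag is a prompt for human review rather than a verdict; very small groups in particular can produce noisy rates.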

The ultimate goal? A seamless partnership between human intuition and machine learning’s prowess. When bias mitigation becomes an ongoing dialogue, it evolves from a procedural task into a shared mission. Celebrate small victories and adjust along the way; the path toward less-biased datasets is not a straight line but one carved out through dedication and insight.

—

Undertaking Bias Mitigation in Models

Effectively tackling bias requires more than a well-laid framework; it demands solutions that adapt over time. At its core, bias mitigation in training datasets depends on proactive engagement and on adopting new practices as they emerge, rather than reacting only after problems surface. It’s a process not unlike gardening: diligent, patient, and ever-attentive to change.

A substantial step forward involves collaborating with multidisciplinary teams, each bringing a unique perspective to identifying and resolving bias. When these diverse minds pool their experiences and insights, they often find creative solutions that one-dimensional strategies overlook. With diversity as the guiding principle, solutions emerge that mirror real-world complexity, helping models reflect a richer and fairer picture of reality.

Moving toward less-biased datasets also calls for technological ingenuity. Modern data science tools can detect, and in some cases correct, bias, serving as vigilant sentinels of dataset integrity. They can recognize nuanced patterns that humans might miss, further refining our approach to bias mitigation. Using AI to help improve AI creates a promising feedback loop, though human judgment is still needed to interpret what these tools find.
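
As an example of a pattern that can hide behind a good headline metric, the following sketch computes the true positive rate per group, that is, how often genuinely positive cases (say, qualified candidates) are recognized, and reports the largest gap between groups. This corresponds to the fairness criterion usually called equal opportunity. The function names and toy data are hypothetical.

```python
def true_positive_rates(groups, y_true, y_pred):
    """Per group, the share of truly positive examples the model predicts as positive."""
    positives, hits = {}, {}
    for group, truth, pred in zip(groups, y_true, y_pred):
        if truth == 1:
            positives[group] = positives.get(group, 0) + 1
            hits[group] = hits.get(group, 0) + (1 if pred == 1 else 0)
    return {group: hits[group] / positives[group] for group in positives}


def equal_opportunity_gap(groups, y_true, y_pred):
    """Largest difference in true positive rate between any two groups."""
    rates = true_positive_rates(groups, y_true, y_pred)
    if not rates:  # no positive examples at all: nothing to compare
        return 0.0, rates
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical screening results: 1 = qualified (y_true) / advanced (y_pred).
groups = ["a"] * 4 + ["b"] * 4
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]
print(equal_opportunity_gap(groups, y_true, y_pred))  # (0.5, {'a': 1.0, 'b': 0.5})
```

In this toy data the model is right on 7 of 8 examples overall, yet it recognizes only half of group b’s qualified candidates, which is exactly the kind of gap an aggregate accuracy figure conceals.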

Yet even as techniques improve, challenges remain: a perpetual balancing act between guarding against bias and optimizing model structure and accuracy. There’s no magic bullet or one-size-fits-all solution, only a suite of strategies, each tailored to a particular dataset and its challenges. Treating bias mitigation as an art as much as a science means combining adaptability, care, and judgment, ultimately producing a model that is as robust as it is fair.

Tools and Techniques for Bias Mitigation

Bias mitigation in training datasets encompasses a range of tools and methods for identifying and correcting biases. They span pre-processing techniques that rebalance or reweight skewed data before training, as well as in-processing techniques such as adversarial debiasing and fairness constraints that act during training, so teams can choose what suits their needs.
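
To illustrate the pre-processing end of that range, here is a minimal Python sketch of reweighing, a technique commonly attributed to Kamiran and Calders. It gives each training example the weight P(group) × P(label) / P(group, label), estimated from counts, so that in the weighted data the label is statistically independent of group membership. The function name and sample data are hypothetical, and full independence is only reachable when every (group, label) combination appears at least once.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights that make labels independent of group in the weighted data.

    weight(g, y) = P(g) * P(y) / P(g, y), with each probability estimated
    from counts; this simplifies to n_g * n_y / (n * n_gy).
    """
    n = len(labels)
    n_group = Counter(groups)
    n_label = Counter(labels)
    n_joint = Counter(zip(groups, labels))
    return [
        n_group[g] * n_label[y] / (n * n_joint[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Hypothetical data: group "a" has more positive labels than group "b".
groups = ["a", "a", "a", "b", "b", "b"]
labels = [1, 1, 0, 1, 0, 0]
weights = reweighing_weights(groups, labels)
print(weights)  # [0.75, 0.75, 1.5, 1.5, 0.75, 0.75]
```

Before reweighing, the positive rates are 2/3 for group a and 1/3 for group b; after it, each group’s weighted positive rate is 1.5 / 3.0 = 0.5. Many scikit-learn estimators accept such weights through the `sample_weight` argument of `fit`.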

Effective bias mitigation requires a commitment to continuous learning and adaptation. As AI grows more sophisticated, so too must our approaches to ensuring fair outputs. By drawing on diverse expertise and state-of-the-art technologies, we can turn potential pitfalls into opportunities for growth and innovation.

—

Five Key Strategies for Mitigating Bias

Crafting and Executing Bias Management Plans

Amid the buzzwords and ethical conundrums of the tech world, the phrase “bias mitigation in training datasets” rings with urgency. Once the wheat is separated from the chaff, this concept stands out as a cornerstone of responsible machine learning development. But how do you roll out a bias management plan that sticks? It begins with an essential step: education. Starting with developers and extending to stakeholders, everyone involved needs to understand the gravity of bias and its broader societal implications. Knowledge isn’t just power; it’s the spark that ignites proactive action.

With this foundation in place, the next step is selecting tools and strategies tailored to specific datasets. Think of it as choosing the right gear for a high-altitude trek: on a rigorous climb, outdated methods or unsuitable technologies are a recipe for failure. A hybrid approach, melding time-tested strategies with cutting-edge technologies, helps ensure that bias mitigation in training datasets is adaptive and nuanced.

Finally, maintaining an open feedback loop keeps the system healthy and adaptable. This isn’t a “set and forget” scenario; it demands continuous iteration and reflection. Encourage teams to view bias mitigation less as a checkpoint and more as an ongoing, long-term process. Celebrate milestones as a testament to the collective effort toward ethical AI.

—

Sustainable Practices

Grounded in sustainable practices, bias mitigation in training datasets is becoming a fundamental pillar of ethical AI. It’s an ongoing journey that demands attention, dedication, and a blend of human intuition and technological prowess. By placing bias mitigation at the forefront of AI discussions, we move the industry toward a future where technology serves humanity impartially, with dignity and respect.

In essence, by embracing bias mitigation as an integral facet of AI development, we lay the groundwork for a landscape where technology, business, and humanity flourish together, unencumbered by the chains of outdated biases and stereotypes.