- Understanding AI Bias

- Introduction to Bias Mitigation in AI Systems

- The Role of Data in AI Bias

- Implementing Bias Mitigation Strategies

- Key Points on Bias Mitigation in AI Systems

- Discussion on Bias Mitigation in AI Systems

- Strategies for Bias Mitigation in AI Systems

- Description of Bias Mitigation in AI Systems

—

In the rapidly evolving field of artificial intelligence, biased systems can cause real harm, especially when they are deployed in sensitive areas such as healthcare, hiring, or law enforcement. Bias mitigation in AI systems is not just a desirable feature; it is an ethical imperative. Every company building or deploying AI, whether a tech giant or a small startup, needs to minimize bias to ensure fairness and keep the trust of the people its systems affect.

Building bias mitigation into the earliest stages of AI development can yield more equitable outcomes and foster innovation. Combining technical expertise with ethical responsibility produces AI systems that reflect societal values rather than exacerbate existing inequalities. AI practitioners, data scientists, and ethicists need to work together to make this happen.

Understanding AI Bias

The concept of bias in AI is often met with skepticism and misunderstanding. Many view AI as infallible, a tool that operates purely on data and is free of subjective error. In reality, an AI system is as biased as the data it learns from. Historical data, shaped by societal biases, can produce skewed outcomes if it is not properly managed. This is why bias mitigation is crucial if AI is to serve all members of society.

—

Introduction to Bias Mitigation in AI Systems

AI is steadily becoming an integral part of our daily lives, revolutionizing everything from consumer services to essential public services. However, a growing concern within this transformative technology is the presence of bias. Experts across the globe are investigating how biases find their way into these seemingly impartial systems and how to minimize their impact. Bias mitigation in AI systems is a field gaining traction as industries recognize the importance of fair and just AI outcomes.

Addressing bias is no longer optional. Reports indicate that unchecked AI systems have contributed to discriminatory hiring practices, unfair banking decisions, and biased law enforcement measures. These cases underscore the urgent need for bias mitigation to promote fairness and equality in decision-making. AI systems reflect the data they are trained on, so historical and societal biases in that data tend to carry over into their outputs.

The Role of Data in AI Bias

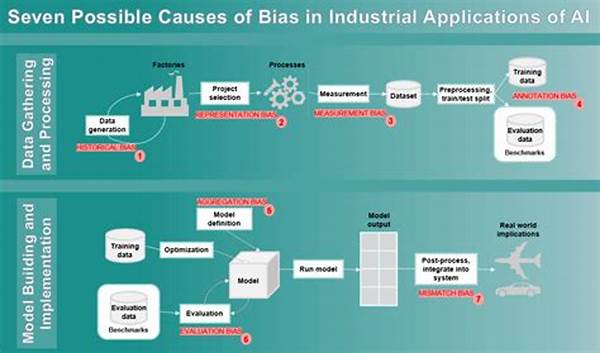

A significant contributor to bias in AI systems is data. Since AI models learn from existing datasets, the quality and neutrality of this data are paramount. Uneven representation in datasets can lead to skewed AI behavior, reinforcing stereotypes and perpetuating discrimination. To counter this, AI developers must commit to unbiased data collection and rigorous testing methodologies.
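One way to make "uneven representation" concrete is to compare each group's share of the dataset with a reference share. The sketch below is only an illustration under stated assumptions: the field name `group`, the reference shares, and the `tolerance` value are hypothetical and would need to come from the actual dataset and domain.

```python
from collections import Counter

def group_shares(records, group_key):
    """Return each group's share of the dataset as a fraction of all records."""
    counts = Counter(r[group_key] for r in records)
    total = sum(counts.values())
    return {g: n / total for g, n in counts.items()}

def underrepresented(shares, reference, tolerance=0.8):
    """List groups whose dataset share is below tolerance * reference share.

    `reference` maps group -> expected share (an assumption supplied by the
    caller, for example population figures for the deployment context).
    """
    return sorted(
        g for g, ref in reference.items()
        if shares.get(g, 0.0) < tolerance * ref
    )

# Hypothetical toy data: 8 records from group A, 2 from group B.
records = [{"group": "A"}] * 8 + [{"group": "B"}] * 2
shares = group_shares(records, "group")
print(underrepresented(shares, {"A": 0.5, "B": 0.5}))  # ['B']
```

A check like this only flags gaps in how many records each group has. It says nothing about label quality or measurement bias within a group, which need separate testing.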

Implementing Bias Mitigation Strategies

Strategies for bias mitigation in AI systems are multifaceted. They include diversifying training datasets, implementing fairness constraints, and continuously monitoring AI system performance. Involving interdisciplinary teams of technologists, ethicists, and sociologists in AI development also brings in the diverse perspectives needed to build fairer systems.
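As one illustration of a data-level strategy, the sketch below computes instance weights using reweighing, a well-known preprocessing technique that this article does not itself name. Each example is weighted by P(group) × P(label) / P(group, label), so that group and label look statistically independent in the weighted data. The function name and the toy inputs are assumptions made for the example.

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Return one weight per example: P(group) * P(label) / P(group, label).

    Under-represented (group, label) combinations get weights above 1,
    over-represented ones get weights below 1.
    """
    n = len(groups)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]

# Hypothetical toy data: group A receives most of the positive labels.
groups = ["A", "A", "A", "B"]
labels = [1, 1, 0, 0]
weights = reweighing_weights(groups, labels)
```

The resulting weights can be passed to any training routine that accepts per-sample weights. Whether this is the right intervention depends on the task; in-training fairness constraints and post-hoc threshold adjustments are alternatives.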

—

Key Points on Bias Mitigation in AI Systems

Discussion on Bias Mitigation in AI Systems

Bias mitigation in AI systems is a complex issue that requires ongoing discussion and exploration. At its core, mitigating bias involves understanding the inherent prejudices present in historical data and actively working to counter them. This requires a cultural shift in how we develop, deploy, and monitor AI systems.

By fostering dialogue among stakeholders, including developers, consumers, policymakers, and ethicists, organizations can better navigate the complexities of AI bias. A standardized framework for detecting and correcting bias can support AI innovation while minimizing harm. Transparency in AI processes can also strengthen trust and accountability, helping AI benefit all sectors of society.

In a world increasingly reliant on AI, promoting ethical AI development is vital. Organizations and developers must prioritize bias mitigation as part of their corporate responsibility. This involves not only technical adjustments but also addressing broader societal attitudes towards data representation and technology ethics.

—

Strategies for Bias Mitigation in AI Systems

1. Diverse Training Data: Ensuring diverse and representative datasets during the training phase to minimize biases.

2. Bias Audits: Conducting regular audits to detect and rectify any biases found in AI outcomes.

3. Fairness Benchmarks: Establishing benchmarks that define fair outcomes for AI systems.

4. Algorithmic Transparency: Making algorithms transparent to allow for external scrutiny and improvement.

5. Ethical Training: Introducing ethical training for AI developers to heighten awareness of potential biases.
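The audit and benchmark items above can be sketched in a few lines. The example below is a minimal illustration, not a complete audit: it computes the rate of positive predictions per group, the gap between the highest and lowest rate (often called the demographic parity difference), and their ratio. The `min_ratio=0.8` default echoes the four-fifths rule of thumb from US employment guidance; treating it as the pass mark here is an assumption, and the function names are hypothetical.

```python
from collections import defaultdict

def selection_rates(groups, predictions):
    """Return the fraction of positive predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for g, p in zip(groups, predictions):
        totals[g] += 1
        if p == 1:
            positives[g] += 1
    return {g: positives[g] / totals[g] for g in totals}

def audit(groups, predictions, min_ratio=0.8):
    """Summarize the gap in selection rates across groups.

    `min_ratio` is the benchmark: the lowest group rate divided by the
    highest must reach it for the audit to pass (an assumed threshold).
    """
    rates = selection_rates(groups, predictions)
    highest, lowest = max(rates.values()), min(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "selection_rates": rates,
        "parity_difference": highest - lowest,
        "impact_ratio": ratio,
        "passes": ratio >= min_ratio,
    }

# Hypothetical toy predictions for two groups of four people each.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
report = audit(groups, predictions)
print(report["selection_rates"], report["passes"])
```

Running a check like this on a schedule, and on every retrained model, is one way to turn "regular audits" into a repeatable step. Other fairness definitions, such as equal error rates across groups, may suit a given application better.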

Description of Bias Mitigation in AI Systems

Bias mitigation in AI systems is a dynamic process involving technological adjustments and ethical frameworks. Recognizing that AI can reflect and even amplify existing biases, initiatives are underway to ensure AI systems operate fairly. This involves not just technical solutions, but a comprehensive approach encompassing data ethics, fairness principles, and societal accountability.

Ensuring that AI systems are fair and unbiased requires a concerted effort across various domains. This includes educating AI developers on the societal impacts of bias and fostering a regulatory environment that promotes transparency and accountability. Ultimately, a commitment to bias mitigation fosters more reliable AI systems that benefit all demographics, enhancing societal trust and technological advancement.

—

By implementing and advocating for effective bias mitigation strategies, we can harness the potential of AI to drive equitable progress and innovation.