Hey there, fellow tech enthusiasts! Today, we’re diving into a topic that’s getting a lot of attention in the AI community: bias and fairness in AI models. Ever wonder if your AI assistant is playing favorites or if it’s being fair to everyone? Well, you’re not alone. With AI models becoming a staple in our daily lives, it’s crucial to ensure they treat everyone equitably. Let’s break it down!

Understanding Bias and Fairness in AI Models

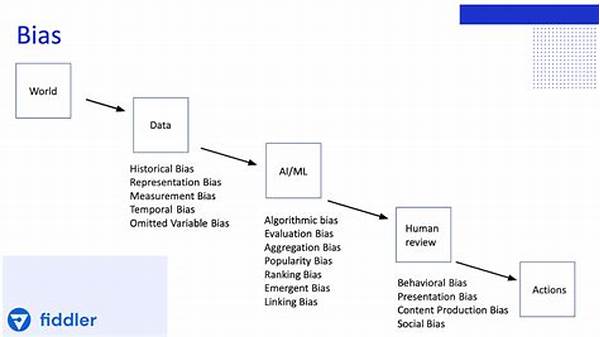

Navigating the landscape of AI can be like walking through a dense forest. You might wonder why bias and fairness in AI models have become such hot-button issues. It’s simple: AI systems are trained on datasets that can inadvertently reflect societal prejudices. Think of it this way: if a model learns from biased data, it will tend to reproduce those biases in its predictions. That’s a big no-no for fairness! Reducing bias while keeping a model useful is like doing a balancing act on a tightrope. The goal is for these systems to treat people equitably, irrespective of gender, race, or any other characteristic. When we talk about fairness, we mean building AI whose outcomes don’t systematically disadvantage any group. No model gets there in one shot, so tackling bias means constantly measuring, refining, and improving AI systems. It’s what will help AI evolve into tools that facilitate equality, not division.

Challenges in Ensuring Bias and Fairness

1. Training datasets can carry societal prejudices, which the model then learns and reproduces.

2. Correcting bias without losing AI model accuracy can be a complex puzzle.

3. Transparency in AI’s decision-making process is key to ensuring fairness.

4. Keeping a model fair requires continuous monitoring and iteration, since the data it sees can change over time.

5. Different biases can emerge at different stages of AI model development, and each may need its own solution.

Tools and Techniques for Promoting Fairness

So, how can we tackle bias in AI models? Ah, so glad you asked! There’s a toolkit out there for this very purpose. Let’s start with debiasing techniques. These are methods for cleaning up or rebalancing datasets so they’re as impartial as possible. Then there’s algorithmic auditing, a fancy term for systematically comparing a model’s results across different groups of people. Think of it as a regular check-up for your model, the way you’d take your car in for an inspection. Moreover, involving a diverse team of developers can help bring different perspectives that challenge ingrained biases. With these approaches, fairness in AI models is not just a wishful dream but very much a reality within our grasp.
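To make the auditing idea a bit more concrete, here is a minimal sketch in plain Python. It compares how often a model hands out a positive decision to each group, using two commonly used measures: the demographic parity difference and the disparate impact ratio. The function names, the group labels, and the toy data are all made up for illustration; a real audit would run on your own model's predictions and likely use a dedicated fairness library.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += 1 if pred == 1 else 0
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest selection rate (0 means equal)."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1 means equal)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Toy data, invented for illustration: 1 = approved, 0 = rejected.
predictions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "a", "b", "b", "b", "b", "b"]

rates = selection_rates(predictions, groups)
print("selection rates:", rates)
print("parity difference:", round(demographic_parity_difference(rates), 3))
print("impact ratio:", round(disparate_impact_ratio(rates), 3))
```

A big gap here doesn't prove a model is unfair on its own, but it's a good signal that something deserves a closer look. Which measure fits best depends on what the model is deciding and for whom.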

The Importance of Diverse Datasets

1. Diverse datasets are a cornerstone of fairness in AI models.

2. Training data that reflects real-world diversity makes it less likely that AI favors a specific group.

3. Balanced datasets help AI models behave more equitably in different scenarios.

4. Diverse data allows for a broader perspective in decision-making processes, reducing bias.

5. Incorporating multifaceted data is crucial for fostering fair AI developments.
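One simple way to act on the "balanced datasets" point is to check how much of your data each group makes up, then weight records so every group counts equally during training. The sketch below is just one possible approach (inverse-frequency weighting), and the helper names and toy group labels are hypothetical. Many training libraries accept per-record weights like these, but check the docs for the one you use.

```python
from collections import Counter


def group_shares(groups):
    """Fraction of the dataset that belongs to each group."""
    counts = Counter(groups)
    total = len(groups)
    return {group: count / total for group, count in counts.items()}


def balancing_weights(groups):
    """One weight per record, chosen so every group has the same total weight."""
    counts = Counter(groups)
    total = len(groups)
    n_groups = len(counts)
    return [total / (n_groups * counts[group]) for group in groups]


# Toy data, invented for illustration: group "a" is heavily over-represented.
groups = ["a"] * 8 + ["b"] * 2

shares = group_shares(groups)
weights = balancing_weights(groups)

print("shares:", shares)
print("weight for an 'a' record:", weights[0])
print("weight for a 'b' record:", weights[-1])
```

Reweighting doesn't add any new information about the under-represented group, so it's no substitute for actually collecting more diverse data. It just keeps the majority group from dominating what the model learns.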

The Impacts of Unchecked AI Bias

Imagine AI running wild with unchecked bias: it’s a bit like a train without brakes. Addressing bias is critical to avoiding negative consequences that impact real lives. Think discriminatory screening of job applications or biased credit lending. Unfair AI decisions can also erode trust in AI systems, making people skeptical about engaging with them. Left unaddressed, bias means AI can perpetuate existing inequalities rather than help solve them. Plus, is it possible to make ethical strides in tech if our models are veering off course? Without action, the dream of AI empowering everyone equally might just remain a dream. Hence, pushing for fairness is vital to ensure AI remains a force for good in this world.

Real-World Examples of AI Bias

1. AI-based hiring systems may favor certain demographics over others.

2. Facial recognition technology has shown higher error rates for people with darker skin tones.

3. Certain language processing tools can perpetuate gender stereotypes.

4. Credit scoring models have been known to exhibit discrimination.

5. Search engine algorithms may reflect or amplify existing biases in the results they surface.

How We Can Make AI Fair for Everyone

Alright, folks, it’s time we roll up our sleeves and ensure bias and fairness in AI models aren’t just buzzwords. Start by advocating for transparency. When AI systems are open books, it’s easier to spot biases. Encourage the development of ethical guidelines for AI usage so companies have a clear road map to follow. Diversifying teams involved in AI development can usher in a variety of perspectives, which is essential for fairness. Push for standardized testing of AI systems to evaluate their biases regularly. Interdisciplinary collaboration between techies, ethicists, and policymakers can also offer invaluable insights. Let’s work towards creating a tech landscape where AI is a fair tool that empowers the masses.
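Regular testing can be as simple as a check that runs every time a model is retrained. The sketch below compares the true positive rate (how often people who should get a "yes" actually get one) across groups and flags the model if the gap is too wide. The function names, the 0.1 threshold, and the toy data are assumptions made for illustration, not an established standard.

```python
def true_positive_rates(y_true, y_pred, groups):
    """Per group: share of truly positive cases the model predicted as positive."""
    actual = {}
    hits = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            actual[group] = actual.get(group, 0) + 1
            hits[group] = hits.get(group, 0) + (1 if pred == 1 else 0)
    return {group: hits[group] / actual[group] for group in actual}


def passes_fairness_check(y_true, y_pred, groups, max_gap=0.1):
    """Return (passed, gap), where gap is the spread in true positive rates."""
    rates = true_positive_rates(y_true, y_pred, groups)
    if not rates:
        # No positive cases at all, so there is nothing to compare.
        return True, 0.0
    gap = max(rates.values()) - min(rates.values())
    return gap <= max_gap, gap


# Toy data, invented for illustration.
y_true = [1, 1, 1, 1, 0, 1, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 0, 1, 1]
groups = ["a"] * 5 + ["b"] * 5

passed, gap = passes_fairness_check(y_true, y_pred, groups)
print("passed:", passed, "gap:", round(gap, 3))
```

The right threshold is a judgment call that depends on the stakes, which is exactly where input from ethicists and policymakers comes in.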

Conclusion

In a world increasingly driven by artificial intelligence, addressing bias and fairness in AI models isn’t a luxury but a necessity. We’re tasked with the challenge—and opportunity—to ensure that these powerful tools work equitably for everyone. Avoiding biases can be tricky business, like untangling a knotted necklace, but with persistence and focus, it’s possible. To keep AI a positive force for all of humanity, let’s champion diversity, transparency, and regular audits. By working tirelessly on these fronts, perhaps one day AI can be judged not by the color of its data but by the quality of its fairness. Here’s to achieving equality with tech, folks—because that’s what truly futuristic progress looks like!