In artificial intelligence, data is the kingmaker. Without quality data, even the most advanced algorithms falter. This is where balanced sampling in AI datasets comes into play: it helps ensure that AI models are not just capable but also fair. Imagine crafting a masterpiece only to realize it suffers from a fatal flaw: an imbalance in the very material it is made from. This flaw plagues many AI systems today, and balanced sampling is one of the most practical remedies. It evens out how often each class or group appears in the training data, and it acts as a safeguard against the bias that can skew results and undermine reliability. AI datasets need meticulous care, as they are the building blocks of future innovations. This look at balanced sampling in AI datasets explains why it matters so much when fine-tuning AI performance.

Developers face an uphill task, navigating between the Scylla of insufficient data and the Charybdis of bias-induced inaccuracies. Balanced sampling in AI datasets relies on three main techniques: stratified sampling (drawing from each class separately so that no class is lost by chance), oversampling (adding examples of rare classes), and undersampling (removing examples of common ones). This might sound like technical jargon, but at its core, it's about fairness. How do you ensure an AI model doesn't favor one side of a dataset? This question has puzzled researchers and developers alike. Balanced sampling addresses it by making sure each subset of the data is adequately represented during training, making your AI not just smarter, but wiser.
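To make the first of those techniques concrete, here is a minimal sketch of stratified sampling using only the Python standard library. The function name `stratified_sample`, the `fraction` parameter, and the toy "legit"/"fraud" labels are illustrative assumptions, not part of any particular library; in practice, a utility such as scikit-learn's `train_test_split` with its `stratify` argument is a common choice.

```python
import random
from collections import defaultdict
from operator import itemgetter

def stratified_sample(records, label_of, fraction, seed=0):
    """Draw roughly `fraction` of the records from every class separately."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for record in records:
        by_class[label_of(record)].append(record)

    sample = []
    for group in by_class.values():
        # Keep at least one record per class so small classes never vanish.
        k = max(1, round(len(group) * fraction))
        sample.extend(rng.sample(group, k))
    return sample

# Toy data (hypothetical): 90 "legit" rows and 10 "fraud" rows.
data = [("legit", i) for i in range(90)] + [("fraud", i) for i in range(10)]
subset = stratified_sample(data, label_of=itemgetter(0), fraction=0.2)
```

Because each class is sampled on its own, the 20% subset keeps the original 9:1 class ratio instead of leaving the rare class to chance.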

It's easy to underestimate the significance of balanced sampling until you witness its impact firsthand. Businesses use it to improve customer engagement, streamline operations, and predict trends more accurately. The process isn't just technical fine-tuning; it also has direct business value, because it helps turn raw data into actionable insights. Want to see customer satisfaction improve? Make sure your AI's training data reflects the diversity and nuances of real-world scenarios. In a nutshell, balanced sampling in AI datasets isn't merely a tool; it changes what your models are able to learn.

Why Balanced Sampling is Vital for AI Fairness

Balanced sampling in AI datasets is the secret sauce for fairness and accuracy in machine learning. As AI continues to integrate into facets of daily life, the hazards of imbalanced datasets become more apparent. Picture an AI model trained on biased data making decisions in areas like hiring, healthcare, or law enforcement. The ramifications are both profound and far-reaching, emphasizing the urgency of incorporating balanced sampling.

In today's fast-evolving landscape, balanced sampling helps curtail the risks of skewed AI, offering a more equitable approach. For example, in sentiment analysis, balancing the training data keeps the model from simply defaulting to whichever sentiment appears most often. Similarly, in fraud detection, where fraudulent activity is usually a tiny fraction of all transactions, it gives the model enough fraudulent examples to learn from, which improves reliability. Balanced sampling isn't just an add-on; it's a cornerstone in designing ethical AI systems.

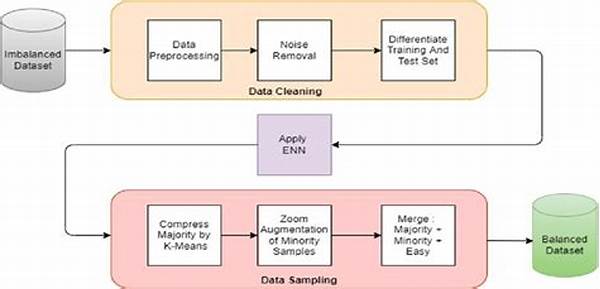

On the technical front, engineers can use oversampling to replicate minority-class data, or undersampling to trim majority classes, until the class counts are comparable. But it's not just about numbers; a balanced dataset should also reflect the real diversity within each class. That diversity strongly influences how a model behaves under varied real-world conditions, which is why these methods matter.

The implementation of balanced sampling in AI datasets shows dedication not just to technological advancement but also to societal progress. Consider the implications of ethically balanced AI systems: increased trust in AI technologies, reduced instances of discrimination, and improved decision-making processes. Companies and organizations standing at the frontier of AI development need to champion such methodologies, as they carve a pathway to a future where AI works in harmony with human interests.

The Nuances of Implementing Balanced Sampling

Engaging in balanced sampling in AI datasets sounds straightforward, yet it involves several layers of complexity. To begin, understanding the composition of your dataset is crucial. It’s like throwing a party; you need to know the guests before deciding on the seating arrangement. Balanced sampling begins with an analysis of data distribution across different classes or categories.
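A first pass at that analysis can be as simple as counting labels. The sketch below is a minimal illustration; the function name `class_distribution` and the sentiment labels are assumptions made for the example, and the function expects a non-empty list of labels.

```python
from collections import Counter

def class_distribution(labels):
    """Return {label: (count, share)} and the largest/smallest class ratio."""
    counts = Counter(labels)
    total = sum(counts.values())
    shares = {label: (n, n / total) for label, n in counts.items()}
    imbalance_ratio = max(counts.values()) / min(counts.values())
    return shares, imbalance_ratio

# Hypothetical sentiment dataset with a heavy skew toward "positive".
labels = ["positive"] * 800 + ["negative"] * 150 + ["neutral"] * 50
shares, ratio = class_distribution(labels)

for label, (n, share) in sorted(shares.items(), key=lambda kv: -kv[1][0]):
    print(f"{label:<10} {n:>5} {share:6.1%}")
print(f"imbalance ratio (largest / smallest): {ratio:.1f}")
```

A ratio close to 1 means the classes are already roughly even; a large ratio is a signal that one of the resampling strategies may be worth trying.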

Once you have defined your categories, the real work starts: carefully selecting and replicating data points so that each category is adequately represented. This is especially critical in domains like language processing or computer vision, where scarce data for some categories can lead to skewed model outputs. Essentially, balanced sampling acts like a cocktail mixer for data, adjusting the proportions so that a neural network sees enough of every ingredient to learn from it.

Moreover, advanced strategies like SMOTE (Synthetic Minority Over-sampling Technique) take balanced sampling further by creating synthetic samples rather than merely duplicating existing ones. Each synthetic point is placed somewhere between a minority example and one of its nearest minority-class neighbors, so the dataset becomes both more balanced and more varied. Because the new points are not exact copies, SMOTE tends to carry less overfitting risk than plain duplication, although it does not remove that risk and can add noise where classes overlap.
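The interpolation idea behind SMOTE can be sketched in a few lines. This is a simplified illustration under stated assumptions, not a reference implementation: the function name `smote_sketch` and its parameters are hypothetical, it uses Euclidean distance on numeric tuples, and it needs at least two minority points. For real projects, the `SMOTE` class in the imbalanced-learn library is the usual starting point.

```python
import math
import random

def smote_sketch(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # The k nearest minority neighbours of `base`, excluding base itself.
        neighbours = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append(
            tuple(b + gap * (n - b) for b, n in zip(base, neighbour))
        )
    return synthetic

# Hypothetical 2-D minority-class feature vectors.
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]
new_points = smote_sketch(minority, n_new=6)
```

Every synthetic point lies on a line segment between two real minority examples, which is why the result is more varied than duplication but still stays inside the region the minority class already occupies.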

Understanding the nuances of balanced sampling is an education in AI ethics and efficacy. It underpins a model's ability to learn from every class and to make decisions that are often more reliable than those of a model trained on unbalanced data. For stakeholders involved in AI, whether in academia, the corporate sector, or R&D, a solid grasp of balanced sampling practices is indispensable.

Examples of Balanced Sampling in AI Datasets

Strategies for Effective Balanced Sampling

Balanced sampling in AI datasets isn’t just a methodology—it’s an art form that requires precision and a keen understanding of data intricacies. When curating a balanced dataset, the stakes are high, as the impacts drive both commercial success and societal benefit. In industries like finance, healthcare, and customer service, outcomes from AI models can have profound effects, and balanced sampling provides the robustness these sectors demand.

Firstly, understanding dataset imbalances is fundamental. This typically involves statistical analysis to determine where overrepresentation and underrepresentation occur. Once identified, balanced sampling can proceed through various strategies, each suited to specific needs. For instance, oversampling, a commonly used method, duplicates data points from underrepresented classes to level the playing field. While this can fix the imbalance, it requires care to avoid overfitting, where the model memorizes the repeated examples instead of learning patterns that generalize.
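As a rough illustration of random oversampling, the sketch below duplicates minority rows until every class matches the largest one. The function name `random_oversample` and the toy labels are assumptions for the example. One common precaution, shown in the comments, is to resample only the training split so duplicated rows cannot leak into evaluation data.

```python
import random
from collections import Counter, defaultdict
from operator import itemgetter

def random_oversample(rows, label_of, seed=0):
    """Duplicate random minority rows until every class matches the largest."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for row in rows:
        by_class[label_of(row)].append(row)
    target = max(len(group) for group in by_class.values())

    balanced = []
    for group in by_class.values():
        balanced.extend(group)
        # Sample with replacement to fill the gap up to the majority size.
        balanced.extend(rng.choices(group, k=target - len(group)))
    return balanced

# Split first, then oversample only the training portion, so that
# duplicated rows never appear in the evaluation set.
train = [("legit", i) for i in range(95)] + [("fraud", i) for i in range(5)]
balanced_train = random_oversample(train, label_of=itemgetter(0))
print(Counter(label for label, _ in balanced_train))
```

Here each of the 5 fraud rows ends up repeated many times, which is exactly the situation where the overfitting risk described above becomes real.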

Alternatively, undersampling removes data points from overrepresented classes to balance the scale. While seemingly straightforward, it's a delicate procedure, because the discarded rows may contain valuable information. However, the beauty of balanced sampling in AI datasets lies in its adaptability: hybrid approaches combine over- and undersampling, trimming the majority class somewhat while boosting the minority class, so that less data is thrown away and fewer duplicates are needed.
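The trade-off between pure undersampling and a hybrid approach can be seen with a single helper that brings every class to a chosen size. This is a sketch under assumptions: `resample_to` and its `target` parameter are hypothetical names, and the right target depends on the dataset.

```python
import random
from collections import defaultdict
from operator import itemgetter

def resample_to(rows, label_of, target, seed=0):
    """Bring every class to exactly `target` rows.

    Classes larger than `target` are undersampled (random subset, no repeats);
    classes smaller than `target` are oversampled (random duplicates added).
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for row in rows:
        by_class[label_of(row)].append(row)

    out = []
    for group in by_class.values():
        if len(group) >= target:
            out.extend(rng.sample(group, target))
        else:
            out.extend(group + rng.choices(group, k=target - len(group)))
    return out

rows = [("majority", i) for i in range(900)] + [("minority", i) for i in range(100)]

# Pure undersampling: discards 800 of the 900 majority rows.
pure_under = resample_to(rows, itemgetter(0), target=100)
# Hybrid: discards 600 majority rows and adds 200 minority duplicates.
hybrid = resample_to(rows, itemgetter(0), target=300)
```

Choosing a target between the two class sizes is the hybrid compromise: more majority data survives than with pure undersampling, and each minority row is repeated fewer times than with pure oversampling.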

SMOTE (Synthetic Minority Over-sampling Technique) also belongs in this toolkit. It generates synthetic minority samples rather than merely replicating existing ones, which addresses the main limitation of pure oversampling: the resulting dataset contains more varied training examples, and that variety can improve model reliability.

The Benefits of Balanced Datasets

The impact of balanced sampling in AI datasets is multifaceted. Primarily, it improves how well models recognize underrepresented classes. Overall accuracy alone can hide this problem, because a model that ignores a rare class can still score well on a skewed dataset. Better recognition of rare cases leads to better decisions, which affects both business profitability and societal equity. Consider a fraud detection system; without balanced sampling, it might miss numerous fraudulent instances simply because they were underrepresented in the training phase. With balanced training data, predictions on those rare cases become more robust and reliable.
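A small worked example shows why accuracy by itself can be misleading on skewed data. The numbers are made up for illustration: 990 legitimate and 10 fraudulent transactions, scored against a hypothetical model that always predicts "legit".

```python
def accuracy_and_recall(y_true, y_pred, positive="fraud"):
    """Return (overall accuracy, recall on the `positive` class)."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    true_pos = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    actual_pos = sum(t == positive for t in y_true)
    recall = true_pos / actual_pos if actual_pos else 0.0
    return correct / len(y_true), recall

# Hypothetical, heavily skewed evaluation set.
y_true = ["legit"] * 990 + ["fraud"] * 10
# A degenerate "model" that never flags anything as fraud.
always_legit = ["legit"] * 1000

acc, rec = accuracy_and_recall(y_true, always_legit)
print(f"accuracy={acc:.2%}  recall on fraud={rec:.2%}")
```

The degenerate model is right 99% of the time and still catches no fraud at all, which is why per-class metrics such as recall are worth checking alongside accuracy when judging whether balancing helped.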

Balanced datasets also foster fairness and inclusivity. When datasets are skewed, biases prevail, leading to discriminatory outcomes. AI systems used in recruitment, for example, can perpetuate gender biases if training data is imbalanced. By ensuring that training data is balanced, developers promote equitable technology, enhancing consumer trust and societal acceptance.

Furthermore, balanced sampling helps make AI models more resilient and generalizable. Models trained on unbalanced data might perform exceptionally well on the cases they saw most often but falter under conditions that were rare or missing in training. By balancing datasets, developers craft models that make sound predictions across more diverse environments, which broadens where those models can be used.

Lastly, balanced sampling can improve resource utilization. Undersampling shrinks the training set and so reduces compute, and a model that handles rare cases well from the start tends to need fewer rounds of iteration and debugging, which can shorten time-to-market for AI solutions. This efficiency translates to cost savings and faster adaptation to market dynamics. In essence, balanced sampling isn't just a tool for crafting better datasets; it's a cornerstone of strategic AI development.

Balanced sampling in AI datasets isn't merely a technical necessity; it is an enabler of progress in both technology and society. With it, developers can craft algorithms that respect diversity and serve more of the people they affect. Seen this way, balanced sampling is more than a research topic; it is part of redefining AI's impact on the world.

In conclusion, while the challenges are many, the rewards of implementing balanced sampling in AI datasets are vast. By proactively adopting these strategies, stakeholders not only ensure robust AI performance but also contribute to a world where technology acts in service of humanity. The journey is intricate, but with persistence and foresight, balanced sampling in AI datasets emerges as an unparalleled ally in the quest for responsible, intelligent automation.