Backpropagation Through Time in RNNs

Understanding how neural networks learn is both an art and a science. At the heart of many successful sequence models lies the Recurrent Neural Network (RNN), a structure designed to recognize patterns in sequences of data. Whether you are trying to predict stock market movements or transcribe speech, RNNs can prove invaluable. However, training them is a different ballgame altogether. Enter backpropagation through time: a mouthful, but it is the standard procedure for training these networks. This technique is how we train RNNs to ‘remember’ information over time and adjust their parameters to get better with experience. Imagine having an assistant who’s not only brilliant but also constantly learning from the past; that’s what backpropagation through time does for RNNs.

The concept of backpropagation through time (BPTT) might sound daunting, but at its core, it’s akin to the way we reflect on past experience to improve future actions. Imagine reliving every moment of last week to understand where things went right—or horribly wrong—so that you can adjust and make the next week even better. This is BPTT for RNNs simplified. The beauty of this approach is it allows RNNs to excel in tasks that require sequence prediction by learning from the errors in sequential data.

The effectiveness of backpropagation through time in RNNs is evident in a range of applications, from natural language processing to time-series analysis. Despite its complexity, many in the tech community regard it as a game-changer. The intriguing part is not just how it works but how it’s applied in real-world situations, a topic for deep learning enthusiasts and curious minds alike. So, next time you marvel at your AI-generated playlist knowing exactly what you want to hear next, remember that backpropagation through time might just be behind it all.

The Mechanics Behind BPTT

Moving beyond the technical jargon, let’s dig deeper into why backpropagation through time in RNNs garners so much interest. Unlike standard feedforward networks, where each input is processed independently of the others, RNNs are built for sequences. These could be a stream of text, a series of time-stamped events, or even a melody: anything where the order of information matters. Backpropagation applied to one input in isolation wouldn’t do the trick here, because an RNN’s output at each step depends on a hidden state carried over from earlier inputs.
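To make the point about order concrete, here is a minimal sketch of a one-unit RNN in plain Python. The function name `rnn_forward`, the `tanh` activation, and the weight values are illustrative assumptions, not something the article prescribes.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, h0=0.0):
    """Run a one-unit RNN over a sequence and return every hidden state.

    w_x, w_h and h0 are arbitrary illustrative values (assumptions).
    """
    h = h0
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state,
        # so earlier inputs keep influencing later steps.
        h = math.tanh(w_x * x + w_h * h)
        states.append(h)
    return states

# The same three values in a different order end in a different state.
forward_states = rnn_forward([1.0, 0.0, -1.0])
reversed_states = rnn_forward([-1.0, 0.0, 1.0])
print(forward_states[-1], reversed_states[-1])
```

Because the hidden state is fed back in at every step, the final state depends on the order of the inputs and not only on their values.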

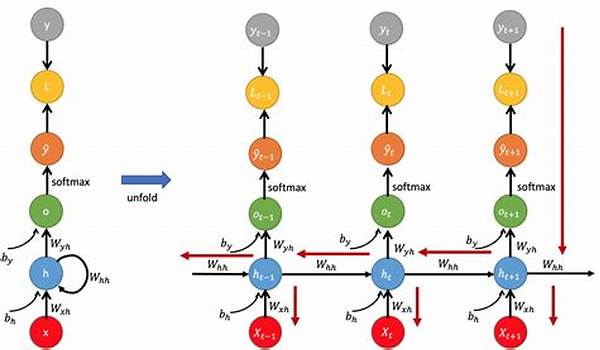

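To achieve this, BPTT unfolds the RNN across time. Imagine stretching the network out into one copy per time step, back to a certain limit, and then performing ordinary backpropagation across this unrolled version. For instance, with daily data the unrolled window might cover one week of time steps. Because the error signal travels back through earlier steps, the model can learn dependencies that span several steps, something backpropagation over a single step in isolation cannot capture. In practice the gradient can shrink or grow as it passes through many steps, which is one reason the window is often kept limited (truncated BPTT).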
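The unrolling idea can be sketched for a one-unit RNN in plain Python. This is a toy under stated assumptions, not a production implementation: the names `forward`, `loss`, and `bptt`, the `tanh` activation, the squared-error loss, and the `window` parameter are all hypothetical choices made for illustration. The finite-difference check at the end is a common way to sanity-check hand-written gradients.

```python
import math

def forward(xs, w_x, w_h):
    """Unroll the RNN; hs[0] is the initial state, hs[t] the state after step t."""
    hs = [0.0]
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1]))
    return hs

def loss(xs, ys, w_x, w_h):
    """Sum of squared errors between each hidden state and its target (assumed loss)."""
    hs = forward(xs, w_x, w_h)
    return sum(0.5 * (h - y) ** 2 for h, y in zip(hs[1:], ys))

def bptt(xs, ys, w_x, w_h, window=None):
    """Gradients of the loss with respect to w_x and w_h.

    window=None sends each step's error all the way back to step 1 (full BPTT).
    window=k sends it back through at most k steps (truncated BPTT).
    """
    hs = forward(xs, w_x, w_h)
    grad_wx = grad_wh = 0.0
    for t in range(1, len(xs) + 1):
        dh = hs[t] - ys[t - 1]                 # error at step t's output
        first = 1 if window is None else max(1, t - window + 1)
        for s in range(t, first - 1, -1):      # walk backwards through time
            da = dh * (1.0 - hs[s] ** 2)       # back through tanh at step s
            grad_wx += da * xs[s - 1]          # shared weight: contributions add up
            grad_wh += da * hs[s - 1]
            dh = da * w_h                      # hand the error to the previous state
    return grad_wx, grad_wh

xs = [0.5, -1.0, 0.3, 0.8]
ys = [0.2, -0.4, 0.1, 0.5]
w_x, w_h = 0.7, -0.6

g_wx, g_wh = bptt(xs, ys, w_x, w_h)
t_wx, t_wh = bptt(xs, ys, w_x, w_h, window=1)

# Finite-difference check of the full gradients.
eps = 1e-6
num_wx = (loss(xs, ys, w_x + eps, w_h) - loss(xs, ys, w_x - eps, w_h)) / (2 * eps)
num_wh = (loss(xs, ys, w_x, w_h + eps) - loss(xs, ys, w_x, w_h - eps)) / (2 * eps)
print(g_wx, num_wx)
print(g_wh, num_wh)
```

The nested loop favors clarity over speed; practical implementations usually make a single backward pass and accumulate the error as they go. With `window=1` the result generally differs from the full gradient, which is the trade-off truncation makes for lower cost.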

The concept might sound abstract, but when applied, it’s remarkably effective. It allows the model to weigh the influence of each past moment differently, sharpening its ability to predict what comes next in a sequence. It’s analogous to cutting a diamond: understanding which angle shines the brightest, and refining future cuts based on that knowledge.

Purpose and Power of Backpropagation Through Time

At its heart, backpropagation through time in RNNs serves the vital purpose of enabling these networks to learn from sequential data. The method is widely used in industries driven by sequences, such as voice command systems, language translation apps, and predictive text input. Its appeal lies not just in its adaptability but also in its broad applicability. Models trained this way can help your smartphone correct typos on the fly or suggest words that make you wonder, “How did it know?”

BPTT extends ordinary backpropagation by treating every step of the sequence as part of one computation. Because the same weights are reused at each step, the gradient for those weights adds up the contribution from every step. It’s as if each note in a symphony is recalibrated to contribute to an unforgettable performance. This matters for understanding sequences because, in real-life situations, any piece of data, however insignificant it first appears, could be a game changer.
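How BPTT extends ordinary backpropagation can be written down compactly. The notation below is an assumption introduced for illustration: $h_t$ is the hidden state at step $t$, $x_t$ the input, $W_x$ and $W_h$ the input and recurrent weights shared across all steps, $f$ the activation function, and $L_t$ the loss at step $t$.

```latex
% Recurrence and total loss (assumed notation)
h_t = f\left(W_x x_t + W_h h_{t-1}\right), \qquad L = \sum_{t=1}^{T} L_t

% Gradient for the shared recurrent weights: every step s \le t contributes
\frac{\partial L}{\partial W_h}
  = \sum_{t=1}^{T} \sum_{s=1}^{t}
    \frac{\partial L_t}{\partial h_t}
    \left( \prod_{k=s+1}^{t} \frac{\partial h_k}{\partial h_{k-1}} \right)
    \frac{\partial^{+} h_s}{\partial W_h}
```

Here $\partial^{+} h_s / \partial W_h$ is the immediate derivative at step $s$, taken with $h_{s-1}$ held fixed. The product of step-to-step derivatives is what carries the error backwards through time, and it is also where gradients can shrink or grow over long sequences.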

Furthermore, in complex domains like language modeling, BPTT acts as a cornerstone. Did you ever wonder how predictive text seems magical? It’s because of systems trained via backpropagation through time, which helps ensure that the context isn’t lost after just a few words. During training, the prediction error at each position is propagated back through the earlier steps, and the weights are updated repeatedly to reduce the error across the whole sequence.

The Emotional Impact of BPTT

For programmers and developers, BPTT isn’t just a technical tool; it’s a transformative process that revolutionizes how machines understand human language and behavior. The emotional allure lies in its capacity to bridge the gap between human expectation and machine performance. As an invisible magician working behind the scenes, BPTT translates messy data into sensible actions and predictions.

The journey of your digital voice assistant from misinterpreting phrases to becoming a reliable companion can stem from training with BPTT. For tech enthusiasts, it’s an exhilarating prospect: a new level of customization and foresight in digital systems.

From Geeky to Chic: Implementing BPTT

Want to implement BPTT in real-world applications? Let’s move from geeky mechanics to practical applications. Imagine being the mastermind behind an app that learns as users interact with it, an app that doesn’t just follow patterns but evolves them. Backpropagation through time is your key to unlocking that door. It offers exciting opportunities for developers convinced that the future lies in intelligent systems that think on their own.

Whether you’re creating a sophisticated stock predictor or an interactive storytelling device, BPTT amplifies the impact. Its blend of mathematical precision and creative potential lets developers configure adaptive models suited for an array of industries, embracing innovation that promises more delightful user experiences.

Practical Use Cases

1. Natural Language Processing: BPTT optimizes language models for seamless communication.

2. Time Series Forecasting: Ideal for analyzing stock prices and weather trends.

3. Speech Recognition: Enhances accuracy by learning over time.

4. Machine Translation: Translates text more contextually.

5. Predictive Text Input: Suggestive, intelligent keyboard predictions.

6. Emotion Detection in Text: Identifies sentiments within communication streams.

7. Robotics and Automation: Facilitates sequential decision-making.

8. Music Generation: Creates artist-like compositions reflecting historical data.

Exploring the Future of BPTT

The role of backpropagation through time in RNNs keeps evolving with each stride taken in artificial intelligence. It remains a cornerstone of sequence modeling and a bridge toward a world where machines converse and collaborate with humans more naturally. The dual promise of precision and affinity is its unique selling point, which has quickly made it a favorite of developers with an eye on the horizon.

This robust methodology, though intricate, offers immense opportunities to revolutionize automation, making it more intuitive and seemingly “human.” However, as we unroll the history of BPTT, it’s pivotal to explore future possibilities or modernization that could redefine its shape within the AI landscape. Evolution is inevitable, and staying ahead of the curve is essential—an intricate dance with technology awaits, where every leap forward is backed with learned foresight.

The Science of Implementation

Current research continues to explore how BPTT can be extended and improved for neural network training. Active areas include alternatives such as integrating memory networks, which extend standard RNN capabilities into a broader framework. The underlying science has been widely praised for enabling models that pave the way for progressively smarter systems.

As we forge ahead, developers, engineers, and enthusiasts are encouraged to study BPTT as a fundamental paradigm, one grounded in rigorous research and careful implementation. It’s not just computer algorithms at play; it’s about discovering connections that influence both the technology we design and the world we imagine.

Enhancing Systems with BPTT

The advent of backpropagation through time in RNN brings with it the ethos of progress—driving intelligent systems capable of understanding, predicting, and adapting through sequential data analysis. Capturing the zeitgeist, it yields a comprehensive learning experience punctuated by adaptability, crucial in robotics, linguistic evolution, and system automation.

A multi-pronged approach to enhancing systems through BPTT involves developing models that appreciate and harness its inherent learning diversity. This will undoubtedly address contemporary computational challenges, offering solutions enriched with advanced frameworks considered futuristic today. This collaborative action with continuous refinement heralds a new dawn where mechanical aptitude is interwoven with intuitive intelligence, bringing forth not just productivity but brilliance in execution.

Tips for Implementing BPTT in Your Projects

Whether you’re diving into the realm of deep learning or exploring specific niche applications, working efficiently requires more than just knowledge; it calls for practical insight into BPTT.

Tips for Successful Implementation

Taking these tips to heart helps you understand BPTT in depth and make the most of its potential. As you embark on your journey in the BPTT universe, remember the endless possibilities ahead, and position yourself at the vanguard of technological transformation.