Ah, backpropagation in supervised learning: the unsung hero of the machine learning world! If machine learning were a movie, backpropagation would be the understated supporting actor who steals the spotlight from the glitzy lead. Let's delve into this fascinating process, with a dash of humor and a whole lot of nerd charm.

Imagine teaching a child to recognize animals. You show her a picture of a cat, say, "This is a cat," and she nods, understanding. The next day she points at a dog and says, "Cat!" You correct her, show her more examples, and repeat until she reliably tells cats from dogs. In layman's terms, that is what supervised learning does in the digital realm, and backpropagation is the part that works out the corrections. Labelled examples are fed into an artificial neural network, its output is measured against the true label, and backpropagation calculates how much each of the network's internal weights contributed to the error so that its "understanding" can be fine-tuned. Over many rounds, its future guesses, whether identifying animals or something far more consequential, hit the mark more often.

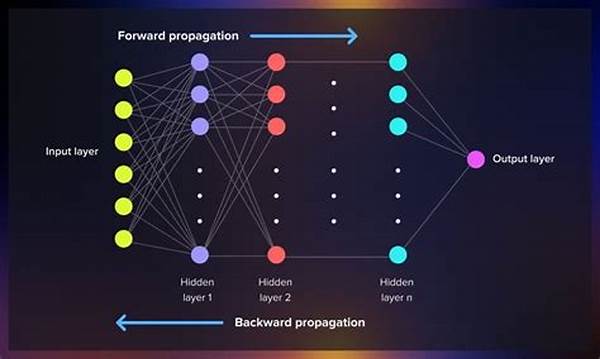

Put simply, backpropagation is like a retroactive script doctor: after a scene (an output) flops, it traces the missteps back through the neural network's internal workings so they can be rewritten and the end product polished. It may sound as simple as patching a quilt, but it is an intricate ballet of weighted inputs, calculated errors, and gradients. Backpropagation uses the chain rule to compute how the error changes with respect to each weight, and gradient descent, a mystical-sounding yet methodical step-by-step walk downhill, nudges every weight in the direction that reduces the error. Each epoch, one full pass through the training data, brings another round of small corrections, much like how we'd hope our GPS would gently reroute us after a wrong turn.
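The loop described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production recipe: it assumes a single sigmoid neuron, squared-error loss, full-batch gradient descent with a hand-picked learning rate, and the logical OR truth table as toy labelled data. The function name `train_neuron` and all hyperparameters are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data, epochs=5000, lr=2.0):
    """Train a single sigmoid neuron with full-batch gradient descent.

    Assumes squared-error loss L = 0.5 * (y_hat - y)^2, averaged over the batch.
    Returns the learned weights, the bias, and the average loss recorded each epoch.
    """
    n = len(data[0][0])
    w, b = [0.0] * n, 0.0
    history = []
    for _ in range(epochs):  # one epoch = one full pass over the training data
        grad_w, grad_b, total = [0.0] * n, 0.0, 0.0
        for x, y in data:
            # Forward pass: weighted inputs -> prediction.
            y_hat = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = y_hat - y  # measure the output against the true label
            total += 0.5 * err ** 2
            # Backward pass: the chain rule gives dL/dz = err * sigmoid'(z).
            delta = err * y_hat * (1.0 - y_hat)
            grad_w = [g + delta * xi for g, xi in zip(grad_w, x)]
            grad_b += delta
        m = len(data)
        # Gradient descent: step each parameter against its averaged gradient.
        w = [wi - lr * g / m for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / m
        history.append(total / m)
    return w, b, history


# Toy labelled data (hypothetical): the logical OR of two inputs.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
w, b, history = train_neuron(data)
predictions = [round(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)) for x, _ in data]
print(predictions, history[0], history[-1])
```

Each epoch runs a forward pass, measures the error against the labels, backpropagates it into gradients, and takes one gradient-descent step. After enough epochs, the rounded predictions should match the OR labels. Full-batch updates keep the sketch short; real training typically uses mini-batches and a library's automatic differentiation.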

Understanding the Impact of Backpropagation in Supervised Learning

In machine learning's grand narrative, backpropagation serves as a meticulous sculptor, chiseling the model ever closer to the truth in its data. This isn't magic: it's a method popularized by the landmark 1986 work of Rumelhart, Hinton, and Williams, whose contribution helped pave the way for the current surge of AI in daily life. From improving your smartphone's voice recognition to optimizing logistics, these incremental backward calculations deliver transformative results.

That said, don't let the technical aspects intimidate you. In essence, grasping backpropagation is akin to understanding how your grandma's love and experience go into each pie she bakes. Both mix ingredients (data points), adjust the recipe (weights and biases) based on how the last batch turned out, and yield something deliciously satisfying: accurate models or, well, comforting pies.

Now let’s dive deeper into what makes backpropagation such a critical process in supervised learning and beyond.

The Magic of Mathematics: How Backpropagation Works

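Behind every successfully trained neural network is a well-orchestrated symphony of mathematics. Backpropagation, in all its glory, uses calculus (chiefly the chain rule) and linear algebra to work out how much each weight (the multipliers applied to a neuron's inputs) contributed to the error. Gradient descent then adjusts every weight by an amount proportional to that contribution, scaled by a learning rate, giving each one a nudge sized to its share of the blame.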
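For a single neuron, the calculus behind each weight adjustment fits in three lines. This sketch assumes a sigmoid activation $\sigma$, a squared-error loss $L$, and a learning rate $\eta$; these are illustrative choices, and other activations or losses change the individual factors but not the chain-rule structure.

```latex
\begin{aligned}
z &= \sum_i w_i x_i + b, \qquad \hat{y} = \sigma(z), \qquad L = \tfrac{1}{2}\,(\hat{y} - y)^2 \\
\frac{\partial L}{\partial w_i}
  &= \frac{\partial L}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial z} \cdot \frac{\partial z}{\partial w_i}
   = (\hat{y} - y)\,\sigma(z)\bigl(1 - \sigma(z)\bigr)\,x_i \\
w_i &\leftarrow w_i - \eta\,\frac{\partial L}{\partial w_i}
\end{aligned}
```

The middle factor, $\sigma(z)(1 - \sigma(z))$, is the derivative of the sigmoid; multiplying by $x_i$ means inputs that were large at the time of the error receive larger corrections.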

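One can't help but marvel at backpropagation's automatic, recursive nature: each layer's error signal is computed from the error signal of the layer after it, so a single backward sweep yields the gradient for every weight in the network. It truly is the Watson to gradient descent's Sherlock Holmes, patiently cross-referencing new evidence (training examples) with past conclusions. Repeated over many iterations, the process becomes an ongoing scientific conversation in which the model learns, adapts, and steadily drives down its error.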
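To make the recursive part concrete, here is a minimal sketch for a hypothetical two-layer network: two inputs, two sigmoid hidden units, one sigmoid output, and squared-error loss. The hidden layer's error signal is built from the output layer's, and a central finite-difference check compares the backpropagated gradient with a brute-force numerical estimate. The weights, the example, and the helper names (`forward`, `backward`, `loss`, `perturb_W1`) are made up for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """Forward pass: returns hidden activations h and the output y_hat."""
    W1, b1, w2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + bj) for row, bj in zip(W1, b1)]
    y_hat = sigmoid(sum(wj * hj for wj, hj in zip(w2, h)) + b2)
    return h, y_hat


def loss(params, x, y):
    """Squared-error loss L = 0.5 * (y_hat - y)^2 for one example."""
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2


def backward(params, x, y):
    """Backpropagation: gradients of the loss with respect to every parameter."""
    W1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    # Output layer error signal dL/dz_out (chain rule through the output sigmoid).
    delta_out = (y_hat - y) * y_hat * (1.0 - y_hat)
    grad_w2 = [delta_out * hj for hj in h]
    grad_b2 = delta_out
    # Recursive step: a hidden unit's error signal is the output error sent back
    # through its outgoing weight, times that unit's local sigmoid slope.
    delta_hidden = [delta_out * wj * hj * (1.0 - hj) for wj, hj in zip(w2, h)]
    grad_W1 = [[dj * xi for xi in x] for dj in delta_hidden]
    grad_b1 = list(delta_hidden)
    return grad_W1, grad_b1, grad_w2, grad_b2


def perturb_W1(params, j, i, eps):
    """Return a copy of params with hidden weight W1[j][i] shifted by eps."""
    W1, b1, w2, b2 = params
    W1 = [row[:] for row in W1]
    W1[j][i] += eps
    return W1, b1, w2, b2


# Hypothetical weights and a single labelled example, chosen only for illustration.
params = ([[0.1, -0.2], [0.4, 0.3]], [0.0, 0.1], [0.5, -0.6], 0.05)
x, y = [1.0, 2.0], 1.0

grad_W1, grad_b1, grad_w2, grad_b2 = backward(params, x, y)

# Gradient check: central finite difference for the hidden weight W1[0][1].
eps = 1e-6
numeric = (loss(perturb_W1(params, 0, 1, eps), x, y)
           - loss(perturb_W1(params, 0, 1, -eps), x, y)) / (2 * eps)
print(f"backprop: {grad_W1[0][1]:.8f}  numeric: {numeric:.8f}")
```

The two printed numbers should agree to many decimal places. The payoff of the recursion is cost: the finite-difference route needs two extra forward passes per weight, whereas one backward sweep produces every gradient at once.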

If you're wading through these waters, recall the goosebumps of using your favorite cheat codes: predictable, yet astonishingly satisfying.

Once the network has been trained well, it can make discerning predictions on data it has never seen, from attempting to forecast stock market fluctuations to helping diagnose medical conditions, thanks in large part to the patient persistence of backpropagation.

The Essence of Backpropagation’s Usefulness

While backpropagation's mechanics might seem intimidating at first blush, its applications reveal a world of possibilities, a tech Renaissance waiting to unfold in future projects:

- Fine-tuning neural networks for better performance and precision.

- Reducing error rates in complex predictions or classifications.

- Enhancing image recognition systems toward human-level proficiency on some tasks.

- Improving contextual understanding in natural language processing tasks.

- Training the models behind autonomous systems, which strive for ever-lower error rates.

- Advancing personalized recommendations in e-commerce.

- Improving real-time decision systems, such as high-frequency trading algorithms.

How Backpropagation Transformed Machine Learning

Like history's other eureka moments, backpropagation changed what was practical: by computing the gradient for every weight at a cost within a small constant factor of a single forward pass, it made training multi-layer networks with supervised learning feasible. The algorithm ushered in waves of growth in AI research and applications, becoming a cornerstone of both academic discoveries and industry breakthroughs.

Often likened to the way people learn from their mistakes, this process lets systems refine their decisions iteratively, chipping away at inaccuracies and turning raw data into insightful, actionable intelligence that helps tackle modern challenges and, more often than not, challenges the norm.

So, if you sign up for that next AI course, remember that mathematics is woven deeply through your learning journey, and in that language, backpropagation is the bard, singing tales of magnificence and precision.

Happy learning, fellow AI enthusiast!