- Tackling Common Challenges in Data Labeling

- Introduction to Addressing Data Labeling Inconsistencies

- Strategies for Ensuring Consistency

- How These Strategies Benefit Businesses

- Discussions on Addressing Data Labeling Inconsistencies

- Goals for Addressing Data Labeling Inconsistencies

- Data Labeling Standards for Improved Consistency

In the dynamic landscape of data science and artificial intelligence, the importance of accurate and consistent data labeling cannot be overstated. Imagine, for a moment, launching the world’s most sophisticated rocket only to discover that the assembly manual contained discrepancies. This mirrors what countless AI models face when they are trained on inaccurately labeled data. Data labeling inconsistencies can severely disrupt model training, leading to skewed results or, worse yet, a model that fails at the very analysis it was built to perform.

The world is driven by data, and accurate labeling is the cornerstone of reliable AI systems. Picture this: an AI system built to diagnose diseases more accurately than human doctors falters because certain medical images were mislabeled. The stakes are incredibly high, which is why addressing data labeling inconsistencies has become a priority for tech innovators and businesses striving for precision.

In data labeling, consistency is key. The subtleties of labeled data shape what a model learns, directly affecting its decisions and outcomes. Inconsistent labels can introduce bias and errors, leading to unintended results. To combat this, a robust labeling framework, with guidelines that every annotator interprets the same way, is essential. Addressing these inconsistencies not only improves model accuracy but also builds stakeholder trust in the resulting systems.

Tackling Common Challenges in Data Labeling

A critical look at the sources of data labeling inconsistencies reveals several factors. Misunderstood labeling guidelines, insufficient annotator training, and ambiguous data classes all contribute to flawed outcomes. Technology often lies at the heart of these challenges, but human judgment also introduces variability: two annotators reading the same guideline may still label the same item differently. It’s an intricate dance between machine precision and human judgment, requiring constant calibration and attention.
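One common way to put a number on that human variability, which the original text does not prescribe, is Cohen's kappa: the agreement between two annotators, corrected for the agreement you would expect by chance. The sketch below assumes two annotators labeled the same items in the same order; the function name `cohens_kappa` and the sample labels are illustrative only.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("both annotators must label the same non-empty list of items")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement, estimated from each annotator's own label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label everywhere: agreement is
        # perfect, but the formula below would divide by zero.
        return 1.0
    return (observed - expected) / (1 - expected)


# Hypothetical labels from two annotators on the same six images.
annotator_a = ["cat", "cat", "dog", "dog", "cat", "dog"]
annotator_b = ["cat", "dog", "dog", "dog", "cat", "cat"]
print(round(cohens_kappa(annotator_a, annotator_b), 3))  # 0.333
```

A kappa near 1 means the annotators agree far beyond chance; a value near 0, as here, means they agree little more than random guessing would, which is a strong hint that the guideline or the training needs attention. If you already use scikit-learn, `sklearn.metrics.cohen_kappa_score` computes the same statistic.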

—

Introduction to Addressing Data Labeling Inconsistencies

The headline itself, “Addressing Data Labeling Inconsistencies,” might be a mouthful, but it’s a vital part of optimizing AI performance. Why does this topic garner such attention? It’s simple: accurate labeling is the backbone of reliable data models. It’s much like a precision-cut diamond; a slight deviation can mar its intrinsic value. Therefore, companies and researchers are increasingly focusing their efforts on minimizing inconsistencies in data labeling.

Consider a scenario where you are tasked with preparing a gourmet meal. You have all the finest ingredients, but if your measurements are off, the result will be subpar. In the realm of AI, even minimal discrepancies in data labeling can lead to flawed outcomes. Consistent labeling processes are a prerequisite for getting the best performance a model can deliver.

The demand for addressing data labeling inconsistencies has led to the emergence of specialized services. These services not only provide expert annotators but also run quality checks designed to catch labeling errors. Such initiatives underscore how heavily data-driven operations depend on accurate labeling.
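The original does not say which quality checks such services run. One widely used option is to mix "gold" items, whose correct label is already known, into the regular work queue and score each annotator against them. The sketch below assumes that setup; the data layout, the function names `gold_accuracy` and `flag_annotators`, and the 0.9 threshold are all hypothetical choices for illustration.

```python
def gold_accuracy(submissions, gold):
    """Per-annotator accuracy on items whose correct label is already known.

    submissions: {annotator: {item_id: label}}
    gold:        {item_id: correct_label}
    """
    report = {}
    for annotator, labels in submissions.items():
        scored = [item for item in labels if item in gold]
        if not scored:
            continue  # this annotator has not seen any gold items yet
        correct = sum(labels[item] == gold[item] for item in scored)
        report[annotator] = correct / len(scored)
    return report


def flag_annotators(report, threshold=0.9):
    """Names of annotators whose gold-item accuracy is below the threshold."""
    return sorted(name for name, accuracy in report.items() if accuracy < threshold)


# Hypothetical data: three gold items hidden among regular work.
gold = {"img1": "tumor", "img2": "healthy", "img3": "tumor"}
submissions = {
    "ann_a": {"img1": "tumor", "img2": "healthy", "img3": "tumor", "img9": "healthy"},
    "ann_b": {"img1": "healthy", "img2": "healthy", "img3": "tumor"},
}
report = gold_accuracy(submissions, gold)
print(flag_annotators(report))  # ['ann_b']
```

Items without a gold label (such as `img9`) are simply ignored when scoring, so the check can run continuously alongside production labeling.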

In the ever-evolving technological world, the conversation on data labeling inconsistencies isn’t just academic. It’s a pragmatic discussion on enhancing the efficacy of AI systems. By delving into this topic, you unlock possibilities for not only improving AI accuracy but also reducing biases embedded within datasets.

Let’s not sugar-coat it; addressing data labeling inconsistencies can be a daunting task. However, confronting these challenges is akin to fine-tuning an instrument for a symphony. The result? A harmonious alignment between your model’s learning and your business objectives.

To understand this endeavor better, let’s look at methods and strategies for tackling these inconsistencies. Addressing them opens pathways to more reliable and effective AI systems, setting the stage for business success in an increasingly data-reliant world.

Strategies for Ensuring Consistency

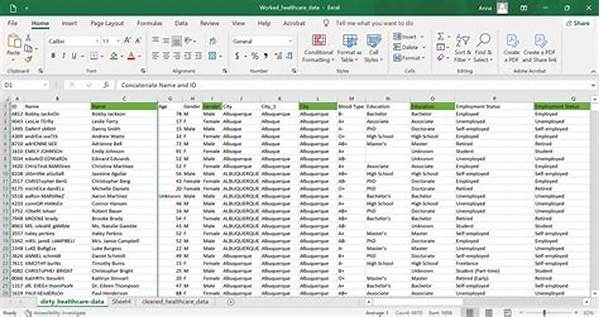

One promising approach is adopting a standardized set of labeling guidelines. A shared standard reduces the likelihood of misinterpretation among everyone involved in the labeling process. In addition, iterative training sessions for labelers, based on analysis of the errors they actually make, can improve labeling accuracy over time.
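Both ideas can be made concrete in a few lines. The sketch below assumes the guideline defines a fixed label set and that some items receive a second, reviewed label; `ALLOWED_LABELS`, `validate_labels`, and `top_confusions` are hypothetical names, not part of any particular tool. The first function enforces the shared standard; the second does a simple error analysis by counting which label pairs annotators confuse most often.

```python
from collections import Counter

# Hypothetical shared guideline: the only labels annotators may use.
ALLOWED_LABELS = {"positive", "negative", "neutral"}


def validate_labels(labels, allowed=ALLOWED_LABELS):
    """Return (item_id, label) pairs whose label is not in the shared label set."""
    return [(item, label) for item, label in labels.items() if label not in allowed]


def top_confusions(annotated, reviewed, k=3):
    """Most frequent (annotator_label, reviewed_label) mismatches.

    annotated, reviewed: {item_id: label}; only items present in both are compared.
    """
    mismatches = Counter(
        (annotated[item], reviewed[item])
        for item in annotated.keys() & reviewed.keys()
        if annotated[item] != reviewed[item]
    )
    return mismatches.most_common(k)


annotated = {"r1": "positive", "r2": "neutral", "r3": "neutral", "r4": "negative", "r5": "Positive"}
reviewed = {"r1": "positive", "r2": "negative", "r3": "negative", "r4": "negative", "r5": "positive"}
print(validate_labels(annotated))  # [('r5', 'Positive')]
print(top_confusions(annotated, reviewed))  # [(('neutral', 'negative'), 2), (('Positive', 'positive'), 1)]
```

In this made-up example the most common confusion is "neutral" versus "negative", which would be a natural topic for the next training session and a candidate for a clearer definition in the guideline.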

How These Strategies Benefit Businesses

By embracing consistency in labeling, companies can achieve significant improvements in model accuracy. Consistent labeling mitigates biases, helps machine learning systems scale, and enhances user trust. Integrating these strategies improves model quality while reducing the operational cost of fixing labels after the fact.

—

Discussions on Addressing Data Labeling Inconsistencies

Goals for Addressing Data Labeling Inconsistencies

Addressing data labeling inconsistencies effectively improves the accuracy and efficiency of AI models. By reducing these inconsistencies, organizations can expect not only more reliable models but also less time spent re-labeling data and re-training models, which increases ROI and lowers operational costs.

Moreover, consistent data labeling fosters a sense of confidence among users, stakeholders, and tech developers, creating a shared understanding and trust in the data processed. When stakeholders have faith in the data’s accuracy, they are more likely to invest in and support AI-driven business initiatives, launching them toward greater heights of innovation and success.

Data Labeling Standards for Improved Consistency

Addressing data labeling inconsistencies can be approached strategically through standardized guidelines and practices. Regular training, with updates for the annotation team whenever the guidelines change, can significantly reduce errors. Furthermore, quality checks and review mechanisms help catch deviations early, before they propagate into the training data.
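One way to catch deviations early, assuming each item is labeled by several annotators (the original does not specify this), is a majority vote combined with an agreement threshold: items where annotators clearly agree are accepted, and the rest are routed to human review. The function name `consensus` and the default threshold of 0.6 are arbitrary, illustrative choices.

```python
from collections import Counter


def consensus(labels_per_item, min_agreement=0.6):
    """Majority-vote each item and route low-agreement items to human review.

    labels_per_item: {item_id: [one label per annotator]}
    Returns (accepted, needs_review): accepted maps item_id -> winning label.
    """
    accepted, needs_review = {}, []
    for item, labels in labels_per_item.items():
        if not labels:
            needs_review.append(item)  # nothing to vote on yet
            continue
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            accepted[item] = label
        else:
            needs_review.append(item)  # annotators disagree too much
    return accepted, needs_review


votes = {
    "doc1": ["spam", "spam", "spam"],
    "doc2": ["spam", "ham", "ham"],
    "doc3": ["spam", "ham"],
}
accepted, needs_review = consensus(votes)
print(accepted)      # {'doc1': 'spam', 'doc2': 'ham'}
print(needs_review)  # ['doc3']
```

With any threshold above 0.5, ties always go to review. Raising the threshold sends more items to reviewers but lets fewer disputed labels slip into the dataset.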
