H1: Activation Functions in Neural Networks

Step into the field of artificial intelligence and you are quickly surrounded by innovations, each playing a part in how intelligent systems work. One of the most important of these is the activation function. Activation functions in neural networks work behind the scenes and are rarely noticed, yet they are the unsung heroes that let neural networks make sense of the complex world around us.

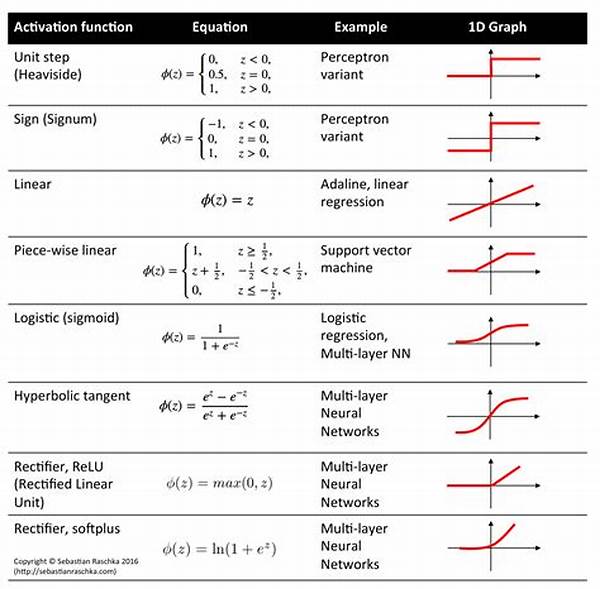

At the heart of every neuron, an activation function takes the weighted sum of the neuron’s inputs and turns it into the neuron’s output, much like a chef turns simple ingredients into a gourmet dish. Without activation functions, a neural network would be nothing more than a linear model: no matter how many layers it had, stacking linear layers still produces a single linear (strictly, affine) transformation, which cannot capture the nonlinear relationships that define real-world data. Common choices such as Sigmoid, ReLU, and Tanh each have different strengths and weaknesses, and the choice can significantly affect how well and how quickly a network learns. Picking an activation function is a bit like a conductor choosing the right instrument for a passage: the best choice depends on the task at hand.
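To see why the nonlinearity matters, here is a minimal sketch in plain Python (no libraries assumed). The weights `W1` and `W2` and the input `x` are hypothetical values chosen for illustration, and biases are omitted for brevity; the same argument holds with biases, because composing two affine maps yields another affine map.

```python
# Two stacked linear layers with no activation in between behave exactly
# like one linear layer whose weight matrix is the product of the two.

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(row[k] * v[k] for k in range(len(v))) for row in m]

def relu(z):
    return max(0.0, z)

# Hypothetical weights for two layers; biases are omitted for brevity.
W1 = [[1.0, 2.0], [3.0, 4.0]]
W2 = [[0.5, -1.0], [2.0, 0.0]]
x = [1.0, -1.0]

two_layers = matvec(W2, matvec(W1, x))                     # layer by layer, no activation
one_layer = matvec(matmul(W2, W1), x)                      # a single merged layer
with_relu = matvec(W2, [relu(h) for h in matvec(W1, x)])   # ReLU between the layers

print(two_layers)  # [0.5, -2.0]
print(one_layer)   # [0.5, -2.0]  (identical: the extra layer added nothing)
print(with_relu)   # [0.0, 0.0]   (the nonlinearity changes the result)
```

The first two results match for every possible input, which is exactly what "collapses into one linear layer" means; only the version with ReLU in between computes something a single linear layer could not.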

This article explores the world of activation functions in neural networks: why they are indispensable and how the choice among them affects a network’s behavior. From simple pattern recognition to large, intricate datasets, these functions shape a neural network’s ability to learn from data, adapt, and predict outcomes. By looking at their design, their uses, and their influence on modern AI, we will see how these small yet mighty components give neural networks the flexibility to model complex patterns that simpler models miss.

As we work through this article, keep in mind how much of modern AI rests on these simple functions, and how they continue to shape what neural networks can do.

H2: The Power Within: Understanding Activation Functions in Neural Networks

In the vast universe of machine learning, activation functions in neural networks are like the hidden gears of a timepiece: integral yet often overlooked. They are what allow neural networks to learn nonlinear patterns, complete complex tasks, and turn raw input data into concrete predictions.

H2: What’s Behind the Magic of Activation Functions?

An activation function determines the output of a node in a neural network. The node first computes a weighted sum of its inputs plus a bias, and the activation function maps that value to the node’s output, which is then passed forward to the next layer. Some functions, such as ReLU, output zero for negative inputs, so they behave a little like a bouncer at a nightclub who lets only guests meeting certain criteria through the door. Others, such as Sigmoid, produce a graded output rather than a strict yes-or-no decision.
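A single neuron can be sketched in a few lines of plain Python. The function name `neuron` and the input, weight, and bias values are hypothetical, chosen only to illustrate the weighted-sum-then-activation pattern described above.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    return max(0.0, z)

def neuron(inputs, weights, bias, activation):
    """One artificial neuron: weighted sum of inputs plus bias, then an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias  # pre-activation value
    return activation(z)

# Hypothetical inputs and parameters, chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, -0.2]
bias = 0.1  # z = 0.2 - 0.3 - 0.4 + 0.1 = -0.4 (up to floating-point rounding)

print(round(neuron(inputs, weights, bias, sigmoid), 4))  # 0.4013: graded, not on/off
print(neuron(inputs, weights, bias, relu))               # 0.0: negative sum is blocked
```

With the same weighted sum, ReLU acts like the strict bouncer and outputs zero, while Sigmoid still passes along a value of about 0.40.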

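Diving into specific examples, we first encounter the Sigmoid activation function, σ(x) = 1 / (1 + e^(-x)), whose graph is an S-shaped curve that squashes every input into the range (0, 1). It introduces non-linearity into the network, but it suffers from the vanishing gradient problem: its derivative is never larger than 0.25, so when gradients are multiplied back through many layers during backpropagation, they can become too small for the earlier layers to learn effectively.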
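The sketch below, in plain Python, evaluates the Sigmoid and its derivative σ(x)(1 − σ(x)), whose maximum value is 0.25 at x = 0. It then shows how fast a product of such factors shrinks with depth. This is a deliberate simplification: real backpropagation also multiplies in the weight matrices, which the comment notes. The naive `sigmoid` shown here would also overflow for very negative inputs; library implementations guard against that.

```python
import math

def sigmoid(x):
    """Logistic sigmoid, 1 / (1 + e^(-x)); maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    """Derivative of the sigmoid, s(x) * (1 - s(x)); its maximum is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

# The slope is largest at 0 and nearly flat once |x| grows (saturation).
for x in (-6.0, -2.0, 0.0, 2.0, 6.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  grad={sigmoid_grad(x):.4f}")

# Backpropagation multiplies in one such derivative per layer. Even in the
# best case (every pre-activation at 0) the factor shrinks geometrically with
# depth; this ignores the weight matrices, which also enter the real product.
for depth in (1, 5, 10, 20):
    print(f"depth={depth:2d}  best-case factor={0.25 ** depth:.2e}")
```

After ten Sigmoid layers the best-case factor is already below one millionth, which is why the earliest layers in deep Sigmoid networks can barely learn.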

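Turning to another popular member of the family, the Rectified Linear Unit (ReLU), defined as ReLU(x) = max(0, x), is known for its efficiency: it is very cheap to compute. It passes positive values through unchanged and sets negative values to zero. Because its gradient is exactly 1 for positive inputs, ReLU greatly reduces the vanishing gradient problem (although units whose inputs stay negative receive zero gradient and can stop learning), and it has become a staple of deep learning.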
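Here is a minimal plain-Python sketch of ReLU and its slope. ReLU’s derivative is undefined at exactly 0; returning 0 there is a common convention and is an assumption of this sketch, not the only valid choice.

```python
def relu(x):
    """Rectified Linear Unit: max(0, x)."""
    return max(0.0, x)

def relu_grad(x):
    """Slope of ReLU: 1 for positive inputs, 0 for negative inputs.

    The derivative is undefined at exactly 0; returning 0 there is a common
    convention, assumed here for simplicity.
    """
    return 1.0 if x > 0 else 0.0

for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
    print(f"x={x:+.1f}  relu={relu(x):.1f}  grad={relu_grad(x):.1f}")

# Along a path of active units (positive inputs) each local slope is exactly 1,
# so the product does not shrink with depth the way sigmoid's factors do.
factor = 1.0
for _ in range(20):
    factor *= relu_grad(1.0)
print(factor)  # 1.0

# A unit whose input stays negative gets zero gradient and stops updating
# (often called a "dead" or "dying" ReLU).
print(relu_grad(-3.0))  # 0.0
```

The two printed results capture ReLU’s trade-off: gradients flow undiminished through active units but stop entirely at inactive ones.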

H3: Crucial Roles of Activation Functions in Neural Networks

Activation functions not only shape how a network learns but can also affect how quickly it converges. Research has shown that the choice of activation function can substantially reduce training time, which makes faster experimentation and development possible. Think of a car’s engine: it determines speed and fuel efficiency, and the wrong choice can turn the trip into a long and tedious road.

Activation functions can also influence how well a network generalizes beyond its training data. Choosing an appropriate one is like choosing the right gear for a mountain climb: it helps ensure the network is equipped to handle new challenges effectively.

Their influence reaches beyond individual networks into AI research and applications as a whole. From autonomous vehicles reacting to real-time sensor data to voice assistants that understand spoken commands, the neural networks behind these advances all depend on activation functions, the unsung champions of modern AI.

H3: Objectives of Activation Functions in Neural Networks

In a nutshell, activation functions shape neural network design and efficiency, much as an artist’s choice of colors shapes a painting. How they are applied determines how effectively a neural network can interpret and adapt to complex data, which in turn drives progress in machine learning. Whether the goal is faster training or more accurate predictions on hard problems, these functions are a cornerstone of AI’s growth and potential.

H2: Illustrative Examples of Activation Functions in Neural Networks

Activation functions may sound like dry machinery running in the background, but picture them as virtual superheroes, each possessing unique powers to combat diverse challenges faced by neural networks. Unseen but mighty, their influence echoes through every inference and prediction a network makes.

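Compared side by side, Sigmoid, Tanh, and ReLU each show their own powers. Sigmoid squashes any input into (0, 1), which makes it a natural fit for outputs that represent probabilities. Tanh squashes inputs into (-1, 1) and is centered on zero, which often makes optimization easier than with Sigmoid, although it too flattens out for large inputs. ReLU leaves positive inputs unchanged and zeroes out negative ones, which keeps it cheap to compute and helps gradients flow through deep networks.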
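A short plain-Python script makes this comparison concrete by evaluating all three functions at a few sample inputs. It uses `math.tanh` from the standard library for Tanh; the sample inputs and the table layout are arbitrary choices for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def relu(x):
    return max(0.0, x)

# The three activation functions named in this article.
ACTIVATIONS = {"sigmoid": sigmoid, "tanh": math.tanh, "relu": relu}
inputs = (-3.0, -1.0, 0.0, 1.0, 3.0)

# Print a small comparison table: one row per input, one column per function.
print("    x " + "".join(f"{name:>9}" for name in ACTIVATIONS))
for x in inputs:
    row = "".join(f"{fn(x):9.4f}" for fn in ACTIVATIONS.values())
    print(f"{x:+5.1f} {row}")
```

Reading down the columns shows the ranges directly: Sigmoid stays between 0 and 1 with 0.5 at the origin, Tanh stays between -1 and 1 with 0 at the origin, and ReLU is flat at zero on the left and a straight line on the right. Tanh is in fact a rescaled Sigmoid, tanh(x) = 2σ(2x) - 1, which is why the two curves share the same S shape.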
