- Testing Algorithms for Fairness

- The Role of Fairness in AI

- Understanding the Need for Testing Algorithms for Fairness

- The Impact of Unchecked Bias

- Delving into Fairness Metrics

- Toward a Fair and Equitable Future

- The Importance of Fairness Evaluation in AI Systems

- Key Considerations in Testing Algorithms for Fairness

- Addressing Socio-technical Implications

- Tools and Frameworks for Ensuring Fairness

- Toward Continuous Improvement and Accountability

- Ethical Implications in Algorithm Fairness

- Discussions on Testing Algorithms for Fairness

- How Can We Improve Testing Algorithms for Fairness?

- Fairness Auditing: More Than a Buzzword

- Frameworks and Tools for the Future

- 7 Illustrations Related to Testing Algorithms for Fairness

- Illustrating Fairness: How Visuals Make a Difference

- Turning Numbers into Narratives

- Impactful Storytelling through Cartoons and Diagrams

- How Illustrations Transform Understanding of Fairness

Testing Algorithms for Fairness

In today’s digitally driven world, algorithms have become the invisible architects shaping our daily lives. From deciding what news we see to determining our credit scores, these complex mathematical formulations wield tremendous power. However, with great power comes great responsibility, and the question arises: how can we ensure these algorithms act fairly and without bias? This issue isn’t just an abstract concern for data scientists; it’s a topic that touches the lives of everyday people. Imagine a loan application denied not because of the applicant’s actual financial health but because of a bias built into an algorithm. That is why testing algorithms for fairness has never been more critical.

In this brave new world of machine learning and AI, fairness is the currency of trust. Businesses that take the lead in testing their algorithms for fairness not only stand to gain consumer trust but also set an example for a more equitable digital ecosystem. According to a study conducted by [Techno Research Group](https://www.techno.com/researchgroup), companies that are perceived as fair see a 30% increase in customer loyalty. This compelling statistic should make every company pause and reflect on the ethical implications of its technology deployments. Implementing fairness isn’t just an ethical choice; it’s a strategic business move.

But what does testing algorithms for fairness actually entail? At its core, it involves scrutinizing an algorithm’s outcomes, typically broken down by demographic group, to check whether they are unbiased and equitable. This means going beyond aggregate performance metrics such as overall accuracy and asking whose needs are being served, and at whose expense. The need for this scrutiny has fueled growing demand for algorithm auditors, a new breed of tech-savvy professionals capable of identifying and mitigating bias.
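The following minimal Python sketch makes “going beyond mere performance metrics” concrete. It runs a disaggregated evaluation, computing accuracy overall and then separately for each group. The function name `accuracy_by_group` and the toy labels are hypothetical. They were chosen only to show how a respectable overall score can hide a group for which the model fails.

```python
from collections import defaultdict

def accuracy_by_group(y_true, y_pred, groups):
    """Return (overall accuracy, {group: accuracy}) for parallel label lists."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    overall = sum(correct.values()) / sum(total.values())
    per_group = {g: correct[g] / total[g] for g in total}
    return overall, per_group

# Hypothetical toy data: group "A" dominates the sample, group "B" is small.
y_true = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 1]
groups = ["A"] * 10 + ["B"] * 2

overall, per_group = accuracy_by_group(y_true, y_pred, groups)
print(f"overall accuracy: {overall:.2f}")
for g, acc in sorted(per_group.items()):
    print(f"group {g}: {acc:.2f}")
```

On this toy data the overall accuracy is 0.83, yet group A scores 1.00 and group B scores 0.00, so the small group bears every error. In a real audit, the group labels would come from whichever protected attributes are relevant to the application.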

The call to action is clear. Companies, tech professionals, and policymakers alike must rally around the cause of testing algorithms for fairness. It’s not just a trend; it’s a necessity. As we advance towards a future dominated by AI, fairness must be baked into the very code that defines our daily interactions. So, are you ready to lead your organization into this ethical frontier? Engaging with fairness testing services is the first step on this vital journey.

The Role of Fairness in AI

—

Understanding the Need for Testing Algorithms for Fairness

As modern life becomes more interwoven with technology, the demand for fairness in algorithmic decisions grows. Whether they are screening job applications, approving loans, or curating social media feeds, algorithms have a profound impact on people’s lives. However, this technological marvel raises pressing ethical questions. Can we ensure these algorithms operate fairly? Is there a way to balance efficiency with justice? This is where testing algorithms for fairness comes in: a practice born of necessity that aims both to promote equality and to avoid the pitfalls of biased decision-making.

The Impact of Unchecked Bias

Unchecked bias in AI can lead to alarming consequences. Imagine an AI recruiting platform that systematically favors one demographic group over others, or a healthcare algorithm that systematically underserves minority patients. The societal costs are real and significant, which is why the conversation about testing algorithms for fairness continues to grow. Algorithms should reflect the diverse populations they serve, and in doing so, they can help bridge glaring societal gaps. Through continuous validation, companies can catch discriminatory outcomes and make data-driven decisions that align more closely with ethical standards.

Delving into Fairness Metrics

Fairness metrics serve as the backbone for evaluating the fairness of an algorithm. They act as a yardstick for measuring disparities in an algorithm’s results. These metrics range from demographic parity to equal opportunity, and each provides a different lens through which fairness can be evaluated. Demographic parity asks whether each group receives positive outcomes at the same rate. Equal opportunity asks whether qualified individuals in each group are equally likely to receive a positive outcome. Data scientists and ethicists often debate which fairness metric should carry the most weight, and rightfully so. The choice of metric can dramatically alter a system’s behavior and societal impact. As such, testing algorithms for fairness is not a one-size-fits-all exercise.
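The two metrics named above can be sketched in a few lines of Python. This is an illustrative implementation, not any particular library’s API, and the function names and toy data are hypothetical.

- Demographic parity compares selection rates, P(Ŷ = 1 | A = a), across groups.
- Equal opportunity compares true positive rates, P(Ŷ = 1 | Y = 1, A = a).

Each function reports the largest gap between any two groups, so a value of 0 means the criterion is met exactly.

```python
def selection_rate(y_pred, groups, g):
    """Share of members of group g who receive a positive prediction."""
    preds = [p for p, grp in zip(y_pred, groups) if grp == g]
    return sum(preds) / len(preds)

def true_positive_rate(y_true, y_pred, groups, g):
    """Among members of group g whose true label is 1, share predicted 1."""
    hits = [p for t, p, grp in zip(y_true, y_pred, groups) if grp == g and t == 1]
    return sum(hits) / len(hits)

def demographic_parity_difference(y_pred, groups):
    """Largest gap in selection rate between any two groups."""
    rates = [selection_rate(y_pred, groups, g) for g in set(groups)]
    return max(rates) - min(rates)

def equal_opportunity_difference(y_true, y_pred, groups):
    """Largest gap in true positive rate between any two groups."""
    rates = [true_positive_rate(y_true, y_pred, groups, g) for g in set(groups)]
    return max(rates) - min(rates)

# Hypothetical data: the groups have different base rates (3/4 vs 1/4 positive),
# and the classifier is perfect (its predictions equal the true labels).
y_true = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
y_pred = list(y_true)

print(demographic_parity_difference(y_pred, groups))         # 0.5
print(equal_opportunity_difference(y_true, y_pred, groups))  # 0.0
```

The example uses a perfectly accurate classifier on data where the two groups have different base rates. It satisfies equal opportunity (gap 0.0) but violates demographic parity (gap 0.5). When base rates differ, even a perfectly accurate classifier cannot satisfy both criteria, which is one concrete reason the choice of metric matters.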

Toward a Fair and Equitable Future

The goal of implementing fairness in AI systems is not just to prevent bias, but also to foster an environment where technology acts as an equalizer. This is where data scientists step in, acting as both creators and gatekeepers. Testing algorithms for fairness shouldn’t be an afterthought but a crucial part of the design process. By building in fairness from the ground up, we can move toward a more equitable tech landscape. As consumers, our collective role in this journey is to stay informed and hold companies accountable.

The Importance of Fairness Evaluation in AI Systems

—

Key Considerations in Testing Algorithms for Fairness

Fairness testing isn’t merely a buzzword; it’s a necessary step in the ethical development of artificial intelligence. But what factors should we consider when testing for fairness? First, understanding the context in which the algorithm is applied is vital. Different sectors, such as healthcare, finance, and hiring, call for different fairness criteria. Misapplying a criterion can lead to skewed results that do not reflect real-world needs.

Addressing Socio-technical Implications

Second, we must consider the socio-technical implications. This means acknowledging that algorithms don’t exist in a vacuum; they interact with the society in which they are deployed. Testing algorithms for fairness should therefore account for the broader societal context. It’s essential to consider who the stakeholders are and how algorithmic decisions affect them. Assessing this impact holistically can help keep harmful biases from becoming entrenched within systems.

Tools and Frameworks for Ensuring Fairness

Several tools and frameworks have been developed to aid in this process. Software such as Google’s Fairness Indicators and IBM’s AI Fairness 360 computes quantitative fairness metrics for a model’s predictions. AI Fairness 360 also includes algorithms for mitigating bias. These tools help companies measure and reduce bias, but they are not panaceas. Human judgment, informed by domain knowledge and ethical considerations, remains irreplaceable in the fairness testing process.
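Reweighing is one example of what “rectifying” bias can look like. It is a pre-processing technique that AI Fairness 360 also offers as its `Reweighing` algorithm. The sketch below is written from scratch in plain Python and does not reproduce that library’s API. The helper names and data are hypothetical.

Each training example receives the weight P(group) · P(label) / P(group, label). Under these weights, group membership and label become statistically independent in the training set.

```python
from collections import Counter

def reweighing_weights(labels, groups):
    """Weight each sample by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]

def positive_rate(labels, groups, weights, g):
    """Weighted share of favorable labels (1) within group g."""
    num = sum(w for y, grp, w in zip(labels, groups, weights) if grp == g and y == 1)
    den = sum(w for grp, w in zip(groups, weights) if grp == g)
    return num / den

# Hypothetical training labels: group A has a much higher favorable rate than B.
labels = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4

unweighted = [1.0] * len(labels)
weights = reweighing_weights(labels, groups)

for g in ("A", "B"):
    before = positive_rate(labels, groups, unweighted, g)
    after = positive_rate(labels, groups, weights, g)
    print(g, round(before, 2), round(after, 2))
```

Before weighting, the favorable-label rate is 0.75 for group A and 0.25 for group B. After weighting, both rates are 0.5. This sketch assumes the weights are then passed to a learner that accepts per-sample weights. Reweighing only rebalances the training labels, so the trained model still has to be tested afterward.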

Toward Continuous Improvement and Accountability

Lastly, fairness testing is not a one-off task but a continuous process. Periodic reevaluation keeps the system in step with evolving data and societal change. Moreover, accountability measures should be put in place to ensure adherence to fairness standards. Testing algorithms for fairness must involve not just internal data science teams but also external auditors, to maintain objectivity. In conclusion, fairness in algorithms isn’t a destination but a journey: a shared effort toward a balanced digital future.
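Periodic reevaluation can be partly automated. The sketch below recomputes the demographic parity gap for each batch of decisions (for example, one batch per month) and flags any period whose gap exceeds a tolerance. The names `audit_over_time` and `AuditResult`, the period labels, and the 0.1 tolerance are all hypothetical. An appropriate threshold would depend on the application and on any applicable legal standard.

```python
from dataclasses import dataclass

@dataclass
class AuditResult:
    period: str
    dp_difference: float
    flagged: bool

def dp_difference(y_pred, groups):
    """Largest gap in selection rate (share predicted 1) between groups."""
    rates = []
    for g in set(groups):
        preds = [p for p, grp in zip(y_pred, groups) if grp == g]
        rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)

def audit_over_time(batches, tolerance=0.1):
    """batches: iterable of (period_label, y_pred, groups) tuples.

    The default tolerance of 0.1 is a hypothetical choice, not a standard.
    """
    results = []
    for period, y_pred, groups in batches:
        diff = dp_difference(y_pred, groups)
        results.append(AuditResult(period, diff, diff > tolerance))
    return results

# Hypothetical decision logs for two periods.
batches = [
    ("month-1", [1, 0, 1, 0], ["A", "A", "B", "B"]),
    ("month-2", [1, 1, 1, 0], ["A", "A", "B", "B"]),
]
results = audit_over_time(batches)
for r in results:
    print(r.period, round(r.dp_difference, 2), "FLAG" if r.flagged else "ok")
```

Here the first period shows no gap and passes, while the second shows a gap of 0.5 and is flagged. A flag is a prompt for human review, not a verdict; internal teams and external auditors would still need to interpret it.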

Ethical Implications in Algorithm Fairness

—

Discussions on Testing Algorithms for Fairness

1. Audience Awareness: How do businesses communicate their algorithm fairness practices to a non-tech-savvy audience?

2. Legal Frameworks: What are the current legal standards for algorithm fairness in different industries?

3. Societal Impact: How does algorithm bias perpetuate existing societal inequalities?

4. Corporate Responsibility: What role do companies play in ensuring algorithmic fairness?

5. Data Diversity: How does the diversity of training data sources affect fairness outcomes?

6. Tech Transparency: How transparent should companies be about their algorithmic testing methods?

7. Consumer Education: What steps can be taken to educate consumers about AI and fairness?

8. Bias Identification: What are effective methods for identifying hidden bias in AI systems?

9. Advocacy and Policy: How are advocacy groups influencing policies around algorithm fairness?

10. Innovative Solutions: What novel technologies are emerging to address algorithm fairness?

How Can We Improve Testing Algorithms for Fairness?

While headlines often spotlight the allure of cutting-edge AI, they should give equal space to the intricate work of fairness. Testing algorithms for fairness is like assembling a puzzle whose pieces are stakeholder needs, ethical guidelines, and societal norms. The task seems straightforward, yet the devil is in the details: a single oversight can let a subtle bias grow into a systemic one.

Fairness Auditing: More Than a Buzzword

Fairness auditing is becoming more than a technical or regulatory checkbox; it’s a movement. Companies rushing to embrace digital transformation now find themselves scrutinizing not just their datasets but the fairness of their models. Take AI-driven hiring tools, which influence thousands of hiring decisions. What criteria do they actually reward? Testing these systems for fairness isn’t about making minor tweaks; it’s about correcting structural biases and recalibrating standards for fairness.

Frameworks and Tools for the Future

New approaches such as inclusive AI testing are emerging as lighthouses, guiding organizations toward more equitable outcomes. But are these tools foolproof? Adopting a framework isn’t enough on its own. Understanding its metrics, knowing its limitations, and building a culture of continuous learning and improvement are paramount. As fairness becomes a precondition rather than a postscript, industries must remain agile and current.

7 Illustrations Related to Testing Algorithms for Fairness

Illustrating Fairness: How Visuals Make a Difference

Visuals play a pivotal role in communicating complex ideas such as algorithm fairness, bringing technical stories to life. A fairness metric may look dry in a spreadsheet, but animate it and it comes alive. It can then engage stakeholders who care about fairness yet lack technical expertise. Elegantly crafted visuals make these statistics relatable, turning abstract data into concrete insight that inspires action.

Turning Numbers into Narratives

While numbers are inherently objective, the stories they tell can be subjective, malleable in the hands of those who present them. This is why illustrating algorithm fairness calls for creativity tempered by ethics. Imagine an infographic that traces the fairness journey from initial data collection to final deployment. Such visual storytelling can reach audiences on a level that raw statistics might never achieve.

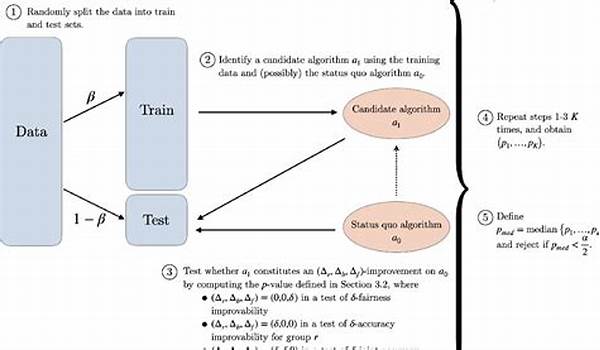

Impactful Storytelling through Cartoons and Diagrams

Imagine a cartoon starring an “Algorithm Detective” who dives into the world of zeros and ones, unraveling biases like a tech-themed whodunit. Or imagine a diagram of an algorithm fairness workflow, with arrows tracing the human impact of each decision point. Such illustrations don’t just educate; they provoke thought, discussion, and, eventually, change.

How Illustrations Transform Understanding of Fairness

Ultimately, illustrations are more than embellishments; they’re tools of persuasion and clarity. As testing algorithms for fairness grows more important, decision-makers and technical newcomers alike need to see the impact of bias and the value of fairness through these visual lenses. Combined with statistics, visuals become a kind of Rosetta Stone for deciphering what is truly fair in the often opaque world of AI.