H1: Cross-Validation Strategies for Improved Prediction

Cross-validation is a fundamental technique in the data science toolkit, designed to estimate how well a predictive model will generalize to data it has not seen. It’s like taking your model on a practice lap before the big race: you find out how it handles the track before the results count. If you’ve ever wondered whether your algorithm’s impressive score will hold up outside your notebook, cross-validation strategies for improved prediction might just be your secret weapon. The technique divides your data into parts, trains your model on some parts, then tests it on the others. Whether you’re developing a recommendation engine that suggests the next binge-worthy series on a streaming platform, or you’re engaged in serious predictive modeling for market trends, cross-validation gives you a more trustworthy picture of performance than a single train/test split.

Imagine you’re a chef preparing a dish for a prestigious cooking competition. Instead of just hoping for the best, wouldn’t you taste it several times during the preparation to make sure it’s just right? That’s cross-validation in the culinary world! Similarly, in data science, cross-validation acts as a litmus test for your models: it exposes overfitting and shows how well they are likely to handle unseen data, so you can adjust them before it matters.

The discipline of applying cross-validation ensures your algorithm isn’t just flaunting its skills on known data. It reveals how the model might behave in real-world scenarios, which makes its reported performance far more reliable. In a sense, cross-validation is the mentor that whispers wisdom into the ears of your algorithms, warning you about overfitting: the notorious pitfall where a model performs great on training data but flops in the real world.
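To make the overfitting pitfall concrete, here is a deliberately extreme toy in plain Python (no third-party libraries assumed). The hypothetical `fit_lookup` model simply memorizes every training row and falls back to the most common training label for anything it hasn’t seen. Scored on its own training data it looks perfect; scored on a held-out slice, it is exposed.

```python
from collections import Counter


def fit_lookup(X, y):
    """Hypothetical 'model' that memorizes each training row.

    Unseen rows fall back to the most common training label.
    """
    table = dict(zip(X, y))
    fallback = Counter(y).most_common(1)[0][0]
    return table, fallback


def accuracy(model, X, y):
    """Fraction of rows whose predicted label matches the true label."""
    table, fallback = model
    hits = sum(table.get(x, fallback) == label for x, label in zip(X, y))
    return hits / len(y)


# Toy data: each row is a unique ID, and the labels simply alternate 0/1.
X = list(range(100))
y = [i % 2 for i in X]

# Hold out every fifth row; the model never sees these while fitting.
held_out = set(range(0, 100, 5))
X_train = [x for x in X if x not in held_out]
y_train = [y[x] for x in X_train]
X_test = sorted(held_out)
y_test = [y[x] for x in X_test]

model = fit_lookup(X_train, y_train)
print(accuracy(model, X_train, y_train))  # 1.0: perfect recall of memorized rows
print(accuracy(model, X_test, y_test))    # 0.5: no better than a coin flip
```

Cross-validation repeats this kind of held-out check across several different splits instead of trusting just one.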

Your models no longer need to fear the uncertain terrain of novel datasets quite so much. With cross-validation, data scientists and machine learning enthusiasts can have well-founded confidence in their models’ performance. Cross-validation can also unearth insights about the dataset that you weren’t expecting, like discovering a hint of lime in what was thought to be a chocolate-focused recipe! For example, if one fold scores far worse than the others, that slice of data may contain unusual records or a subgroup the model handles poorly.

H2: Understanding Key Cross-Validation Techniques

H3: Refining Model Accuracy with Cross-Validation

The pursuit of precision in machine learning models often feels like chasing perfection in a never-ending marathon. This is where cross-validation becomes more than a mere tool; it becomes a steadfast ally. Cross-validation slices your data into manageable portions and scores your model on each portion it wasn’t trained on, scrutinizing how its decisions hold up across different subsets. In the ever-evolving world of data-driven decision-making, who wouldn’t want to spot overfitting before it trips up their model?

Let’s get down to brass tacks: why does cross-validation matter so much? Every data scientist has faced the nightmare of deploying a model only to find it flailing when exposed to unfamiliar data. Cross-validation acts as a rigorous boot camp that tests your model against many different slices of data before deployment. By adopting cross-validation strategies, you’re essentially investing in a predictive polygraph test that gives an honest estimate of your model’s true capabilities.

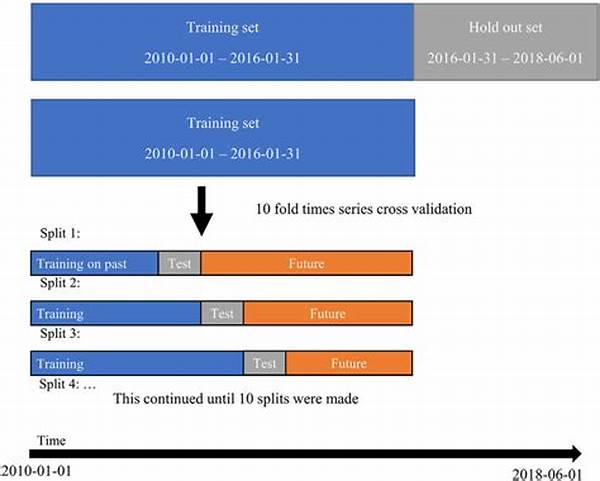

In the multi-hued palette of cross-validation, k-fold stands as a masterpiece. Picture this: your dataset is (usually after shuffling) partitioned into k folds of nearly equal size. The model is trained on k-1 folds and tested on the remaining one. This cycle is repeated k times, until each fold has served as the test set exactly once. The k scores are then averaged into a single performance estimate, and their spread tells you how much that estimate varies from split to split. Common choices are k = 5 or k = 10. It’s methodical, efficient, and, quite frankly, indispensable for comparing models and tuning their settings.
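The loop described above can be sketched in plain Python using only the standard library. The helper names (`k_fold_indices`, `k_fold_scores`), the toy least-squares line, and the noisy synthetic data are illustrative assumptions rather than any particular library’s API; in a real project you would more likely reach for scikit-learn’s `KFold` and `cross_val_score`.

```python
import random
from statistics import mean, stdev


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle row indices once, then cut them into k folds whose sizes differ by at most one."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds, start = [], 0
    for i in range(k):
        # The first (n_samples % k) folds get one extra row.
        size = n_samples // k + (1 if i < n_samples % k else 0)
        folds.append(indices[start:start + size])
        start += size
    return folds


def k_fold_scores(fit, score, X, y, k=5, seed=0):
    """Train on k-1 folds and score on the held-out fold, once per fold."""
    folds = k_fold_indices(len(X), k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = [j for m, fold in enumerate(folds) if m != i for j in fold]
        model = fit([X[j] for j in train_idx], [y[j] for j in train_idx])
        scores.append(score(model, [X[j] for j in test_idx], [y[j] for j in test_idx]))
    return scores


def fit_line(xs, ys):
    """Ordinary least-squares line y = a*x + b, standing in for any model."""
    mx, my = mean(xs), mean(ys)
    a = sum((xi - mx) * (yi - my) for xi, yi in zip(xs, ys)) / sum((xi - mx) ** 2 for xi in xs)
    return a, my - a * mx


def mean_abs_error(model, xs, ys):
    """Average absolute gap between the line's predictions and the true values."""
    a, b = model
    return mean(abs(a * xi + b - yi) for xi, yi in zip(xs, ys))


# Synthetic data: a straight line plus Gaussian noise.
rng = random.Random(42)
X = [float(i) for i in range(50)]
y = [2.0 * x + 1.0 + rng.gauss(0.0, 3.0) for x in X]

scores = k_fold_scores(fit_line, mean_abs_error, X, y, k=5)
print(f"MAE per fold: {[round(s, 2) for s in scores]}")
print(f"mean = {mean(scores):.2f}, std = {stdev(scores):.2f}")
```

Reporting the standard deviation next to the mean is a deliberate choice: a large spread across folds is itself a warning sign that the estimate depends heavily on which rows land in which fold.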

Different datasets and problems call for different cross-validation strategies, so choosing the right one matters. Take imbalanced classification as an example: stratified k-fold cross-validation keeps the class proportions in each fold close to those of the entire dataset, so no fold ends up with too few (or zero) examples of a rare class, and the fold scores vary less from split to split. Meanwhile, leave-one-out cross-validation, which is simply k-fold with k equal to the number of samples, needs one model fit per data point and is computationally taxing, but it can be precisely what’s needed for smaller datasets where every data point is worth its weight in gold.
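A minimal sketch of stratification, again in plain Python with hypothetical helper names: group row indices by class label, then deal each class out across the folds like cards. Leave-one-out needs no special machinery, since it is just one fold per row. For real projects, scikit-learn provides `StratifiedKFold` and `LeaveOneOut`.

```python
import random
from collections import Counter, defaultdict


def stratified_k_fold_indices(labels, k, seed=0):
    """Deal each class's shuffled indices round-robin across k folds.

    Every fold ends up with (nearly) the same class proportions as the full dataset.
    """
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    position = 0  # keeps counting across classes so overall fold sizes stay balanced
    for idxs in by_class.values():
        rng.shuffle(idxs)
        for idx in idxs:
            folds[position % k].append(idx)
            position += 1
    return folds


def leave_one_out_indices(n_samples):
    """Leave-one-out is k-fold with k == n_samples: each fold holds a single row."""
    return [[i] for i in range(n_samples)]


# Imbalanced toy labels: 90 negatives, 10 positives.
labels = [0] * 90 + [1] * 10
folds = stratified_k_fold_indices(labels, k=5)
print([Counter(labels[i] for i in fold)[1] for fold in folds])  # [2, 2, 2, 2, 2]
print(len(leave_one_out_indices(len(labels))))  # 100 folds, so 100 model fits
```

Every fold gets exactly two of the ten positives, matching the dataset’s 10% rate; a plain shuffled split offers no such guarantee.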

Finally, from a narrative perspective, the story of cross-validation is one of resilience and adaptation. It’s akin to a martial arts student training under varied conditions to become an adept fighter. The battle isn’t merely with new datasets but with ourselves: to craft models that serve real-world applications with pinpoint precision. Cross-validation therefore not only sharpens technical skills but also fosters an evolutionary mindset that turns data scientists into adept model artisans.

H2: Advanced Techniques in Cross-Validation

H3: Real-World Applications of Cross-Validation

H3: Examples of Cross-Validation Strategies for Improved Prediction

H3: Introduction to Cross-Validation Techniques

Data science takes the front seat in this fast-paced digital era, driven by an intense quest for accuracy and precision. It’s a roller coaster ride, filled with intricate turns of datasets and unpredictable loops of patterns. And as exhilarating as it might seem, one needs robust strategies to verify the performance of predictive models. Enter cross-validation strategies for improved prediction, the lifeline for those on this analytical adventure.

You might wonder: why such a hullabaloo over cross-validation? Imagine you’re at a dance-off. You wouldn’t waltz onto the stage without rehearsal, right? Cross-validation makes sure your model’s dance moves are well-practiced, testing its mettle on varied sequences before the grand performance. Because it lets you compare different models and settings on data they haven’t seen, you can fine-tune your predictive models with evidence rather than guesswork before they step out onto the data-driven dance floor. It’s your backstage pass to model resilience and predictive prowess.

H2: Elevating Predictive Accuracy through Cross-Validation

H3: Implementing Cross-Validation in Data Science Projects

H3: Exploring Cross-Validation Strategies for Enhanced Predictions

In the universe of machine learning, designing models is an imaginative process teeming with creativity and potential. Yet, without the scaffolding of cross-validation, the structure might collapse like a poorly assembled puzzle. Cross-validation is an insurance policy for your data science models: it warns you, before deployment, when a model is unlikely to weather the erratic storms of unexpected data.

Why are cross-validation strategies indispensable, you may ask? Just as superheroes train to harness their abilities, models need a regimen that tests their capabilities. Cross-validation slices the dataset into different combinations, creating a rigorous proving ground. In each round, a fresh copy of the model is trained on one combination and scored on data it did not see. Comparing the rounds shows how stable the model really is, and that evidence guides how you refine it: a kind of Mr. Miyagi-style tutelage for aspiring predictive engines.

Many data enthusiasts think of cross-validation as the ultimate Swiss Army knife of data science. Different tasks call for different tools, and the family includes k-fold, leave-one-out, and the closely related bootstrap. K-fold divides the dataset into segments so that every slice gets its turn as the test set; leave-one-out holds out a single observation at a time, which suits small datasets; bootstrapping trains on samples drawn with replacement and typically evaluates on the rows each sample happened to leave out, simulating a cornucopia of data scenarios. It’s versatile, effective, and, used well, transformative.
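Bootstrapping is easiest to see in code. The sketch below shows one common variant, the out-of-bag estimate, in plain Python; the helper names, the mean-predicting baseline model, and the synthetic data are illustrative assumptions. Each resample leaves out roughly a third of the rows (the expected fraction approaches 1/e, about 36.8%, as the dataset grows), and those left-out rows serve as that round’s test set.

```python
import random
from statistics import mean


def bootstrap_oob_scores(fit, score, X, y, n_rounds=200, seed=0):
    """Out-of-bag bootstrap: train on a resample drawn with replacement,
    then score on the rows that resample never picked."""
    rng = random.Random(seed)
    n = len(X)
    scores = []
    for _ in range(n_rounds):
        in_bag = [rng.randrange(n) for _ in range(n)]  # n draws with replacement
        picked = set(in_bag)
        out_of_bag = [i for i in range(n) if i not in picked]
        if not out_of_bag:  # every row was drawn at least once; nothing left to test on
            continue
        model = fit([X[i] for i in in_bag], [y[i] for i in in_bag])
        scores.append(score(model, [X[i] for i in out_of_bag], [y[i] for i in out_of_bag]))
    return scores


def fit_mean(xs, ys):
    """Baseline 'model' that always predicts the training mean."""
    return mean(ys)


def mean_abs_error(model, xs, ys):
    """Average absolute gap between the constant prediction and the true values."""
    return mean(abs(model - yv) for yv in ys)


# Synthetic data: 30 noisy measurements around 10.
rng = random.Random(7)
X = list(range(30))
y = [rng.gauss(10.0, 2.0) for _ in X]

scores = bootstrap_oob_scores(fit_mean, mean_abs_error, X, y)
print(f"{len(scores)} rounds, mean OOB MAE = {mean(scores):.2f}")
```

Unlike k-fold, a given row may be tested in many rounds or in none, so the bootstrap trades the clean "each row tested once" guarantee for many more resamples.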

Cross-validation’s underlying strength is resilience. Models that hold up under cross-validation are more likely to cope with uncharted data landscapes, because overfitting would have shown up as a gap between their training and validation scores. As we navigate the data maze, cross-validation maps out pathways through the labyrinthine complexity, leading to clarity: an honest view of how well our predictions are likely to hold up.

In summary, cross-validation strategies are not merely an option; they’re a necessity. They help you build models that are flexible, robust, and ready for the data challenges on the horizon. By building these strategies into their workflow, data architects make sure their creations don’t just predict; they deliver insights whose accuracy and impact have been checked, not assumed.

H2: Implementing Effective Cross-Validation in Model Development

H3: Benefits of Using Cross-Validation Techniques

H3: 10 Tips for Effective Cross-Validation Strategies

H3: Describing Cross-Validation: Essential Strategies

When it comes to designing resilient models, cross-validation is the secret sauce that data scientists swear by. Let’s face it, navigating datasets without this compass can feel like taking a ride through unfamiliar streets without a GPS. Harnessing cross-validation strategies for improved prediction is all about orchestrating your model’s dance through the dataset labyrinth with precision and flair.

Cross-validation isn’t just a safety net; it’s akin to a crash test for your model. Before releasing models into the bustling city of real-world data, running them through the gauntlet of cross-validation shows whether they’re ready to handle unexpected curves. It’s the proverbial dress rehearsal that exposes models to many different slices of data, revealing weaknesses before a real audience does.

Despite its power, cross-validation doesn’t have to be complicated; it’s like tuning your favorite musical instrument. Choosing among validation techniques is about fine-tuning, getting those notes just right: stratified folds for imbalanced classes, leave-one-out for tiny datasets, plain k-fold as a sensible default. It’s not one-size-fits-all, and that’s where the adaptability of cross-validation shines, offering flexibility akin to a maestro conducting a symphony of data permutations.

Ultimately, embracing cross-validation isn’t just good practice; it’s necessary practice. It lets you peek behind the curtain at how a model is likely to perform on data it has never seen, so you can tailor its predictions with confidence. As the industry strides towards predictive excellence, those integrating cross-validation strategies for improved prediction into their workflow are setting the gold standard in thoughtful, precise, and impactful data science applications.

H2: Mastering Cross-Validation for Precise Predictions

H3: Integrating Cross-Validation into Everyday Data Analysis