Artificial Intelligence (AI) holds transformative potential for nearly every industry, from healthcare and finance to education and beyond. That power brings responsibility. Because many AI systems are opaque, they often provoke distrust and misunderstanding: a model that decides loan approvals can easily be labeled biased when nobody can see which inputs drove a decision or why. Enhancing transparency in AI matters for ethical reasons, for end-user confidence, and for the integrity of the industry as a whole. This is where strategies for AI transparency enhancement come into play as core elements of contemporary digital ethics and governance.

Why is transparency important, you ask? Because a peek behind the curtain reveals the logic behind AI decisions and demystifies what would otherwise look like an inexplicable black box. When users understand the ‘how’ and ‘why’ behind decisions, businesses can build and maintain their trust, a commodity at least as valuable as any tech innovation. Transparency can also reduce the risks associated with AI applications by supporting compliance with legal standards and promoting ethical use.

Despite its significance, AI transparency often feels like catching a greased-up pig: tricky and elusive. Many current AI systems rely on architectures so complex that even their creators struggle to explain individual decisions. You might be thinking, “How on earth do we peel back the layers?” That’s where strategies for AI transparency enhancement step in. They underpin productive partnerships between AI developers and the stakeholders who demand accountability and fairness. Ready to embark on this journey? Let’s look more closely at these strategies.

Unveiling Transparency: A Dive into AI Practices

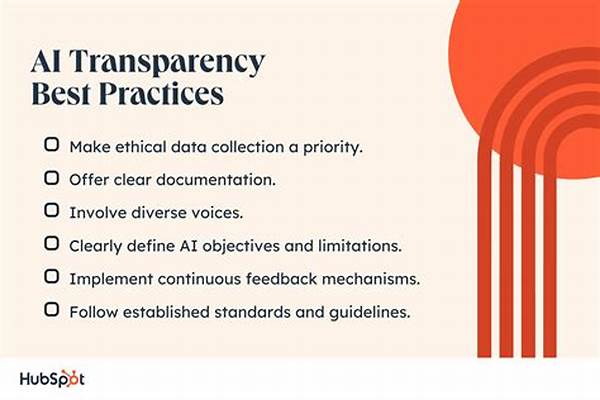

Although unveiling AI transparency may sound like a feat reserved for tech wizards, everyday practitioners and stakeholders can adopt practices to enhance AI openness. These strategies for AI transparency enhancement can be categorized into several actionable approaches that aim to transform the idea of AI ethics into a tangible reality.

Firstly, documentation is king. Comprehensive documentation of an AI system’s decision-making pathways lets both experts and novices see how it reaches its outputs. Collaborative platforms where developers openly share models and methodologies can spark community discussion, leading to shared learning and improved transparency. Think of this strategy as open-sourcing the AI brain: radical, but rewarding.

Secondly, user interface design plays a pivotal role in AI transparency. Imagine an AI application wrapped in a user-friendly interface that offers textual or visual explanations for its actions—a delightful blend of transparency and usability! This strategy calls for human-centric AI interfaces capable of explaining decisions in layman’s terms, fostering user comprehension and trust.

—

Detailed Examination of AI Strategies

The journey from opacity to transparency in AI is a complex one, necessitating precise and effective strategies. In this section, we will discuss key methods that serve as catalysts in enhancing transparency within AI systems, creating an ecosystem of clarity and trust.

Methods for Implementing Transparency

Documentation and Open-Source Collaboration

Making AI transparent takes collaboration across the AI community. Documenting how a model was built, what data it was trained on, and where it is known to fall short fosters a culture of openness. With that record in hand, peer reviewers and stakeholders can not only understand existing systems but also help improve them.

Furthermore, open-source platforms play a pivotal role by democratizing AI development. By building a community-driven approach, developers can encourage scrutiny and collective improvement, vastly enhancing transparency.
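One way to make this kind of documentation concrete is a small, machine-readable record published alongside the model. The sketch below is only an illustration: the `ModelCard` class, its field names, and the example values are all hypothetical and do not come from any particular standard or library.

```python
import json
from dataclasses import dataclass, field, asdict
from typing import List


@dataclass
class ModelCard:
    """Hypothetical, minimal documentation record for one model."""
    name: str
    version: str
    intended_use: str
    training_data: str
    input_features: List[str]
    known_limitations: List[str] = field(default_factory=list)

    def missing_fields(self) -> List[str]:
        """Return the names of fields that were left empty."""
        return [key for key, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Serialize the card so it can be shared next to the model."""
        return json.dumps(asdict(self), indent=2)


# Illustrative values only; a real card would describe a real system.
card = ModelCard(
    name="loan-approval-classifier",
    version="0.1.0",
    intended_use="Rank consumer loan applications for human review.",
    training_data="Placeholder: describe source, date range, and consent.",
    input_features=["income", "debt_ratio", "years_employed"],
)

print(card.missing_fields())  # flags sections nobody has filled in yet
print(card.to_json())
```

A check like `missing_fields` could run before each release, so that an undocumented limitation section is noticed by reviewers rather than silently shipped.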

Human-Centric Interfaces

Effective communication between humans and AI is fundamental. Developing interfaces that provide intuitive, human-centric explanations for AI’s decision-making processes ensures users are not left in the dark. Innovations such as natural language processing can be leveraged to provide explanations that demystify AI behavior, enriching user experience.

The use of storytelling in these interfaces is another strategy for further humanization of AI, bridging gaps between technology and its users, and offering clarity on the inner workings.
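As a rough sketch of what a plain-language explanation might look like, the example below assumes a very simple linear scoring model and turns its largest contribution into one sentence. The weights, threshold, feature names, and the `explain` function are all invented for illustration; real systems are usually far more complex and need dedicated explanation methods.

```python
# Hypothetical weights of a linear scoring model (not from any real system).
WEIGHTS = {"income": 0.4, "debt_ratio": -0.9, "years_employed": 0.2}
THRESHOLD = 0.0  # assumed cut-off: scores at or above it are approved


def explain(applicant: dict) -> str:
    """Return a one-sentence, plain-language explanation of the decision."""
    # Each feature's contribution is its weight times its (standardized) value.
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = sum(contributions.values())
    decision = "approved" if score >= THRESHOLD else "declined"
    # Pick the feature whose contribution has the largest absolute size.
    top = max(contributions, key=lambda name: abs(contributions[name]))
    direction = "raised" if contributions[top] > 0 else "lowered"
    return (
        f"The application was {decision}. The factor with the largest effect "
        f"was {top.replace('_', ' ')}, which {direction} the score."
    )


# Inputs are assumed to be standardized (mean 0, unit variance).
message = explain({"income": 0.5, "debt_ratio": 1.2, "years_employed": -0.3})
print(message)
```

The design choice here is to surface only the single most influential factor, on the assumption that a short sentence is easier for a non-expert to act on than a full table of contributions.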

System Testing and Evaluation

Iterative testing and evaluation remain underutilized yet powerful tools in achieving transparency. By routinely subjecting AI systems to robust testing against diverse scenarios, developers can gain better insights into system behavior and potential biases, informing necessary adjustments to improve transparency.
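One simple evaluation of this kind compares outcomes across groups of test cases. The sketch below computes an approval rate per group and the gap between the highest and lowest rate. The group labels, the sample decisions, and the helper names `approval_rates` and `rate_gap` are hypothetical, and a gap on its own does not prove bias; it only points to where a closer look is warranted.

```python
from collections import defaultdict


def approval_rates(records):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def rate_gap(rates):
    """Return the difference between the highest and lowest approval rate."""
    return max(rates.values()) - min(rates.values())


# Made-up decisions for two illustrative groups, A and B.
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(decisions)
gap = rate_gap(rates)
print(rates, gap)
```

Running the same check after every model update, and recording the result, gives reviewers a running history of how the system behaves across scenarios.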

—

Goals of AI Transparency Strategies

—

The discussed strategies for AI transparency enhancement demonstrate a commitment to making AI systems more open, reliable, and user-friendly. A transparent AI system not only supports ethical ideals but also contributes to societal acceptance and confidence in AI applications. Let’s embark on a transformational journey of AI integrity—one transparent step at a time.

Illustrating AI Transparency Strategies

These illustrative strategies offer a clear-cut roadmap for stakeholders interested in promoting AI transparency. As AI continues to evolve, adopting these measures could be the difference between systems that are merely functional and those that are trusted and embraced by users worldwide.

Remember, the journey toward transparency is more than an industry push; it is a commitment to creating systems that respect and uphold user trust, ethics, and accountability.