Hey there, fellow data enthusiasts! We all know how thrilling it can be to build a machine learning model that fits perfectly with your training data. It’s as if you’ve found the holy grail of data analysis! But then, reality strikes. You run your model on new data, and it’s a total flop. Congratulations, you’ve just met overfitting! But no worries. There are plenty of techniques to combat overfitting, and I’ll introduce some of them to keep your models sharp and savvy.

Understanding Overfitting and Its Causes

Overfitting happens when your model is too tailored to the training data, like a bespoke suit that’s great for one occasion but awkward for every other event. It memorizes the data rather than learning the underlying patterns, which leads to disappointing performance on new datasets. Imagine you’ve mastered math problems by rote without understanding the concepts—you’d tank on a test with new problems. The key is finding techniques to combat overfitting, enabling your model to generalize better.

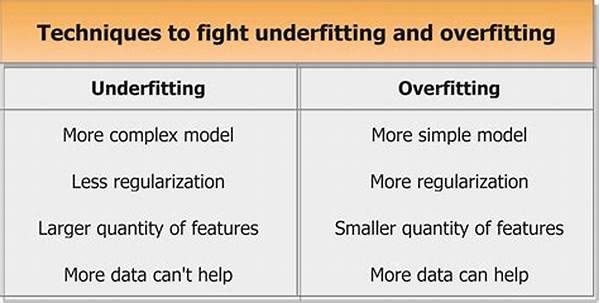

Data overfitting is often due to an overly complex model that tries to account for too many details in the training data. It might even capture noise as opposed to just the signal, leading to high variance when dealing with new data. To address this, you’ll need a toolkit of techniques to combat overfitting. This includes simplifying your models, gathering more data, or tweaking your training process. The goal is to create a flexible model that holds up and remains robust across various data scenarios.

So, what goes into crafting a versatile, overfitting-proof model? Let’s explore some techniques to combat overfitting, ensuring your machine learning adventures remain rewarding and enjoyable. With the right balance of strategies, you can unlock the power of your data and deliver models that shine even in unfamiliar terrains.

Top Techniques to Combat Overfitting

1. Simplify the Model: Complex models might seem appealing, but they often fall prey to overfitting. Simplifying the architecture is one of the primary techniques to combat overfitting. Try reducing the number of layers or units to manage complexity.

2. Gather More Data: The more data, the merrier! Collecting additional data can offer more perspectives for your model to learn from, which is one of the most straightforward techniques to combat overfitting.

3. Regularization: Adding a penalty for larger coefficients keeps your model from getting overly complex. It’s one of the essential techniques to combat overfitting, balancing model training with simplicity.

4. Use Dropout: Randomly dropping units during training can lead to more robust models. This randomness is a nifty trick and among popular techniques to combat overfitting in neural networks.

5. Cross-Validation: This technique involves slicing your data into multiple parts to better ascertain model performance across datasets, acting as a reliable technique to combat overfitting and boosting overall confidence in your model.

Regularization – Keep It Lean and Mean

Alright, let’s dive a bit deeper into regularization, which is basically the magic sprinkle every data scientist needs in their toolbox of techniques to combat overfitting. Regularization involves adding a penalty to the loss function, discouraging overly complex models that capture noise as opposed to real patterns. Think of it like cutting out all the unnecessary flourishes that could make your model too baroque!

Regularization comes mainly in two flavors: L1 and L2. L1 regularization (also known as Lasso) penalizes the absolute sum of coefficients, encouraging sparsity. It’s great when you suspect some features might be entirely irrelevant. L2 regularization (or Ridge) penalizes the square of the coefficients, shrinking them uniformly. Both bring their charm, nudging your model back to earth to avoid the overfitting abyss. When you pair these techniques to combat overfitting with others, your models become both nimble and resilient.

Data Augmentation – When More is More!

Data augmentation is another trick up our sleeve when looking at techniques to combat overfitting. Sometimes, capturing or collecting vast amounts of additional data isn’t feasible, so why not amplify the data you already have? This is especially useful in fields like image processing, where slight modifications—like rotating, flipping, or cropping images—can make a world of difference in model training.

Imagine training a model only on images of cats sitting. With data augmentation, you can introduce different versions of these images: flipped cats, rotated cats, or even zoomed-in cats! These variations allow your model to encounter a breadth of scenarios without you having to hunt down more data. By embracing data augmentation, you’re equipping your model with a dynamic learning environment, wielding techniques to combat overfitting like a pro.

Early Stopping and Beyond—A Balanced Approach

Among the techniques to combat overfitting, early stopping stands out as a fairly intuitive strategy. Essentially, it’s about not overdoing it. During your model training, you continuously monitor a validation set’s performance. Once it starts declining, you pull the plug. It’s like telling your model, “Enough, let’s stop while we’re ahead!” Models have a way of getting comfortable with training data, and early stopping keeps them on a tight schedule.

Just like a balanced diet, combating overfitting requires a mix of strategies. From model simplification to clever data tricks, employing various techniques to combat overfitting ensures no single approach bears the full weight. It’s about finding what works best in your specific context and being adaptable to shifts and changes during model development. Embrace the variety, and your models will thank you with stability and performance that’s impressively robust.

Conclusion – Mastering the Art of Generalization

So there you have it, a myriad of techniques to combat overfitting, all aimed at ensuring your models don’t trip up when facing new data. Think of overfitting as a hurdle in your data science journey—not an end. By harnessing these strategies, you transform this challenge into an opportunity to refine your models and deepen your understanding. Overfitting shouldn’t be a dead end, but a stepping stone toward better, more resilient models.

Techniques to combat overfitting are like secret ingredients that mold your models into savvy, data-agnostic tools. By executing methods like regularization, augmentation, and early stopping, your data science endeavors will be equipped against the volatility of real-world data. Embrace these techniques, keep iterating, and watch as your models grow in capability and finesse.

Happy data modeling, and may your encounters with overfitting be minimal and enlightening!