Machine learning has captivated the business and tech worlds with its promise to transform data into actionable insights. Yet, behind the curtain of every successful machine learning application is a rigorous process of model validation. Enter the unsung hero: cross-validation. This procedure is pivotal in assessing the robustness of a machine learning model’s predictive power. Welcome to our guide on effective cross-validation methods in machine learning, where precision meets opportunity.

Imagine crafting a model that seems perfect but falters when exposed to new data. That’s where effective cross-validation methods in machine learning come into play, acting as your model’s reality check. By systematically partitioning data into training and held-out portions, these methods let practitioners gauge how well their models generalize. It’s like taking your masterpiece for a test drive on different terrains before unveiling it to the world. Let’s delve deeper into this essential aspect of machine learning to ensure your models aren’t just good on familiar data, but dependable on data they have never seen.

Unlocking the Potential of Cross-Validation

Within the machine learning community, effective cross-validation methods in machine learning are celebrated for their capacity to strengthen model reliability. By splitting a dataset into multiple segments and rotating which segment is held out, developers ensure that each data point is used for training in some rounds and for testing in another. This iterative process does not improve a model by itself; it gives a more trustworthy estimate of accuracy and exposes overfitting before the model is deployed. Invest time in understanding these methods, and the returns, in the form of better and more dependable models, are worth every effort.

—

Structure for Effective Cross-Validation Insights

Exploring the landscape of effective cross-validation methods in machine learning is akin to navigating an intricately woven tapestry of analytical techniques. Each thread, significant in its role, contributes to the overall picture of model verification. With this structure, we’ll journey through the corridors of this fascinating subject.

Begin your exploration by spotlighting the inherent value these methods bring to developing dependable machine learning models. From there, dive into various validation techniques. Cross-validation isn’t a one-size-fits-all approach; it’s crucial to identify which methods suit your data and your compute budget. You could choose from methods like k-fold, stratified k-fold, and leave-one-out, each offering distinct advantages.

Proceed to unveil real-world examples, drawing connections between theoretical concepts and practical applications. Implementing effective cross-validation methods in machine learning is best understood through case studies that exemplify how they resolve common challenges like overfitting or underperformance. Share industry testimonials to enlighten readers on the profound impacts these methods yield.

Next, embark on an analytical perspective to address common pitfalls associated with these validations. Dispel the fog around misunderstandings by presenting data-fueled arguments that clarify the intricacies of each technique. Further the dialogue with interviews from seasoned professionals who have mastered the art of cross-validation.

Conclude with a visionary outlook, prompting your audience to imagine a future where machine learning models are flawless—models where effective cross-validation methods in machine learning play a pivotal role. Invite stakeholders and aspiring data scientists to partake in this journey, transforming data into reliable, actionable insights.

The Undisputed Benefits of Cross-Validation

Effective cross-validation methods in machine learning are not a passing trend; they set the standard for model evaluation. When models are subjected to these rigorous practices, the benefits are clear: more honest accuracy estimates, better-informed model selection, and the peace of mind that comes from knowing how your model is likely to behave on the unpredictability of real-world data. As timelines collapse and innovation accelerates, adopt these methods as non-negotiable practices, ensuring excellence in machine learning applications.

—

Purpose of Cross-Validation

Incorporating effective cross-validation methods in machine learning is your ticket to precision. Whether you’re a seasoned data scientist or a budding enthusiast, these methods lay the groundwork for your journey towards building robust machine learning solutions. Join us as we explore and highlight critical strategies for successful validation and evaluation of your data models.

—

Discussion on Cross-Validation Techniques

Dissecting Cross-Validation Approaches

In the dynamic realm of machine learning, the utility of effective cross-validation methods cannot be overstated. These techniques form the backbone of any credible predictive model by offering an empirical basis for validating assumptions and outcomes.

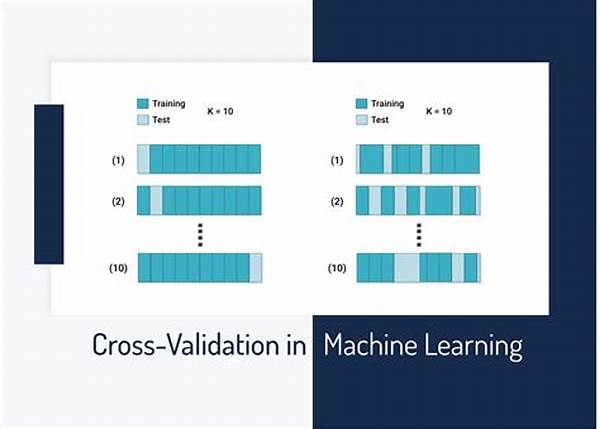

Kicking off this investigation, consider k-fold cross-validation. The dataset is divided into ‘k’ segments, or folds. The model is trained on k − 1 folds and validated on the remaining one, and the cycle repeats k times so that every fold serves as the validation set exactly once. Averaging the k validation scores gives a steadier picture of how the model behaves on unseen data than a single train/test split can.
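To make the k-fold cycle concrete, here is a minimal sketch using only the Python standard library. The helper names (`k_fold_indices`, `cross_val_mse`) and the toy "predict the training mean" model are assumptions made for illustration; they are not taken from any particular library. In practice the data would usually be shuffled before splitting.

```python
from statistics import mean

def k_fold_indices(n_samples, k):
    """Yield (train, validation) index lists for k contiguous folds."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    # The first (n_samples % k) folds get one extra item,
    # so fold sizes differ by at most one.
    base, extra = divmod(n_samples, k)
    start = 0
    for fold in range(k):
        stop = start + base + (1 if fold < extra else 0)
        validation = list(range(start, stop))
        train = list(range(0, start)) + list(range(stop, n_samples))
        yield train, validation
        start = stop

def cross_val_mse(ys, k):
    """Score a toy 'predict the training mean' model with k-fold CV."""
    fold_scores = []
    for train, validation in k_fold_indices(len(ys), k):
        prediction = mean(ys[i] for i in train)  # "fit" on k - 1 folds
        fold_scores.append(mean((ys[i] - prediction) ** 2 for i in validation))
    return mean(fold_scores)  # average of the k validation scores

ys = [2.0, 4.0, 6.0, 8.0, 10.0, 12.0]
folds = list(k_fold_indices(len(ys), 3))
score = cross_val_mse(ys, 3)
print(folds[0])  # first fold: train on indices 2..5, validate on 0 and 1
print(score)
```

Every index lands in exactly one validation fold, which is the property that separates k-fold from repeated random splits.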

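In parallel, leave-one-out cross-validation (LOOCV) serves as a rigorous test for your model’s fortitude. It is the extreme case of k-fold in which k equals the number of samples: each round holds out a single data point and trains on all the others. This is computationally demanding, since a dataset of n samples requires n separate model fits, but it ensures every data point is used once to stress-test the model, which is valuable when data is scarce.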
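A small sketch of the leave-one-out loop, again with the standard library only. The function name `loocv_mse` and the toy mean predictor are illustrative assumptions; a real model would replace the `mean(train)` step with its own fit and predict calls.

```python
from statistics import mean

def loocv_mse(ys):
    """Leave-one-out CV for a toy 'predict the training mean' model."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two samples")
    squared_errors = []
    for held_out in range(n):  # n rounds: one model fit per sample
        train = ys[:held_out] + ys[held_out + 1:]
        prediction = mean(train)
        squared_errors.append((ys[held_out] - prediction) ** 2)
    return mean(squared_errors)

ys = [1.0, 2.0, 3.0, 4.0]
loocv_score = loocv_mse(ys)
print(loocv_score)
```

The loop runs once per sample, which is why the cost of LOOCV grows in step with the size of the dataset.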

Additionally, stratified cross-validation emerges as a champion for classification problems, especially those with imbalanced classes. It builds the folds so that each one keeps roughly the same proportion of target classes as the full dataset. Without it, a fold could end up with few or no examples of a rare class, skewing both training and the resulting performance estimate.
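One simple way to achieve this, sketched below under stated assumptions, is to deal the samples of each class into folds round-robin. The function name `stratified_fold_assignments` is hypothetical, and the approach is deliberately simplified: it does not shuffle, and every class starts dealing at fold 0, so leftover samples concentrate in the early folds.

```python
from collections import Counter, defaultdict

def stratified_fold_assignments(labels, k):
    """Return a fold number (0..k-1) per sample, dealing each class round-robin."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    assignments = [None] * len(labels)
    for indices in by_class.values():
        for position, index in enumerate(indices):
            # Consecutive members of a class go to consecutive folds.
            assignments[index] = position % k
    return assignments

labels = ["neg"] * 8 + ["pos"] * 4  # 2:1 class ratio overall
assignments = stratified_fold_assignments(labels, 4)
fold_counts = [
    Counter(label for label, fold in zip(labels, assignments) if fold == f)
    for f in range(4)
]
for f, counts in enumerate(fold_counts):
    print(f, dict(counts))  # each fold keeps the 2:1 ratio
```

Each fold then serves as the validation set in turn, exactly as in plain k-fold, with the remaining folds used for training.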

Moreover, through the lens of industry case studies, we recognize these cross-validation techniques as catalysts for innovation. High-stakes sectors such as finance, healthcare, and transportation lean on these methods when deploying systems that value reliability and precision above all.

In conclusion, embrace the expansive benefits of effective cross-validation methods in machine learning to foster models that are not only smart but truly insightful. As automation and machine learning continue their transformative march, let these techniques guide your journey toward data clarity and algorithm excellence.

Illustrations on Cross-Validation Methods

For those setting foot in the domain of machine learning or those who have been navigating its complexities, embracing cross-validation is non-negotiable. These methods ensure that your creations aren’t just solutions but are the pinnacle of innovation and reliability. Engage with these strategies, and witness your models evolve from ordinary to extraordinary.

—

Short Article: The Power of Cross-Validation in Machine Learning

Relevance of Cross-Validation

Every machine learning enthusiast understands the exhilaration of creating models that predict with near-omniscience. But behind every forward-thinking algorithm is a process centered around effective cross-validation methods in machine learning—a venture into ensuring our fascinating models are in sync with reality.

Exploring Effective Methods

Dive into the landscape of cross-validation methods. K-fold cross-validation and LOOCV represent the proverbial Sword and Shield: k-fold offers efficiency, with only k model fits, while LOOCV offers granularity, testing the model on every single data point. Together they ensure you know how accurate your model’s predictions really are, not just how accurate they look on training data.

Practical Application

Real-world applications paint an encouraging picture. Industries such as finance reap immense benefits by steering clear of pitfalls, safeguarded by the diligence of cross-validation. As competition intensifies, so does the necessity for models reflective of truth over promise.

Cultivating Precision

Invest in the realm of effective cross-validation, where every fold and iteration reiterates a story of commitment to precision. These methods empower diverse sectors to make informed decisions, leaving guesswork at the door and embracing data-driven narratives.

Bridging Innovation with Assurance

In a world where machine learning advances rapidly, the tether of effective cross-validation ensures models remain grounded. As new territories are charted in artificial intelligence, let cross-validation methods guide you, ensuring the terrain isn’t just unexplored, but safe and reliable.

Embrace the undeniable benefits of effective cross-validation methods in machine learning, ensuring your models aren’t just fit for purpose but demonstrably so. Innovate with confidence, grounded in the evidence that cross-validation provides.