- Understanding Cross-Validation Techniques

- Introduction to Cross-Validation in Machine Learning Models

- The Essence of Cross-Validation

- Goals of Cross-Validation in Machine Learning Models

- Importance of Cross-Validation in Machine Learning

- Delving Deeper into Techniques

- Illustrations of Cross-Validation in Machine Learning Models

- Features and Variations

- A Deep Dive into Cross-Validation in Machine Learning Models

- Why Consistent Evaluation Matters

In the dynamic realm of machine learning, achieving accuracy and reliability in predictive modeling is paramount. One method that stands as a cornerstone of model evaluation is cross-validation. It is not just a buzzword. It is a crucial step in checking whether your model is overfitting and in estimating how well it will generalize to unseen data. Cross-validation serves as a litmus test for model performance, shedding light on how a model is likely to behave when deployed in real-world scenarios.

Imagine you’re a detective solving a mystery. You wouldn’t base your conclusion on evidence from just one angle, right? Cross-validation does the same for your machine learning models. Instead of judging a model on a single train/test split, it evaluates the model on several different splits of the data. That lets you see whether its performance holds up as the data sample changes. Sounds intriguing? Let’s delve deeper.

In data science, models are continuously trained, validated, and tweaked. Cross-validation acts like a compass during that process, steering you away from the dangerous waters of overfitting. Instead of guessing how a model will perform, you base your decisions on measured results.

How often have you trained a model, only to find it crumbles under new data? Cross-validation helps you catch this early. It reveals whether your model has merely memorized the training data or has learned patterns that carry over to data it has not seen. It’s like a safety net: it gives you an estimate of the model’s predictive power before the model is ever deployed.

Ready to move your modeling from uncertainty to clarity? Whether you’re fine-tuning a regression model or building the next breakthrough in AI, make cross-validation a routine part of your evaluation.

Understanding Cross-Validation Techniques

Cross-validation techniques come in various flavors, each suited to a different situation. k-fold cross-validation divides the data into k subsets (folds) and uses each fold once for testing. Leave-one-out cross-validation holds out a single sample at a time, which makes it attractive for small datasets. Understanding these methods can change how you approach machine learning problems. So why wait? Put cross-validation to work and see how your predictive models really perform.
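The fold mechanics described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a library API. `k_fold_indices` and `leave_one_out_indices` are hypothetical helper names. In practice, scikit-learn’s `KFold` and `LeaveOneOut` classes in `sklearn.model_selection` produce the same kind of splits.

```python
import random


def k_fold_indices(n_samples, k, shuffle=True, seed=0):
    """Split range(n_samples) into k (train_indices, test_indices) pairs."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(indices)
    # Fold sizes differ by at most one: the first n_samples % k folds get an extra sample.
    base, extra = divmod(n_samples, k)
    folds, start = [], 0
    for i in range(k):
        size = base + (1 if i < extra else 0)
        folds.append(indices[start:start + size])
        start += size
    # Each fold is the test set exactly once; all other folds form the training set.
    splits = []
    for i, test in enumerate(folds):
        train = []
        for j, fold in enumerate(folds):
            if j != i:
                train.extend(fold)
        splits.append((train, test))
    return splits


def leave_one_out_indices(n_samples):
    """Leave-one-out is k-fold with k equal to the number of samples."""
    return k_fold_indices(n_samples, k=n_samples, shuffle=False)


if __name__ == "__main__":
    for train, test in k_fold_indices(10, k=3, shuffle=False):
        print("train:", train, "test:", test)
```

Take 10 samples, k = 3, and `shuffle=False`. The test folds are `[0, 1, 2, 3]`, `[4, 5, 6]`, and `[7, 8, 9]`. When the number of samples is not divisible by k, the first folds get one extra sample. Leave-one-out is simply the case where k equals the number of samples.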

—

Introduction to Cross-Validation in Machine Learning Models

Machine learning models have become an integral part of modern technology—powering everything from recommendation systems to autonomous vehicles. However, creating a model that performs well isn’t just about feeding in data; it’s about ensuring that the model’s predictions are accurate and generalizable. This is where cross-validation in machine learning models comes into play. Let’s embark on this journey to demystify this critical concept.

The world is shifting, and data is at the heart of this transformation. As a data enthusiast, your challenge is not just to build models but to build reliable ones. Imagine assembling a car. If it works well only on a smooth road but falters on rough terrain, it’s not the masterpiece you envisioned. Cross-validation is like taking your model on a test drive across diverse landscapes.

So why is cross-validation such a buzzword among data scientists? It helps keep models from crumbling in real-world applications. Evaluating your model on data it was not trained on gives you a far more realistic picture of its performance than its score on the training set.

Rigorous evaluation techniques like cross-validation are a standard part of professional model development. They help ensure your models aren’t just sound on paper but have also been tested against data they have never seen.

If you’re keen on pushing the boundaries of what your models can achieve, put cross-validation on your to-do list. Dive in, experiment, and use what you learn to make better modeling decisions, whether you’re a novice or a seasoned data scientist.

The Essence of Cross-Validation

At its core, cross-validation checks whether your model’s performance holds up on data it has not seen. It estimates the model’s ability to predict new data, which makes overfitting visible. k-fold cross-validation, for example, divides the data into k subsets and runs k rounds of training and testing, holding out a different subset each time. Every data point is used for testing exactly once and for training k - 1 times. Averaging the k scores gives a more stable estimate than a single split. Embrace cross-validation and take your models from promising to proven.
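The sketch below walks through those rounds of training and testing end to end. It cross-validates a one-feature least-squares line and reports the mean squared error (MSE) on each held-out fold. The data, the model, and the helper names (`fit_line`, `mse`, `cross_validate`) are illustrative assumptions. Any model with a fit step and a predict step could take the line’s place.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least squares for y = a * x + b with a single feature."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    return a, mean_y - a * mean_x


def mse(a, b, xs, ys):
    """Mean squared error of the line y = a * x + b on the given points."""
    return statistics.fmean([(a * x + b - y) ** 2 for x, y in zip(xs, ys)])


def cross_validate(xs, ys, k=5, seed=0):
    """Return one held-out MSE per fold; each point is tested exactly once."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # round-robin assignment to k folds
    scores = []
    for test in folds:
        held_out = set(test)
        train = [i for i in idx if i not in held_out]
        # Train only on the other k - 1 folds, then score on the held-out fold.
        a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        scores.append(mse(a, b, [xs[i] for i in test], [ys[i] for i in test]))
    return scores


if __name__ == "__main__":
    rng = random.Random(42)
    xs = [rng.uniform(0, 10) for _ in range(50)]
    ys = [2.0 * x + 1.0 + rng.gauss(0, 1.0) for x in xs]  # synthetic, noisy data
    scores = cross_validate(xs, ys, k=5)
    print([round(s, 2) for s in scores], "mean:", round(statistics.fmean(scores), 2))
```

Averaging the per-fold scores gives the cross-validated estimate. The spread between folds is worth reporting too, since it shows how much the estimate depends on which samples were held out.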

Exploring Various Techniques

Cross-validation isn’t one-size-fits-all. The scheme you choose does not change the model itself, but it does change how trustworthy your performance estimate is. The choice between k-fold, stratified, or leave-one-out can seem daunting, but the effort pays off. The better you understand these methods, the more accurately you can judge how your models will perform.

—

Goals of Cross-Validation in Machine Learning Models

Importance of Cross-Validation in Machine Learning

Machine learning is all about prediction accuracy and reliability, and cross-validation plays a pivotal role in measuring both. It acts as a safeguard by checking whether a model performs consistently across different subsets of the data.

Picture this: you’re at a grand launch event, eager to unveil your latest machine learning model. But wait! Before going live, can your model stand up to new, unseen data? Cross-validation lets you answer that question before the launch, so the unveiling is a celebration rather than an unpleasant surprise.

Cross-validation is an indispensable part of the machine learning toolkit. It gives data scientists insight into how models are likely to perform in real-world settings. It also plays a crucial role in detecting overfitting, a common pitfall where a model excels on training data but falters on new inputs.

With cross-validation, you can be more confident that your model’s strong performance isn’t just a fluke of one lucky data split. Stakeholders will appreciate that kind of risk mitigation. When conversations shift from ‘what if’ to ‘how can we’, you know cross-validation has done its job.

Take the initiative to make cross-validation part of your standard practice. It bridges the gap between success on paper and success in production.

—

Delving Deeper into Techniques

Cross-validation offers a variety of techniques, each suited to different scenarios and datasets. The most popular is k-fold cross-validation, where the data is divided into k subsets. Each subset takes its turn as the test set while the remaining k - 1 subsets train the model. Every observation is therefore used for testing exactly once. The k scores together show how well the model performs on average and also how much that performance varies from split to split.

Choosing the Right Method

With options like stratified k-fold and leave-one-out, the world of cross-validation might seem overwhelming. But fear not! Matching the method to your dataset comes down to a few questions:

- For classification, especially with imbalanced classes, stratified k-fold keeps the class proportions in each fold close to those of the full dataset.
- For very small datasets, leave-one-out uses as much data as possible for training, at the cost of fitting the model once per sample.

Whether you’re focusing on regression or classification, choosing the right scheme gives you a more trustworthy estimate of how your model will perform.
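Here is a minimal sketch of stratified splitting. It assumes class labels are hashable values such as integers or strings. Each class’s samples are shuffled and then dealt round-robin across the folds, so every fold ends up with roughly the dataset’s class proportions. `stratified_k_fold` is a hypothetical helper; scikit-learn’s `StratifiedKFold` is the usual ready-made option.

```python
import random
from collections import defaultdict


def stratified_k_fold(labels, k, seed=0):
    """Return k test folds (lists of indices) whose class mix mirrors the full dataset."""
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    offset = 0
    for members in by_class.values():
        rng.shuffle(members)
        # Deal this class round-robin, continuing where the previous class
        # stopped so that overall fold sizes stay balanced too.
        for j, idx in enumerate(members):
            folds[(offset + j) % k].append(idx)
        offset += len(members)
    return folds


if __name__ == "__main__":
    labels = [0] * 90 + [1] * 10  # imbalanced: 10% positives
    for n, fold in enumerate(stratified_k_fold(labels, k=5), start=1):
        positives = sum(labels[i] for i in fold)
        print(f"fold {n}: {len(fold)} samples, {positives} positives")
```

Split 90 negatives and 10 positives into five folds, and every fold gets 20 samples, including exactly 2 positives. An unstratified shuffle could leave a fold with few or no positives, which would make that fold’s score unreliable.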

—

Illustrations of Cross-Validation in Machine Learning Models

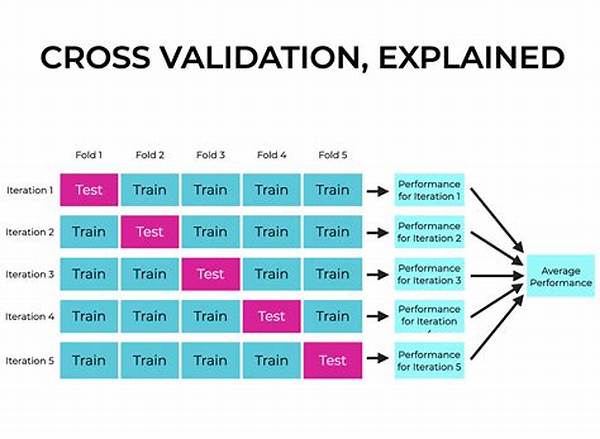

Cross-validation provides valuable insight into the performance metrics of machine learning models. Techniques like k-fold cross-validation are often visualized in diagrams. These diagrams show how the data is split in each round and how the model’s performance metrics are averaged over the rounds.

Cross-validation splits the data into distinct chunks, ranging from a simple training/test split to more sophisticated k-fold arrangements. Each arrangement lets the model be trained and validated repeatedly. That repetition highlights variability in the results and offers a clearer picture of how the model performs on unseen data. By visualizing this process, data scientists can better pinpoint weaknesses and refine model features accordingly.
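The kind of split diagram described above can be approximated in text. The hypothetical `fold_diagram` helper below is a sketch that assumes unshuffled, contiguous folds. It returns one row per round, with `V` marking the validation samples and `.` marking the training samples.

```python
def fold_diagram(n_samples, k):
    """Return one text row per fold: 'V' marks validation samples, '.' training samples."""
    base, extra = divmod(n_samples, k)
    rows, start = [], 0
    for i in range(k):
        # Contiguous folds; the first n_samples % k folds hold one extra sample.
        size = base + (1 if i < extra else 0)
        rows.append("." * start + "V" * size + "." * (n_samples - start - size))
        start += size
    return rows


if __name__ == "__main__":
    for i, row in enumerate(fold_diagram(12, 4), start=1):
        print(f"fold {i}: {row}")
```

With 12 samples and 4 folds, the block of `V`s moves three positions to the right in each row, forming a diagonal band. Every sample is validated in exactly one round.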

Every machine learning endeavor is unique, with its own quirks and challenges, and that’s where cross-validation’s versatility shines. With a suite of techniques and ways to visualize them, you can tailor your evaluation to the structure of your dataset.

Imagine sitting at a drafting table, sketching the future of your models. Cross-validation diagrams give you more than just numbers. They show where performance is stable, where it varies, and where to look next. Visualizing the results this way makes the modeling process more intuitive and less arduous.

Features and Variations

Machine learning isn’t static; it’s evolving. Cross-validation goes beyond a single division of the data. Variations such as k-fold, stratified, and leave-one-out let you adapt the evaluation to your data. The resulting scores give stakeholders and developers a realistic basis for planning.

—

A Deep Dive into Cross-Validation in Machine Learning Models

Understanding a model’s underlying mechanisms is akin to peeling an onion: you uncover layers of complexity, insights, and potential pitfalls. Cross-validation breaks this complexity into manageable pieces by giving you a systematic way to assess a model.

Every choice made in model development affects the model’s performance and reliability. Cross-validation acts like a magnifying glass, letting us observe the effect of these choices and act on them. It grounds adventurous data explorations in reality, leading to sturdier models and more credible insights.

It’s common for machine learning enthusiasts to feel overwhelmed by the breadth of techniques and methods at their disposal. Cross-validation cuts through this by showing, for each option, how well the model performs and how much that performance varies.

Cross-validation also does more than scratch the surface of model refinement. Comparing training scores with cross-validated scores, and comparing candidate settings against each other, helps reveal whether a model underfits (high bias) or overfits (high variance). You can then keep refining until you arrive at a model that holds up in changing environments.

Hesitation gives way to clarity when cross-validation is part of your machine learning strategy. Adopt it routinely for insightful, dependable, and actionable results, whether you’re building complex AI systems or running everyday analytics.
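To make that comparison concrete, the sketch below evaluates several settings of one modeling choice: the number of neighbors in a 1-D k-nearest-neighbors classifier. It scores each setting by both training accuracy and cross-validated accuracy on a small synthetic dataset with deliberately flipped labels. The classifier, the data, and the helper names are illustrative assumptions. With one neighbor the model memorizes the training set. Its training accuracy is therefore perfect while its cross-validated accuracy is not, and that gap is the signature of overfitting.

```python
import random
from collections import Counter


def knn_predict(train_x, train_y, x, n_neighbors):
    """Majority vote among the n_neighbors training points closest to x (1-D inputs)."""
    order = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    votes = Counter(train_y[i] for i in order[:n_neighbors])
    return votes.most_common(1)[0][0]


def accuracy(train_x, train_y, test_x, test_y, n_neighbors):
    """Fraction of test points whose predicted label matches the true label."""
    hits = sum(knn_predict(train_x, train_y, x, n_neighbors) == y
               for x, y in zip(test_x, test_y))
    return hits / len(test_x)


def cv_accuracy(xs, ys, n_neighbors, k=5, seed=0):
    """Mean held-out accuracy over k folds; the fixed seed gives every candidate the same folds."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    scores = []
    for test in folds:
        held_out = set(test)
        train = [i for i in idx if i not in held_out]
        scores.append(accuracy([xs[i] for i in train], [ys[i] for i in train],
                               [xs[i] for i in test], [ys[i] for i in test], n_neighbors))
    return sum(scores) / k


def compare_settings(xs, ys, candidates, k=5):
    """Return {n_neighbors: (training accuracy, cross-validated accuracy)} and the best setting."""
    results = {c: (accuracy(xs, ys, xs, ys, c), cv_accuracy(xs, ys, c, k=k)) for c in candidates}
    best = max(results, key=lambda c: results[c][1])
    return results, best


if __name__ == "__main__":
    xs = list(range(40))
    ys = [int(x >= 20) for x in xs]
    for i in range(0, 40, 7):  # flip every 7th label to simulate label noise
        ys[i] = 1 - ys[i]
    results, best = compare_settings(xs, ys, candidates=[1, 3, 5, 7])
    for c, (train_acc, cv_acc) in results.items():
        print(f"n_neighbors={c}: train={train_acc:.2f}  cv={cv_acc:.2f}")
    print("selected:", best)
```

Every candidate is scored on the same folds (fixed `seed`), so the comparison is fair. The winning setting’s cross-validated score is slightly optimistic, however, because the same folds were used to pick it. Nested cross-validation is the standard remedy when an unbiased estimate is needed.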

Why Consistent Evaluation Matters

Consistent evaluation through cross-validation builds a reliable framework that instills confidence in predictive models. Each score comes from data held out during training, so stakeholders get a realistic snapshot of how the model is likely to perform on new data. What’s promised is then more likely to match real-world results. This alignment reduces risk, increases confidence at deployment, and helps avoid a loss of trust or credibility.

The Impact of Data Variability

Data variability is inherent and inevitable, yet it doesn’t have to be daunting. Cross-validation turns variability into a source of information rather than an obstacle. The spread of scores across folds shows how sensitive your model is to which samples it sees, and that knowledge supports better-informed decisions.
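One way to measure that spread is repeated k-fold. You run k-fold several times with a different shuffle each time, then summarize all the fold scores by their mean and standard deviation. The sketch below assumes a deliberately trivial baseline model that predicts the training-fold mean, so the focus stays on the resampling. The helper names are hypothetical, and scikit-learn offers `RepeatedKFold` for real use.

```python
import random
import statistics


def kfold_mse_mean_model(ys, k, seed):
    """k-fold MSE of a baseline that always predicts the training-fold mean."""
    idx = list(range(len(ys)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    fold_scores = []
    for test in folds:
        held_out = set(test)
        train_mean = statistics.fmean([ys[i] for i in idx if i not in held_out])
        fold_scores.append(statistics.fmean([(ys[i] - train_mean) ** 2 for i in test]))
    return fold_scores


def repeated_k_fold(ys, k=5, repeats=10):
    """Re-run k-fold with a different shuffle per repeat; pool all fold scores."""
    scores = [s for r in range(repeats) for s in kfold_mse_mean_model(ys, k, seed=r)]
    return statistics.fmean(scores), statistics.stdev(scores)


if __name__ == "__main__":
    rng = random.Random(0)
    ys = [rng.gauss(10.0, 2.0) for _ in range(30)]  # synthetic target values
    mean_mse, std_mse = repeated_k_fold(ys, k=5, repeats=10)
    print(f"MSE across folds and repeats: {mean_mse:.2f} +/- {std_mse:.2f}")
```

A large standard deviation relative to the mean signals that the model’s measured performance depends heavily on which samples it happens to see.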

By adopting and adapting cross-validation techniques, you help your machine learning models not just promise but deliver. That puts the data-driven decisions that define success in a changing technological landscape on firmer ground.