In the fascinating world of neural networks, understanding the mechanics behind these complex systems is crucial for anyone venturing into machine learning. One of the pivotal components behind the success of neural networks is the activation function applied in each layer. Imagine a stage where actors (your data) need direction, and the activation function is the director telling them how to perform. Without this guidance, the performance would be lifeless and unorganized. Through this article, we’ll unravel the thread tying each layer together to create a masterpiece. Seamlessly blending humor, marketing insights, and technical knowledge, we’ll explore how these functions breathe life into data.

To kick things off, let’s delve into what activation functions truly are. Picture your brain processing information; it doesn’t react the same way to every input. Similarly, the activation functions in a network’s layers determine how each layer transforms the inputs it receives. They introduce non-linearity, which is what lets the network learn complex relationships from data and make sophisticated predictions: without it, any stack of layers would collapse into a single linear transformation, no matter how deep. Whether you’re a seasoned developer or a curious newbie, choosing and applying these functions correctly propels your projects to new heights.
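Why non-linearity matters can be checked with a tiny scalar example. Without an activation, two stacked linear layers reduce to a single linear layer, because w2·(w1·x + b1) + b2 = (w2·w1)·x + (w2·b1 + b2), so adding depth adds no expressive power. The sketch below uses arbitrary, illustrative weights (not taken from any real model) and compares that collapsed layer with a version that applies ReLU between the two layers.

```python
# Minimal sketch with arbitrary illustrative weights: stacking linear layers
# without an activation gives another linear function; ReLU breaks that.

def linear(w, b):
    """Return a 1-D linear layer: x -> w * x + b."""
    return lambda x: w * x + b

def relu(x):
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)

w1, b1 = 2.0, -1.0   # first layer
w2, b2 = -3.0, 0.5   # second layer
layer1 = linear(w1, b1)
layer2 = linear(w2, b2)

def stacked_linear(x):
    # No activation between the layers.
    return layer2(layer1(x))

def stacked_relu(x):
    # ReLU applied to the first layer's output.
    return layer2(relu(layer1(x)))

# The single linear layer that the two linear layers collapse into.
collapsed = linear(w2 * w1, w2 * b1 + b2)

for x in [-2.0, 0.0, 1.0, 3.0]:
    print(f"x={x:+.1f}  linear stack={stacked_linear(x):+.2f}  "
          f"collapsed={collapsed(x):+.2f}  with ReLU={stacked_relu(x):+.2f}")
```

The first two columns always agree. The ReLU column stays flat at 0.5 wherever the first layer’s output is non-positive (x ≤ 0.5) and falls with slope -6 above that point, a bend that no single linear layer can reproduce.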

A diverse range of activation functions exists, each suited to specific tasks. From the famous ReLU (Rectified Linear Unit) to the classic Sigmoid, they all have their unique charm and purpose. The allure of activation functions in layers is their ability to help a network turn mundane datasets into valuable insights. Let’s take a humorous angle: choosing the wrong activation function is like trying to play a subtle jazz piece with a heavy metal guitar; your data’s potential becomes limited.

The Science Behind Activation Functions

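The mathematics behind activation functions is nothing short of fascinating. These functions are the unsung heroes that determine how well a neural network can learn. Their form also governs how gradients flow backward during training: bounded functions such as sigmoid squash outputs into a fixed range, but their gradients shrink toward zero for large inputs (the vanishing gradient problem), whereas ReLU passes gradients through unchanged for positive inputs. The choice of activation does not by itself prevent exploding gradients, which are typically handled by other means such as gradient clipping. Activation functions in layers act as the crucial bridge between input and output, shaping how information flows through the network.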
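How an activation affects learning can be sketched by comparing derivatives. The sigmoid derivative never exceeds 0.25 and approaches zero for large inputs, while ReLU’s derivative is 1 for any positive input. Backpropagation multiplies such local derivatives across layers, so this sketch prints the best-case sigmoid factor after ten layers. It is a deliberate simplification: it ignores the weights, which also enter the product, and it treats ReLU’s derivative at exactly 0 as 0, which is a convention rather than a mathematical fact.

```python
import math

def sigmoid(x):
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def sigmoid_grad(x):
    # sigmoid'(x) = sigmoid(x) * (1 - sigmoid(x)); its maximum is 0.25 at x = 0.
    s = sigmoid(x)
    return s * (1.0 - s)

def relu_grad(x):
    # Derivative of max(0, x): 1 for positive inputs, 0 otherwise
    # (the value at exactly 0 is a convention; 0 is used here).
    return 1.0 if x > 0 else 0.0

for x in [0.0, 2.0, 5.0, 10.0]:
    print(f"x={x:4.1f}  sigmoid'={sigmoid_grad(x):.6f}  relu'={relu_grad(x):.1f}")

# Backpropagation multiplies local derivatives layer by layer (weights ignored
# here). Even in the sigmoid's best case, ten layers shrink the gradient a lot.
depth = 10
print("sigmoid, best case:", sigmoid_grad(0.0) ** depth)
print("relu, active units:", relu_grad(1.0) ** depth)
```

Since 0.25 ** 10 is roughly 9.5e-7, in this simplified picture the early layers of a deep sigmoid network receive almost no learning signal, whereas active ReLU units pass the gradient along at full strength.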

The quest to master neural networks often feels like an epic journey through a land filled with both science and art. At the core of this journey lies a solid understanding of activation functions in layers. As heroes of modern computing, these functions are what let models capture patterns that a purely linear model cannot. It might sound complex at first, but imagine activation functions as the magical spells that transform ordinary numbers into powerful predictors.

Why Are Activation Functions Important?

Activation functions play a critical role in determining the network’s output. They control how strongly each neuron responds: ReLU, for instance, outputs exactly zero for any non-positive input, so the neuron stays silent, while sigmoid produces a graded response between 0 and 1. Here’s an intriguing thought: think of neurons as social media posts. Without engaging activation, they remain unseen, failing to impact the network or the intended audience. This analogy captures the essence of what happens when activation functions in layers are overlooked.
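The “activated or silent” idea is most literal for ReLU, which outputs exactly zero whenever a neuron’s pre-activation (its weighted sum plus bias) is zero or negative. Sigmoid, by contrast, always returns a value strictly between 0 and 1 for inputs like these, so its neurons respond in degrees rather than switching off. A small sketch with hypothetical pre-activation values for one five-neuron layer:

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

# Hypothetical pre-activations (weighted sum plus bias) for five neurons in one layer.
pre_activations = [-2.0, -0.3, 0.0, 0.7, 1.5]

relu_out = [relu(z) for z in pre_activations]
sigmoid_out = [sigmoid(z) for z in pre_activations]

# Under ReLU, a neuron is "silent" when its output is exactly zero.
silent = [i for i, a in enumerate(relu_out) if a == 0.0]

print("ReLU outputs:   ", relu_out)
print("silent neurons: ", silent)
print("sigmoid outputs:", [round(a, 3) for a in sigmoid_out])
```

Here the first three neurons are silent under ReLU, while every sigmoid neuron still emits a non-zero value.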

Exploring Different Types of Activation Functions

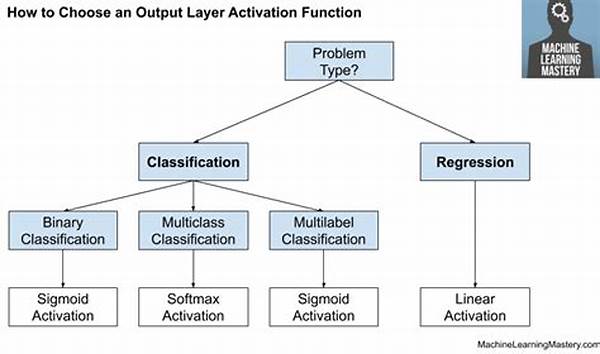

There’s an eclectic mix of activation functions, each suited to specific situations. ReLU, defined as max(0, x), is a popular choice for hidden layers because it is cheap to compute: a single comparison rather than an exponential. However, one size doesn’t fit all. Sigmoid squashes any input into the range between 0 and 1, which makes it a natural fit for output layers that must produce a probability, as in binary classification, and underscores the importance of choosing wisely. Think of buying a pair of shoes: selecting the right fit requires understanding your journey’s demands.
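To make the output-range point concrete, here is a minimal sketch of reading a sigmoid output as a probability for binary classification. The helper `predict_binary` and its default threshold of 0.5 are illustrative assumptions, not a fixed rule. The sigmoid is written in a numerically stable form so that large negative inputs do not overflow `math.exp`.

```python
import math

def sigmoid(z):
    """Map any real z into (0, 1); extreme inputs round to 0.0 or 1.0 in floating point."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # safe: z < 0, so math.exp(z) cannot overflow
    return e / (1.0 + e)

def predict_binary(logit, threshold=0.5):
    """Hypothetical helper: read sigmoid(logit) as P(class 1) and apply a threshold."""
    p = sigmoid(logit)
    return p, int(p >= threshold)

for logit in [-3.0, 0.0, 2.5]:
    p, label = predict_binary(logit)
    print(f"logit={logit:+.1f}  p={p:.3f}  label={label}")
```

A threshold other than 0.5 may suit tasks where false positives and false negatives carry different costs.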

Ultimately, mastering activation functions in layers empowers developers to harness the full potential of neural networks. This knowledge turns the mundane into the brilliant, fueling the passion of those crafting machine learning masterpieces.

Eight Key Actions Related to Activation Functions in Layers

Embarking on the neural network journey is like setting sail on uncharted waters. To navigate successfully, understanding the architecture of neural networks is imperative. The activation functions in layers act as the wind that propels your ship forward, guiding you to the treasure of accurate data prediction.

In the realm of machine learning, layers serve various purposes: earlier layers tend to capture simple patterns, while later layers combine them and refine the final prediction. The magic happens as activation functions breathe life into each layer, transforming raw data into actionable insights. With the right application, these functions empower data enthusiasts to uncover hidden patterns, much like decoding a secret map.

To unravel this enigma, imagine each layer as a puzzle piece. Activation functions play a dual role, linking pieces while adding color and depth to the overall picture. The result is a cohesive and dynamic ensemble capable of producing life-like simulations and predictions.

The power of activation functions in layers lies in their ability to orchestrate data processing seamlessly. By mastering this crucial component, developers can create sophisticated models that adapt and evolve with the ever-changing landscape of data science. With the right blend of passion, creativity, and technical know-how, the future of machine learning promises to be an exhilarating adventure.

How Activation Functions Work

Understanding the operational mechanics of activation functions can feel like solving a riddle, yet the core idea is simple. Each neuron computes a weighted sum of its inputs plus a bias, and the activation function converts that sum into the neuron’s output, which is either passed to the next layer or, in the final layer, used as the network’s output. They are both the key and the lock, simultaneously protecting and revealing the mysteries of data science magic.
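The flow from weighted sum to activation to the next layer can be sketched as a forward pass through a tiny fully connected network. This is a minimal, illustrative sketch: the `dense` helper, the layer sizes, and all weights and biases are hypothetical and hand-picked rather than learned. The hidden layer uses ReLU and hands its outputs to the next layer; the output layer uses sigmoid, and its result is the network’s output.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    # Numerically stable form: avoids overflow in math.exp for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: for each neuron, activation(w . x + b)."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b  # weighted sum plus bias
        outputs.append(activation(z))
    return outputs

# Illustrative, hand-picked parameters: 2 inputs -> 3 hidden neurons -> 1 output.
W1 = [[0.5, -1.0], [1.5, 0.2], [-0.7, 0.9]]
b1 = [0.1, -0.4, 0.0]
W2 = [[1.0, -0.5, 0.8]]
b2 = [-0.2]

x = [1.0, 2.0]
hidden = dense(x, W1, b1, relu)          # passed on to the next layer
output = dense(hidden, W2, b2, sigmoid)  # used as the network's final output
print("hidden:", hidden)
print("output:", output)
```

With these numbers the first hidden neuron’s pre-activation is negative, so ReLU silences it and only the other two neurons influence the output.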

Enhancing Model Performance with Activation Functions

Model performance is the gold standard in the kingdom of machine learning. Applying the right activation function in each layer can substantially improve it: ReLU in hidden layers often lets deep networks train faster than sigmoid, and the output layer’s activation has to match the task, such as sigmoid for a single yes/no probability. This is where science meets art, as selecting the appropriate function requires both analytical skill and creative insight. Embrace this dual challenge to become a master of machine learning.
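The output layer is one place where the activation choice follows directly from the task. As an illustration (multi-class classification is an assumption here, not a case the text walks through), softmax converts a vector of raw scores into probabilities that are positive and sum to 1. Subtracting the largest score before exponentiating avoids overflow without changing the result. The scores below are hypothetical.

```python
import math

def softmax(logits):
    """Turn raw scores into probabilities that are positive and sum to 1."""
    m = max(logits)  # subtract the max for numerical stability; result unchanged
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores from an output layer with three classes.
logits = [2.0, 1.0, -1.0]
probs = softmax(logits)
print([round(p, 3) for p in probs])
print("predicted class:", probs.index(max(probs)))
```

Softmax preserves the ranking of the raw scores, so the predicted class matches the largest score; its value lies in producing a proper probability distribution over the classes.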

Eight Tips for Optimizing Activation Functions in Layers

Understanding activation functions in layers is akin to mastering the art of mixing colors. Each function has its unique signature that contributes to the network’s overall palette. Depending on your choices, the computational landscape brightens or dulls, guiding you toward a masterpiece or off into the abstract.

Crafting a well-functioning neural network is part science, part artistry. It’s about choosing the right set of tools, embarking on creative experiments, and ultimately producing something profoundly impactful. Activation functions hold a dear place in this creative process, much like an artist’s brushstroke that transforms a blank canvas into a lively expression of intellect.

As you embark on this journey, remember that each layer is a potential masterpiece waiting to be painted with the right functions. Encourage open dialogue within the machine learning community to share these experiments, inspiring innovation and discovery.

The symphony of neural networks wouldn’t be complete without the melody provided by activation functions in layers. Each function is like a note, contributing to a larger, harmonious composition that breathes life into machines, allowing them to perceive, analyze, and predict with unprecedented clarity.

Through a vivid narrative, one can appreciate the magical transformation that occurs as mundane data points evolve into sophisticated interpretations, much like a caterpillar metamorphosing into a butterfly. This transformation plays a pivotal role in shaping technology’s future, promising a horizon filled with infinite possibilities and innovations.

The Role of Activation Functions in Layer Dynamics

Activation functions are the silent conductors in a grand orchestra of computations, shaping how each neuron responds. They decide which neurons should “fire” and which should rest, akin to choosing which instruments should play. Any misstep can cause dissonance, highlighting their indispensable role in this ensemble.

Fine-Tuning Neural Networks

One of the most engaging challenges is fine-tuning neural networks to perform optimally. Just as a chef delicately balances flavors, data scientists must choose activation functions layer by layer, often comparing alternatives experimentally, to achieve the ideal model performance. This balancing act reveals the beauty and potential nestled within the data, inviting you to explore and innovate.

By unlocking the power of activation functions, you lay the groundwork for groundbreaking advancements in machine learning, transforming challenges into avenues for discovery. Let this knowledge be both your canvas and compass as you navigate and sculpt the ever-evolving landscape of artificial intelligence.

Armed with this enriched understanding, you’re now equipped to venture deeper into the world of machine learning, ready to witness the profound impact these functions have on the wondrous symphony of neural networks.