Hey there, fellow data enthusiasts! Today, we’re diving into the world of machine learning to meet two notorious villains: overfitting and underfitting. Whether you’re a data science newbie or a seasoned veteran, these tricky problems can sneak up on you faster than a bug in your code! So, buckle up as we uncover what they are, why they matter, and how to tackle them.

Understanding Overfitting and Underfitting

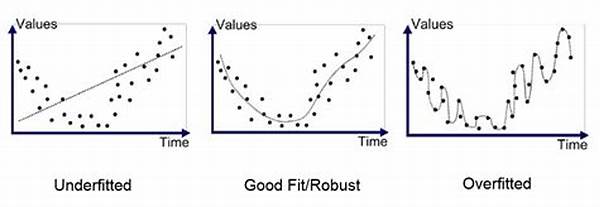

Alright, let’s kick things off by getting to know our two troublemakers: overfitting and underfitting. Think of overfitting as a friend who prepares for a party by memorizing every detail about last year’s guests, then freezes when new people walk in. In machine learning terms, an overfitted model learns the training data too well, capturing the noise along with the signal. It looks great on the training set but struggles with new, unseen data.

On the other hand, underfitting is like the friend who shows up to the party without even knowing whose birthday it is. Here the model is too simple to capture the underlying patterns in your data, so it performs poorly on both the training and the test sets.

So, why do overfitting and underfitting even happen? Well, it’s all about balance. If your model is too complex, with too many parameters relative to the amount of training data, it can tailor itself to the specific quirks of your training set, random noise included. That is overfitting. Conversely, if your model is too simplistic, it may miss crucial patterns and correlations, resulting in underfitting. The sweet spot is a model that’s just right: one that generalizes well to new data.
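To see this balance in action, here is a minimal sketch in plain Python. It rests on three assumptions, none of which come from this post:

- the dataset is made up (noisy samples of a sine wave);
- the model is k-nearest-neighbours regression;
- the number of neighbours `k` serves as the complexity knob.

A small `k` gives a wiggly, highly flexible model. A `k` equal to the whole training set predicts the same average everywhere.

```python
import math
import random


def make_data(n, seed):
    """Noisy samples of y = sin(x) on [0, 2*pi] (a made-up toy dataset)."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 2 * math.pi) for _ in range(n)]
    ys = [math.sin(x) + rng.gauss(0, 0.3) for x in xs]
    return xs, ys


def knn_predict(train_x, train_y, x, k):
    """Average the targets of the k training points closest to x."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return sum(train_y[i] for i in nearest) / k


def mse(train_x, train_y, xs, ys, k):
    """Mean squared error of k-NN predictions (fitted on train_*) over (xs, ys)."""
    errors = [(knn_predict(train_x, train_y, x, k) - y) ** 2 for x, y in zip(xs, ys)]
    return sum(errors) / len(errors)


train_x, train_y = make_data(40, seed=0)
test_x, test_y = make_data(200, seed=1)

# k=1: very flexible (memorizes); k=40: uses every training point (very simple).
for k in (1, 7, 40):
    train_err = mse(train_x, train_y, train_x, train_y, k)
    test_err = mse(train_x, train_y, test_x, test_y, k)
    print(f"k={k:2d}  train MSE={train_err:.3f}  test MSE={test_err:.3f}")
```

With `k=1`, every training point is its own nearest neighbour. Training error is therefore exactly zero (pure memorization), while test error is not. With `k=40`, the model ignores `x` entirely and underfits. An intermediate `k` typically lands closer to the sweet spot, though the exact numbers depend on the random seeds.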

Signs of Overfitting and Underfitting

1. Model Complexity: If your model resembles a complicated labyrinth, with many parameters relative to the number of training examples (say, a very deep decision tree), overfitting may be lurking. Simplifying the model could help.

2. Performance Discrepancy: Notice your model performing brilliantly on training data but flopping with test data? Classic overfitting symptom right there!

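3. Bias and Variance: High bias (systematic error from overly simple assumptions) leads to underfitting. High variance (sensitivity to the particular training sample) leads to overfitting. Reducing one often increases the other, so aim for the trade-off point where total error on unseen data is lowest.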

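4. Validation Curve: Use this handy tool to plot training and validation scores against a complexity setting (tree depth, polynomial degree, regularization strength). If both scores are poor, you’re underfitting. If the training score keeps improving while the validation score stalls or gets worse, you’re overfitting.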

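5. Additional Data: Struggling with overfitting? Gathering more training data often helps, because noise is harder to memorize when there’s more signal. Struggling with underfitting? More data alone rarely fixes it; richer features or a more flexible model usually do.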
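Most of the signs above come down to comparing training error with validation error. The helper below turns that comparison into a rough rule of thumb. It is a hypothetical sketch, not a standard function: the name `diagnose` and both thresholds (`acceptable_error` and `max_gap`) are assumptions you would choose for your own problem, for example from a simple baseline model.

```python
def diagnose(train_error, val_error, acceptable_error, max_gap):
    """Rough rule of thumb on error metrics (lower is better).

    acceptable_error and max_gap are hypothetical, problem-specific thresholds.
    """
    if train_error > acceptable_error:
        # Poor even on data the model has already seen: too simple (high bias).
        return "underfitting"
    if val_error - train_error > max_gap:
        # Good on training data, much worse on unseen data (high variance).
        return "overfitting"
    return "reasonable fit"


print(diagnose(0.02, 0.35, acceptable_error=0.1, max_gap=0.05))  # → overfitting
print(diagnose(0.40, 0.42, acceptable_error=0.1, max_gap=0.05))  # → underfitting
print(diagnose(0.08, 0.10, acceptable_error=0.1, max_gap=0.05))  # → reasonable fit
```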

Tackling Overfitting and Underfitting Issues

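Now that we’ve identified what overfitting and underfitting look like, let’s chat about some strategies to tackle them. One way to combat overfitting is regularization. L1 (lasso) and L2 (ridge) regularization add a penalty on the size of the model’s coefficients to the loss, which encourages a simpler model; L1 can even shrink some coefficients to exactly zero. Another tool is cross-validation. You split your data into several folds, train on all but one, validate on the held-out fold, and rotate until every fold has been held out once. Strictly speaking, cross-validation doesn’t prevent overfitting on its own. Instead, it gives a more reliable performance estimate, so you can spot overfitting and tune settings such as the regularization strength.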
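To make the penalty idea concrete, here is a minimal sketch of the simplest possible case: one feature, no intercept, squared-error loss. That restriction and the toy numbers are assumptions chosen so the closed-form solutions stay short. Real models usually have many features and an intercept, which calls for matrix algebra or an iterative solver.

In this setting:

- Ridge (L2) minimizes sum((y - w*x)^2) + lambda*w^2, giving w = sum(x*y) / (sum(x^2) + lambda).
- Lasso (L1) minimizes sum((y - w*x)^2) + lambda*|w|, which soft-thresholds the least-squares solution.

```python
def _sums(xs, ys):
    """Return (sum of x*y, sum of x*x), all a one-feature, no-intercept fit needs."""
    return sum(x * y for x, y in zip(xs, ys)), sum(x * x for x in xs)


def ols_weight(xs, ys):
    """Unpenalized least squares: minimize sum((y - w*x)**2)."""
    sxy, sxx = _sums(xs, ys)
    return sxy / sxx


def ridge_weight(xs, ys, lam):
    """L2 penalty: minimize sum((y - w*x)**2) + lam * w**2."""
    sxy, sxx = _sums(xs, ys)
    return sxy / (sxx + lam)


def lasso_weight(xs, ys, lam):
    """L1 penalty: minimize sum((y - w*x)**2) + lam * abs(w) via soft-thresholding."""
    sxy, sxx = _sums(xs, ys)
    if sxy > lam / 2:
        return (sxy - lam / 2) / sxx
    if sxy < -lam / 2:
        return (sxy + lam / 2) / sxx
    return 0.0  # the penalty outweighs the fit, so the coefficient is switched off


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]  # toy data, roughly y = 2x

print(f"OLS: w = {ols_weight(xs, ys):.3f}")
for lam in (10, 100, 200):
    print(f"lambda={lam:3d}  ridge w = {ridge_weight(xs, ys, lam):.3f}"
          f"  lasso w = {lasso_weight(xs, ys, lam):.3f}")
```

As lambda grows, ridge shrinks `w` smoothly toward zero but never reaches it. Lasso, by contrast, returns exactly 0 once lambda/2 reaches |sum(x*y)|, which is why L1 is often described as doing feature selection.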

In the realm of underfitting, sometimes the solution is as straightforward as going back to the drawing board. You might add more informative features, pick an algorithm flexible enough to capture your dataset’s complexity, or ease off on regularization if you applied too much. Remember, achieving the right mix might require a bit of trial and error. Keep experimenting, adjust hyperparameters, and stay curious!
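Here is what “adding more features” can look like in practice. This minimal sketch assumes a tiny made-up dataset in which y is exactly x squared. It fits an ordinary least-squares line (one feature plus an intercept) twice: first on `x` itself, then on the engineered feature `x**2`. The helper names `fit_line` and `mse` are hypothetical.

```python
def fit_line(feature, ys):
    """Ordinary least squares for y = intercept + slope * feature."""
    n = len(ys)
    mf, my = sum(feature) / n, sum(ys) / n
    slope = (sum((f - mf) * (y - my) for f, y in zip(feature, ys))
             / sum((f - mf) ** 2 for f in feature))
    return slope, my - slope * mf


def mse(feature, ys, slope, intercept):
    """Mean squared error of the fitted line on (feature, ys)."""
    return sum((intercept + slope * f - y) ** 2 for f, y in zip(feature, ys)) / len(ys)


xs = [-3, -2, -1, 0, 1, 2, 3]
ys = [x ** 2 for x in xs]  # a curved (quadratic) relationship

# Too simple: a straight line in x cannot bend to follow the curve.
s, b = fit_line(xs, ys)
print("feature x   :", mse(xs, ys, s, b))  # → 12.0

# Richer feature: x squared lets the same linear model capture the curve.
xs_sq = [x ** 2 for x in xs]
s, b = fit_line(xs_sq, ys)
print("feature x**2:", mse(xs_sq, ys, s, b))  # → 0.0
```

The algorithm and the data are the same in both fits; only the feature changed. The line in `x` ends up with slope 0 and predicts the average (4.0) everywhere, which is classic underfitting. The squared feature lets it follow the curve exactly.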

Real-life Applications of Overfitting and Underfitting

1. E-commerce: A recommendation algorithm that overfits can latch onto quirks in a shopper’s history and push oddly specific or irrelevant products, while one that underfits may suggest generic items no one wants.

2. Healthcare: Diagnostic models need to avoid overfitting their training data so they generalize well and make accurate predictions for new patients.

3. Finance: Stock price prediction models can be misleading if they overfit, potentially leading to costly investment decisions.

4. Voice Recognition: Systems that underfit might fail to capture variations in accents or tones, decreasing efficiency and accuracy.

5. Weather Forecasting: Predictive models must balance complexity to avoid overfitting on historical data, ensuring reliable next-day forecasts.

6. Social Media: Content algorithms need to avoid overfitting so they serve a diverse range of content that still meets user preferences.

7. Video Streaming: Recommenders should suggest the right shows without overfitting to a viewer’s past history, for the best user experience.

8. Gaming AI: Bot players should neither overfit to predictable strategies nor underfit, maintaining challenging gameplay.

9. Manufacturing: Predictive maintenance models should avoid overfitting historical failure patterns to prevent unnecessary interventions.

10. Robotics: Navigation systems must adapt to varied environments without overfitting to the specific terrain they were trained on.

Tips for Balancing Overfitting and Underfitting

Striking the right balance between overfitting and underfitting issues is the name of the game. First, get cozy with your data—it’s always about understanding those quirks and patterns. Often, less is more. Sometimes simplifying your model can unexpectedly lead to better performance, so don’t hesitate to experiment with fewer parameters or simpler algorithms.

Cross-validation is your best pal in maintaining this balance. Comparing scores across folds shows how well your model generalizes and how much that estimate varies from one data split to another. If you find yourself underfitting, consider expanding your feature set or choosing a more expressive model that can capture the complexity.
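Here is a minimal k-fold cross-validation loop in plain Python. It assumes a simple least-squares line as the model and a made-up noisy linear dataset; both are hypothetical choices for illustration. Each fold takes a turn as the validation set. The spread of the per-fold scores is part of the insight: a large spread suggests the estimate depends heavily on which data the model happened to see.

```python
import random
import statistics


def k_fold_splits(n, k, seed=0):
    """Yield (train_indices, validation_indices) for k shuffled folds."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, folds[i]


def fit_line(xs, ys):
    """Least-squares slope and intercept for y = intercept + slope * x."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return slope, my - slope * mx


def cross_val_mse(xs, ys, k=5):
    """Fit on k-1 folds, score MSE on the held-out fold, repeat for every fold."""
    scores = []
    for train, val in k_fold_splits(len(xs), k):
        slope, intercept = fit_line([xs[i] for i in train], [ys[i] for i in train])
        errors = [(intercept + slope * xs[i] - ys[i]) ** 2 for i in val]
        scores.append(sum(errors) / len(errors))
    return scores


rng = random.Random(42)
xs = [rng.uniform(0, 10) for _ in range(50)]
ys = [3 * x + 1 + rng.gauss(0, 2) for x in xs]  # toy linear data with noise

scores = cross_val_mse(xs, ys, k=5)
print("MSE per fold:", [round(s, 2) for s in scores])
print(f"mean={statistics.mean(scores):.2f}  stdev={statistics.stdev(scores):.2f}")
```

In practice, you would run the same loop for each candidate model or hyperparameter value and keep the one with the best average validation score. Libraries such as scikit-learn package this up, but the mechanics are the same.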

Reflecting on Overfitting and Underfitting

Overfitting and underfitting issues aren’t just buzzwords but genuine challenges in the data science realm. Every time they rear their heads, they remind us of the importance of balance and adaptability. The very act of curating a model involves a bit of science, art, and intuition. The secret sauce lies in remaining open to exploration, regularly validating our models, and never losing sight of our model’s ultimate purpose.

In the end, both overfitting and underfitting issues teach us an invaluable lesson: that there’s no one-size-fits-all in the realm of data science. Each dataset is unique, necessitating custom strategies and an open mind. Dive into your datasets with curiosity, keep the learning mantra alive, and before you know it, you’ll find yourself weaving models that strike the perfect balance between complexity and simplicity.

And there we have it, the wild ride of overfitting and underfitting issues! Stay curious, keep experimenting, and most importantly, have fun along the way. After all, isn’t data science an adventure in itself?