In data science and machine learning, building a predictive model is only half the job. The other half is evaluating it carefully enough to know whether its accuracy will hold up on data it has never seen. Enter cross-validation: a resampling method that repeatedly trains a model on one part of the data and tests it on the rest, giving practitioners a far more honest look at performance than a single score on the training set. So why has cross-validation become such a staple of data science, and how does it help reveal the true predictive power of a model?

The strength of cross-validation lies in its ability to provide a robust assessment of model performance. By dividing the dataset into multiple subsets and letting the model train and test on different portions in turn, cross-validation keeps the evaluation from depending on any single, possibly lucky or unlucky, split of the data. Evaluating predictive models with cross-validation has become an indispensable tool in the data scientist’s kit because it gives a better estimate of how well a model generalizes. As a result, it is relied on in fields ranging from marketing analysis to scientific research, where accuracy and reliability are pivotal.
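
To make the point about single splits concrete, here is a minimal sketch using only the Python standard library. The data is synthetic, the "model" is a deliberately trivial baseline that predicts the training mean, and the helper name `fold_scores` is hypothetical, chosen for illustration. It shows that each held-out portion produces a somewhat different score, which is why the average across portions is the number worth reporting.

```python
import random
import statistics


def fold_scores(values, k, seed=0):
    """Return one mean-absolute-error score per fold for a
    'predict the training mean' baseline (illustrative only)."""
    indices = list(range(len(values)))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices into k nearly equal folds.
    folds = [indices[i::k] for i in range(k)]

    scores = []
    for test in folds:
        held_out = set(test)
        train_values = [values[i] for i in indices if i not in held_out]
        prediction = statistics.fmean(train_values)  # "fit" on the training part
        errors = [abs(values[i] - prediction) for i in test]
        scores.append(statistics.fmean(errors))  # score on the held-out part
    return scores


# Synthetic data, assumed purely for demonstration.
rng = random.Random(7)
values = [rng.gauss(50, 10) for _ in range(60)]

scores = fold_scores(values, k=5)
print("per-fold MAE:", [round(s, 2) for s in scores])
print("mean MAE:", round(statistics.fmean(scores), 2))
print("spread (stdev):", round(statistics.stdev(scores), 2))
```

The spread across folds is a rough indication of how much a single train/test split could have misled you in either direction.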

Moreover, cross-validation’s versatility is one of its most attractive features. It doesn’t discriminate among different types of predictive models—be it regression or classification, linear or nonlinear. This universality means that whether you’re a seasoned data scientist working on complex algorithms or a novice exploring machine learning for the first time, cross-validation will meet you where you are, ready to provide insights that can refine and redefine your model evaluation approach.

The Power of Cross-Validation

Cross-validation’s ability to offer a comprehensive view of model performance, and to expose overfitting before a model ships, makes it a darling of the machine learning community. Strictly speaking, it does not prevent overfitting on its own; it reveals it, so that you can choose a simpler model or better settings. It’s as if cross-validation is the Watson to your Sherlock, tirelessly checking your reasoning against fresh evidence so that your conclusions hold up beyond the data you happened to train on.

Yet, it’s not just about precision. There’s a human element here—quite fitting in this era of AI and machine learning, where the ultimate goal is to augment human decision-making. Through evaluating predictive models with cross-validation, data practitioners attain a sense of confidence and trust in their models, empowering them with the credibility needed to base significant real-world decisions on these algorithms.

—

Understanding the Mechanics of Cross-Validation

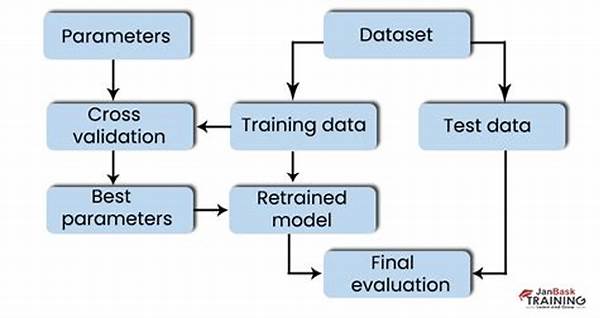

Cross-validation can seem mystical, especially for those new to the concept. It involves partitioning the data into subsets, making sure every subset takes a turn as the held-out test set, and training the model on the remainder each time. Doing so exposes overfitting, a common pitfall in machine learning where models perform exceptionally well on training data but falter with new, unseen data. Evaluating predictive models with cross-validation catches this by running multiple rounds of training and testing and comparing performance on held-out data with performance on the training data.
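
The overfitting pitfall described above can be sketched in a few lines of standard-library Python. The assumptions here are all illustrative: the data is synthetic (a straight line plus noise), the model is a one-nearest-neighbor predictor picked because it memorizes its training data, and the function names are hypothetical. The sketch compares the error on the training portion with the error on the held-out fold in each round.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle the indices once, then deal them into k nearly equal folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]


def predict_1nn(train_x, train_y, x):
    """Return the target of the closest training point (a model that memorizes)."""
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def cross_validate(xs, ys, k=5, seed=0):
    """Return (average training MSE, average held-out MSE) over k folds."""
    train_errors, test_errors = [], []
    for test_idx in k_fold_indices(len(xs), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(xs)) if i not in held_out]
        train_x = [xs[i] for i in train_idx]
        train_y = [ys[i] for i in train_idx]

        # Error on the same points the model was "trained" on.
        train_pred = [predict_1nn(train_x, train_y, x) for x in train_x]
        train_errors.append(mean_squared_error(train_y, train_pred))

        # Error on the fold the model has never seen.
        test_y = [ys[i] for i in test_idx]
        test_pred = [predict_1nn(train_x, train_y, xs[i]) for i in test_idx]
        test_errors.append(mean_squared_error(test_y, test_pred))

    return sum(train_errors) / k, sum(test_errors) / k


# Synthetic data, assumed for illustration: y = 2x plus Gaussian noise.
rng = random.Random(42)
xs = [i / 10 for i in range(100)]
ys = [2 * x + rng.gauss(0, 1) for x in xs]

train_mse, test_mse = cross_validate(xs, ys, k=5)
print("average training MSE:", train_mse)
print("average held-out MSE:", test_mse)
```

Because the nearest-neighbor model simply looks up the answer for any point it has already seen, its training error is zero, while the held-out error is not. That gap is the signal cross-validation is designed to surface.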

Types of Cross-Validation

Among the popular types of cross-validation, K-Fold is perhaps the most recognized. Here, the data is split into ‘k’ parts (folds), and the model is trained ‘k’ times, each time leaving out a different fold for testing; the ‘k’ test scores are then averaged. Another variant, Leave-One-Out, is the special case where ‘k’ equals the number of data points, which gives an exhaustive assessment at the cost of one model fit per data point. Stratified K-Fold is often employed in classification tasks, ensuring each fold preserves the class distribution of the entire dataset.
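
The three schemes differ only in how they pick the held-out indices, which a short standard-library sketch can show. The function names below are hypothetical and the logic is simplified: it skips shuffling, which you would normally do first, and libraries such as scikit-learn ship more complete, tested versions of these splitters.

```python
from collections import defaultdict


def k_fold(n_samples, k):
    """Yield (train, test) index lists; every sample is tested exactly once."""
    folds = [list(range(n_samples))[i::k] for i in range(k)]
    for test in folds:
        held_out = set(test)
        train = [i for i in range(n_samples) if i not in held_out]
        yield train, test


def leave_one_out(n_samples):
    """K-Fold where k equals the number of samples: one test point per round."""
    return k_fold(n_samples, n_samples)


def stratified_k_fold(labels, k):
    """Deal each class's indices across the folds in turn, so every fold
    keeps roughly the same class mix as the full dataset."""
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)

    folds = [[] for _ in range(k)]
    slot = 0  # running position, so leftovers do not all pile into fold 0
    for members in by_class.values():
        for i in members:
            folds[slot % k].append(i)
            slot += 1

    for test in folds:
        held_out = set(test)
        train = [i for i in range(len(labels)) if i not in held_out]
        yield train, sorted(test)


# Illustrative, made-up labels: 8 samples of class "a", 4 of class "b".
labels = ["a"] * 8 + ["b"] * 4
for train, test in stratified_k_fold(labels, k=4):
    print("test fold classes:", [labels[i] for i in test])
```

With these labels, each of the four test folds holds two "a" samples and one "b" sample, mirroring the 2:1 ratio of the full dataset. A plain K-Fold split gives no such guarantee, which is why the stratified variant is preferred when classes are imbalanced.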

But let’s not get drowned in jargon. Think of cross-validation as your insurance policy against model disappointment. It’s this meticulous practice of evaluating predictive models with cross-validation that lets data scientists sleep easy, knowing their models have been tested on data they were not trained on. This process, akin to a dress rehearsal, checks that models are not just impressive on paper but dependable in practice.

—

Practical Steps in Evaluating Models with Cross-Validation

Why Cross-Validation Matters

The power of evaluating predictive models with cross-validation is easiest to see in the results it produces: a score for every fold, plus the average and spread across folds. These numbers give data practitioners a clear lens through which to view a model’s capabilities and shortcomings. It’s not only about crunching numbers but also about fostering a deeper understanding of data patterns and behaviors. From a marketer’s perspective, imagine crafting a campaign that hinges on a fallible model. Cross-validation makes such blunders far less likely by scrutinizing the model thoroughly before deployment.

As data becomes the new oil in today’s digital age, understanding the why and how of cross-validation is vital. Picture a seasoned data scientist candidly admitting, “Cross-validation saved us from a potential flop,” and you can see why it has earned its place in predictive modeling. Indeed, evaluating predictive models with cross-validation plays an integral role in the data world, where accuracy is synonymous with success.

The Human Story

Many success stories in the data realm have an unsung hero like cross-validation working behind the scenes. Take the heartwarming tale of Jane, the data analyst. Initially overwhelmed by the intricacies of machine learning, Jane discovered cross-validation while exploring ways to improve the accuracy of her company’s predictive model. It was the turning point in her career, transforming her into the go-to person for reliable data-driven insights.

In conclusion, the tale of evaluating predictive models with cross-validation is one where the hero is not an individual but a method, a testament to the pursuit of rigor in data science. Join the movement: implement cross-validation, and watch your models evolve into trusted advisors whose performance estimates you can actually defend.

—

Implementing Cross-Validation in Your Workflow

Taking Action on Cross-Validation Insights

At the end of the day, evaluating predictive models with cross-validation isn’t just a statistical procedure; it’s a strategic tool that can redefine success within organizations. It challenges data practitioners to look beyond face value, diving deep into what their models reveal about their data. Don’t just create a model; ensure it has the breadth and depth to conquer real-world challenges, armed with insights gained through rigorous cross-validation.