Have you ever spent hours or even days designing a machine learning model, only to discover that its accuracy falls short when put to the test? Don’t worry, you’re not alone. Many data scientists and machine learning enthusiasts face this hurdle. This article sheds light on how improving model accuracy with cross-validation can be your saving grace. In a fast-paced world where data is the new oil, ensuring that your models are both accurate and reliable is paramount. The tool you need could well be cross-validation: a technique that gives you a more honest estimate of how a model performs on unseen data, so you can select and tune models with greater confidence.

Model accuracy is the heart of any successful predictive endeavor. Without it, predictions fail, with potentially costly repercussions in finance, healthcare, or marketing. So, how do data scientists tackle poor model performance? Enter cross-validation. This method not only boosts confidence but also lays down the framework for building robust models that hold up when the data changes. In our journey today, let’s take a peek into this game-changing technique and explore practical narratives where improving model accuracy with cross-validation turned the tide.

Running a model once isn’t enough to ascertain its proficiency. Startups and tech giants alike lean on cross-validation: splitting a dataset into multiple subsets, running repeated rounds of training and testing, and using the results to pick a model that holds up across all of them. Think of it as perfecting a dish over a month through a systematic feedback loop rather than judging it on a single attempt. By the end of this article, you’ll have not only a richer understanding but also a practical tool for building models you can trust.

Benefits of Cross-Validation in Enhancing Model Accuracy

Now, let’s focus on tangible benefits. Cross-validation creates multiple train-test splits which are critical for exposing models to different variations of data. There’s an unparalleled sense of assurance that emerges when your model gracefully tackles each split without flinching. Remember the story of blindfolded archers hitting bullseyes? Cross-validation can make your model that archer, shooting arrows with peerless precision, no matter the obstacle. Dive into the fine statistics and watch as your models become the unsung heroes behind successful ventures.

—

In the bustling IT corridors where every decision pulsates with the ripple effect of data, model accuracy isn’t just a necessity; it’s the credo of data science connoisseurs. Mastering model accuracy with cross-validation is not unlike an artisan crafting a masterpiece sculpture; every notch and detour is a tale. Stories fly around the globe about data scientists swooning over cross-validation as if it were a white-gloved magician revealing hidden secrets of the data. While the sciences may saturate data stories, understanding the culture of testing models through cross-validation offers a compelling narrative.

Cross-validation systematically divides the data into “k” folds; imagine slicing a cake so everyone gets a piece. Each slice takes one turn as the test set while the remaining k-1 slices train the model, so every observation is used for testing exactly once. The elegance of improving model accuracy with cross-validation is evident: it tests the model across several splits, so a single lucky (or unlucky) split cannot dominate the result. Cross-validation does not prevent overfitting by itself, but it makes overfitting far easier to detect than a single static train-test split does, which in turn steers you toward models that generalize better.
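To make the cake-slicing picture concrete, here is a minimal sketch of how the k folds can be generated using only Python’s standard library. The function name `k_fold_indices` and its `seed` parameter are illustrative choices for this article, not part of any particular library; libraries such as scikit-learn ship ready-made equivalents.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Return a list of (train_indices, test_indices) pairs, one per fold."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # shuffle once so folds are not ordered
    # Fold i takes every k-th shuffled index, so fold sizes differ by at most one.
    folds = [indices[i::k] for i in range(k)]
    splits = []
    for i, test_fold in enumerate(folds):
        # All the other folds together form the training set for this round.
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        splits.append((train, test_fold))
    return splits

splits = k_fold_indices(n_samples=10, k=5)
for round_number, (train, test) in enumerate(splits, start=1):
    print(f"round {round_number}: train on {len(train)} samples, test on {len(test)}")
```

Each sample lands in exactly one test fold, so the scores from the k rounds can be averaged into one overall estimate.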

Ever heard the story of the analytics team that turned an average investment suggestion system into an award-winning recommendation engine? Their secret? Unwavering dedication to improving model accuracy with cross-validation. It wasn’t alchemy or a stroke of luck, but persistent cycles of training and testing, iteratively boosting accuracy levels akin to a savvy sports coach refining a team with every game. To outsiders, it might seem like black magic, but insiders know the trade to be a calculated dance of tests and balances.

Real-Life Testimonials: Behind the Scenes with Cross-Validation

When top-tier companies share their testimonials, one name surfaces repeatedly — cross-validation. Like a chef’s secret ingredient hidden away until just the right moment, cross-validation becomes the unassuming hero in their successful projects. From curating playlists that seem to read users’ minds to predicting stock trends that would make even Gordon Gekko green with envy, improving model accuracy with cross-validation is the behind-the-scenes actor that scripts a story of triumph and accuracy.

Examples of Implementing Cross-Validation

Implementing cross-validation is a cakewalk when broken down into palatable steps. Here are eight ways to get started:

—

Cross-validation stands as a verdict — the clearinghouse where different datasets are judged, tested, and reaffirmed before ascending the rungs of credible predictions. The narrative has evolved over years, striking a balance where accuracy and flexibility coexist. It’s mind-boggling how far-reaching the implications of improving model accuracy with cross-validation can be; models that stood uncertain now shimmer under its guidance.

How Cross-Validation Transforms Predictions

The crux is that cross-validation fosters an environment where predictions aren’t mere stochastic occurrences but a byproduct of robust validation. Imagine transforming a secret recipe into a remarkable culinary art where each flavor complements another. With consistent cross-validation efforts, predictions are polished from rough guesses to shining examples of accuracy, offering faithfully tested, reliable outputs for real-world scenarios.

Crafting Stories with Cross-Validation

Peek behind the velvet curtains to see how businesses weave stories where improving model accuracy with cross-validation is at the heart of smart growth strategies. Insurance industries predicting policy risk profiles, e-commerce platforms honing product recommendations, healthcare systems pre-emptively diagnosing diseases — the list goes on, each with a tale of how validated predictions cut errors and magnified profits.

Companies leading this charge share an awe-inspiring sentiment — if cross-validation is an investment, the returns exceed imagination. In a data-driven culture where algorithms spin tales, hear from sectors that have danced the dance and find yourself not just convinced but ready to embrace cross-validation with open arms.

—

When diving into the depths of data science, ensuring high model accuracy is akin to finding the philosopher’s stone: elusive but invaluable. Improving model accuracy with cross-validation, a long-established yet evolving approach, stands resolute at the heart of our quest for precision. As you embark on this journey, get ready for an exploration of how meticulous validation leads to better models.

Stats don’t lie, say the wise. Imagine curating a playlist that resonates with listeners or pivoting enterprise strategies based on highly accurate forecasts. This is where the art of improving model accuracy with cross-validation outshines isolated testing methods. Let’s outfit it with real-world examples seasoned generously with industry case studies, cementing its place as an ally for someone who’s serious about harnessing accurate models.

Skilled practitioners can vouch for how this technique cuts down guesswork: a model tested across multiple cross-validation folds reveals its true performance, and the optimistic bias of a single favorable split is kept in check. By maintaining a persistent loop of validation, you end up with predictive models whose reported accuracy you can trust, regardless of the domain. So here’s your call to action: adopt cross-validation and redefine what’s possible with every dataset you harness.

Building Your Own Path with Cross-Validation Techniques

Explore the many forms of cross-validation, each suited to particular data characteristics. Whether you implement K-Fold or Leave-One-Out, there’s a fitting technique for every scenario. The results aren’t just numbers; they’re insights that can reshape strategies and feed into better forecasts.
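One way to see how K-Fold and Leave-One-Out relate: Leave-One-Out is simply K-Fold with k equal to the number of samples. The sketch below assumes a deliberately trivial “model” that predicts the mean of its training values, and the helper name `cv_mean_absolute_error` is hypothetical; it only illustrates the mechanics.

```python
def cv_mean_absolute_error(values, k):
    """Cross-validate a 'predict the training mean' baseline with k contiguous folds."""
    n = len(values)
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and len(values)")
    fold_scores = []
    for i in range(k):
        # Contiguous fold boundaries; fold sizes differ by at most one.
        start, stop = i * n // k, (i + 1) * n // k
        test = values[start:stop]
        train = values[:start] + values[stop:]
        prediction = sum(train) / len(train)  # the "model" is just the training mean
        fold_scores.append(sum(abs(v - prediction) for v in test) / len(test))
    return sum(fold_scores) / k  # average the per-fold errors

values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
three_fold = cv_mean_absolute_error(values, k=3)
leave_one_out = cv_mean_absolute_error(values, k=len(values))  # k = n
print(f"3-fold MAE: {three_fold:.3f}, leave-one-out MAE: {leave_one_out:.3f}")
```

Leave-One-Out uses almost all of the data for training in every round, but it needs one model fit per sample, which is why K-Fold with a small k is the usual choice for larger datasets. Note that this toy uses contiguous folds on sorted data; real data is normally shuffled first unless its order matters.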

—

If you’re ready to take a leap and improve your data models, here are ten invaluable tips:

1. Understand Your Data: Before splitting, comprehend details about its nature.

2. Choose the Right Cross-Validation Method: Decide between K-Fold, LOOCV, etc.

3. Stratify Your Folds: Ensure class balance across folds.

4. Preprocess Data Diligently: Scale or normalize data, but fit the scaler on each training fold only, then apply it to the matching test fold.

5. Limit Data Leakage: Keep training/testing partitions distinct.

6. Include Hyperparameter Tuning: Tune hyperparameters in an inner loop with nested cross-validation, so the outer folds still give an unbiased estimate.

7. Maintain Consistency: Use the same folds (for example, a fixed random seed) when comparing models.

8. Monitor Variability: Track variations across different folds.

9. Use Repeated Techniques: Employ repeated k-fold for better robustness.

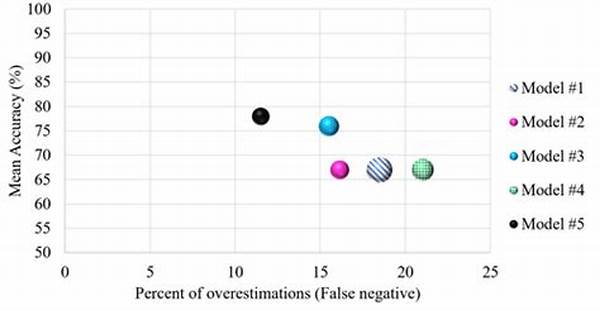

10. Visualize Results: Plot per-fold errors to troubleshoot efficiently.
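A few of the tips above (stratifying folds, avoiding leakage during preprocessing, and monitoring variability) can be sketched with the standard library alone. The helper names `stratified_folds`, `standardize_with_train_stats`, and `summarize_scores` are made up for this illustration, and the scores passed in at the end are placeholder numbers, not results from a real model.

```python
import random
import statistics
from collections import defaultdict

def stratified_folds(labels, k, seed=0):
    """Assign sample indices to k folds while keeping class proportions similar."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal indices round-robin so every fold gets a share of each class.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds

def standardize_with_train_stats(train_values, test_values):
    """Scale both partitions using statistics from the training fold only."""
    mean = statistics.fmean(train_values)
    std = statistics.pstdev(train_values) or 1.0  # guard against zero variance

    def scale(values):
        return [(v - mean) / std for v in values]

    # The test fold never influences mean or std, which avoids leakage.
    return scale(train_values), scale(test_values)

def summarize_scores(fold_scores):
    """Return the mean score and its spread (sample standard deviation) across folds."""
    return statistics.fmean(fold_scores), statistics.stdev(fold_scores)

labels = [0] * 8 + [1] * 4                      # imbalanced toy labels
folds = stratified_folds(labels, k=4)
train_scaled, test_scaled = standardize_with_train_stats([1.0, 2.0, 3.0], [10.0])
mean_score, spread = summarize_scores([0.80, 0.90, 1.00])  # placeholder scores
print(f"fold sizes: {[len(f) for f in folds]}")
print(f"mean score: {mean_score:.2f}, spread across folds: {spread:.2f}")
```

A large spread across folds is a hint that the model is sensitive to which data it sees, which is worth investigating before trusting the average.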

Data Integrity and Cross-Validation Analysis

Never underestimate the power of thorough data analysis. Combining statistical insight with creativity, improving model accuracy with cross-validation paves the way to a deeper understanding of your data. What sets this strategy apart is that it yields stable performance estimates, informed by repeated validation across varied data segments, each offering a fresh perspective.

Bridging Experience with Innovation in Cross-Validation

Merge established wisdom with innovative approaches. By leveraging your understanding of foundational techniques alongside cutting-edge advancements in model testing, boost your ability to scrutinize, refine, and eventually improve model accuracy with cross-validation. Step forward with renewed confidence, knowing your strategic improvements lead to tangible, efficient outcomes.

—

How does one navigate this maze of possibilities and potential pitfalls? Enter the world of cross-validation — a methodical approach that polishes models to shine even in the most stringent tests. Diving into this art form might just uncover inspiration fueling improved structures and progressively refined models. If data is the new currency, improving model accuracy with cross-validation is the minting process.

These narratives reveal the possibilities opened up by intelligent data handling. Improving model accuracy with cross-validation isn’t just backend jargon but a formidable driver behind industry success stories, producing models whose accuracy scores hold up under scrutiny. Whether you’re a rookie data enthusiast or a seasoned analyst, embracing cross-validation can raise the bar for model performance.

Trust in the Technique: Transform Your Models

With each fold of testing and validation, rely on cross-validation as your trusted companion to uncover the truth behind quirks and anomalies in your data. The payoff comes in spades and makes an undeniable case for embracing this storied technique. So, ready your data models, sprinkle on some hope, and watch as they unfurl their wings, soaring with refined precision. The art of improving model accuracy with cross-validation is here: embrace it, leverage it, and let your data science dreams take flight.