- Language Model Training Techniques

- Deep Dive into Training Methods

- Key Components in Training Language Models

- Introduction to the Nuances of Language Model Training Techniques

- Crafting Effective Language Models: Techniques Uncovered

- Key Pointers on Language Model Training Techniques

- Exploring the Intricacies of Language Model Training Techniques

Language Model Training Techniques

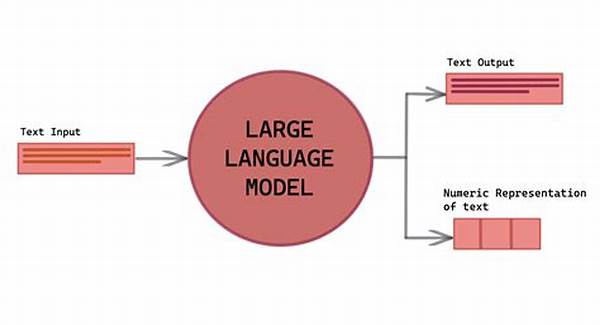

In the ever-evolving realm of artificial intelligence, the pivotal role of language models cannot be overstated. They’ve infiltrated numerous facets of our daily lives, from helping us compose emails to recommending what we should binge next on our favorite streaming platform. But how did these language models become so adept at mimicking human conversation and understanding context with such finesse? The answer lies in the sophisticated language model training techniques that continue to advance at a breathtaking pace.

The journey to perfecting language models is one full of intrigue and complexity. Think of it as teaching a child a new language, but on a more colossal scale. This “child” is none other than a computer program designed explicitly to understand and generate human language. However, unlike teaching a human, these language models require tremendous amounts of data and computational power to learn and improve. Large datasets filled with text from various sources are used to feed these models during the training phase. These datasets act as both the teacher and the playground for the language model as it learns the nooks and crannies of human language.

Among the foundational language model training techniques is supervised learning. This method trains the model on labeled data, where the correct output is already known. Through repetition and adjustment, the model brings its predictions closer to the desired outcome. Supervised learning is akin to correcting a student’s homework until they understand the material thoroughly. But wait, there’s more! Unsupervised learning takes a different approach: the model is given data with no explicit instruction on what to do with it. It’s a bit like giving a student a book to read with no guidance, letting them extract meaning and knowledge independently.
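To make the contrast concrete, here is a minimal Python sketch; the example sentences and the function name `next_token_pairs` are hypothetical. In the supervised case, every label is written by a person. In the second case, the targets come from the raw text itself: the model is asked to predict each next word from the words before it. Large language models are typically pretrained this way, a setup often called self-supervised learning, which is one practical form of learning from unlabeled data.

```python
# Supervised learning: each input arrives with a human-provided label.
# (Hypothetical example data.)
supervised_pairs = [
    ("I loved this film", "positive"),
    ("The plot made no sense", "negative"),
]


def next_token_pairs(text: str, context_size: int = 2) -> list[tuple[tuple[str, ...], str]]:
    """Build (context, next word) training pairs from unlabeled text.

    No human labels are needed: each target is simply the word that
    follows its context window in the raw text.
    """
    words = text.split()  # words stand in for the subword tokens real models use
    pairs = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])
        pairs.append((context, words[i]))
    return pairs


if __name__ == "__main__":
    for context, target in next_token_pairs("the cat sat on the mat"):
        print(context, "->", target)
```

Running it prints four pairs, starting with `('the', 'cat') -> sat`. The same sentence yields training signal without anyone having to annotate it.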

Deep Dive into Training Methods

The landscape of language model training techniques is vast and rich, with each approach offering unique perks tailored to various applications. Reinforcement learning is a standout methodology; for instance, reinforcement learning from human feedback (RLHF) is widely used to align chat-oriented models with human preferences. Through trial-and-error interactions with its environment, the model learns which outputs earn the highest reward, much as we humans learn to associate actions with rewards.
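The trial-and-error loop can be illustrated with a deliberately tiny sketch. Here the "model" is just a softmax policy over three hypothetical canned replies, the `reward` function is a made-up stand-in for real feedback, and the update rule is REINFORCE, a basic policy-gradient method chosen for brevity. It is a toy illustration of the idea, not how production language models are trained with reinforcement learning.

```python
import math
import random

# Hypothetical candidate replies the toy "model" can choose between.
ACTIONS = ["Sure, happy to help!", "Figure it out yourself.", "asdf qwerty"]


def reward(action: str) -> float:
    """Made-up reward signal: only the helpful reply earns a reward."""
    return 1.0 if action == ACTIONS[0] else 0.0


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train_reinforce(steps: int = 500, lr: float = 0.1, seed: int = 0) -> list[float]:
    """REINFORCE with a running-average baseline; returns the final policy."""
    rng = random.Random(seed)
    logits = [0.0] * len(ACTIONS)  # equal logits: every reply starts equally likely
    baseline = 0.0                 # running average reward, reduces update variance
    for _ in range(steps):
        probs = softmax(logits)
        a = rng.choices(range(len(ACTIONS)), weights=probs)[0]  # trial...
        r = reward(ACTIONS[a])                                   # ...and feedback
        advantage = r - baseline
        for k in range(len(logits)):
            # d log pi(a) / d logit_k = 1[k == a] - pi(k)
            grad = (1.0 if k == a else 0.0) - probs[k]
            logits[k] += lr * advantage * grad
        baseline += 0.05 * (r - baseline)
    return softmax(logits)


if __name__ == "__main__":
    for action, p in zip(ACTIONS, train_reinforce()):
        print(f"{p:.3f}  {action}")
```

After training, nearly all of the probability mass should sit on the rewarded reply. The baseline does not change the direction the policy moves in on average; it only makes individual updates less noisy.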

The Intersection of Techniques and Innovation

As technology progresses, so do the strategies behind language model training. Transformers, an architecture introduced in 2017 and defined by its ability to process all positions of a sequence in parallel, have heralded a new era of efficient model training. These aren’t your ordinary Transformers from sci-fi narratives, though. Their self-attention mechanism lets every token attend directly to every other token, so they handle long-range dependencies within the data well and capture context, a vital trait for more coherent language applications.
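The core of a transformer layer is scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V. The sketch below implements it in plain Python; it omits the learned projections, multiple heads, and masking that real transformer layers add, and the tiny matrices in the usage are hypothetical. Because each output row depends only on its own query and the shared keys and values, all rows can be computed at the same time, which is where the parallelism comes from.

```python
import math

Matrix = list[list[float]]


def softmax(row: list[float]) -> list[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q: Matrix, K: Matrix, V: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Row i of Q, K and V belongs to token position i. Each output row uses
    only its own query plus the shared keys and values, so all rows can be
    computed independently, and in parallel on suitable hardware.
    """
    d_k = len(K[0])
    out = []
    for q in Q:
        # Compare this query with every key, regardless of how far apart the tokens are.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output is a weighted average of the value vectors.
        out.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return out


if __name__ == "__main__":
    Q = [[1.0, 0.0], [0.0, 1.0]]
    K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
    for row in attention(Q, K, V):
        print([round(x, 3) for x in row])
```

The first token can draw on the third token's value vector as easily as on its neighbor's; distance in the sequence plays no role in the score, which is why long-range dependencies are easier to capture than in step-by-step models.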

Yet, in the whirlwind of AI advances, it’s crucial to remember that these language model training techniques aim for a balance: precision without losing the essence of human-like interaction. To illustrate, think of an overeager AI assistant interrupting your family dinner to remind you of a Discord message. Striking the right balance between utility and harmony is the crux of successful training methods.

Key Components in Training Language Models

1. Data Preprocessing: Cleaning and preparing data is essential for optimal model performance.

2. Feature Extraction: Identifying the most relevant features that can improve model predictions.

3. Model Architecture: Choosing a model structure that can best capture complex language nuances.

4. Optimization Techniques: Implementing algorithms to refine model weights for better accuracy.

5. Evaluation Metrics: Setting benchmarks to gauge the model’s understanding and generation abilities.

6. Tuning Hyperparameters: Adjusting variables that dictate the training process for improved outcomes.
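For item 5 (Evaluation Metrics), one widely used metric for language models is perplexity: the exponential of the average negative log-probability the model assigns to each actual next token in held-out text. The sketch below assumes you already have those per-token probabilities (the values in the usage note are hypothetical); lower perplexity is better.

```python
import math


def perplexity(token_probs: list[float]) -> float:
    """Perplexity from the probability the model gave each actual next token.

    PPL = exp(-(1/N) * sum(log p_i)). Lower is better; a model that assigns
    probability 1.0 to every correct token scores exactly 1.0.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    if any(not 0.0 < p <= 1.0 for p in token_probs):
        raise ValueError("each probability must be in (0, 1]")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)
```

For instance, `perplexity([0.25, 0.25, 0.25, 0.25])` is 4 (up to floating-point rounding): on average, the model is as uncertain as a uniform choice among four tokens.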

Introduction to the Nuances of Language Model Training Techniques

Have you ever wondered what happens behind the scenes of your voice assistant? How does it understand your quirky requests with such precision? Much of the answer lies in the techniques used to train the language models behind it. These techniques form the bedrock of artificial intelligence applications that handle human language. They’re akin to the magical elixir that transforms ordinary algorithms into sophisticated models that understand and interact through speech and text.

The training of these models is a journey marked by rigorous data ingestion, computational wizardry, and algorithmic finesse. Imagine feeding a voracious reader an endless stream of books, articles, and conversations – that’s similar to how a model is trained. Each piece of data contributes to its growing understanding of context, syntax, and semantics.

At the core, these language model training techniques revolve around various strategies that fine-tune the models for specific tasks. From the initial steps of gathering and preprocessing data to the complex phase of adjusting model parameters, every action aims to create a model that isn’t just a dull, mechanical construct but one that feels almost lifelike in its interactions.

The relentless research and development dedicated to these techniques promise an exciting future where language processors understand not just the what of our words, but the why and how. As these models continue their upward trajectory, they bring us closer to a seamless blend of human-like comprehension and AI interaction.

Crafting Effective Language Models: Techniques Uncovered

In the riveting world of AI, language models stand as towering giants, forever learning and adapting. But how do they attain such prowess at understanding language? The key lies in the diverse set of language model training techniques employed throughout their development.

Leveraging the power of vast datasets forms the backbone of any language model training endeavor. Large language models, like OpenAI’s GPT or Google’s BERT, don’t just rely on quantity: the quality and context of their training data matter as much as its sheer size. Before training commences, that data typically undergoes rigorous preprocessing and structuring, such as cleaning, filtering, and deduplication, to refine it to its purest form.
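A minimal sketch of such a preprocessing pass, assuming a list of raw text documents. The steps and the `min_words` threshold are illustrative choices, not a description of how GPT or BERT data was actually prepared; real pipelines usually go further, for example with near-duplicate detection and learned quality filters.

```python
import re
import unicodedata


def clean_corpus(docs: list[str], min_words: int = 3) -> list[str]:
    """Toy cleaning pass: normalize, strip markup, filter, and deduplicate."""
    seen: set[str] = set()
    cleaned = []
    for doc in docs:
        text = unicodedata.normalize("NFKC", doc)  # unify equivalent Unicode forms
        text = re.sub(r"<[^>]+>", " ", text)       # drop leftover HTML tags
        text = re.sub(r"\s+", " ", text).strip()   # collapse runs of whitespace
        if len(text.split()) < min_words:          # filter very short fragments
            continue
        key = text.lower()
        if key in seen:                            # remove exact duplicates (case-insensitive)
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned


if __name__ == "__main__":
    raw = [
        "<p>The cat sat on the mat.</p>",
        "The  cat sat on the mat.",
        "Hi!",
        "A\u00a0brand new   sentence here",
    ]
    print(clean_corpus(raw))
```

Here the second document is dropped as a duplicate of the first once its markup and extra spaces are gone, "Hi!" is filtered as too short, and the non-breaking space in the last document is normalized to an ordinary space.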

Advancements in model architecture also play a pivotal role. In recent years, transformers have revolutionized how models manage data. Unlike traditional sequential models such as recurrent neural networks, which read text one token at a time, transformers use self-attention to process all tokens in a sequence at once, enabling parallel processing and improving both training efficiency and the model’s grasp of context.

Optimization algorithms are equally vital. They adjust the model’s internal parameters (its weights). Techniques like stochastic gradient descent nudge each parameter in the direction that reduces the training loss, estimated on small random samples of the data, gradually improving the model’s ability to understand and generate language. But that’s not all: regularization methods like dropout and early stopping are incorporated to prevent overfitting and ensure the model generalizes well to new data.
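To ground the optimization and early-stopping ideas, here is a small sketch that fits a line y = w * x + b to hypothetical noisy data with stochastic gradient descent, updating the parameters after every single example, and stops once the validation loss has not improved for `patience` epochs. The data, learning rate, and patience are assumptions chosen for illustration; dropout is omitted because a one-layer linear model has no hidden units to drop.

```python
import random


def make_data(n: int, rng: random.Random) -> list[tuple[float, float]]:
    """Hypothetical toy data: y = 2x + 1 plus a little Gaussian noise."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        data.append((x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.1)))
    return data


def mse(w: float, b: float, data: list[tuple[float, float]]) -> float:
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def train_sgd(train, val, lr=0.05, max_epochs=200, patience=5, seed=0):
    """SGD on y = w*x + b with early stopping on validation loss.

    Returns (best validation loss, w, b, epoch at which it was reached).
    """
    rng = random.Random(seed)
    train = list(train)  # copy so shuffling does not modify the caller's list
    w, b = 0.0, 0.0
    best = (float("inf"), w, b, -1)
    epochs_without_improvement = 0
    for epoch in range(max_epochs):
        rng.shuffle(train)
        for x, y in train:  # one example per update: the "stochastic" part
            err = w * x + b - y
            # Gradient of err**2 with respect to w and b.
            w -= lr * 2 * err * x
            b -= lr * 2 * err
        val_loss = mse(w, b, val)
        if val_loss < best[0]:
            best = (val_loss, w, b, epoch)
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss stopped improving: stop early
    return best


if __name__ == "__main__":
    rng = random.Random(42)
    train_set, val_set = make_data(100, rng), make_data(50, rng)
    loss, w, b, epoch = train_sgd(train_set, val_set)
    print(f"val MSE={loss:.4f}  w={w:.2f}  b={b:.2f}  best epoch={epoch}")
```

Returning the parameters from the best validation epoch, rather than the last one, is a common way to apply early stopping; the held-out set acts as the referee for when further training stops helping.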

Key Pointers on Language Model Training Techniques

1. Data Preparation: The initial step, which involves cleaning, normalizing, and structuring datasets for effective learning.

2. Algorithm Selection: Picking the right learning algorithm to align with model goals.

3. Training Objectives: Defining clear objectives that the model must achieve during its training phase.

4. Model Scalability: Ensuring models are scalable to handle increased data without losing efficiency.

5. Error Analysis: Implementing strategies to analyze and reduce prediction errors.

6. Cross-validation: Using cross-validation methods to check that model performance is consistent across different splits of the data.

7. GPU Acceleration: Leveraging powerful hardware for quicker model training cycles.

8. Monitoring and Adjusting: Continuously observing model outputs and adjusting training parameters as needed.

9. Continuous Evaluation: Periodically assessing the model’s ability to adapt to new language inputs.

10. User Feedback Integration: Incorporating user feedback to iteratively improve model interaction.
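For item 6 (Cross-validation), a minimal k-fold sketch: the indices are split into k folds, each fold serves once as the validation set, and the spread of the k scores shows how consistent performance is. The `evaluate` callback is a hypothetical placeholder for training and scoring a model on a given split, and the folds are contiguous, so data with a meaningful order should be shuffled first. For very large language models, retraining k times is usually too costly, so a single held-out split is more common; k-fold is most practical for smaller models.

```python
import statistics
from typing import Callable

Split = tuple[list[int], list[int]]  # (training indices, validation indices)


def k_fold_indices(n: int, k: int) -> list[Split]:
    """Split indices 0..n-1 into k contiguous folds; each is the validation set once."""
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    splits, start = [], 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        splits.append((train, val))
        start += size
    return splits


def cross_validate(
    n: int, k: int, evaluate: Callable[[list[int], list[int]], float]
) -> tuple[float, float]:
    """Return the mean and (population) standard deviation of the k validation scores."""
    scores = [evaluate(train, val) for train, val in k_fold_indices(n, k)]
    return statistics.mean(scores), statistics.pstdev(scores)
```

A small standard deviation relative to the mean suggests the model's performance does not hinge on which examples happened to land in the validation set.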

Exploring the Intricacies of Language Model Training Techniques

The realm of AI is exciting and dynamic, with language model training techniques leading the charge. But what makes these techniques essential, and how do they shape the future of technology as we know it?

At its core, language model training taps into the immense potential of data. These models require enormous amounts of text to improve their understanding and generation capacities, which makes the data itself their primary teacher. Through repeated stages of learning, refining, and adjusting, these training techniques help models keep pace with the ever-changing nuances of human language.

Understanding the role of algorithmic innovation in model training is crucial. Algorithms guide the learning journey, equipping models with the ability to predict, classify, and generate language. From Bayesian networks to neural networks, the arsenal of algorithms is broad and tailored for different language tasks.
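As a concrete, deliberately simple example of an algorithm that both predicts and generates language, here is a bigram model: it counts which word follows which and uses those counts to predict or sample the next word. It is swapped in for illustration and is neither a Bayesian network nor a neural network; neural language models replace the counts with learned parameters and far longer contexts. The three-sentence corpus in the usage is hypothetical.

```python
import random
from collections import Counter, defaultdict
from typing import Optional

START, END = "<s>", "</s>"  # sentence boundary markers


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word follows each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = [START] + sentence.lower().split() + [END]
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts: dict[str, Counter], word: str) -> Optional[str]:
    """Prediction: the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    return followers.most_common(1)[0][0] if followers else None


def generate(counts: dict[str, Counter], rng: random.Random, max_words: int = 10) -> str:
    """Generation: sample one word at a time in proportion to bigram counts."""
    word, out = START, []
    for _ in range(max_words):
        followers = counts.get(word)
        if not followers:
            break
        word = rng.choices(list(followers), weights=list(followers.values()))[0]
        if word == END:
            break
        out.append(word)
    return " ".join(out)


if __name__ == "__main__":
    model = train_bigram(["the cat sat", "the cat ran", "the dog sat"])
    print(predict_next(model, "the"))  # "cat" follows "the" most often
    print(generate(model, random.Random(0)))
```

Because the model only ever looks one word back, it cannot keep track of context beyond its immediate neighbor, which is exactly the limitation that longer-context neural architectures address.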

What sets successful language model training apart is its adaptability. As languages evolve and new expressions emerge, these models must stay relevant and accurate. Semi-supervised learning offers a brilliant solution by combining labeled and unlabeled datasets to train models more flexibly, allowing them to grasp new terminologies and shifts in language use.
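One common way to put this into practice is self-training (pseudo-labeling): fit a model on the few labeled examples, label the unlabeled examples it is confident about, add them to the training set, and repeat. The sketch below uses a nearest-centroid classifier on one-dimensional numbers as a stand-in for text features; the data, class names, and `min_margin` threshold are hypothetical.

```python
def fit_centroids(labeled: list[tuple[float, str]]) -> dict[str, float]:
    """Nearest-centroid "model": the mean feature value of each class."""
    sums: dict[str, float] = {}
    counts: dict[str, int] = {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids: dict[str, float], x: float) -> tuple[str, float]:
    """Closest class plus a confidence margin (how much closer than the runner-up)."""
    dists = sorted((abs(x - c), y) for y, c in centroids.items())
    margin = dists[1][0] - dists[0][0] if len(dists) > 1 else float("inf")
    return dists[0][1], margin


def self_train(labeled, unlabeled, min_margin: float = 1.0, rounds: int = 5):
    """Self-training: repeatedly add confident pseudo-labels to the labeled set."""
    labeled = list(labeled)
    pool = list(unlabeled)
    for _ in range(rounds):
        centroids = fit_centroids(labeled)
        confident, uncertain = [], []
        for x in pool:
            label, margin = predict(centroids, x)
            if margin >= min_margin:
                confident.append((x, label))  # pseudo-label accepted
            else:
                uncertain.append(x)           # wait for a better model
        if not confident:
            break
        labeled.extend(confident)
        pool = uncertain
    return fit_centroids(labeled), labeled


if __name__ == "__main__":
    seed_labels = [(0.0, "neg"), (10.0, "pos")]
    centroids, grown = self_train(seed_labels, [1.0, 2.0, 8.5, 9.0, 4.9, 5.1])
    print(centroids, len(grown))
```

With these inputs, the four points far from the class boundary receive pseudo-labels, while 4.9 and 5.1, which sit roughly midway between the two centroids, stay unlabeled. The margin threshold is the key design choice: too low and the model reinforces its own mistakes, too high and the unlabeled data goes unused.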

In a world where timely communication and precise translation are paramount, these training techniques promise an exciting horizon where human-AI interaction is not just functional but also instinctively human-like.

These initiatives open doors to numerous possibilities, crafting models that serve humans with a level of accuracy and efficiency once deemed the realm of science fiction. But the adventure doesn’t stop here. Continuous innovation in language model training techniques ensures a future where AI is more relatable and more deeply integrated into our everyday lives than ever.

Language Model Training Techniques and Their Future

Understanding and implementing language model training techniques is crucial for advancing AI technology. They go beyond mere mechanics, representing a blend of data, algorithms, and adaptability—a trifecta pushing the limits of AI capabilities and reaching new frontiers in language processing and interaction.