Bias Mitigation in Machine Learning

Artificial intelligence and machine learning are more than buzzwords; they are actively reshaping industries, which makes bias mitigation in machine learning pivotal for both ethical AI and equitable outcomes. Imagine casting a net across the digital ocean to trawl for insights, only to discover the net favors one type of fish. That is what unmitigated bias looks like in a machine learning model. In the digital world, machine learning acts as both the net and the captain, making decisions about the massive sea of data we swim in daily.

Picture this: a machine learning model tasked with screening job applicants. Within minutes, it scans thousands of resumes, selecting only the “best” fits. However, unbeknownst to its human operators, the model has learned biases from historical hiring data. It favors certain demographic characteristics over others, namely those of groups that have historically benefited from systemic advantages. The cost is not only lost diversity but also lost innovation and profitability. This illustrates why bias mitigation in machine learning is crucial not just ethically, but also economically.

The quirky yet pressing question now becomes: “How do we teach a model to decide equitably?” Think of it as etiquette school for your algorithms. The work ranges from recognizing inherent biases in training data, to adjusting learning algorithms, to implementing fairness constraints. Here’s where the magic (and hard work) of bias mitigation starts. There is also a growing industry that provides these solutions professionally: from bespoke consultancy services to out-of-the-box fairness tools, businesses today have a wide range of options for tackling bias.

The Need for Bias Mitigation

The need to address bias in machine learning is more urgent than ever. With machine learning systems making critical decisions across sectors, the potential for harm from unchecked biases is high. Consider healthcare systems that use AI to decide treatment options or loan approval algorithms in financial institutions. Even a seemingly small bias can lead to severe real-world consequences, particularly for marginalized communities that might find themselves unfairly disadvantaged by these systems.

By implementing rigorous bias mitigation in machine learning, we can strive for fairness and inclusivity, allowing these models to promote just outcomes without inadvertently perpetuating historical biases. Trust in AI technology can only be built when users know it will treat everyone impartially. This is why the conversation about bias in machine learning is not optional but imperative in the ongoing dialogue about the future of AI and its role in shaping equitable societies.

Discussion on Bias Mitigation in Machine Learning

Let’s delve deeper into what makes bias mitigation such a critical aspect of machine learning. The journey to creating unbiased machine learning models starts by identifying the various sources of bias and understanding their implications. Without realizing it, developers can introduce bias at many stages within the machine learning lifecycle, whether through training data biases, algorithmic biases, or biases within user interactions.

Understanding Sources of Bias

Identifying and understanding the sources of bias in machine learning is akin to detective work. The training data is often ground zero, because historical data can reflect societal prejudices. If a model is trained on biased data, its outcomes are likely to be skewed and can magnify existing disparities. Understanding these biases is crucial to creating fairer, more balanced models.
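One way to start that detective work is to check how each group is represented in the training data and how often it received the favorable label. The sketch below is a minimal illustration using only the Python standard library; the `audit_by_group` helper, the `group` and `hired` field names, and the toy records are all hypothetical and not taken from any real dataset.

```python
from collections import Counter


def audit_by_group(records, group_key, label_key):
    """Return each group's share of the dataset and its positive-label rate."""
    counts = Counter(r[group_key] for r in records)
    positives = Counter(r[group_key] for r in records if r[label_key] == 1)
    total = len(records)
    return {
        g: {
            "share": counts[g] / total,
            "positive_rate": positives[g] / counts[g],
        }
        for g in counts
    }


# Hypothetical toy hiring history: group A is over-represented
# and was hired more often than group B.
records = (
    [{"group": "A", "hired": 1}] * 6
    + [{"group": "A", "hired": 0}] * 2
    + [{"group": "B", "hired": 1}] * 1
    + [{"group": "B", "hired": 0}] * 1
)

report = audit_by_group(records, "group", "hired")
for group, stats in sorted(report.items()):
    print(group, stats)
```

A large gap in either number does not prove the data is unfair, but it flags where a model trained on it could inherit a skew.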

Implementing Bias Detection

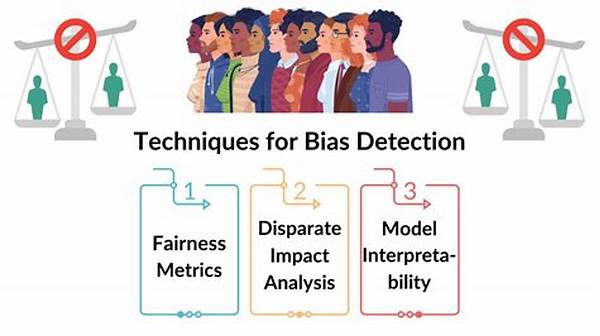

Once you’ve identified potential biases, the next step is to implement effective bias detection mechanisms. Various tools are available for this purpose, each offering its own features for sifting through data, analyzing patterns, and highlighting discrepancies, such as differences in outcomes between groups. The insights gained through proper bias detection empower developers to make smarter, fairer decisions.

Human oversight remains integral even with sophisticated detection tools, ensuring that the machine learning models don’t rely on unjust preconceptions. Real-life examples, such as chatbots or customer service AI, help illuminate how useful bias mitigation strategies are in crafting seamless, unbiased user experiences.
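To make bias detection concrete, here is a minimal sketch of two widely used group-fairness checks on model predictions: the demographic parity difference (the gap between the highest and lowest selection rates) and the disparate impact ratio (the lowest selection rate divided by the highest). The function names and the toy predictions are assumptions made for illustration; dedicated fairness libraries offer more complete versions of these metrics.

```python
def selection_rates(predictions, groups):
    """Fraction of positive predictions (1) per group."""
    totals, selected = {}, {}
    for pred, g in zip(predictions, groups):
        totals[g] = totals.get(g, 0) + 1
        selected[g] = selected.get(g, 0) + (1 if pred == 1 else 0)
    return {g: selected[g] / totals[g] for g in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest.

    Returns 1.0 when no group is selected at all, since the
    groups are then treated identically.
    """
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical screening output: 1 = shortlisted, 0 = rejected.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
gap = demographic_parity_difference(rates)
ratio = disparate_impact_ratio(rates)
print(rates, gap, ratio)
```

Neither number settles whether a model is fair; they are signals that tell a human reviewer where to look more closely.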

Strategies for Mitigation

There’s an exciting buffet of strategies available to tackle bias, from algorithmic tweaks to data pre-processing techniques. Data augmentation, fairness constraints, and algorithmic transparency are among the many strategies developers can adopt. Each approach has distinct advantages and limitations, and a combination often yields the best results.
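As one example of a data pre-processing technique, the sketch below uses reweighing, which is not named in the text above but is a common choice: each training example gets a weight equal to the expected frequency of its (group, label) pair under independence divided by the observed frequency. After weighting, group membership and label are statistically independent in the training set. The `reweighing_weights` function and the toy data are hypothetical, and the resulting weights would still need to be passed to a learner that accepts per-sample weights.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights: P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, target_group):
    """Weighted share of positive labels within one group."""
    rows = [(y, w) for g, y, w in zip(groups, labels, weights) if g == target_group]
    return sum(w for y, w in rows if y == 1) / sum(w for _, w in rows)


# Hypothetical toy data: group A has a 75% positive rate, group B 25%.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]

weights = reweighing_weights(groups, labels)
rate_a = weighted_positive_rate(groups, labels, weights, "A")
rate_b = weighted_positive_rate(groups, labels, weights, "B")
print(weights)
print(rate_a, rate_b)  # both groups now have the same weighted positive rate
```

The design choice here is to leave the data untouched and only change how much each example counts, which keeps the approach independent of the model that is trained afterwards.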

Continuous Monitoring and Ethical AI

Bias mitigation in machine learning isn’t a one-shot deal; continuous monitoring and improvement are necessary. Consider this an ongoing relationship, with periodic check-ins to ensure that the model still meets its fairness goals as the data changes.
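A periodic check-in can be as simple as recomputing a fairness metric on each new batch of predictions and flagging batches that cross a tolerance. The sketch below assumes a hypothetical `monitor` helper, weekly batch names, and a threshold of 0.1; none of these come from the text, and a suitable threshold depends on the application.

```python
def parity_gap(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    totals, selected = {}, {}
    for pred, g in zip(predictions, groups):
        totals[g] = totals.get(g, 0) + 1
        selected[g] = selected.get(g, 0) + (1 if pred == 1 else 0)
    rates = [selected[g] / totals[g] for g in totals]
    return max(rates) - min(rates)


def monitor(batches, threshold=0.1):
    """Return (batch_name, gap) for every batch whose gap exceeds the threshold."""
    alerts = []
    for name, predictions, groups in batches:
        gap = parity_gap(predictions, groups)
        if gap > threshold:
            alerts.append((name, round(gap, 3)))
    return alerts


# Hypothetical weekly batches of predictions (1 = favorable outcome).
batches = [
    ("week-1", [1, 0, 1, 0], ["A", "A", "B", "B"]),
    ("week-2", [1, 1, 1, 0], ["A", "A", "B", "B"]),
]

alerts = monitor(batches, threshold=0.1)
print(alerts)
```

In practice an alert like this would trigger a human review rather than an automatic change to the model.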

Creating ethical AI systems free of bias is a constantly evolving challenge but a necessary pursuit. Organizations must regularly review their models and operate within an ethical framework that keeps decisions impartial. As bias mitigation strategies in machine learning advance, they hold the promise of crafting an equitable digital ecosystem.

Seeking Professional Help

For organizations daunted by the technical intricacies involved, professional consultancies can provide tailored solutions and expert insights. Think of them as the personal trainers of the AI world, dedicated to fine-tuning your models for fairness in practice.

To sum it up, the journey towards bias-free machine learning is both intricate and invaluable. Through deliberate interventions, organizations can harness the full potential of machine learning while upholding principles of fairness and equality.

Objectives of Bias Mitigation in Machine Learning

Exploring Bias Mitigation Techniques

Addressing bias in machine learning is a nuanced venture that requires precision and dedication. As professionals dig deeper into this labyrinth, the tools available offer an enlightening guide towards ethical innovation. Bias mitigation in machine learning is no longer just a technical requirement but a moral compass directing us toward a future where AI benefits are equally distributed.

Tools and Technologies

A new wave of technological solutions is transforming how bias can be mitigated within machine learning. From open-source libraries that implement fairness algorithms to specialized software that monitors and corrects biases, the options at hand are diverse and empowering. Each tool, like a sculptor’s chisel, enables precision in crafting models that hold up to scrutiny on fairness and equality.

Organizations also have the option to engage external consultants specializing in ethical AI. These experts bring insights and tailored strategies to guide companies through the maze of bias, helping them emerge with systems that not only work but do so justly. In the end, bias mitigation becomes not just an exercise but a collective mission, unifying innovators on a shared path to equitable AI.