H1: LSTM versus Traditional RNN

In machine learning, Recurrent Neural Networks (RNNs) have been both heralded as revolutionary and critiqued for their limitations. Long Short-Term Memory (LSTM) networks, themselves a kind of RNN, were designed to solve the notorious problems associated with traditional (or “vanilla”) RNNs. But the question remains: do they really outperform the conventional models, or is it just new packaging for an old story? The battle of “LSTM versus Traditional RNN” is not just an academic debate; it’s a practical decision point for businesses, developers, and innovators looking to harness the power of AI. Let’s dive into this tale of two neural network architectures, exploring the nuances and understanding their impact on the future of AI technology.

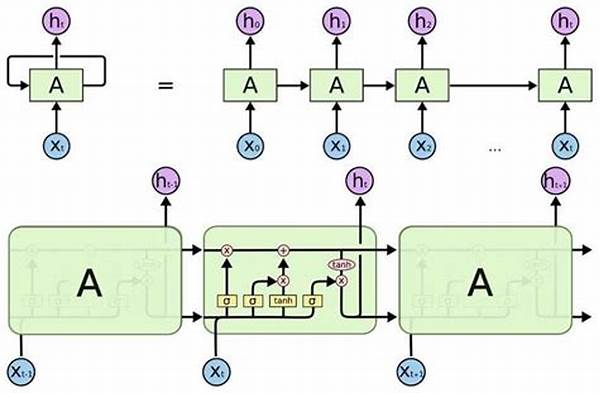

RNNs have long been used for sequence processing tasks because they carry a hidden state from one time step to the next. However, traditional RNNs run into vanishing and exploding gradients during training, which severely limits their ability to learn long-term dependencies. Many would liken this to trying to remember what you had for breakfast: as the day progresses, the details grow hazy. LSTM networks swoop in like brain-boosting supplements, mitigating the vanishing gradient problem in particular with an architecture that adds a separate cell state and gates to manage memory over longer periods. With a forget gate, an LSTM learns when and what information to retain or discard, a game changer in the realm of sequential data processing.
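To make the vanishing and exploding gradient problem concrete, here is a minimal sketch using a toy one-unit RNN. The function name `gradient_through_time` and the scalar setup are illustrative assumptions, not taken from any library; real networks use weight matrices, but the same repeated-multiplication effect applies.

```python
import math

def gradient_through_time(w, steps, h0=0.5, x=0.0, linear=False):
    """Return |d h_T / d h_0| for a toy scalar RNN (hypothetical helper).

    The recurrence is h_t = tanh(w * h_{t-1} + x), or h_t = w * h_{t-1} + x
    when linear=True. The gradient is a product of one factor per time step.
    """
    h = h0
    grad = 1.0
    for _ in range(steps):
        if linear:
            h = w * h + x
            grad *= w                    # d h_t / d h_{t-1} = w
        else:
            h = math.tanh(w * h + x)
            grad *= w * (1.0 - h * h)    # chain rule through tanh
    return abs(grad)

# Small recurrent weight: the gradient shrinks with every extra step.
print(gradient_through_time(0.5, steps=10))
print(gradient_through_time(0.5, steps=50))

# Weight above 1 with no squashing nonlinearity: the gradient blows up.
print(gradient_through_time(1.5, steps=50, linear=True))
```

With a recurrent weight below 1, the signal from early time steps fades toward zero, which is why a vanilla RNN struggles to connect distant events in a sequence.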

The leap from traditional RNN to LSTM is akin to the move from video tapes to streaming services. Just as streaming changed how we consume media, LSTMs changed the way sequence data is processed. In natural language processing and speech recognition, they have shown clear improvements over their vanilla RNN predecessors on tasks with long-range context, and they have also been applied to noisier problems such as stock price prediction. Thus, as we stand on the cusp of the AI revolution, the choice between LSTM and traditional RNN becomes not just about technology, but about envisioning a future where machines understand and anticipate our needs in ways we once thought impossible.

H2: Navigating the Decision between LSTM and Traditional RNN

In the intricate dance of AI and machine learning advancements, the decision to use LSTM versus traditional RNN can feel like picking the lead dancer for a grand performance. LSTM has gained favor for its sophisticated design and its ability to remember long-term context, something traditional RNNs struggle with. However, the decision isn’t always clear-cut. Let’s look more closely at the core functionality of each and how these technologies affect real-world applications.

LSTM networks use a forget gate, an input gate, and an output gate to manage information flow: the forget gate decides how much of the existing memory to keep, the input gate decides how much new information to write, and the output gate decides how much of that memory to expose at each step. In comparison, traditional RNNs, though simpler, are like the rock bands of the past: great in their heyday but limited by the technology of their time. Businesses and developers often find themselves weighing the complexity and resources required by LSTM against the simplicity and speed of traditional RNNs. For tasks involving straightforward sequential data and shorter context requirements, RNNs might still hold their ground.
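The forget, input, and output gates can be sketched in a few lines. The example below is a single-unit LSTM step following the standard textbook formulation; the function `lstm_step` and the parameter dictionary layout are assumptions made for illustration, and production code would normally rely on a deep learning framework instead.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single-unit LSTM (illustrative sketch).

    params maps each name ("forget", "input", "output", "candidate")
    to a tuple (w_x, w_h, b) of scalar weights and bias.
    """
    def pre_activation(name):
        w_x, w_h, b = params[name]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre_activation("forget"))      # how much old memory to keep
    i = sigmoid(pre_activation("input"))       # how much new content to write
    o = sigmoid(pre_activation("output"))      # how much memory to expose
    g = math.tanh(pre_activation("candidate")) # proposed new content

    c = f * c_prev + i * g                     # updated cell state
    h = o * math.tanh(c)                       # updated hidden state
    return h, c

# Hypothetical weights: forget gate held open, input gate held shut,
# so the cell state passes through the step almost unchanged.
params = {
    "forget": (0.0, 0.0, 100.0),
    "input": (0.0, 0.0, -100.0),
    "output": (0.0, 0.0, 0.0),
    "candidate": (1.0, 1.0, 0.0),
}
h, c = lstm_step(x=1.0, h_prev=0.2, c_prev=0.7, params=params)
print(h, c)
```

The cell state update is additive (`f * c_prev + i * g`) rather than a repeated squashing of the whole state, which is what lets information and gradients survive across many steps when the forget gate stays close to 1.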

H2: Advantages of LSTM over Traditional RNN

While pondering the “LSTM versus traditional RNN” decision, one can’t ignore the evidence from published research and case studies. In tasks like language modeling and video classification, LSTM models have generally outperformed vanilla RNNs, particularly on data with long-term dependencies. Because they mitigate the vanishing gradient problem, LSTMs tend to train more stably on long sequences and reach better accuracy, although each training step costs more compute than a vanilla RNN step. This gives LSTMs a strong selling point, making them an attractive choice for industries looking for robust AI solutions.

The narrative of RNNs being left in the historical dust might be overdone but isn’t entirely unwarranted. However, when time and resources in training are limited, and tasks don’t demand extensive context, traditional RNNs can still perform respectably well. This is why the “LSTM versus traditional RNN” conversation still echoes in boardrooms and tech seminars, with considerations deeply rooted in project requirements. For every dramatic change attributed to technological advances, there’s always a strategic analysis involved that could swing the decision either way.
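The resource trade-off can be estimated with simple arithmetic. The sketch below counts trainable parameters for a single layer, assuming one bias vector per weight block and no extras such as peephole connections; the function names are hypothetical, and frameworks may report slightly different totals (for example, when they keep two bias vectors per block).

```python
def rnn_param_count(input_size, hidden_size):
    # Input weights (hidden x input) + recurrent weights (hidden x hidden)
    # + one bias vector of length hidden_size.
    return hidden_size * (input_size + hidden_size) + hidden_size

def lstm_param_count(input_size, hidden_size):
    # Four blocks of the same shape: forget, input, and output gates,
    # plus the candidate cell update.
    return 4 * rnn_param_count(input_size, hidden_size)

print(rnn_param_count(128, 256))   # vanilla RNN layer
print(lstm_param_count(128, 256))  # LSTM layer of the same size
```

Under these assumptions an LSTM layer carries four times the parameters of a vanilla RNN layer of the same width, which is a useful rule of thumb when memory or training time is tight.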

Attention now turns to the implementations and case studies that showcase where each model shines. Imagine a world where translation apps understand nuances in dialect or where AI-driven customer service recognizes emotional undertones. These are the kinds of advanced capabilities attributed to LSTM applications. For stakeholders, researchers, and tech enthusiasts, this battle is much more than a choice: it’s a quest to unlock the true potential of AI.

In the next sections of our discussion, we will delve even deeper into specific use cases, exploring the impact of “LSTM versus Traditional RNN” in various sectors. We’ll share insights from field experts, data-backed testimonials, and real-world analogies that hopefully provide clarity on why one might be chosen over the other. Whether you’re a tech-savvy startup or a large organization navigating the AI landscape, understanding this dichotomy is crucial for future-ready solutions.

In conclusion, as AI continues its transformative journey, the importance of selecting the right neural network architecture becomes more critical. The debate surrounding “LSTM versus Traditional RNN” isn’t merely academic but reflects broader technological, strategic, and economic contexts that shape our digital future.