Hey there, fellow netizens! Ever found yourself pondering the wild world of AI and the military? Yep, it's as sci-fi as it sounds! But once you peel back the layers, there's a lot of real-world substance behind the question of accountability in AI military actions. So, grab your coffee and let's dive into this intriguing topic where robots and responsibility collide.

Peering into the Ethics of AI Warfare

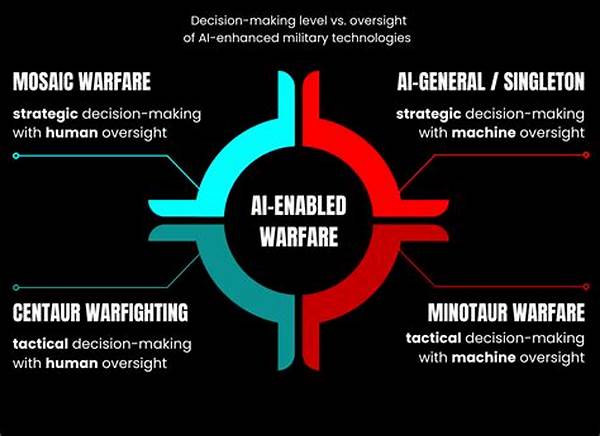

So, what’s the big deal with AI in military settings? Let’s break it down. AI is like the tech superhero, stepping into situations where human capabilities hit a wall. However, this superhero needs a manual labeled “Accountability in AI Military Actions.” When algorithms decide who’s friend or foe, who’s keeping them in check? This is where ethical questions storm the scene. Who’s responsible when AI makes a blunder on the battlefield?

The thing is, AI doesn't feel guilt or responsibility; only humans can. But in AI military deployments, accountability becomes a hot potato nobody wants to hold. We're talking about life-and-death decisions, folks! It's a unique conundrum when a cold algorithm determines military moves. The issue boils down to trust and oversight. Clear lines of accountability must be established so that blame doesn't vanish into thin air when things go south.

Military tech might be shooting for the stars by integrating AI, but the ethical nitty-gritty of who's accountable keeps crashing the party. Imagine a robot drone making split-second decisions: who's responsible when it all goes haywire? Clear rules and regulations on accountability in AI military actions are essential. Otherwise, we risk opening a Pandora's box of futuristic dilemmas.

Tangible Steps Towards Accountability

1. Clear Guidelines: Establishing robust guidelines is critical. This ensures accountability in AI military actions isn't an afterthought but a core part of operations.

2. Human Oversight: Even the smartest AI systems need human supervision. This serves as a reality check for accountability in AI military actions.

3. Tech Transparency: Opening the black box of AI systems demystifies decision-making, making it easier to trace how a decision was reached and who is accountable for it.

4. Training and Education: Personnel who understand AI can spot discrepancies and uphold accountability in AI military actions.

5. Public Policy: Governments must play catch-up with tech developments to ensure robust policies for accountability in AI military actions.

Challenges of Enforcing Accountability

Navigating accountability in AI military actions is like solving a complex puzzle. There's the tech component: ensuring AI systems are reliable and accurate. Even more complex is establishing who owns the mistakes made by machines. It's not just about coding the perfect algorithm; it's also about ensuring these systems comply with international humanitarian law.

Beyond the tech challenges lie societal concerns. Public perception plays a massive role. If the public doesn't trust AI at war, upholding accountability is a tough sell. Building that trust demands open dialogue about AI's role in military actions. And let's not forget international law, which is struggling to keep pace with rapid AI advancements.

How do we address these overwhelming challenges? It begins with incorporating accountability from the ground up. Instead of retrofitting solutions post-implementation, accountability must be baked into AI systems’ design and deployment. Robust frameworks and clear accountability in AI military actions can bridge the gap between innovation and responsibility.

The Human Factor in AI Accountability

1. Policymakers' Role: Lawmakers must act fast to update laws. New tech demands new accountability frameworks for AI military actions.

2. Military Training: Comprehensive training helps military staff grasp the complexities of AI and accountability in military actions.

3. Cultural Shift: The military's culture should evolve to embrace both AI innovation and the importance of accountability in its actions.

4. International Cooperation: Because AI is a global phenomenon, accountability in AI military actions demands international collaboration on ethical guidelines.

5. Learning from Mistakes: Analyzing incidents where accountability in AI military actions faltered can teach invaluable lessons.

6. Public Engagement: Involving the public in discussions brings diverse perspectives to accountability in AI military actions.

7. Ethical AI Design: Designing AI systems with ethical decision-making frameworks promotes a higher level of accountability in military actions.

8. Tech Companies’ Responsibility: Developers must shoulder part of the accountability in AI military actions through responsible innovation.

9. Decentralized Decision-making: Having a distributed decision-making process in military operations can enhance accountability in AI actions.

10. Future-proofing: Planning for future advancements can ensure accountability principles are enduring and adaptable.

Moving Forward with AI and Accountability

Steering the ship of military AI towards accountability is like navigating stormy seas. You've got to keep your eyes on the horizon but always be ready for uncharted waters. The journey to ensure accountability in AI military actions is laden with technical, ethical, and legal challenges. To stay on course, clear guidelines and protocols are vital.

The conversation about accountability doesn't stop with the military. It's a collective issue that touches every facet of society. It falls to tech developers, policymakers, and citizens to lock arms and ensure the ethical deployment of AI in military scenarios. That way, when a flying bot intervenes on a battlefield, the protocols of accountability in AI military actions hold strong.

A future where military AI operations are seamless and accountable isn’t just desirable—it’s essential. Treading these waters with care means prioritizing accountability, ensuring all parties involved are aware and prepared for the consequences. This is not just about implementing AI but about enabling technology to harmonize with human ethics and responsibilities.

The Broader Implications of AI Accountability

Ultimately, the broader implications of accountability in AI military actions spill into everyday life too. As more industries adopt AI, the military provides a blueprint for other sectors. If AI accountability isn’t prioritized, even benign tech can morph into a liability. The stakes are high everywhere, so we need a universal approach.

Remember, it’s about connecting the dots—from boardrooms to battlefields. The accountability culture nurtured in the military can ripple across other sectors, fostering trust and safety in an increasingly digital world. Ensuring accountable AI isn’t just a military mandate; it should be everyone’s priority.

The intricacies of AI accountability weave through our lives like never before. Military applications teach vital lessons in understanding and cooperation, calling for a more standardized practice of accountability everywhere AI operates. That’s the fine print—the decisions we make today shape the AI-infused world of tomorrow. Let’s make the right ones.