
Article: Transformer Architecture in Language Processing

Introduction

H1: Transformer Architecture in Language Processing

Imagine a world where language processing is as effortless as slicing through butter. Thanks to the transformer architecture, that once fantastical notion is now close to reality. So hold onto your hats: we’re about to take a thrilling journey through how transformers process language, a tale filled with intrigue, genius, and just a sprinkle of humor. Have you ever wondered how your phone autocompletes a text message with eerie accuracy? Or how your favorite translation app renders a foreign phrase in a fraction of a second? Much of the answer, my friends, lies in the realm of transformers. Introduced in the 2017 paper “Attention Is All You Need” by Vaswani et al., the transformer architecture quickly became the ‘belle of the ball’ in natural language processing (NLP).

Our story kicks off with self-attention, a mechanism that, like a vigilant guardian, decides which parts of the input each word should focus on. This innovation marks the shift from processing text one token at a time to processing all tokens in parallel, which dramatically speeds up the training of language models. The plot thickens as we delve into its impact on machine translation, sentiment analysis, and text generation. Layer by layer, we’ll unravel how transformers outperformed their predecessors, recurrent neural networks (RNNs), freeing us from slow step-by-step computation and opening doors to new possibilities. Buckle up for a blend of statistics, anecdotes, and perhaps a chuckle or two, all in pursuit of explaining how transformers process language. Get ready for not just an educational experience but an action-packed narrative where technology meets storytelling. Are you as excited as we are? Let’s dive right in!

Body

H2: The Mechanism Behind the Transformer’s Success

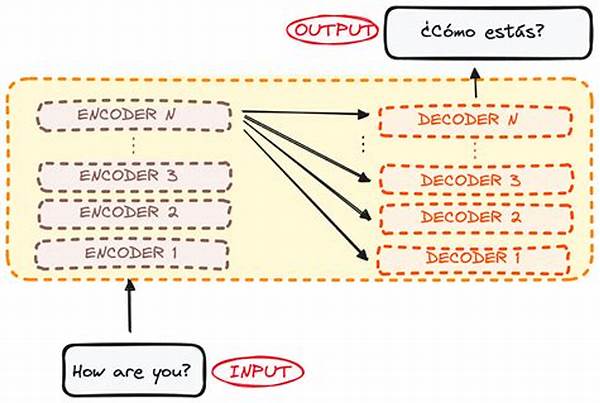

The secret sauce of the transformer architecture lies in its design. Recurrent models read a sentence one word at a time, and each step has to wait for the previous one. Transformers instead combine self-attention, which lets every word look at every other word directly, with feed-forward networks applied to each position independently. Because no position has to wait for another, transformers can process an entire sentence at once, a feat that has revolutionized tasks like translation and text summarization.
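
To make the parallelism concrete, here is a minimal pure-Python sketch under toy assumptions: arbitrary scalar weights, the raw word vectors reused as queries, keys, and values (real transformers learn separate projections), and no residual connections, layer normalization, or multiple heads. The function names (`simple_rnn`, `attend`, `toy_feed_forward`, `transformer_layer`) are illustrative, not from any library. The RNN loop cannot compute step t until step t-1 is done, whereas each transformer position depends only on the input, so the order in which positions are computed makes no difference.

```python
import math


def simple_rnn(xs, w=0.5, u=1.0):
    """Toy scalar RNN, h_t = tanh(w * h_{t-1} + u * x_t): each step needs the previous one."""
    h = 0.0
    states = []
    for x in xs:  # strictly sequential: step t cannot start before step t-1 finishes
        h = math.tanh(w * h + u * x)
        states.append(h)
    return states


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(xs, i):
    """Self-attention output for position i (raw vectors used as queries, keys and values)."""
    d = len(xs[0])
    scores = [sum(a * b for a, b in zip(xs[i], xj)) / math.sqrt(d) for xj in xs]
    weights = softmax(scores)
    return [sum(w * xj[k] for w, xj in zip(weights, xs)) for k in range(d)]


def toy_feed_forward(v):
    """Hypothetical position-wise feed-forward step: a ReLU layer then a linear layer,
    with made-up scalar weights, applied to one position's vector on its own."""
    hidden = [max(0.0, 1.5 * a - 0.2) for a in v]
    return [0.8 * h + 0.1 for h in hidden]


def transformer_layer(xs, order):
    """Each position reads only the input xs, never another position's output,
    so positions can be computed in any order, or all at once on parallel hardware."""
    out = [None] * len(xs)
    for i in order:
        out[i] = toy_feed_forward(attend(xs, i))
    return out


xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
forward = transformer_layer(xs, range(len(xs)))
backward = transformer_layer(xs, reversed(range(len(xs))))
print(forward == backward)  # True: processing order does not matter
print(simple_rnn([1.0, 0.0, 1.0]))
```

The loop in `transformer_layer` is sequential only for readability; because no iteration reads another iteration's result, a real implementation computes all positions as one batched matrix operation.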

Moreover, transformers are unusually good at capturing long-range dependencies. Recurrent models had to pass information from word to word through a chain of hidden states, so details from early in a long sentence often faded before they were needed. The transformer’s self-attention mechanism elegantly sidesteps this issue by connecting any two positions directly within a single layer, which helps the model stay clear and coherent even in complex passages.
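
The difference in how far information has to travel can be sketched numerically. Under a toy assumption (a scalar RNN with recurrent weight `w = 0.5` and constant made-up inputs; both helper names below are hypothetical), the chain rule gives the sensitivity of the final hidden state to the first input as a product of one factor per step, so it shrinks as the sentence grows. A single attention weight, by contrast, comes from a dot product between query and key, so it is the same whether the matching word sits at the start of the sentence or right next to the query. Positional encodings, which real transformers add, are left out of this sketch.

```python
import math


def rnn_sensitivity(xs, w=0.5):
    """d h_T / d x_1 for the toy RNN h_t = tanh(w * h_{t-1} + x_t) with h_0 = 0.
    Chain rule: (1 - h_1^2) * product over t = 2..T of w * (1 - h_t^2)."""
    hs = []
    h = 0.0
    for x in xs:
        h = math.tanh(w * h + x)
        hs.append(h)
    grad = 1.0 - hs[0] ** 2
    for h_t in hs[1:]:
        grad *= w * (1.0 - h_t ** 2)  # each extra step multiplies in a factor of at most w
    return grad


def attention_weight_on(keys, query, target):
    """Softmax weight that `query` assigns to keys[target] in one attention step.
    Scores are scaled dot products, so they depend on content, not distance."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    return exps[target] / math.fsum(exps)  # fsum: correctly rounded, order-independent sum


# RNN: the first word's influence on the last state fades with sentence length.
short_sens = rnn_sensitivity([0.3] * 5)
long_sens = rnn_sensitivity([0.3] * 50)
print(short_sens, long_sens)

# Attention: one "matching" key among 49 filler keys; move it from far to near.
filler, match, query = [0.0, 1.0], [1.0, 0.0], [3.0, 0.0]
far = [match] + [filler] * 49           # matching word at the very start
near = [filler] * 48 + [match, filler]  # matching word right before the query
print(attention_weight_on(far, query, 0), attention_weight_on(near, query, 48))
```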

The introduction of transformer models like BERT, GPT, and T5 has further pushed the boundaries of what’s possible in language processing. Because these models are pretrained on large amounts of unlabeled text, they can often be fine-tuned for a specific task with far less labeled data than earlier approaches needed, while producing significantly better results. These capabilities have cemented the transformer’s position as the cornerstone of modern NLP research and applications.

Discussion: The Transformative Impact of Transformers

H2: How Transformers Outshine Traditional Models

Let’s dive deep into the mechanics that put transformers leaps and bounds ahead of traditional architectures like RNNs and LSTMs. Picture a comedy show where each performer hears only a whispered summary of the previous skit: by the finale, the running gags from the opening act are long forgotten. That is roughly how an RNN processes text: sequentially, carrying context forward in a single fixed-size hidden state that struggles to hold onto distant words. Enter transformers, the robust architecture that treats each word not as a lone wolf but as part of a grand narrative. Through parallel processing and the self-attention mechanism, transformers assess and contextualize each word within the full sentence, akin to a skilled storyteller weaving a compelling plot.
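
A toy example shows what it means for self-attention to contextualize each word within the full sentence. The two-dimensional embeddings below are invented for illustration, and, as a simplifying assumption, they are used directly as queries, keys, and values instead of passing through learned projections. The same word, "bank", comes out of one self-attention step with a different vector depending on its neighbor.

```python
import math

# Hypothetical 2-d word embeddings, made up for this illustration.
EMBEDDINGS = {
    "river": [0.0, 2.0],
    "money": [2.0, 0.0],
    "bank": [1.0, 1.0],
}


def contextualize(words):
    """One self-attention step: each word's output is a softmax-weighted mix of all words."""
    vecs = [EMBEDDINGS[w] for w in words]
    d = len(vecs[0])
    outputs = []
    for q in vecs:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vecs]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        outputs.append([sum(wt * v[i] for wt, v in zip(weights, vecs)) for i in range(d)])
    return outputs


print(contextualize(["river", "bank"])[1])  # "bank" pulled toward "river": [0.5, 1.5]
print(contextualize(["money", "bank"])[1])  # "bank" pulled toward "money": [1.5, 0.5]
```

In a trained model the vectors have hundreds of dimensions and the weights are learned, but the principle is the same: a word's output representation depends on the words around it.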

A study by Stanford University highlighted how transformers could outperform RNNs in tasks such as text classification with significantly less data, paving the way for more efficient and effective NLP applications. And because a transformer’s computation parallelizes across the whole sequence, it makes far better use of modern hardware such as GPUs. The result? A drastic reduction in training times and stronger performance across a variety of NLP tasks. With transformers, machine translation is no longer a word-for-word substitution; it becomes a contextual interpretation that captures nuance more accurately than earlier systems could.

H3: The Ever-Expanding Applications of Transformers

Beyond traditional tasks, transformer-based language processing is paving the way for groundbreaking applications. From automated poetry to real-time customer service chatbots, these models are the unseen maestros working their magic behind the scenes. One particularly intriguing application is personalized AI companions, which turn static interactions into dynamic conversations that adapt and learn.

In this context, transformers are not just tools but enablers of new frontiers. They challenge the status quo, pushing industries toward innovations ranging from creative content generation to advanced machine translation. Their success is rooted in meeting the core requirement of language processing: understanding and generating human-like text with state-of-the-art precision. So when humor is infused into AI interactions, it isn’t a random insertion but a targeted, contextual reply, making the experience not just efficient but delightfully engaging.

Summary

Discussion

As we venture deeper into the story of transformer-based language processing, a colorful landscape of opportunities emerges. With its groundbreaking design, the transformer not only processes language with unparalleled efficacy but also redefines how we interact with technology. Imagine an AI system that doesn’t just respond but anticipates, learns, and evolves with each interaction. This is where transformers are headed; this is their promise.

The ultra-modern yet elegantly simple design of transformers makes a compelling case for businesses and developers who want to build intelligent language processing into their applications. Marketing campaigns become more interactive, educational tools more adaptive, and customer service more empathetic. It’s not just about generating artificial interactions but about crafting experiences that resonate on a human level.

The road ahead for transformer-based language processing is as exciting as it is uncertain, with endless potential for innovation. Whether we’re heading toward AI companions that understand humor like a seasoned friend or educational tools that respond to cognitive cues, one thing’s clear: transformers have unleashed the next wave of AI capabilities, inviting us to imagine, innovate, and transform the world around us.

Explanation Section

H2: Unveiling Transformer Architecture in Language Processing

Short Content Article

In the grand tapestry of natural language processing, the transformer architecture is the vibrant thread that holds everything together. This robust framework, introduced by the sharp minds at Google, has revolutionized AI’s approach to understanding and generating human language. Instead of reading text one token at a time, as earlier recurrent models did, transformers process every token in a sequence simultaneously, which is both more holistic and more efficient.

H2: The Intricacies of Self-Attention Mechanisms

Transformers owe their prowess largely to self-attention. For every word, this mechanism scores how relevant each word in the sentence (including itself) is, converts those scores into weights that sum to one, and builds the word’s new representation as a weighted blend of all of them, much like a skilled editor making sure every part of a story is balanced and cohesive. The result? A model that excels at tasks ranging from sentiment analysis to sophisticated machine translation. Models built on this mechanism have set new benchmarks for accuracy and fluency.
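
In the formulation of Vaswani et al., this weighting is scaled dot-product attention: Attention(Q, K, V) = softmax(Q Kᵀ / √d_k) V, where the queries Q, keys K, and values V are linear projections of the word vectors X. The sketch below is a minimal pure-Python version for a single attention head. The projection matrices and input vectors are made-up numbers standing in for learned weights, and helper names such as `self_attention` are illustrative, not a library API. Row i of the returned weight matrix shows how much word i attends to every word, and each row sums to one.

```python
import math


def matmul(a, b):
    """Multiply an n x m matrix by an m x p matrix (lists of lists)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def softmax_rows(m):
    """Row-wise numerically stable softmax."""
    out = []
    for row in m:
        mx = max(row)
        exps = [math.exp(v - mx) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    weights = softmax_rows(scores)  # weights[i][j]: how much word i attends to word j
    return matmul(weights, v), weights


# Three "words", each a made-up 4-d vector; projections map 4 -> 2 dimensions.
x = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 2.0, 0.0, 2.0],
     [1.0, 1.0, 1.0, 1.0]]
w_q = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
w_k = [[0.5, 0.0], [0.0, 0.5], [0.5, 0.0], [0.0, 0.5]]
w_v = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0]]

output, weights = self_attention(x, w_q, w_k, w_v)
for row in weights:
    print([round(p, 3) for p in row])
```

Dividing by √d_k keeps the dot products from growing with the vector size, which would otherwise push the softmax toward putting almost all weight on a single word.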

H3: Transformative Impacts Across Industries

The ripple effects of transformers resonate across industries. Marketing campaigns become more personalized, customer service chatbots approach near-human conversational quality, and language translation becomes far more fluent. These applications showcase the transformative potential of these models, which have become indispensable tools in the tech arsenal.

As transformers continue to evolve, embracing new computational techniques and expanding their capabilities, the horizon looks promising. Their speed, accuracy, and adaptability keep them at the forefront of innovation, opening new avenues in AI. Indeed, with transformer-based language processing, we are witnessing not just a technological advancement but a cultural shift in how machines understand and interact with us.