In today's digital world, demand for intelligent systems keeps climbing. Businesses, researchers, and developers are all tapping into neural networks to power everything from recommendation engines to disease detection systems. Yet, while neural networks promise significant breakthroughs, they can be complex and resource-intensive to train and run. This is where neural network optimization strategies come into play. These strategies are the unsung heroes that help our models perform accurately and operate efficiently, making AI technology practical for real-world applications.

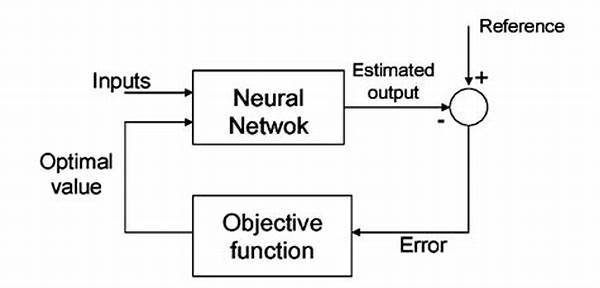

Imagine constructing a luxurious, high-performance sports car. Without the right tuning and adjustments, even the most sophisticated car might falter in a high-speed chase. Similarly, in the intricate world of machine learning, optimization strategies are crucial. They breathe life into raw neural architectures by refining parameters, reducing computational burdens, and enhancing model efficiency. But what does it mean to optimize a neural network? Essentially, it’s about finding the best configuration of weights and biases to minimize error without excessive computing resources. This pursuit represents a delicate balance between performance, speed, and accuracy.
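To make "minimizing error" concrete, here is a minimal sketch of a one-weight, one-bias model scored with mean squared error. The toy data and the names `predict` and `mse` are assumptions made up for illustration; the point is only that different weight and bias settings produce different error values, and optimization is the search for the setting with the lowest one.

```python
# Hypothetical toy data that follows y = 2x + 1 exactly.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]


def predict(w, b, x):
    """A one-weight, one-bias model: the smallest possible 'network'."""
    return w * x + b


def mse(w, b):
    """Mean squared error of the model over the toy data."""
    squared_errors = [(predict(w, b, x) - y) ** 2 for x, y in zip(xs, ys)]
    return sum(squared_errors) / len(squared_errors)


loss_bad = mse(0.0, 0.0)   # untrained starting point
loss_good = mse(2.0, 1.0)  # the configuration that generated the data

print(f"loss at w=0, b=0: {loss_bad}")
print(f"loss at w=2, b=1: {loss_good}")
```

A real network has millions of weights instead of two, but the objective has the same shape: a single number that falls as the configuration improves.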

Moreover, neural network optimization strategies tackle overfitting, a significant challenge in machine learning. By tuning hyperparameters and applying regularization techniques, models can generalize better to unseen data, making predictions more reliable in practice. Think of a reliable GPS: effective optimization keeps pointing you in the right direction without unnecessary detours. Whether it's adjusting learning rates, experimenting with batch normalization, or using adaptive optimizers like Adam and RMSprop, each strategy offers its own benefits and its own insight into how a network learns.
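As a rough illustration of what an adaptive optimizer such as Adam does, the sketch below applies the standard Adam update rule to a single parameter. The function name `adam_minimize`, the toy objective `(x - 3)^2`, and the chosen learning rate are assumptions for illustration only; in practice you would use the optimizer shipped with your deep learning framework.

```python
import math


def adam_minimize(grad_fn, x0, lr=0.1, beta1=0.9, beta2=0.999,
                  eps=1e-8, steps=1000):
    """Minimize a one-parameter function with the Adam update rule."""
    x = x0
    m = 0.0  # running average of gradients (first moment)
    v = 0.0  # running average of squared gradients (second moment)
    history = [x]
    for t in range(1, steps + 1):
        g = grad_fn(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction compensates for m and v starting at zero.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        # The step is scaled by the gradient history, not just the raw gradient.
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
        history.append(x)
    return x, history


# Hypothetical objective: f(x) = (x - 3)^2, whose gradient is 2 * (x - 3).
final_x, history = adam_minimize(lambda x: 2 * (x - 3), x0=0.0)
print(f"first step moved x to {history[1]:.4f}")
print(f"final x: {final_x:.4f}")
```

Note how the very first step has a size close to the learning rate regardless of how large the gradient is. That normalization is one reason adaptive methods tend to be less sensitive to gradient scale than plain gradient descent.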

Yet perhaps the most fascinating aspect of these strategies is their dynamic nature. As technology and research progress, our optimization arsenals expand. From the newest architectures to time-proven techniques, the realm of optimization is in constant evolution. This vibrant field invites curious minds and innovative thinkers to explore, experiment, and expand the frontier of artificial intelligence.

The Science Behind Neural Network Optimization

To delve deeper into neural network optimization strategies, we need to look at both the science and the craft behind them. At the heart of neural network optimization lie optimization algorithms, hyperparameter settings, and learning rate adjustments. Each plays a pivotal role, much like the individual moves a seasoned chess player plots on the way to victory.

For instance, consider how gradient descent, an optimization algorithm, operates. It's akin to hiking down a mountain: at each step you move in the direction of steepest descent, seeking an efficient path to the base without unnecessary detours. Gradient descent also has siblings, such as Stochastic Gradient Descent (SGD), which estimates the slope from a small random sample of the data, and adaptive methods like Adam and RMSprop, which scale each step using a running history of gradients. These algorithms form the backbone of training for most neural networks.
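The downhill walk can be sketched in a few lines of plain Python. This is a minimal illustration, assuming a toy straight-line model and made-up data; the function name `fit_line` and its parameters are hypothetical. With `stochastic=False` it performs full-batch gradient descent, and with `stochastic=True` it uses one randomly chosen example per step, which is the simplest form of SGD.

```python
import random


def fit_line(xs, ys, lr=0.05, steps=5000, stochastic=False, seed=0):
    """Fit y = w * x + b by (stochastic) gradient descent on squared error."""
    rng = random.Random(seed)
    data = list(zip(xs, ys))
    w, b = 0.0, 0.0
    for _ in range(steps):
        # Full-batch descent uses every example; SGD samples a single one.
        batch = [rng.choice(data)] if stochastic else data
        grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
        grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
        # Step downhill: move against the gradient, scaled by the learning rate.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical toy data that follows y = 2x + 1 exactly.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]

w_gd, b_gd = fit_line(xs, ys)
w_sgd, b_sgd = fit_line(xs, ys, stochastic=True)
print(f"gradient descent: w={w_gd:.4f}, b={b_gd:.4f}")
print(f"SGD:              w={w_sgd:.4f}, b={b_sgd:.4f}")
```

Both variants should land near the weight and bias that generated the data. On real datasets the stochastic version trades a noisier path for much cheaper steps, which is why it and its descendants dominate in practice.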

Beyond algorithms, neural network optimization strategies also rely on hyperparameter tuning. This realm is where magic meets logic: model makers experiment with settings such as the learning rate, batch size, and number of layers, much as a baker adjusts oven temperature and timing. Get the recipe right and what comes out is a cake, or in this case a model, that dazzles with its accuracy.
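One of the simplest tuning recipes is a grid search: train the same model once per candidate setting and keep the setting with the lowest error. The sketch below does this for the learning rate alone, using a hypothetical toy model and a made-up candidate list. It scores on the training data for brevity; a real search would score on held-out validation data.

```python
def train_and_score(lr, steps=50):
    """Train a toy line-fitting model briefly and return its final error."""
    # Hypothetical toy data that follows y = 2x + 1 exactly.
    xs = [0.0, 1.0, 2.0, 3.0]
    ys = [1.0, 3.0, 5.0, 7.0]
    n = len(xs)
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / n


# Candidate learning rates to try (an arbitrary illustrative grid).
candidates = [0.001, 0.01, 0.1, 0.3]
scores = {lr: train_and_score(lr) for lr in candidates}
best_lr = min(scores, key=scores.get)

for lr, score in scores.items():
    print(f"lr={lr}: final error {score:.6g}")
print(f"best learning rate: {best_lr}")
```

The pattern in the output is typical: rates that are too small barely move in the allotted steps, a rate that is too large makes the error blow up, and the best choice sits in between.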

Understanding these elements allows you to craft more sophisticated networks. Much like the secret sauce in your grandma’s famous recipe, mastering optimization is a blend of tradition, experimentation, and a touch of digital flair. As new theories and applications arise, so does the demand for mastery in this domain. It encourages every enthusiast to unravel the potential of machine learning through meticulous optimization, one creative solution at a time.

Neural Network Optimization Strategies in Action

How are neural network optimization strategies applied in real-world scenarios? Picture social media applications recommending the perfect content, online shops predicting your next purchase, or healthcare systems mapping out personalized treatments. Each instance is imbued with carefully optimized models, enabling businesses to thrive and innovate.

Think of it as a ticket to an amusement park of neural networks, where every ride is a well-tuned model making waves in industries and research circles worldwide. It's not merely tech; it's a shift in how everyday problems get solved.

Below, explore a stellar lineup of neural network optimization strategies that keep our digital dreams running smoothly.

Pioneering Examples of Optimization Strategies

In the vibrant world of machine learning, neural network optimization strategies play a pivotal role in producing effective AI solutions. Let’s explore some illustrative examples that showcase their impact.

Understanding the Core of Optimization

Understanding neural network optimization strategies is crucial in today’s AI landscape. Models transform raw data into real-world solutions, paving the way for breakthroughs in multiple fields.

Therefore, delving into the science of optimization is more than a merely technical endeavor. It's a journey of exploration, innovation, and creativity. It connects scientists, developers, and businesses with a common goal: maximizing the potential of machine learning to propel society forward.

Harness your inner neural gymnast, and step into the arena of optimization. The world awaits your unique imprint – a realm where knowledge meets ingenuity, creating ripples that continually reshape the digital cosmos.